The internet is an ocean of information, but much of it is not easily accessible through an API: an API may expose only part of the data, or may not exist at all. That's where web scraping comes in.

What is web scraping?

Web scraping is the art of leveraging the power of automation to open the web and extract structured web data at scale. The data collected can then be used for countless applications, such as training machine learning algorithms, price monitoring, market research, lead generation, and more.

JavaScript and Python are two of the most popular and versatile programming languages. Both languages are at the forefront of innovation in web scraping, boasting a vast selection of frameworks and libraries that offer tools to overcome even the most complex scraping scenarios.

This article will analyze some of the latest web scraping libraries and frameworks available for each language and discuss the best scraping use cases for Python and JavaScript.

Why choose JavaScript for web scraping?

JavaScript is currently the most used programming language in the world. Its popularity is due primarily to its flexibility. JavaScript is used for web development, building web servers, game development, mobile apps, and, of course, web scraping.

JavaScript is rightfully referred to as the language of the web. Close to 97.8% of all websites use it as a client-side programming language. Not surprisingly, some of the most advanced web scraping and browser automation libraries are also written in JavaScript, making it even more attractive for those who want to extract data from the web.

Additionally, JavaScript boasts a large and vibrant community. There's plenty of information available online, so you can easily find help whenever you feel stuck on a project.

Running JavaScript on the server with Node.js

Node.js is an open-source JavaScript runtime, enabling JavaScript to be used on the server-side to build fast and scalable network applications.

Node.js is well known for the performance and speed it provides. Its efficiency comes from its single-threaded event loop and asynchronous nature: JavaScript code runs on the main thread, while input/output operations are handled in the background without blocking it.

On top of that, Node.js uses the V8 JavaScript engine, an open-source, high-performance JavaScript and WebAssembly engine written initially for Google Chrome. The V8 engine enables Node.js to compile JavaScript code into machine code at execution by implementing a JIT (Just-In-Time) compiler, significantly improving the execution speed.

Thanks to Node.js capabilities, the JavaScript ecosystem has a variety of highly efficient web scraping libraries such as Got, Cheerio, Puppeteer, and Playwright.

Why use Python for web scraping?

Python, like JavaScript, is an extremely versatile language. Python can be used for developing websites and software, task automation, data analysis, and data visualization. Its easy-to-learn syntax contributed greatly to Python's popularity among many non-programmers, such as accountants and scientists, for automating everyday tasks, organizing finances, and conducting research.

Python is the king of data processing. Data extracted from the web can be easily manipulated and cleaned using Python's Pandas library and visualized using Matplotlib. This makes web scraping a powerful skill in any Pythonista's toolbox.

Python is the dominant programming language in machine learning and data science. These fields benefit heavily from having access to large data sets to train algorithms and create prediction models. Consequently, Python boasts some of the most popular web scraping libraries and frameworks, such as BeautifulSoup, Selenium, Playwright, and Scrapy.

What is the difference between HTTP clients and HTML parsers?

HTTP clients are a central piece of web scraping. Almost every web scraping tool uses an HTTP client behind the scenes to query the website server you are trying to collect data from.

Parsing, on the other hand, means analyzing raw text and converting it into a structured format that a program can work with. For example, the browser parses HTML into a DOM tree.

Following this same logic, HTML parsing libraries such as Cheerio (JavaScript) and BeautifulSoup (Python) parse data directly from web pages so you can use it in your projects and applications.
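To make this division of labor concrete, here is a minimal sketch that uses only Python's standard library rather than any of the libraries covered in this article. The `fetch` helper, the `LinkCollector` class, and the sample markup are hypothetical names and data chosen for illustration: the first function plays the role of the HTTP client, and the class plays the role of the HTML parser.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url: str) -> str:
    """HTTP client step: request a page and return its raw HTML as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


class LinkCollector(HTMLParser):
    """Parser step: walk the HTML and collect the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html: str) -> list[str]:
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


# Made-up markup standing in for a downloaded page
sample_html = '<ul><li><a href="/first">First</a></li><li><a href="/second">Second</a></li></ul>'
print(extract_links(sample_html))
```

In a real scraper, you would pass the output of `fetch(url)` to `extract_links` instead of the hard-coded sample.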

Got and Got Scraping - HTTP client for JavaScript

Got Scraping is a modern package extension of the Got HTTP client. Its primary purpose is to send browser-like requests to the server. This feature enables the scraping bot to blend in with the website traffic, making it less likely to be detected and blocked.

It addresses common drawbacks in modern web scraping by offering built-in tools to avoid website anti-scraping protections.
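As a rough illustration of what a "browser-like" request means, the sketch below builds a request with Python's standard `urllib` and attaches headers that resemble a desktop browser's. The header values and the `build_browser_like_request` helper are assumptions made for this example, not Got Scraping's actual implementation, and headers are only one of the signals a website can inspect.

```python
from urllib.request import Request

# Illustrative header values; real browsers send longer, version-specific sets
BROWSER_LIKE_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_browser_like_request(url: str) -> Request:
    """Return a request whose headers resemble a desktop browser's."""
    return Request(url, headers=BROWSER_LIKE_HEADERS)


request = build_browser_like_request("https://news.ycombinator.com/")

# urllib stores header names capitalized as "User-agent"; when the request is
# sent, this value is used instead of the default "Python-urllib/3.x" one
print(request.get_header("User-agent"))
```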

For example, the code below uses Got Scraping to retrieve the Hacker News website HTML body and print it in the terminal.

const { gotScraping } = require('got-scraping');

gotScraping
    .get('https://news.ycombinator.com/')
    .then(({ body }) => console.log(body));

Requests - HTTP client for Python

Requests is an HTTP Python library. The goal of the project is to make HTTP requests simpler and more human-friendly, hence the title "Requests, HTTP for humans."

Requests is a widely popular Python library, to the point that it has even been proposed that Requests be distributed with Python by default.

To highlight the differences between Got Scraping and Requests, let's retrieve the Hacker News HTML body and print it in the terminal, but now using Requests.

import requests

response = requests.get('https://news.ycombinator.com/')

# response.text is the decoded HTML; response.content would print raw bytes
print(response.text)

Cheerio - HTML and XML parser for JavaScript

Cheerio is a fast and flexible implementation of core jQuery designed to run on the server-side, working with raw HTML data.

To exemplify how Cheerio parses HTML, let's use it together with Got Scraping to extract data from Hacker News.

import { gotScraping } from 'got-scraping';
// The namespace import works across Cheerio versions, including those without a default export
import * as cheerio from 'cheerio';

const response = await gotScraping('https://news.ycombinator.com/');
const html = response.body;

// Use Cheerio to parse the HTML
const $ = cheerio.load(html);

// Select all the elements with the class name "athing"
const entries = $('.athing');

// Loop through the entries
for (const entry of entries) {
    const element = $(entry);
    // Write each element's text to the terminal
    console.log(element.text());
}

After the script finishes running, you should see the most recent stories printed in your terminal.

Need help understanding the code? Find out more about querying data with Cheerio and CSS selectors on Apify's web scraping academy. 👨‍💻

However, Cheerio does have some limitations. For instance, it does not interpret results as a browser does. Thus, it is not able to do things such as:

- Execute JavaScript

- Produce visual rendering

- Apply CSS or load external resources

If your use case requires any of these functionalities, you will need browser automation software like Puppeteer or Playwright, which we will explore further in this article.
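The first limitation is easy to reproduce with any static parser. The sketch below uses Python's standard `html.parser` as a stand-in for Cheerio, and the page markup and `ListItemCounter` class are made up for the example. The list in the page is empty in the raw HTML and would only be filled in by the script when a browser runs it, so the parser finds no list items.

```python
from html.parser import HTMLParser

# Made-up page: the list is empty in the markup and filled in by a script
PAGE = """
<ul id="products"></ul>
<script>
  document.getElementById("products").innerHTML = "<li>Towel</li>";
</script>
"""


class ListItemCounter(HTMLParser):
    """Count <li> elements that exist as real tags in the markup."""

    def __init__(self) -> None:
        super().__init__()
        self.items = 0

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.items += 1


counter = ListItemCounter()
counter.feed(PAGE)

# The script body is treated as plain text, so no <li> tag is ever seen
print(counter.items)
```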

Cheerio Scraper is a ready-made solution for crawling websites using plain HTTP requests. It retrieves the HTML pages, parses them using the Cheerio Node.js library and lets you extract any data from them. And Cheerio web scraping is really fast.

Beautiful Soup - HTML and XML parser for Python

Beautiful Soup is a Python library used to extract HTML and XML elements from a web page with just a few lines of code, making it the right choice to tackle simple tasks with speed. It is also relatively easy to set up, learn, and master, which makes it the ideal web scraping tool for beginners.

To exemplify BeautifulSoup's features and compare its syntax and approach to its Node.js counterpart, Cheerio, let's scrape Hacker News and print to the terminal the most upvoted article.

from bs4 import BeautifulSoup
import requests

response = requests.get("https://news.ycombinator.com/news")
yc_web_page = response.text

soup = BeautifulSoup(yc_web_page, "html.parser")

articles = []

# Each story is a <tr class="athing"> row; its score sits in the row that follows.
# The "titleline" selector reflects the Hacker News markup at the time of writing.
for row in soup.select("tr.athing"):
    title_tag = row.select_one(".titleline > a")
    subtext_row = row.find_next_sibling("tr")
    score_tag = subtext_row.select_one(".score") if subtext_row else None
    # Job posts have no score, so skip them to keep titles and scores aligned
    if title_tag is None or score_tag is None:
        continue
    articles.append({
        "text": title_tag.getText(),
        "link": title_tag.get("href"),
        "upvotes": int(score_tag.getText().split()[0]),
    })

most_upvoted = max(articles, key=lambda article: article["upvotes"])

print(most_upvoted["text"])
print(most_upvoted["link"])

BeautifulSoup offers an elegant and efficient way of scraping websites using Python. However, there are a few significant drawbacks to Beautiful Soup, such as:

- Slow web scraper. The library's limitations become apparent when scraping large datasets. Its performance can be improved with multithreading, but that adds another layer of complexity to the scraper, which might be demotivating for some users. In this regard, Scrapy is noticeably faster than BeautifulSoup due to its ability to use asynchronous system calls.

- Unable to scrape dynamic web pages. Beautiful Soup is unable to mimic a web client and, therefore, cannot scrape content that JavaScript generates dynamically.
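To give a sense of the extra complexity that multithreading brings, here is a minimal sketch built on Python's standard `concurrent.futures`. To stay self-contained, it parses with the standard `html.parser` instead of Beautiful Soup, and the `fetch` function is a hypothetical stand-in that returns canned HTML where a real scraper would make a blocking HTTP call.

```python
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Capture the text inside the page's <title> tag."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch(url: str) -> str:
    """Hypothetical stand-in for a blocking call such as requests.get(url).text."""
    return f"<html><head><title>Page for {url}</title></head></html>"


def scrape_title(url: str) -> str:
    parser = TitleParser()
    parser.feed(fetch(url))
    return parser.title


urls = [f"https://example.com/page/{n}" for n in range(1, 6)]

# Each worker thread fetches and parses one page; map() keeps the input order
with ThreadPoolExecutor(max_workers=5) as pool:
    titles = list(pool.map(scrape_title, urls))

print(titles)
```

Threads help here because most of a scraper's time is spent waiting on the network, but you now have to think about worker counts, error handling per task, and rate limits.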

Browser automation tools

Browsers are a way for people to access and interact with the information available on the web. Nevertheless, a human is not always a requirement for this interaction to happen. Browser automation tools can mimic human actions and automate a web browser to perform repetitive and error-prone tasks.

The role of browser automation tools in web scraping is intimately related to their ability to render JavaScript code and interact with dynamic websites.

As previously discussed, one of the main limitations of HTML parsers is that they cannot scrape dynamically generated content. However, by combining the power of web automation software with HTML parsers, we can go beyond simple automation and render JavaScript to extract data from complex web pages.

Selenium

Selenium is primarily a browser automation tool developed for web testing, which is also found in off-label use as a web scraper. It uses the WebDriver protocol to control a browser, headless or not, and perform actions like clicking buttons, filling out forms, and scrolling.

Selenium is popular in the Python community, but it is also fully implemented and supported in JavaScript (Node.js), Ruby, Java, Kotlin, and C#.

Because of its ability to render JavaScript on a web page, Selenium can help scrape dynamic websites. This is a handy feature, considering that many modern websites, especially in e-commerce, use JavaScript to load their content dynamically.

As an example, let's go ahead and scrape Amazon to get information about Douglas Adams' book, The Hitchhiker's Guide to the Galaxy. The script below will initialize a browser instance controlled by Selenium and render the JavaScript on Amazon's website so we can extract data from it.

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from webdriver_manager.chrome import ChromeDriverManager

# Insert the website URL that we want to scrape
url = "https://www.amazon.com/Hitchhikers-Guide-Galaxy-Douglas-Adams-ebook/dp/B000XUBC2C/ref=tmm_kin_swatch_0" \
      "?_encoding=UTF8&qid=1642536225&sr=8-1"

# Selenium 4 expects the driver path to be wrapped in a Service object
driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
driver.get(url)

# Create a dictionary with the scraped data
book = {
    "book_title": driver.find_element(By.ID, 'productTitle').text,
    "author": driver.find_element(By.CSS_SELECTOR, '.a-link-normal.contributorNameID').text,
    "edition": driver.find_element(By.ID, 'productSubtitle').text,
    "price": driver.find_element(By.CSS_SELECTOR, '.a-size-base.a-color-price.a-color-price').text,
}

# Print the dictionary contents to the console
print(book)

# Close the browser once we are done
driver.quit()

Despite its advantages, Selenium was not designed to be a web scraper and, because of that, has some noticeable limitations:

- User-friendliness. Selenium has a steeper learning curve when compared to Beautiful Soup, requiring a more complex setup and experience to master.

- Slow. Scraping vast amounts of data in Selenium can be a slow and inefficient process, making it unsuitable for large-scale tasks.

Puppeteer - JavaScript browser automation tool

Developed and maintained by Google, Puppeteer is a Node.js library that provides a high-level API to manipulate a headless Chrome programmatically, which can also be configured to use a full, non-headless browser.

Puppeteer's ability to emulate a real browser allows it to render JavaScript and overcome many of the limitations of the tools mentioned above. Some of the examples of its features are:

- Crawl a Single Page Application and generate pre-rendered content.

- Take screenshots and generate PDFs of pages.

- Automate manual user interactions, such as UI testing, form submissions, and keyboard inputs.

To demonstrate some of Puppeteer's capabilities, let's go to Amazon and scrape The Hitchhiker's Guide to the Galaxy product page and take a screenshot of the website.

import puppeteer from "puppeteer";

(async () => {
    const browser = await puppeteer.launch();
    const page = await browser.newPage();

    await page.goto(
        "https://www.amazon.com/Hitchhikers-Guide-Galaxy-Douglas-Adams-ebook/dp/B000XUBC2C/ref=tmm_kin_swatch_0?_encoding=UTF8&qid=1642536225&sr=8-1"
    );

    const book = await page.evaluate(() => {
        return {
            title: document.querySelector("#productTitle").innerText,
            author: document.querySelector(".a-link-normal.contributorNameID")
                .innerText,
            edition: document.querySelector("#productSubtitle").innerText,
            price: document.querySelector(
                ".a-size-base.a-color-price.a-color-price"
            ).innerText,
        };
    });

    await page.screenshot({ path: "book.png" });

    console.log(book);

    await browser.close();
})();

By the end of the script's run, we will see an object containing the book's title, author, edition, and price printed to the console.

Puppeteer can mimic most human interactions in a browser. The ability to control a browser programmatically greatly expands the realm of possibility of what is achievable using this library. Besides web scraping, Puppeteer can be used for workflow automation and automated testing.

Playwright - JavaScript and Python browser automation tool

Playwright is a Node.js library developed and maintained by Microsoft.

A significant part of Playwright's developer team is composed of the same engineers that worked on Puppeteer. Because of that, both libraries have many similarities, lowering the learning curve and reducing the hassle of migrating from one library to another.

One of the major differences is that Playwright offers cross-browser support, being able to drive Chromium, WebKit (Safari's browser engine), and Firefox, while Puppeteer focuses mainly on Chromium-based browsers.

Additionally, you can use Playwright's API in TypeScript, JavaScript, Python, .NET, and Java.

To highlight some of Playwright's core features as well as its similarities with Puppeteer and differences with Selenium, let's go back to Amazon's website and once again collect information from Douglas Adams' The Hitchhiker's Guide to the Galaxy.

Playwright JavaScript version:

const playwright = require('playwright');

(async () => {
    const browser = await playwright.webkit.launch();
    const page = await browser.newPage();

    await page.goto('https://www.amazon.com/Hitchhikers-Guide-Galaxy-Douglas-Adams-ebook/dp/B000XUBC2C/ref=tmm_kin_swatch_0?_encoding=UTF8&qid=1642536225&sr=8-1');

    const book = {
        bookTitle: await (await page.$('#productTitle')).innerText(),
        author: await (await page.$('.a-link-normal.contributorNameID')).innerText(),
        edition: await (await page.$('#productSubtitle')).innerText(),
        price: await (await page.$('.a-size-base.a-color-price.a-color-price')).innerText(),
    };

    console.log(book);

    await page.screenshot({ path: 'book.png' });

    await browser.close();
})();

Playwright Python version:

from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    browser = p.webkit.launch()
    page = browser.new_page()

    page.goto("https://www.amazon.com/Hitchhikers-Guide-Galaxy-Douglas-Adams-ebook/dp/B000XUBC2C/ref=tmm_kin_swatch_0"
              "?_encoding=UTF8&qid=1642536225&sr=8-1")

    # Create a dictionary with the scraped data
    book = {
        "book_title": page.query_selector('#productTitle').inner_text(),
        "author": page.query_selector('.a-link-normal.contributorNameID').inner_text(),
        "edition": page.query_selector('#productSubtitle').inner_text(),
        "price": page.query_selector('.a-size-base.a-color-price.a-color-price').inner_text(),
    }

    print(book)

    page.screenshot(path="book.png")

    browser.close()

Despite being a relatively new library, Playwright is rapidly gaining adoption in the developer community. Because of its modern features, cross-browser and multi-language support, and ease of use, it can be said that Playwright has already surpassed its older brother Puppeteer.

Full-featured web scraping libraries

Crawlee

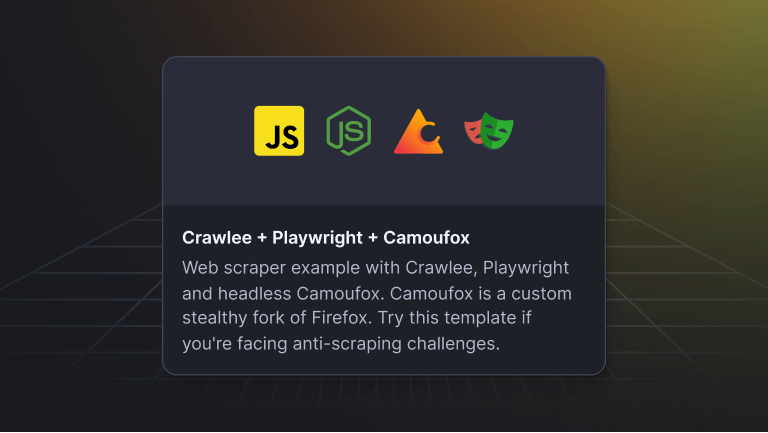

Crawlee is an open-source Node.js web scraping and automation library. It excels at bypassing modern website anti-bot defenses and saving development time when building crawlers. It offers a complete collection of tools for every automation and scraping use case, such as CheerioCrawler, PuppeteerCrawler, and PlaywrightCrawler.

Crawlee not only shares many of the features of the previously mentioned tools but builds on top of them to enhance performance and seamlessly integrate storage, export of results, and proxy rotation. It works on any system and can be used as a standalone or serverless microservice on the Apify platform.

Here are some of the key features that make Crawlee stand out in the web scraping and automation scene:

🛠 Features

- Single interface for HTTP and headless browser crawling

- Automatic scaling with available system resources

- Integrated proxy rotation and session management

- Lifecycles customizable with hooks

- CLI to bootstrap your projects

- Configurable routing, error handling, and retries

- Dockerfiles ready to deploy

👾 HTTP crawling

- Zero configuration HTTP2 support, even for proxies

- Automatic generation of browser-like headers

- Replication of browser TLS fingerprints

- Integrated fast HTML parsers: Cheerio and JSDOM

💻 Real browser crawling

- JavaScript rendering and screenshots

- Headless and headful support

- Zero-config generation of human-like fingerprints

- Automatic browser management

- Use Playwright and Puppeteer with the same interface

- Cross-browser support (Chrome, Firefox, WebKit, etc.)

The example below demonstrates how to use CheerioCrawler in combination with RequestQueue to recursively scrape the Hacker News website.

The crawler starts with a single URL, finds links to the following pages, enqueues them, and continues until no more desired links are available. The results are then stored on your disk.

// main.js
import { CheerioCrawler } from "crawlee";
import { router } from "./routes.js";

const startUrls = ["https://news.ycombinator.com/"];

const crawler = new CheerioCrawler({
    requestHandler: router,
});

await crawler.run(startUrls);

// routes.js
import { Dataset, createCheerioRouter } from "crawlee";

export const router = createCheerioRouter();

router.addDefaultHandler(async ({ enqueueLinks, log, $ }) => {
    log.info(`enqueueing new URLs`);

    // Enqueue only the pagination links that match the glob pattern
    await enqueueLinks({
        globs: ["https://news.ycombinator.com/?p=*"],
    });

    // Extract the URL, title, and rank of every post on the page
    const data = $(".athing")
        .map((index, post) => {
            return {
                postUrl: $(post).find(".title a").attr("href"),
                title: $(post).find(".title a").text(),
                rank: $(post).find(".rank").text(),
            };
        })
        .toArray();

    await Dataset.pushData({
        data,
    });
});
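The enqueue-and-continue loop described before the example can be sketched independently of any library. The Python snippet below is a simplified illustration, not Crawlee's implementation: `SITE` is a made-up in-memory link graph standing in for real pages, and `crawl` and `should_enqueue` are hypothetical names.

```python
from collections import deque

# Made-up site: each page maps to the links found on it
SITE = {
    "/": ["/?p=2", "/about"],
    "/?p=2": ["/?p=3", "/"],
    "/?p=3": [],
    "/about": [],
}


def crawl(start: str, should_enqueue) -> list[str]:
    """Visit pages breadth-first, enqueueing each matching link only once."""
    queue = deque([start])
    seen = {start}
    visited = []
    while queue:
        url = queue.popleft()
        visited.append(url)  # a real crawler would fetch and extract data here
        for link in SITE.get(url, []):
            if link not in seen and should_enqueue(link):
                seen.add(link)
                queue.append(link)
    return visited


# Only follow pagination links, similar in spirit to the glob filter above
print(crawl("/", lambda link: link.startswith("/?p=")))
```

The `seen` set is what stops the crawler from looping forever when pages link back to each other, and the loop ends on its own once the queue runs dry.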

If you want to dive deeper into Crawlee, check out this video tutorial, where we build an Amazon scraper using Crawlee and the extra learning resources listed below. 📚

🤖 Here are some useful links to help you get started with Crawlee:

Build your first crawler with Crawlee

Crawlee code examples

Apify's Web Scraping Academy

Crawlee data storage types

Scrapy

Scrapy is a full-featured web scraping framework and is the go-to choice for large-scale scraping projects in Python.

Scrapy is written with Twisted, a popular event-driven networking framework, which gives it some asynchronous capabilities. For instance, Scrapy doesn't have to wait for a response when handling multiple requests, contributing to its efficiency.
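The benefit of not waiting on each response can be shown with a small sketch. Note that it uses Python's standard `asyncio` rather than Twisted, and `fake_request` is a hypothetical stand-in that sleeps instead of touching the network, so it only illustrates the general idea behind Scrapy's efficiency.

```python
import asyncio
import time


async def fake_request(url: str, latency: float) -> str:
    """Hypothetical stand-in for a network call; sleeps instead of doing I/O."""
    await asyncio.sleep(latency)
    return f"response from {url}"


async def main() -> list[str]:
    urls = [f"https://example.com/{n}" for n in range(5)]
    # All five requests are in flight at once instead of running back to back
    return await asyncio.gather(*(fake_request(url, 0.2) for url in urls))


start = time.perf_counter()
responses = asyncio.run(main())
elapsed = time.perf_counter() - start

# Run sequentially, five 0.2 s requests would need about 1 s
print(f"{len(responses)} responses in {elapsed:.2f}s")
```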


In the example below, we use Scrapy to crawl IMDB's best movies list and retrieve the title, year, duration, genre, and rating of each listed movie.

import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule


class BestMoviesSpider(CrawlSpider):
    name = 'best_movies'
    allowed_domains = ['imdb.com']

    user_agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36'

    def start_requests(self):
        yield scrapy.Request(url='https://www.imdb.com/search/title/?groups=top_250&sort=user_rating', headers={
            'User-Agent': self.user_agent
        })

    rules = (
        # Follow the link to each movie page and parse it
        Rule(LinkExtractor(restrict_xpaths="//h3[@class='lister-item-header']/a"), callback='parse_item', follow=True, process_request='set_user_agent'),
        # Follow the "next page" link of the list
        Rule(LinkExtractor(restrict_xpaths="(//a[@class='lister-page-next next-page'])[2]"), process_request='set_user_agent')
    )

    # Scrapy 2.0+ passes the originating response as a second argument
    def set_user_agent(self, request, response):
        request.headers['User-Agent'] = self.user_agent
        return request

    def parse_item(self, response):
        yield {
            'title': response.xpath("//div[@class='title_wrapper']/h1/text()").get(),
            'year': response.xpath("//span[@id='titleYear']/a/text()").get(),
            'duration': response.xpath("normalize-space((//time)[1]/text())").get(),
            'genre': response.xpath("//div[@class='subtext']/a[1]/text()").get(),
            'rating': response.xpath("//span[@itemprop='ratingValue']/text()").get(),
            'movie_url': response.url
        }

Despite being an efficient and complete scraper, Scrapy has one significant drawback: it lacks user-friendliness. It requires a lot of setup and prerequisite knowledge. Besides, to scrape dynamic websites, Scrapy requires integration with a rendering tool such as Splash, making the learning curve to master this framework even steeper.

Apify Python SDK

Regardless of your choice of framework for web scraping in Python, you can take your application to the next level by using Apify's Python SDK to develop, deploy, and integrate your Python scrapers with the Apify Platform. Here are some of Apify's web scraping platform's top features:

- Host your code in the cloud and tap into the computational power of Apify's servers.

- Schedule Actor runs. Schedules allow you to run Actors and tasks regularly or at any time you specify.

- Integration with existing Actors and third-party services. Use the Python SDK to create Apify Actors. Store your scraped data in Apify datasets to be processed, visualized, and shared across multiple services such as Zapier, Make, Gmail, and Google Drive.

Ready to start web scraping with Python?🐍

Check out these articles on how to get started with Apify's Python SDK:

Apify SDK for Python

Web scraping with Python: a comprehensive guide

How to process data in Python using Pandas

Conclusion: Programming language to choose for web scraping in 2025

In short, the right choice of language and framework will depend on the requirements of your project and your programming background.

JavaScript is the language of the web. Thus, it offers an excellent opportunity for you to use only one language to understand the inner workings of a website and scrape data from it. This will make your code cleaner and ease the learning process in the long run.

On the other hand, Python might be your best choice if you are also interested in data science and machine learning. These fields greatly benefit from having access to large sets of data. Therefore, by mastering Python, you can obtain the necessary data through web scraping, process it, and then directly apply it to your project.

But choosing your preferred programming language doesn't have to be a zero-sum game. You can combine JavaScript and Python to get the best of both worlds.

On Apify Store you can try hundreds of existing web scraping solutions for free. As a next step, you can use Apify's Python API Client to access the output data from those ready-made solutions and then process it using Python's extensive collection of data manipulation libraries.

If you are still not sure about how to get started with web scraping, you can request a custom solution to outsource entire projects to Apify, and we will take care of everything for you 😉.

Finally, don't forget to join Apify's community on Discord to connect with other web scraping and automation enthusiasts. 🚀

Useful Resources

Want to learn more about web scraping and automation? Here are some recommendations for you:

👨‍💻 Web Scraping Academy

🔎 What is the difference between web scraping and crawling?

💬 Join our Discord