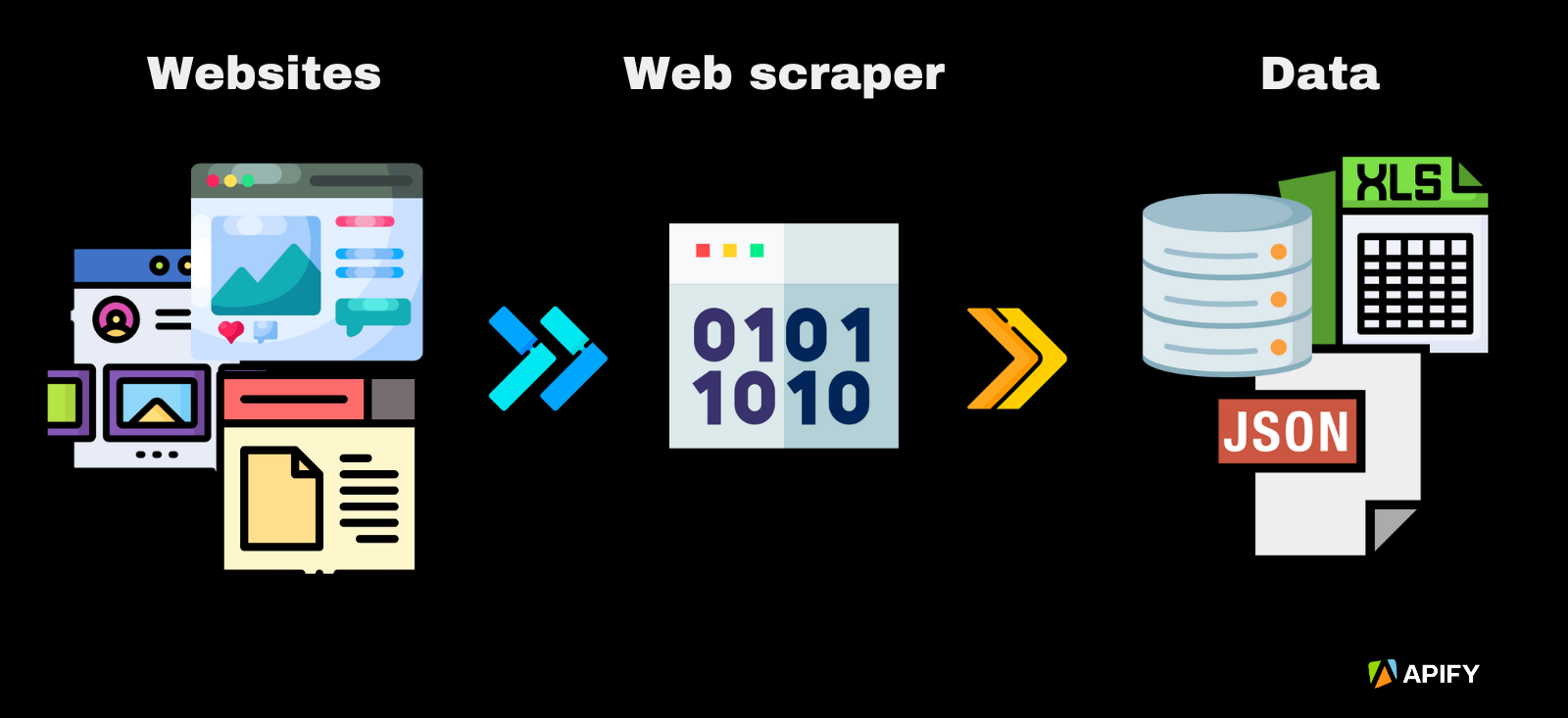

Web scraping is the process of automatically extracting data from a website. You use a program called a web scraper to access a web page, interpret the data, and extract what you need. The data is saved in a structured format such as an Excel file, JSON, or XML so that you can use it in spreadsheets or apps.

You could do this manually by copying and pasting, but scraping is typically performed using an automated tool that can pull data at scale from web pages and do it very fast.

What's the definition of web scraping?

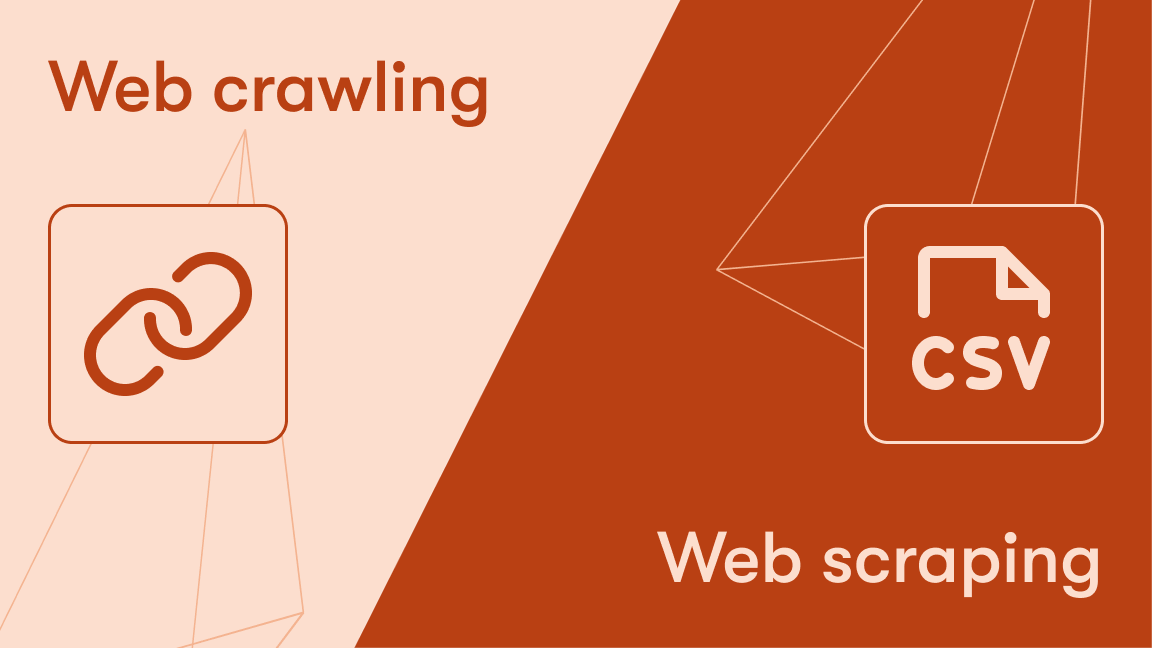

Web scraping is the automated process of extracting data from a website. Web scraping is also known as web harvesting, data extraction, web data extraction, data scraping, and data mining.

You might also hear it called screen scraping, but that's something a bit different.

Why web scrape?

It's already impossible for humans to process even a fraction of the data on the web. According to recent statistics, the amount of data created in 2024 is 402.74 million terabytes each day. That's why web scraping has become an essential tool. We need machines to rapidly read that data for us so that we can use it in new and interesting ways.

To give you some idea of what I mean, imagine how long it might take you to manually copy and paste text from 100 web pages. A machine could do it in less than a second if you give it the correct instructions. It can also do it repeatedly, tirelessly, and at any scale. Forget about 100 pages. In the time it would take you to open just the first few, a computer could deal with 1 million pages!

Web scraping can be used for everything from academic research to business intelligence. It's used to gather data at scale on product prices, weather information, market trends, and much more.

How does a web scraper work?

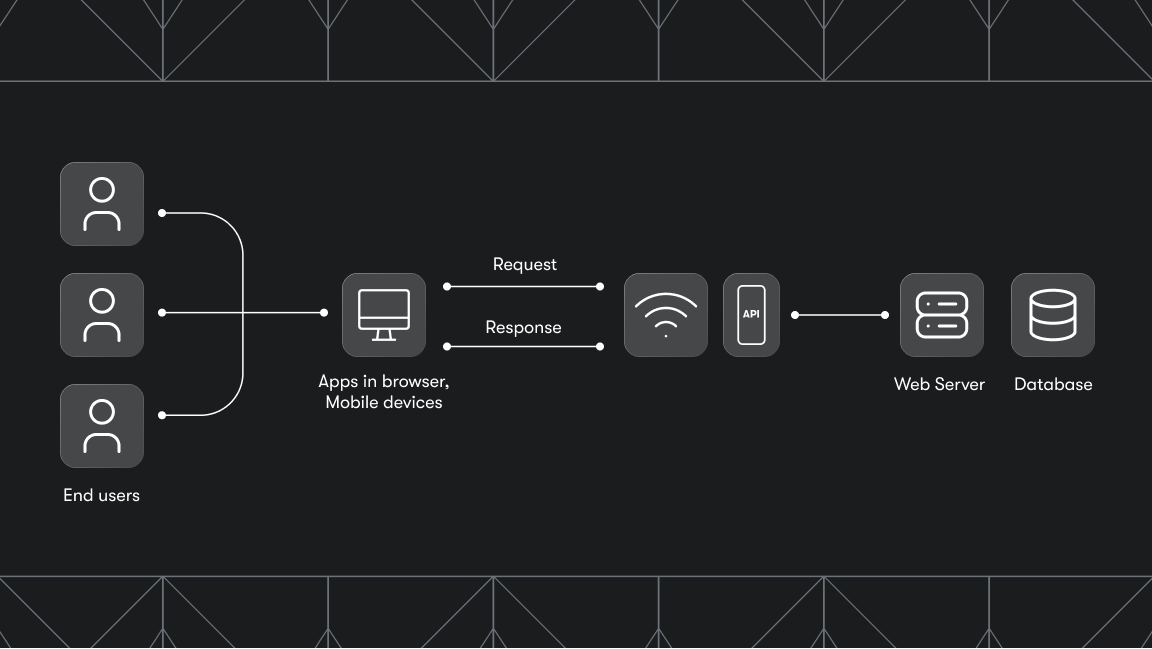

Web scrapers operate by sending HTTP requests to a web server, the same way that a browser does when you visit a site. Once the server responds with the page's HTML code, the scraper parses (basically, it breaks it down and tries to understand it) this code to locate particular HTML tags, classes, or attributes that contain the data to be scraped.

Most information on a web page is "wrapped" in tags that enable the browser to make sense of it, and it's these tags that make it possible for scrapers to get what you need.

Web scrapers can extract data from multiple web pages at a time, making them great for large-scale data mining.

What is scraping data?

Scraping data is the point of web scraping. It's all about getting that juicy unstructured data and transforming it into something more useful.

This unstructured data might include text, images, prices, contact details, or any other information publicly displayed on a web page.

The scraped data goes through data preparation tools, where it is cleaned, transformed into structured data, and stored in a database or file for further analysis or use. Structured data is just a way to say that the information is easy for computers to read.

Web scraping is used to extract what type of data?

Web scraping can be used to extract lots of different types of data from the internet. It can gather textual information such as product descriptions, prices, contact details, and customer reviews, as well as visual content like images and videos.

Depending on the use case, you can target specific data such as real estate listings, stock market trends, job postings, market research, or travel fares. It's also used for lead generation, to collect sentiment data from social media, news articles for content aggregation, content scraping by the media, and scientific data for academic research.

What is web scraping used for?

Lead generation

Web scraping is used to gather contact information and details about potential business leads from various online platforms. By collecting data from websites like LinkedIn, businesses can identify and target specific demographics. This kind of contact scraping can generate better leads.

Market research

Understanding market dynamics is crucial for any business. Web scraping allows analysts and researchers to collect vast amounts of data from various sources. This information, which might include customer reviews, competitor strategies, or market trends, helps to build a comprehensive picture of the industry landscape and enables brand monitoring.

Price monitoring and competitive intelligence

Price monitoring involves tracking the fluctuations in the prices of goods or services over time. It lets businesses keep an eye on these changes, allowing them to adapt their pricing models and strategies.

Competitive intelligence takes the concept of price monitoring a step further by employing advanced analytics and insights gathered through price scraping. Combining competitor analysis with market trends, customer behavior, and other influencing factors, can lead to a more nuanced and strategic approach to pricing.

Real estate listing scraping

In the real estate industry, web scraping is used to gather detailed information about properties listed online. By transforming this raw data into actionable property intelligence, real estate professionals can refine pricing strategies, improve market forecasting, and deliver more personalized services to clients.

Sentiment analysis

Web scraping plays a vital role in sentiment analysis by gathering opinions, reviews, and comments from social media, forums, and review sites. Market research companies can analyze this data to gauge public sentiment about products, services, or brand image, enabling them to respond to customer needs and preferences effectively.

Job market analysis

Recruitment agencies and HR professionals can make use of web scraping to monitor job postings on sites like Indeed. By analyzing job descriptions, salary trends, and skill requirements, they can gain insights into labor market dynamics, helping both employers and jobseekers.

Academic research

Researchers and academics can use web scraping to collect data from publicly available sources for scientific studies and analyses. This can include information on climate patterns, historical documents, social behavior, or data for generative AI or machine learning.

Travel fare aggregators

Travel aggregators and comparison sites use web scraping to gather information on flight fares, hotel prices, and vacation packages from various providers. This enables them to offer customers an overview of available options and pricing.

News and content aggregation

Web scraping enables media companies and news aggregators to collect articles, blogs, and news stories from different sources. This content scraping assists in creating centralized platforms where users can access diverse content from various publishers.

Stock market analysis

Investors and financial analysts use web scraping to track stock prices, market news, and financial reports. By continuously monitoring relevant data, they can identify trends, make predictions, and formulate investment strategies aligned with market movements.

Healthcare data extraction

In the healthcare sector, web scraping can be used to collect data on disease outbreaks, medical research, patient reviews, and more. This information can support public health initiatives, medical studies, and healthcare service improvements. Scraping was used extensively during the COVID-19 pandemic.

Comparison websites

Comparison websites are a great example of how web scraping can benefit consumers. These platforms use web scraping to extract data on products or services from online retailers and service providers. Aggregating information such as prices, features, customer reviews, and availability, lets these websites present users with a side-by-side view of their options.

What are the benefits of web scraping?

Web scraping gives anyone the means to access and analyze vast amounts of data from the web. Here are some of the pros and cons of web scraping:

Pros of web scraping:

- Fast and efficient

- Data extraction at scale

- Cost-effective and flexible

- Reliable and robust performance

- Low maintenance costs

- Delivers structured data

Cons of web scraping:

- Web scraping has a learning curve

- Needs perpetual maintenance

- Data extraction isn't data analysis

- Scrapers can get blocked

But at the end of the day, automating the data collection process primarily means that web scrapers save time and resources. And that's a massive benefit for any business.

Want to start web scraping?

Visit Apify Store if you just want to use a pre-built scraper. You can find scrapers there for e-commerce websites, lead generation, and more. If you can't find what you need, you can request a web scraper from our certified Apify partners.

And if you're ready to build your own web scrapers, check out our Web Scraping Academy. It has a great web scraping for beginners section, along with more advanced web scraping courses.