I have a confession to make.

Numbers weren't always my strength, and Google Sheets used to intimidate me. However, my work requires analyzing data and finding patterns. Here's how I learned to handle data analysis, even without a spreadsheet background.

Thankfully, tools like ChatGPT have made data analysis easier.

AI can analyze thousands of data points in minutes – much faster than manual review. This helps you make informed decisions quickly, whether you're watching competitor moves, tracking market prices, or understanding what customers think.

Of course, I’m preaching to the choir. It’s not like you enjoy manually combing through thousands of spreadsheets for fun (or maybe you do, and I need to apologize).

This guide explains how to use AI for data analysis, with practical examples you can apply today.

The basics: using AI for data analytics

AI algorithms can spot patterns in millions of data points that human analysts might miss entirely. What used to take weeks of spreadsheet analysis now happens in minutes.

You can use four types of AI for data analytics: machine learning, deep learning, NLP, and computer vision. Here’s a simple definition of the different types of AI you can use for data analysis with concrete examples:

- Machine learning is when computers learn from data to make better guesses. For example, you can look at past sales and guess what people might buy next.

- Deep learning helps computers understand complicated data. You can find hidden patterns in medical records to predict health issues before they happen.

- NLP (Natural Language Processing) helps computers understand words. You can share customer reviews to get insights into customer sentiment.

- Computer vision lets computers see and understand pictures or videos. You can use computer vision to improve the customer flow around a store by analyzing customer flow around a store.

You can use AI for data analysis at the individual level, like when I analyze relatively small SEO data sets. Or, you can do it at an enterprise level, like how Tesla analyzes petabytes of data to improve driving.

Pros & cons of using AI for data analytics

The three major benefits of using AI for data analytics are speed, accuracy, and scalability.

AI can process massive data sets fast, saving you time over what it would take to do the same task manually. Then there’s accuracy — since AI doesn’t get tired or overwhelmed, it catches patterns and trends you might miss after staring at a screen for too long.

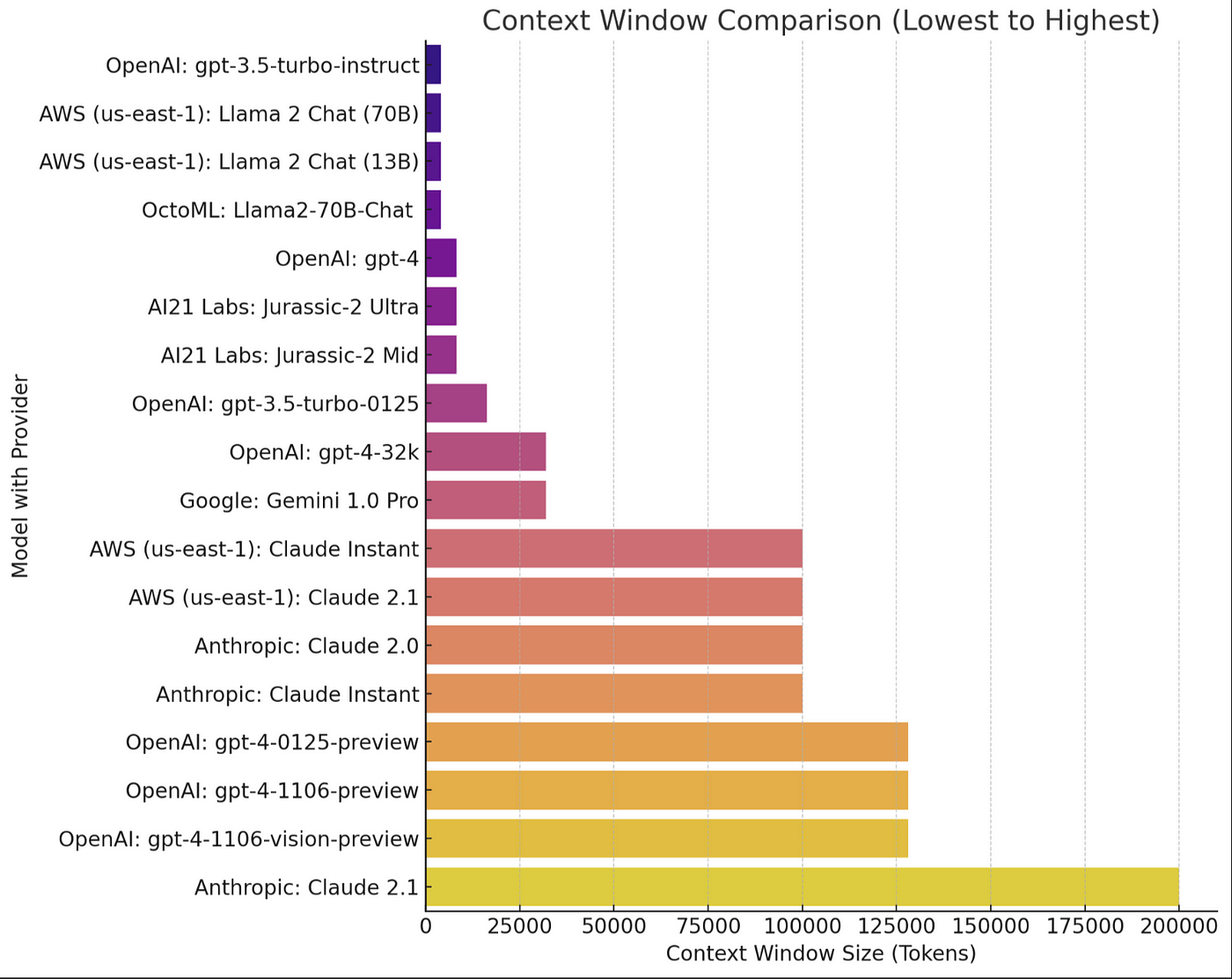

Scalability is another win. You’ve seen the growing context window offered by Large Language Models (LLM). You can input an ever-growing volume of data for analysis.

That’s great in theory.

However, it’s not all perfect. Inputting large volumes of data into an AI context window can overwhelm an LLM. Rather than effectively analyzing data or abiding by an experiment's parameters, it can default to a simplistic output.

Companies like Writer are working on dealing with these growing pains as they develop generative AI. This guide to generative AI for business leaders explains more.

For example, when conducting data analysis, Writer’s Palmyra processes chunks of data, generates a summary, and stores it for future reference. Breaking a process down into manageable chunks improves an LLM's performance.

Consequently, you get better results from larger datasets.

Issues like this highlight the limitations of using AI for data analysis. There are other considerations, too. For example, training data impacts generative output. The uproar around the initial release of Google Gemini provides an excellent case in point. Biased models can impact any aspect of data analysis.

5 ways to use AI for data analysis

The following sections provide a snapshot of five powerful ways to use AI in your data workflows.

You'll learn to combine AI with web scraping to standardize data collection, clean datasets automatically, analyze patterns with advanced statistics, create reliable prediction models, and guide strategic planning with concrete findings.

Data collection: automated data harvesting and API integrations

AI automates information gathering from multiple sources at scale in real time. That could mean scraping websites, pulling data from social media, or integrating directly with APIs. For instance, tools like Apify can collect large volumes of web data automatically, freeing you from the tedious task of manual data gathering.

Opportunities for automated data harvesting and API integrations for small to medium-sized businesses are varied. Take competitor pricing, automated data harvesting through web scraping allows real-time price comparisons and dynamic pricing strategies.

The development process would look something like this:

- Identify competitor websites and target product pages.

- Scrape product names and prices periodically. For example, using a Google Maps Scraper.

- Store the data for historical comparison.

- Analyze trends and adjust your pricing strategy accordingly.

You can use Python libraries like Beautiful Soup or Scrapy for dynamic content. Alternatively, you could utilize Selenium with headless browsers like ChromeDriver.

Automating data harvesting and integration with APIs saves time and ensures that data collection is consistent, up-to-date, and scalable.

Data cleaning: data sanitization and handling inconsistencies

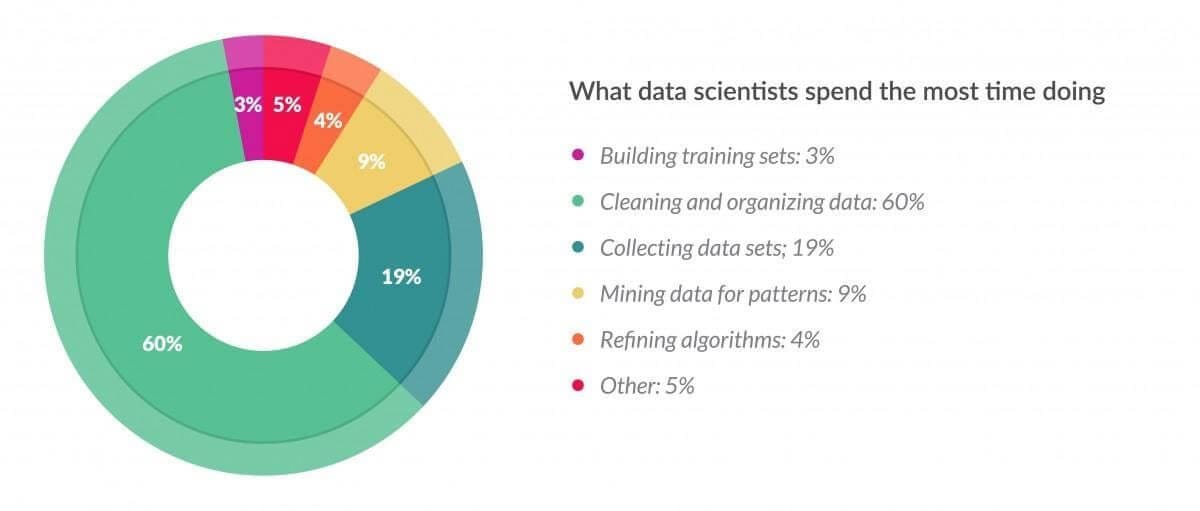

Data scientists spend up to 60% of their time cleaning messy data, according to a 2016 survey cited in Forbes (yeah, not a lot of fun).

It’s an important job, though. A report from MIT News indicated that bad data costs the US as much as $3.1 trillion each year.

It’s a pretty big number.

You can utilize AI tools to improve every step of data cleaning, from assessing data quality to monitoring and improving the data cleaning process.

For example, libraries like Pandas combined with machine learning models can visualize and detect anomalies quickly. These will help you detect duplicate entries, missing values, and formatting issues.

Tools like these help you set up systems to clean larger volumes of data more effectively. However, you still need human oversight to review and validate the AI’s work for accuracy and biases.

Data analysis: exploratory and advanced statistical analysis

Data analysis consists of two main stages: exploratory data analysis (EDA) and advanced statistical analysis. Exploratory data analysis (EDA) identifies patterns and trends. Advanced statistical analysis uses complex methods to make predictions and test hypotheses.

For example, in a house prices dataset, EDA might show that homes with more bedrooms generally cost more. A scatter plot could reveal small homes that are expensive due to location. A box plot by neighborhood could highlight areas with consistently higher prices.

You get varied insights from EDA.

As you might imagine, how you present the data is critical to the data’s value in a company. That’s because many people are visual learners (myself included).

Generating charts, graphs, and visuals using data takes something abstract for most people - number crunching - and makes it tangible. You can use Python libraries like Pandas for data manipulation and Matplotlib or Seaborn for visualizations.

Advanced statistical analysis uses techniques like regression analysis, hypothesis testing, and machine learning models to provide data insights. Use libraries like Scikit-learn to implement machine learning algorithms or Statsmodels to conduct statistical tests.

Predictive analytics: predictive models for real-world applications

Predictive analytics uses statistical analysis to forecast future outcomes based on historical data. You can use predictive analytics to gain insights into customer behavior, market trends, or operational outcomes.

Demand forecasting is a practical use case of predictive analytics. You can use Python libraries like Scikit-learn or TensorFlow to create models that predict product demand based on past sales data, seasonal trends, and external factors such as market conditions.

Predictive models are used in healthcare, for example. One predictive analytics tool identified over 90% of early-stage breast cancers likely to spread or recur. These rates of detection are better than those of the average doctor. It’s a nice real-world example of the benefits of predictive analytics.

Data-driven decisions: strategic planning and real-time support

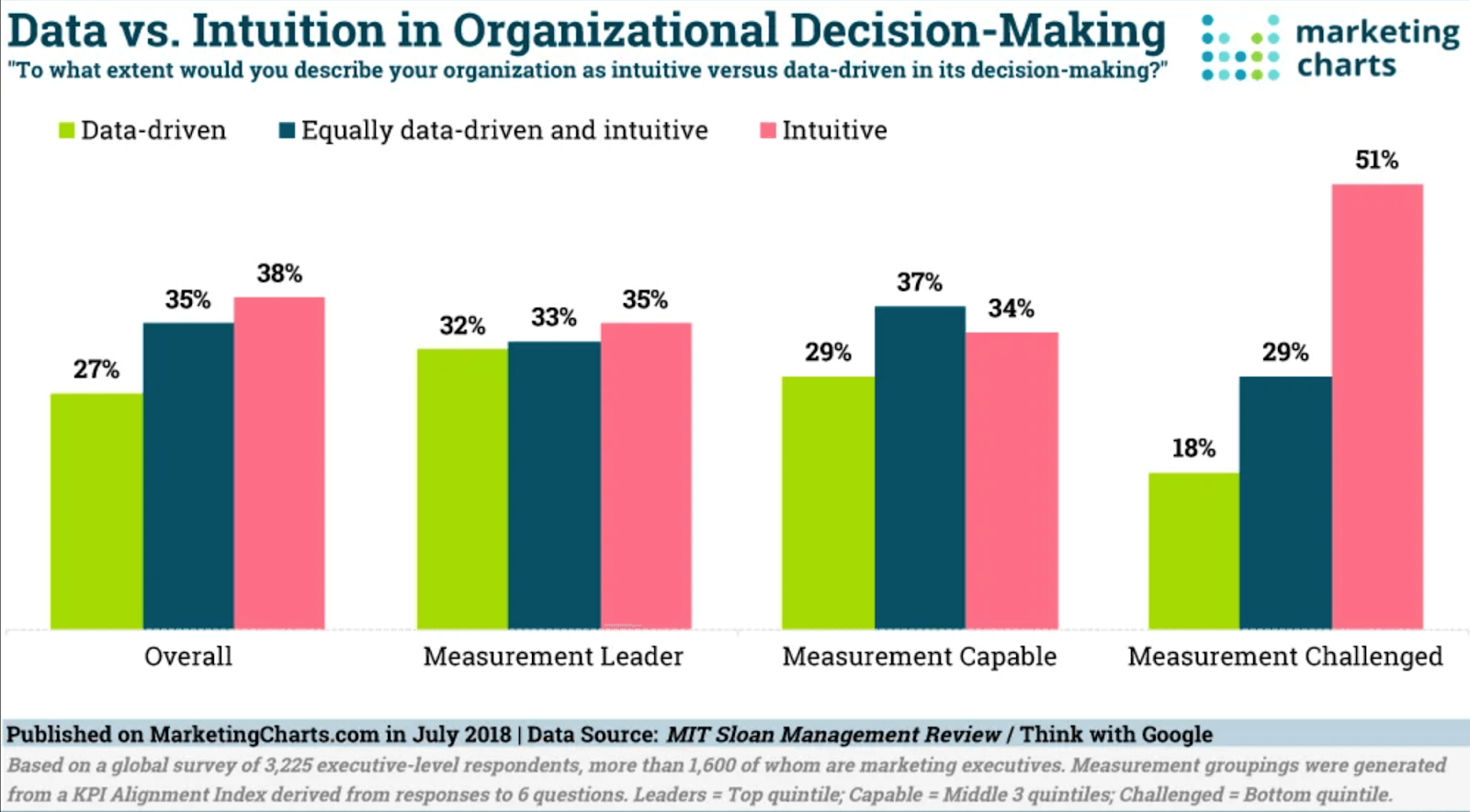

Despite the obvious benefits of using data to make informed decisions, 38% of overall decisions at a company are still based on intuition. At least, that’s what an MIT Sloan Management Review discovered in a 2018 survey.

Better data collection and analysis can reduce those numbers.

Setting up automated data pipelines creates a steady stream of new information. Regular updates let you provide immediate analytics and support.

Tools like Tableau and Power BI help visualize your findings through dynamic platforms, such as a Power BI dashboard. Clear metrics let teams track their key performance indicators (KPIs) and respond quickly.

Integrating AI with existing data infrastructure

AI works alongside your current data systems to refine data processing without disrupting your workflow. The automation makes your analysis faster and more accurate. Here's what to consider when adding AI to your infrastructure.

Compatibility with traditional data systems

According to a 2021 survey by Tata Consultancy Services, over two-thirds of global companies use legacy or mainframe applications for core business operations. Perhaps more surprisingly, a survey from 2020 found that 74% of companies that started a legacy system modernization project didn’t complete it.

So, most companies run on legacy software. And most of these companies struggle to update their systems.

This is an issue (or an opportunity).

Jensen Huang, the CEO of Nvidia, predicts we’re $150 billion of a one trillion-dollar overhaul of data centers within the next three to five years. As these changes roll out, more companies will attempt to transition away from legacy systems and take advantage of LLMs and other forms of AI to improve business operations.

There will be a lot of work in the coming years for programmers managing transitions.

Dealing with legacy systems can be both challenging and rewarding. The complexity arises from ensuring compatibility between outdated systems and new technologies. You need to do this while minimizing disruptions to business operations.

To run a successful legacy system modernization project, focus on gradual, iterative changes rather than a complete overhaul. Collaboration with business and technical teams is key to securing necessary resources and making informed decisions.

Cloud vs. on-premises AI solutions

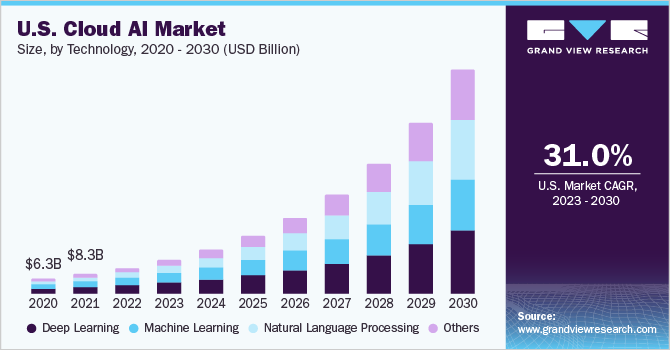

The market for cloud-based AI solutions is growing rapidly. AI-supported cloud computing is estimated to grow at a Compound Annual Growth Rate (CAGR) of 39.6% from 2023 to 2030. That equates to a rise from $60.35 billion in 2023 to nearly $398 billion by 2030.

Growth in this space is fuelled by massive investments by companies like Amazon through Amazon Web Services, Google, and Microsoft. On-site solutions offer limited benefits for most businesses.

Rather, the business focus is on ensuring you maintain the integrity of your corporate data. Essentially, companies want the benefits of AI while keeping data private.

Yet, while Cloud computing will boom in the coming years, it won’t replace private infrastructure.

Organizations with strict security rules or time-sensitive processing often need AI to run on their own hardware. This setup typically requires extra attention when connecting to older systems.

Data governance and management

Effective data governance and management are crucial when integrating AI to maintain data integrity. They also ensure compliance with regulations like GDPR or HIPAA.

Data governance, management, security, and privacy are critical, given the sensitivity of some sectors where AI is utilized. Obvious examples include healthcare and financial services. For example, robotic process automation-based claims processing (CAGR 35.1%), real-time financial advice (CAGR 29.5%), and digital banking experience (CAGR 29.1%) are all growing fast in the financial services sector.

Those three examples in the financial services industry rely on generative AI, data, and analytics technologies. Data management is critical in this process.

Leaks generate real risks to customers. For example, 2.6 million Americans were affected by identity theft and related fraud in 2023, at a cost of $10 billion (that’s why things like identity theft protection are so important). As more data is handled in the cloud, risks increase.

You need clear policies around data handling, security, and access control to prevent data breaches and inaccuracies. Conduct regular audits, use encryption, and manage data lifecycles properly to protect sensitive information.

Getting started with AI data analysis

You understand the premise for using AI data analysis. The process includes collecting, cleaning, and preparing data before utilizing relevant platforms for analysis. What follows is a practical framework you can use.

Assessing your data readiness

To assess data readiness for AI analysis, start by checking data provenance and quality. Make sure the data is clean, accurate, and free of duplicates or errors. Also, assess whether you have enough data and that it covers diverse scenarios to avoid model bias.

Next, check if the data is labeled properly for supervised learning. Consider manual labeling or semi-supervised techniques if needed. Also, be sure your infrastructure can handle large datasets and intensive AI computations.

Choose between cloud services or scalable storage as needed. Factors like the data you’re handling will define the best option.

Finally, verify that your data complies with privacy regulations like GDPR or HIPAA. That’s especially important for sensitive information. Data should also be easily accessible and well-integrated across systems using APIs or data pipelines.

Choosing the right AI tools

Choosing the right AI tools is key for effective data analysis. The decision depends on task type, data size, system integration, and customization needs. Selecting the proper framework, libraries, and cloud services will streamline development and provide scalability and accuracy.

Here are some specific factors to consider for compatibility and efficiency.

- Supervised vs. unsupervised learning: Tools like scikit-learn or TensorFlow are good for classification, regression, and clustering tasks. Whereas Keras or PyTorch are better suited to deep learning.

- Data volume and scalability: Apache Spark with MLlib can handle large data volumes.TensorFlow Distributed or PyTorch Distributed support parallel processing across clusters.

- Ease of integration: TensorFlow and scikit-learn have great API support for Python. They offer easy integrations with libraries and frameworks like Pandas for data manipulation and NumPy for numerical computation. Google Cloud AI Platform or AWS SageMaker provides end-to-end solutions for building, training, and deploying models in the Cloud.

- Pre-built models and customization: Hugging Face Transformers or OpenAI GPT offer pre-built models you can fine-tune for your use case. Frameworks like PyTorch or TensorFlow allow you to build custom models from scratch.

- Visualization and monitoring: TensorBoard (for TensorFlow) and Matplotlib or Seaborn (for Python) can help monitor and visualize model performance.

Choosing AI tools requires considering task type, data volume, and system integration. I’ve tried to cover the core points to keep in mind for your project.

Implementing AI projects: step-by-step guide

A good plan of action is critical to your project's success. Define clear project objectives. Identify the key data sources required and assess the type of AI model that will meet your needs.

Once you’ve defined the project scope and what you hope to achieve, get buy-in from key decision-makers.

Consider the following factors for data preparation and model development: Your data should be free from errors and inconsistencies. When everything is running, track performance, gather user feedback, and refine things as you go.

Put AI to work

To make AI work effectively in your projects, remember to choose the tools that match your needs, check your data quality, and follow implementation standards. You'll surely face common issues like model constraints and data skew, but a well-planned AI approach will help you get the most out of the technology.

Website Content Crawler was specifically designed to extract data for feeding, fine-tuning, or training large language models (LLMs) such as GPT-4, ChatGPT, or LLaMA.

- Finds and downloads the right web pages.

- Transforms the DOM of crawled pages to remove navigation, header, footer, cookie warnings, and other fluff.

- Converts the resulting DOM to plain text or Markdown and saves downloaded files.

AI for data analysis FAQ

Can AI be used for data analysis?

AI can be used for data analysis to quickly process large datasets, identify patterns, and generate insights that might be missed by manual analysis. AI algorithms like machine learning automate data classification, prediction, and anomaly detection.

Which AI tool is best for data analysis?

The best AI tool for data analysis depends on your specific needs. Popular options include TensorFlow for deep learning, RapidMiner for end-to-end machine learning workflows, and Google Cloud AI for scalable data analytics.

Can AI replace a data analyst?

AI can automate many tasks traditionally done by data analysts. However, it can't replace a data analyst, as human expertise is still needed to interpret complex insights, provide context, and make strategic decisions based on the data.