It's common knowledge that large language models (LLMs) are the cause of today's AI revolution. It's also well-known that these LLMs were trained with data crawled and scraped from the web.

There's one problem with them, though. They only get information from the data they've been trained on. That means they only know the world at the time of training. So, there's a knowledge cutoff.

Thankfully, there's a solution to this problem.

While not as famous as LLMs, this solution is what really lies behind the AI revolution. It's called retrieval-augmented generation (RAG).

RAG Web Browser is designed for Large Language Model applications or LLM agents to provide up-to-date Google search knowledge. It lets your Assistants fetch live data from the web, expanding their knowledge in real time. This tool integrates smoothly with RAG pipelines, helping you build more capable AI systems.

What is RAG in AI?

Retrieval-augmented generation (RAG) is an AI framework and technique used in natural language processing that combines elements of content retrieval and content generation to enhance the quality and relevance of text produced by AI models.

RAG first came to the attention of AI developers in 2020 with the publication of the research paper, Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Since then, it has been used to improve generative AI systems.

What is RAG in LLMs?

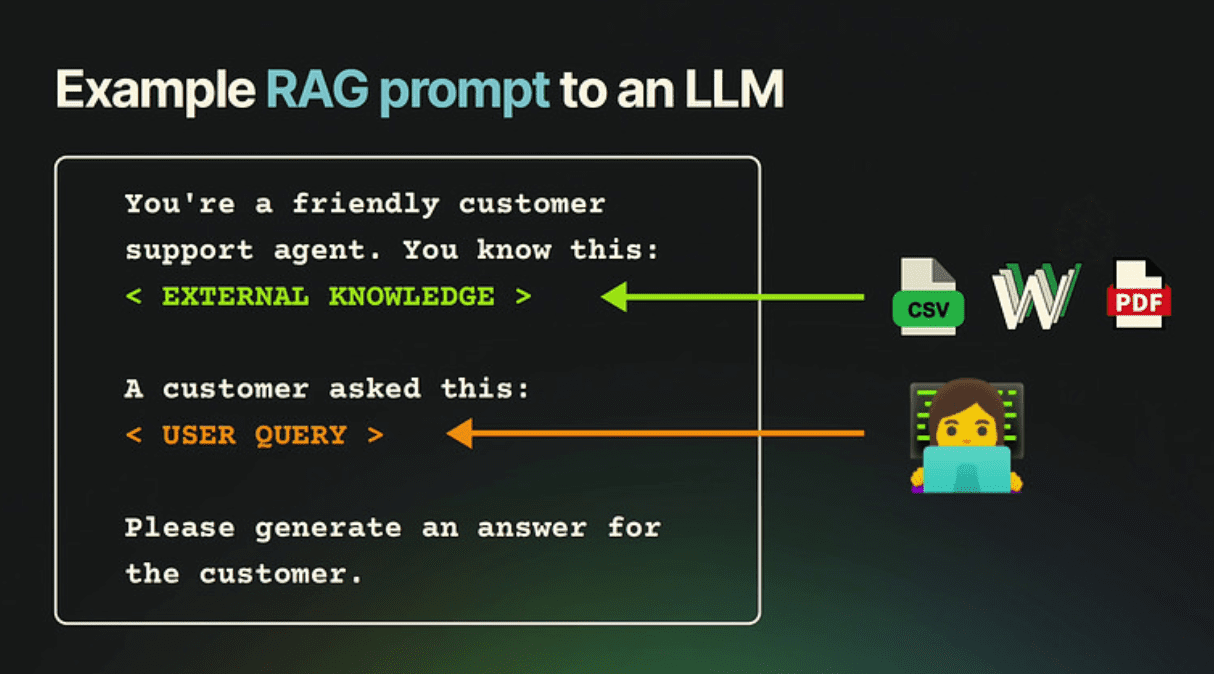

In the context of large language models (LLMs), retrieval-augmented generation is an approach that combines the generative capabilities of language models with information retrieval. Here's a concise overview:

The retrieval-based component

RAG models have a retrieval-based component (not to be confused with fine-tuning) that stores and retrieves pre-defined responses from a database or knowledge base. Using knowledge base software ensures that this retrieval component operates efficiently, offering a reliable foundation for response generation. Creating a knowledge base using knowledge base software ensures that this retrieval component operates efficiently, offering a reliable foundation for response generation.

The generative component

In addition to the retrieval-based component, RAG models have a generative component that can generate responses from scratch.

Retrieval augmentation

The key innovation in RAG models is the retrieval-augmentation step. Instead of relying solely on generative responses or retrieval responses, RAG models combine both.

When a user query is received, the model first retrieves a set of candidate responses from its knowledge (the retrieval-based component).

Then, it uses the generative component to refine or enhance the retrieved response.

What is RAG in chatbots?

RAG in chatbots refers to the same core concept as in other LLMs, but it's specifically applied to enhance conversational AI. Here's how it works in chatbot contexts:

- Query understanding: When a user sends a message, the chatbot analyzes it to understand the intent and content.

- Information retrieval: The chatbot searches its knowledge base for relevant information related to the user's query.

- Context augmentation: The retrieved information is added to the conversation context.

- Response generation: The chatbot uses the original query, conversation history, and the retrieved information to generate a response.

- Delivery: The generated response is sent back to the user.

Retrieval-based models search a database for the most relevant answer, while generative models create answers on the fly. The combination of these two capabilities makes RAG chatbots efficient and versatile.

A tutorial that shows you how to make your own custom AI chatbot with Python using LangChain, Streamlit, and Apify

The benefits of RAG in AI

1. It mitigates hallucinations

Because it incorporates retrieved information into the response generation process, RAG helps reduce the likelihood of the model making things up or generating incorrect information. Instead, it relies on factual data from the retrieval source.

2. It's more adaptable

RAG systems can handle a wide range of user queries, including those for which no pre-defined response exists, thanks to their generative component. Moreover, the retrieval mechanism can be fine-tuned or adapted to specific domains or tasks, which makes RAG models versatile and applicable to a wide range of NLP applications.

3. It produces more natural language

RAG models can produce more natural-sounding responses compared to purely retrieval-based models. Furthermore, they can personalize generated content based on specific user queries or preferences. By retrieving relevant information, the models can tailor the generated text to individual needs.

4. It reduces production costs

This last point is the main reason that RAG is the real reason behind the AI revolution. GPT-4 costs hundreds of millions of dollars. But you can set up a RAG pipeline very quickly and cheaply.

Does ChatGPT use RAG?

A really simple example of RAG in action is adding knowledge to custom GPTs.

When you add a dataset to a GPT, the GPT effectively has a RAG pipeline under the hood. It retrieves data from its knowledge and generates an answer based on that information.

This means the information will be more reliable than when the GPT uses its browse the web feature.

How to collect data to build a RAG pipeline

To create or enhance a retrieval-augmented generation AI model, you need data for your knowledge base or database, so let's end with where to begin: Website Content Crawler.

1. Use Website Content Crawler to collect web data

Website Content Crawler can scrape data from any website, clean the HTML by removing a cookies modal, footers, or navigation, and then transform the HTML into Markdown. This Markdown can then be used to feed AI models, LLM applications, vector databases, or RAG pipelines. Website Content Crawler integrates well with LangChain, LlamaIndex, Pinecone, Quadrant, and the wider LLM ecosystem.

Extract text content from the web to feed your vector databases and fine-tune or train large language models such as ChatGPT or LLaMA.

2. Train the model with your data

If your AI model includes a generative component, you'll need training data for fine-tuning the model (using RAG and fine-tuning in tandem is the ideal solution). This data may consist of conversational or text data relevant to your application domain. You'll need to ensure that the data is appropriately labeled for training.

3. Integrate the data with your RAG architecture

Finally, integrate the knowledge base and training data into your RAG architecture so the model can retrieve and generate responses based on user queries. Understanding how to use AI agents effectively will help automate these processes, enabling seamless interaction and continuous learning within your system.

Transfer results from Actors to the Pinecone vector database, enabling Retrieval-Augmented Generation (RAG) or semantic search over data extracted from the web.

Summary: What is RAG?

RAG (retrieval-augmented generation) is a powerful AI framework that combines content retrieval and generation to enhance the quality and relevance of AI-produced text. It addresses a key limitation of large language models (LLMs) by allowing them to access up-to-date information beyond their initial training data.

Key points:

- RAG integrates a retrieval-based component with a generative component.

- It helps mitigate hallucinations in AI responses by grounding them in factual data.

- RAG makes AI models more adaptable and capable of handling a wide range of queries.

- It produces more natural and personalized language compared to purely retrieval-based models.

- RAG significantly reduces production costs compared to training massive LLMs from scratch.

RAG is particularly useful in chatbots and other AI applications where accurate, context-aware responses are required. It works by understanding a query, retrieving relevant information, augmenting the context, and then generating a response.

To implement RAG, you can use tools like Website Content Crawler to collect and process web data, which can then be integrated into the RAG architecture.

While LLMs have garnered much attention, it's RAG that truly powers the current AI revolution. By enabling AI to combine vast knowledge bases with generative capabilities, RAG offers a more flexible, cost-effective, and powerful approach to AI-driven natural language processing tasks.