Hey, we're Apify. You can build, deploy, share, and monitor any scrapers on the Apify platform.

We’re going to show you how to use the Apify platform and a ready-made tool called Google Maps Scraper to extract data from Google Maps. This scraper, or crawler, will enable you to extract the most recent Google Maps data directly from the website, including reviews, images, opening hours, and popular times.

To learn how to achieve this, you can follow a step-by-step guide here or watch our short YouTube tutorial below.

📝 What data can I extract from Google Maps?

| | | |
|---|---|---|
| 🔗 Place ID, subtitle, category, name, and URL | ✍️ Review text | ☎️ Phone and website, if available |
| 📍 Address, location, plus code | 🔍 People also search | 🗓 Published date |
| 🛰 Geolocation | 🍔 Images | 💳 Hotel room price |
| 🏷 Menu and price, if available | ➕ List of detailed characteristics (additionalInfo, optional) | 👩‍💻 Response from owner - text and published date |
| 🔒 Temporarily or permanently closed status | 🤳 Review images | 🔗 Review ID & URL |
| 🧑‍🍳 Opening hours | 🌏 Language & translation settings | 🗂 Reviews sorting and filtering |
| ⌚️ Popular times - histogram & live occupancy | 📇 Reviewer name and ID | 🏨 Hotel booking URL + nearby hotels |
| 🌟 Stars | ⭐️ Average rating (totalScore), review count, and review distribution | 🙋 Updates from customers & Questions and answers |

💿 Why not use the official Google Maps API?

Some massively popular websites such as Instagram and Amazon do provide APIs that let users access part of their data, scraping purposes included. Google Maps is among these websites. So why do people search for an unofficial Google Maps API in the first place when there’s an official API around?

The answer lies in the limitations of the Google Maps API and the need to find alternatives that get around them. Back in 2010, Google Places API introduced considerable limitations on scraping their data. Users also seek unofficial APIs to access functionalities such as creating a Google Maps multi-destination route, which might not be fully supported by the official API. Here are just a few reasons why alternatives to Google Maps API are popular:

🏷 Prices got pretty high. In 2010, Google Places made using their APIs paid. Eight years later, in 2018, Google introduced a new billing model for businesses using Google Maps APIs. The number of free API calls was cut, and the new pricing wasn’t welcome.

🤔 Pricing model is also tricky. The freemium monthly credit of $200 covers a seemingly safe package of 28K Dynamic Maps loads, 100K Static Maps loads, 40K Directions calls, and 40K Geolocation calls. Once it is used up, things get tricky: the costs go pretty high if you decide to use Embed API in Directions, Views, or Search mode. And don’t even get us started on autocomplete - that one consumes credits like wildfire.

🔒 Authentication gate. Your API calls have to include a valid API key linked to a Google Cloud Platform account; yes, even if your usage remains within the initial free $200 credit.

📞 Limited number of free API calls. 28K dynamic loads per month for free (compared to 25K per day before the 2018 pricing change). All this leads to…

👀 Anxious monitoring. You have to constantly keep an eye on your API usage to stay within the limits, because you don’t want your account to get blocked out of the blue for overuse.

⏱ Imposed limitations on results. Google Places API enforces rate limits (100 requests per second) and IP quotas. Some features, such as the popular times histogram, are still unavailable in Places API.

🌋 Unpredictability. Google can change the paid API or impose new restrictions at any moment. Those changes can affect your solution significantly and without warning.
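To make the free-tier figures quoted above a bit more tangible, here is a small Python sketch of the arithmetic. It only uses the numbers mentioned in this article (a $200 monthly credit and the call volumes it is said to cover) and assumes flat pricing beyond the credit, which is a simplification: Google's real price list is tiered and can change. The function names and the 100,000-load workload are made up for illustration.

```python
# Figures quoted in this article: a $200 monthly credit and the number of
# calls that credit is said to cover for each API.
MONTHLY_CREDIT_USD = 200
FREE_CALLS = {
    "Dynamic Maps": 28_000,
    "Static Maps": 100_000,
    "Directions": 40_000,
    "Geolocation": 40_000,
}


def implied_price_per_1000(free_calls, credit=MONTHLY_CREDIT_USD):
    """Price per 1,000 calls implied by 'the credit covers free_calls calls'."""
    return round(credit / free_calls * 1000, 2)


def monthly_bill(calls, free_calls, credit=MONTHLY_CREDIT_USD):
    """Estimated bill for one API, ASSUMING flat pricing beyond the credit."""
    billable = max(0, calls - free_calls)
    return round(billable * credit / free_calls, 2)


for api, free_calls in FREE_CALLS.items():
    print(f"{api}: ${implied_price_per_1000(free_calls):.2f} per 1,000 calls")

# Hypothetical workload: 100,000 Dynamic Maps loads in one month.
print(monthly_bill(100_000, FREE_CALLS["Dynamic Maps"]))
```

Under these assumptions, anything within the free volume costs nothing, and every extra thousand Dynamic Maps loads adds roughly seven dollars, which is why usage has to be watched so closely.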

These are just a few reasons that encouraged businesses and developers around the world to find alternative ways to scrape the Google Maps website, such as Google Maps Scraper 🔗, which now has 30K+ users. While the official Google Maps Places API can be an adequate option in some cases, low-code crawlers such as ours can provide:

🧬 Unlimited results

🌟 All place reviews

🏞 All place images

📊 Popular place times histogram

🗺 Visual representation of scraped data

💸 All for a better price

Now let's walk you through every step of the process of scraping Google Maps. There are two ways of scraping Google Maps efficiently: either by using a ready-made scraper (or crawler) or by creating a scraping bot of your own. Since Google Maps is a complex site to extract data from (and to build a web scraper for), let's try out the ready-made option first.

🌎 How to scrape Google Maps?

- Go to Google Maps Scraper.

- Add the search term, location, category, URL, or geolocation to scrape.

- Set up extra parameters such as reviews, web results, images, or questions.

- Click Start and check your results in Output.

- Export data in CSV, HTML, JSON, XML, or using an API.

Step 1. Go to Google Maps Scraper

First, go to the Google Maps Scraper page and click the Try for free button.

You can create a free account using only your email address or GitHub account. Afterward, you will be redirected to Apify Console – your workspace for all things data extraction.

Step 2. Indicate the search term or URL to scrape

There are two ways to get your Google Maps data. Choose your starting point: search terms 🔎 or URLs 🔗.

🔎📍 How to scrape Google Maps by search term + location

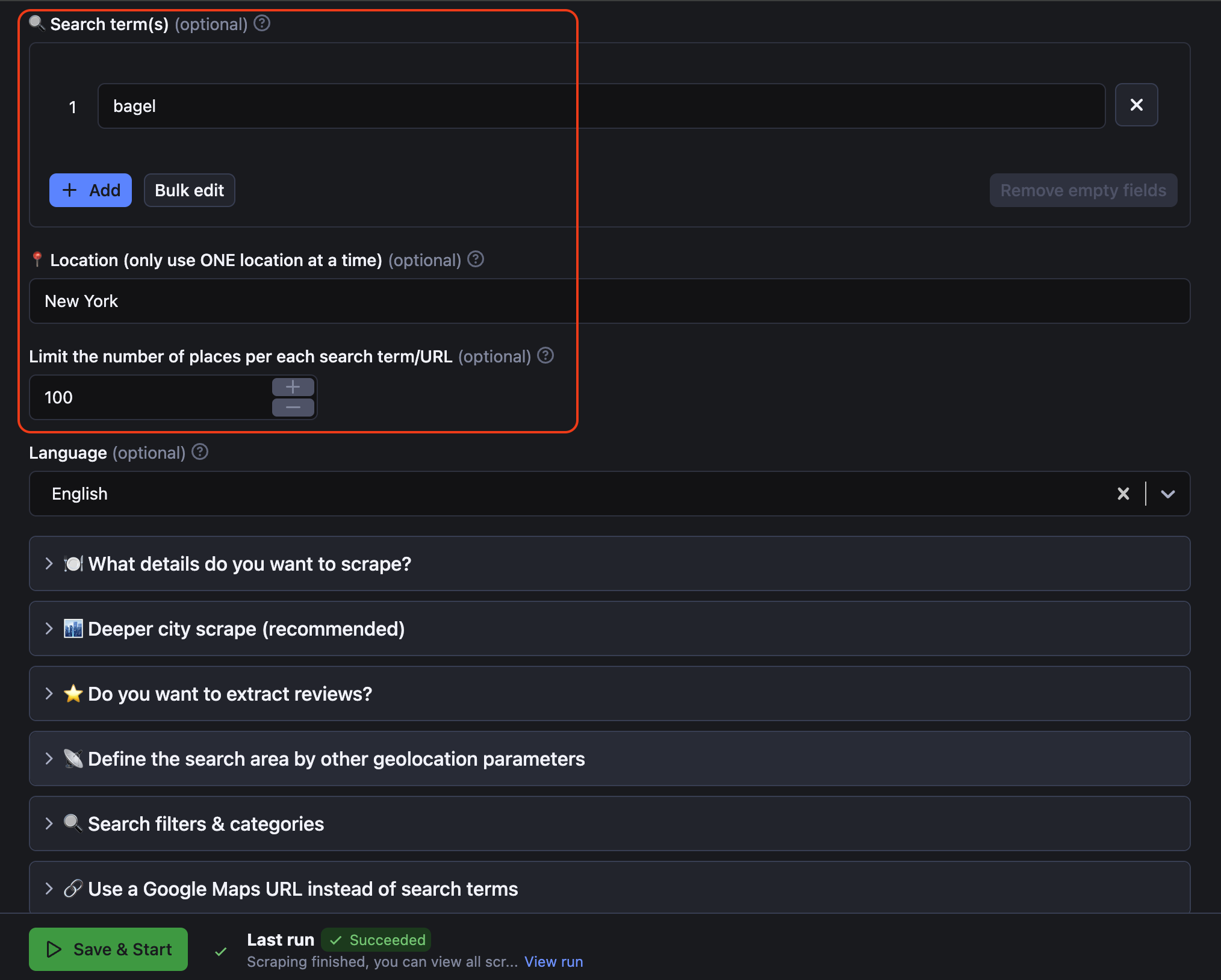

Enter your search term and location in the first fields of the scraper, e.g., bagel -> New York. That is enough for a regular scrape: the scraper will find places in New York City that mention bagel 🥯.

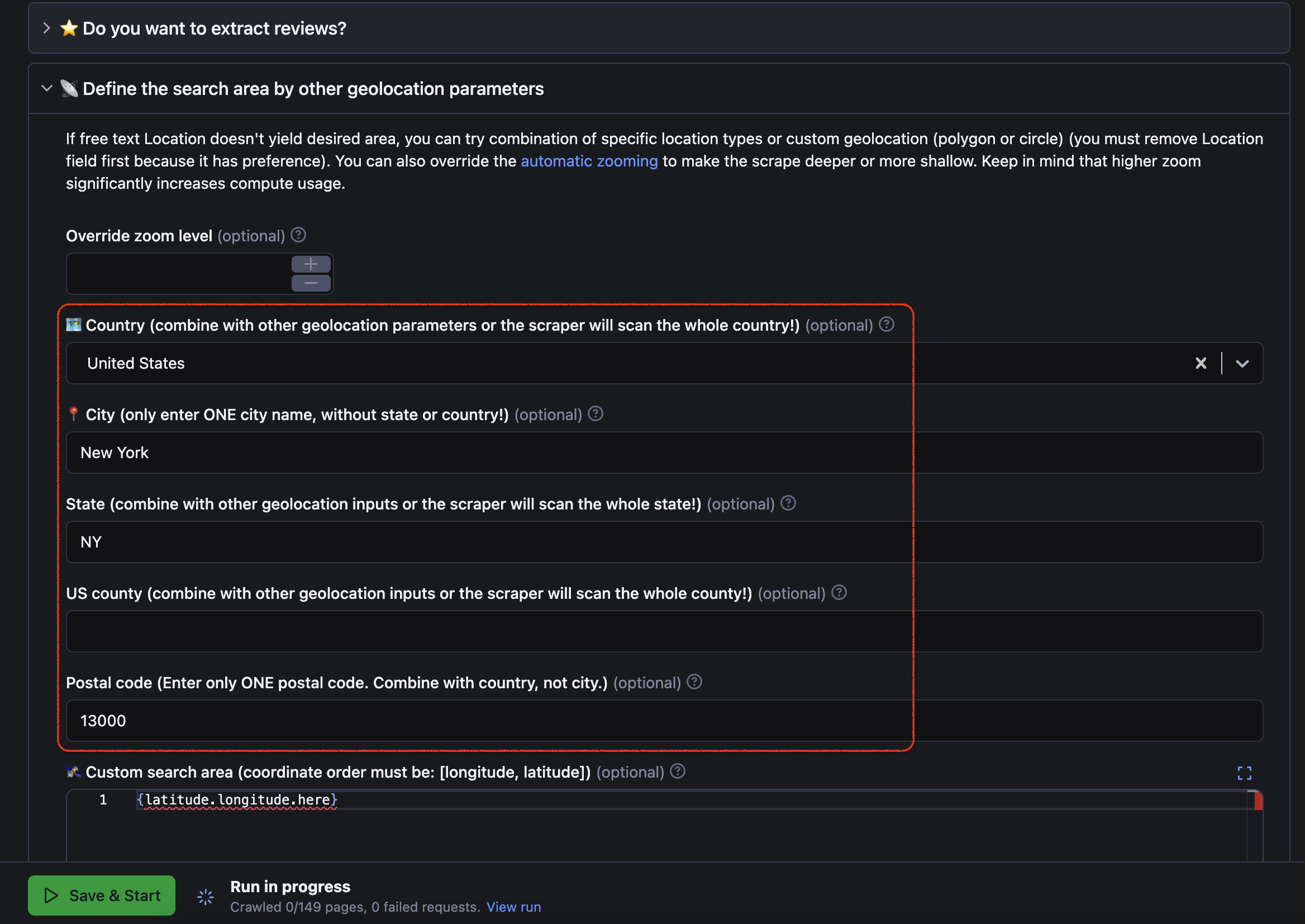

Alternatively, you can make your search even more granular in the 📡 Define the search area section, either by typing the rest of the address (state, US county, postal code) or by inserting the latitude and longitude coordinates of your area of interest. More detailed guide here 🛰.
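If you would rather prepare the same search as JSON (for example, to reuse it later through the API), a minimal Python sketch could look like the one below. The field names `searchStringsArray`, `locationQuery`, `maxCrawledPlacesPerSearch`, and `language` are assumptions made for illustration and are not spelled out in this guide, so check them against the scraper's input tab before relying on them. The helper name `build_search_input` is hypothetical too.

```python
import json


def build_search_input(search_terms, location, max_places_per_search=50, language="en"):
    """Return a dict that mirrors the search term + location form fields."""
    terms = [t.strip() for t in search_terms if t and t.strip()]
    if not terms:
        raise ValueError("at least one search term is required")
    if not location or not location.strip():
        raise ValueError("a location is required")
    return {
        "searchStringsArray": terms,                         # assumed field name
        "locationQuery": location.strip(),                   # assumed field name
        "maxCrawledPlacesPerSearch": max_places_per_search,  # assumed field name
        "language": language,                                # assumed field name
    }


# The bagel -> New York example from this step.
run_input = build_search_input(["bagel"], "New York, USA")
print(json.dumps(run_input, indent=2))
```

Validating the input before a run is a small design choice that saves you from starting a scrape with an empty search term or location.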

🔗 🗺 How to scrape Google Maps by URL?

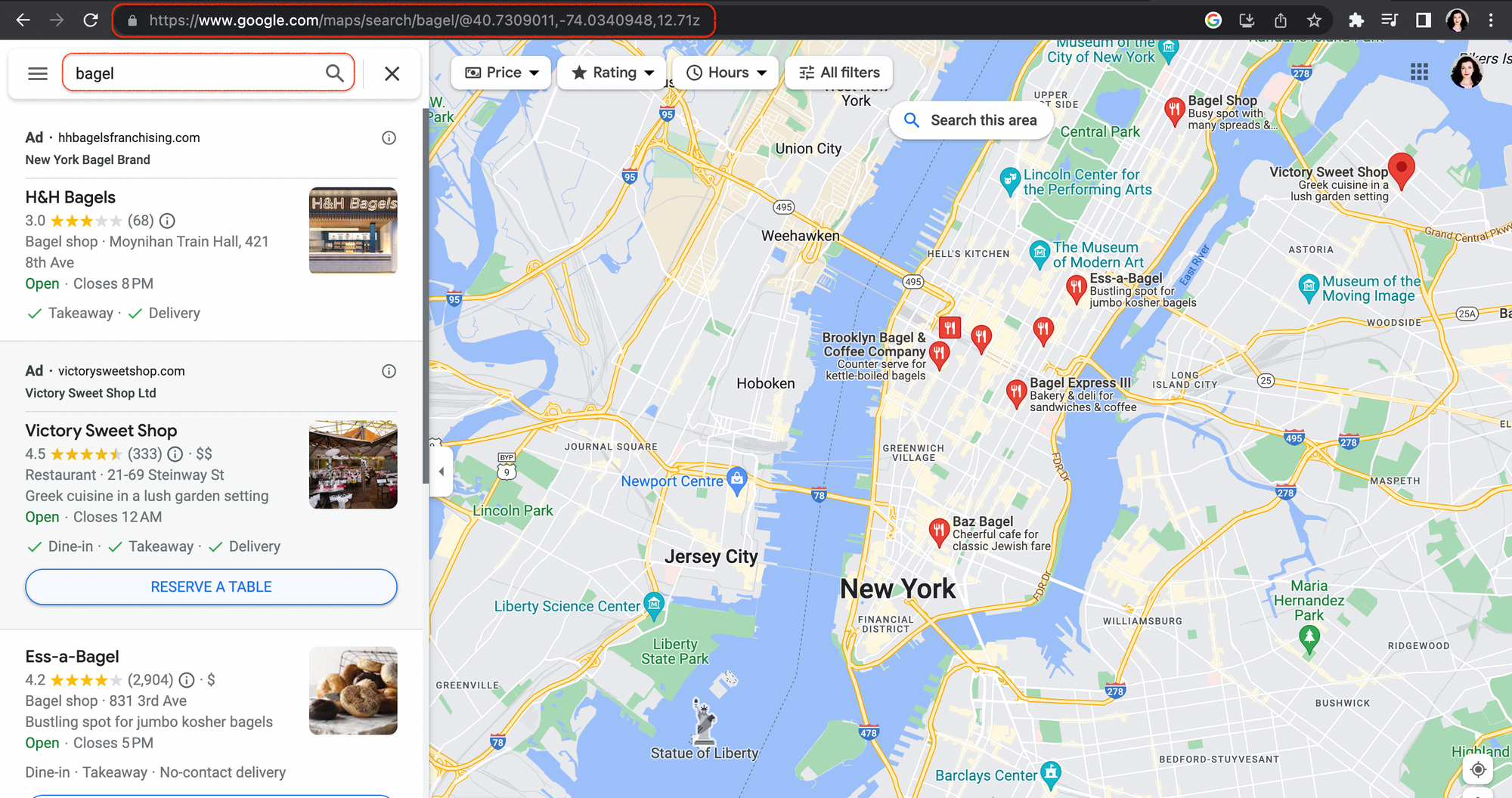

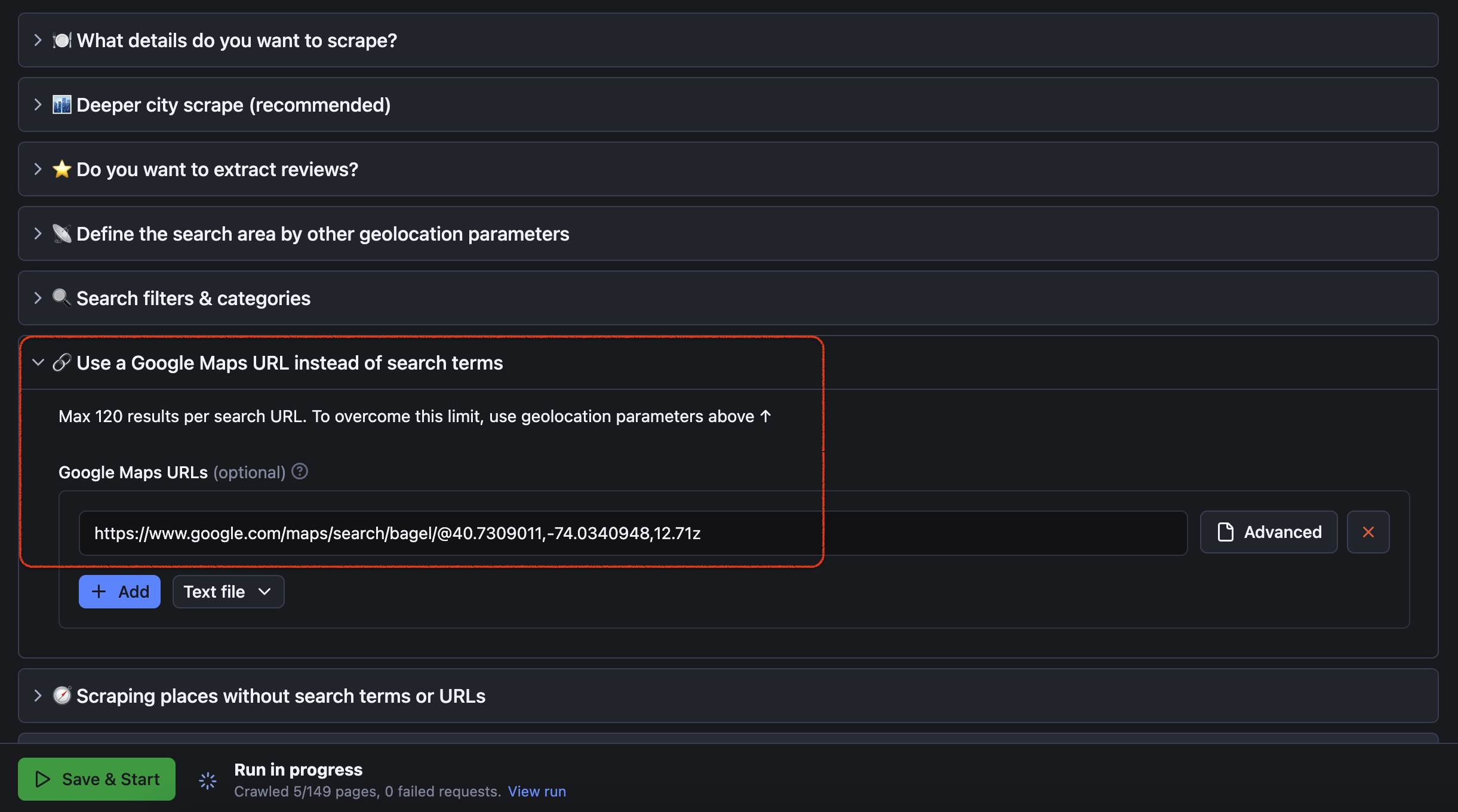

Open the Google Maps website in a separate tab, search for New York -> bagel and, once Google Maps provides you with results, copy the URL and paste it into the respective field.


With this option, you can also adjust the zoom level of the area to scrape, before you copy-paste the URL. If you zoom in, you will get more detailed results, including smaller businesses. Otherwise, you will obtain what Google Maps will consider the most relevant places over a larger area. More detailed guide here 🔎.
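To double-check which area and zoom level a copied URL actually points to, you can read them back from the URL itself. The sketch below assumes the common `@<latitude>,<longitude>,<zoom>z` segment that Google Maps puts into its URLs; the example URL and the helper name `parse_viewport` are made up for illustration, and URLs without that segment simply return `None`.

```python
import re

# Google Maps URLs usually carry the viewport as "@<lat>,<lng>,<zoom>z".
VIEWPORT_RE = re.compile(r"@(-?\d+(?:\.\d+)?),(-?\d+(?:\.\d+)?),(\d+(?:\.\d+)?)z")


def parse_viewport(url):
    """Return (lat, lng, zoom) from a Google Maps URL, or None if absent."""
    match = VIEWPORT_RE.search(url)
    if match is None:
        return None
    lat, lng, zoom = (float(group) for group in match.groups())
    return lat, lng, zoom


# Illustrative URL, not taken from the screenshots in this guide.
url = "https://www.google.com/maps/search/bagel/@40.7127,-74.0059,13z"
print(parse_viewport(url))
```

A higher zoom number means a smaller area, so comparing the zoom value before and after zooming in is a quick way to confirm you copied the URL you meant to.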

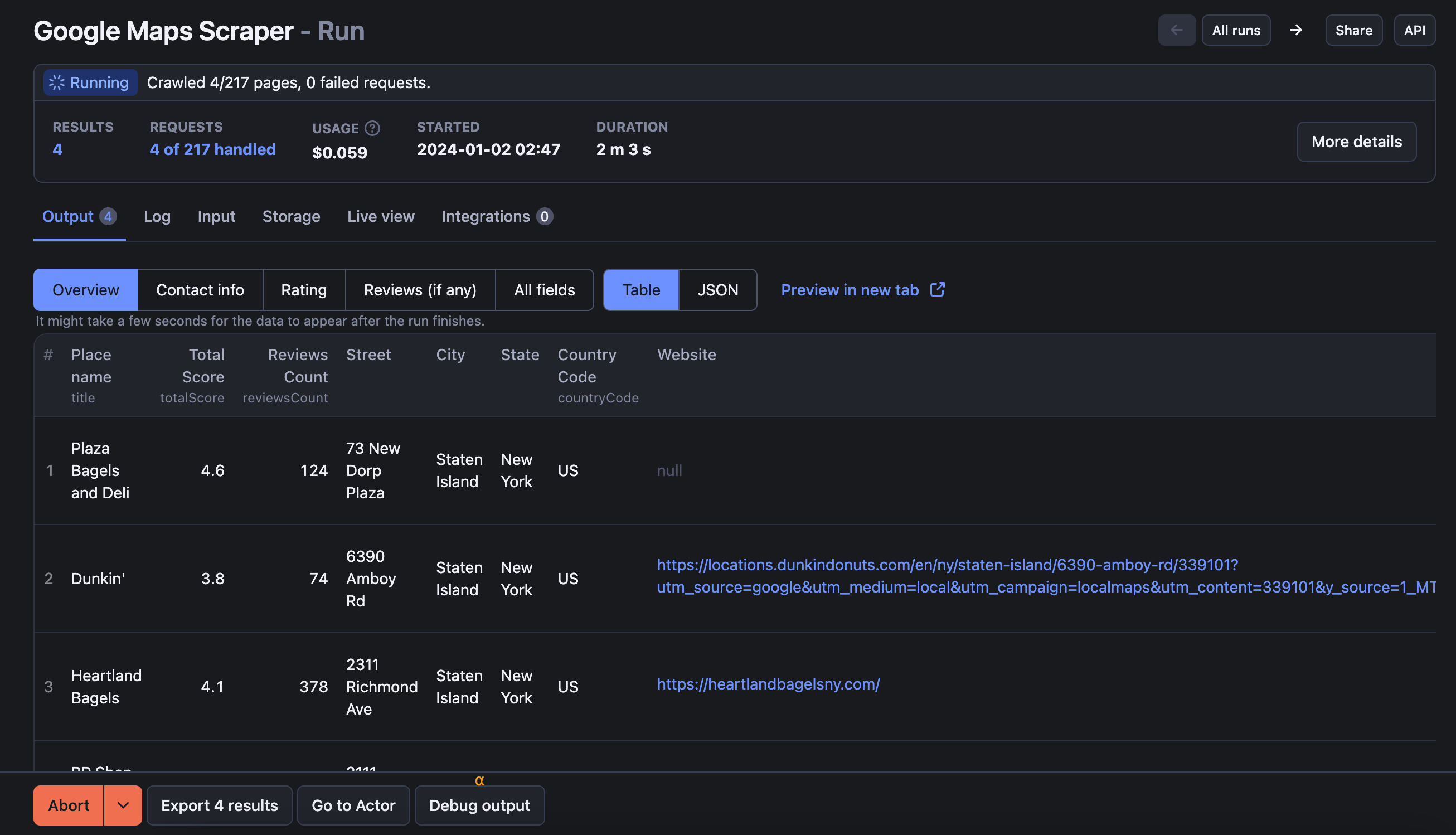

Step 3. Click Start ▶️

Once you are all set, click the Start button. Your task's status will change to Running 🏃‍♂️, so wait for the scraper to finish. It might take a few minutes before you see the status switch to Succeeded 🏁; this depends on the complexity of your search and how many places you've indicated.

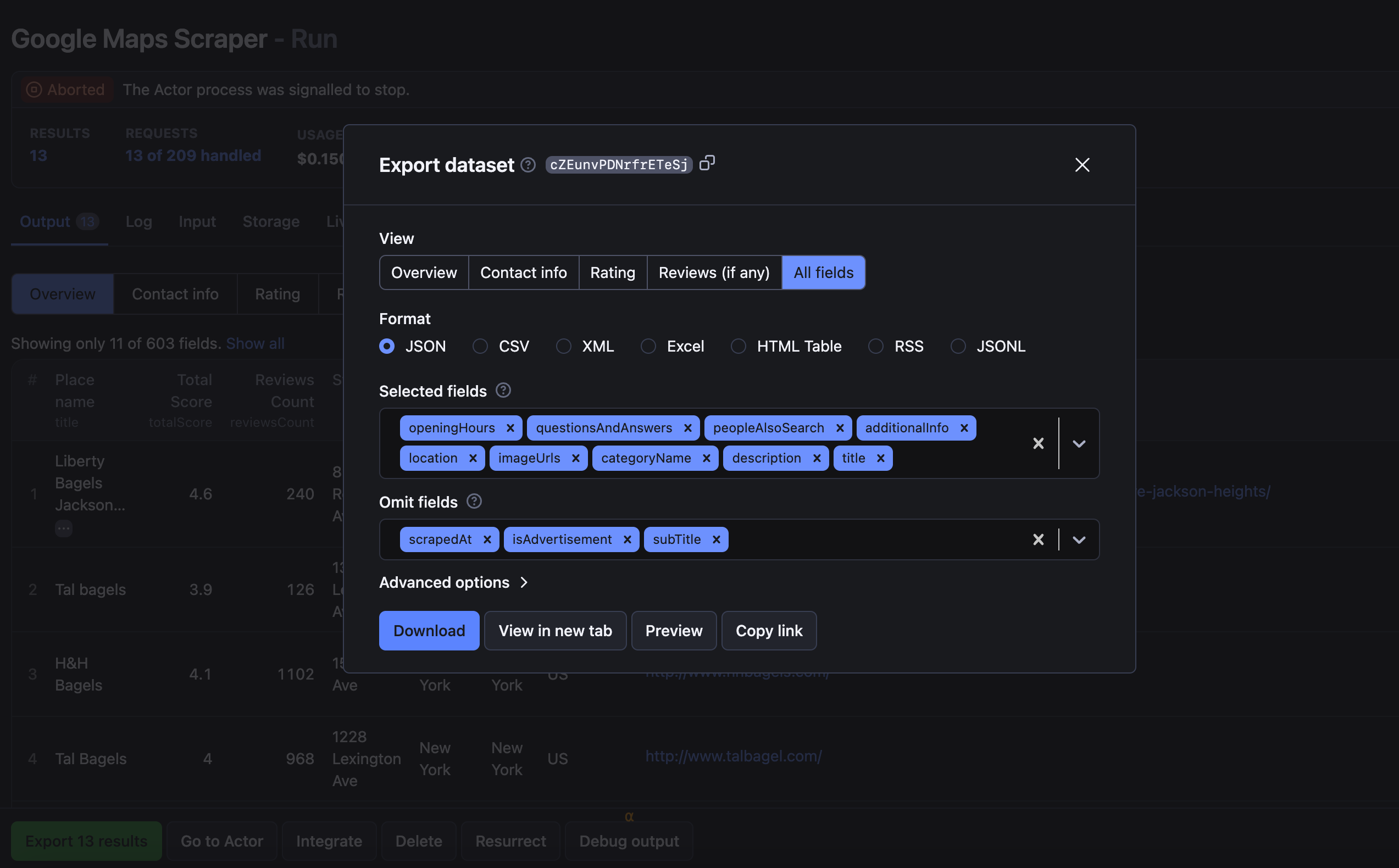

Step 4. Download data from Google Maps

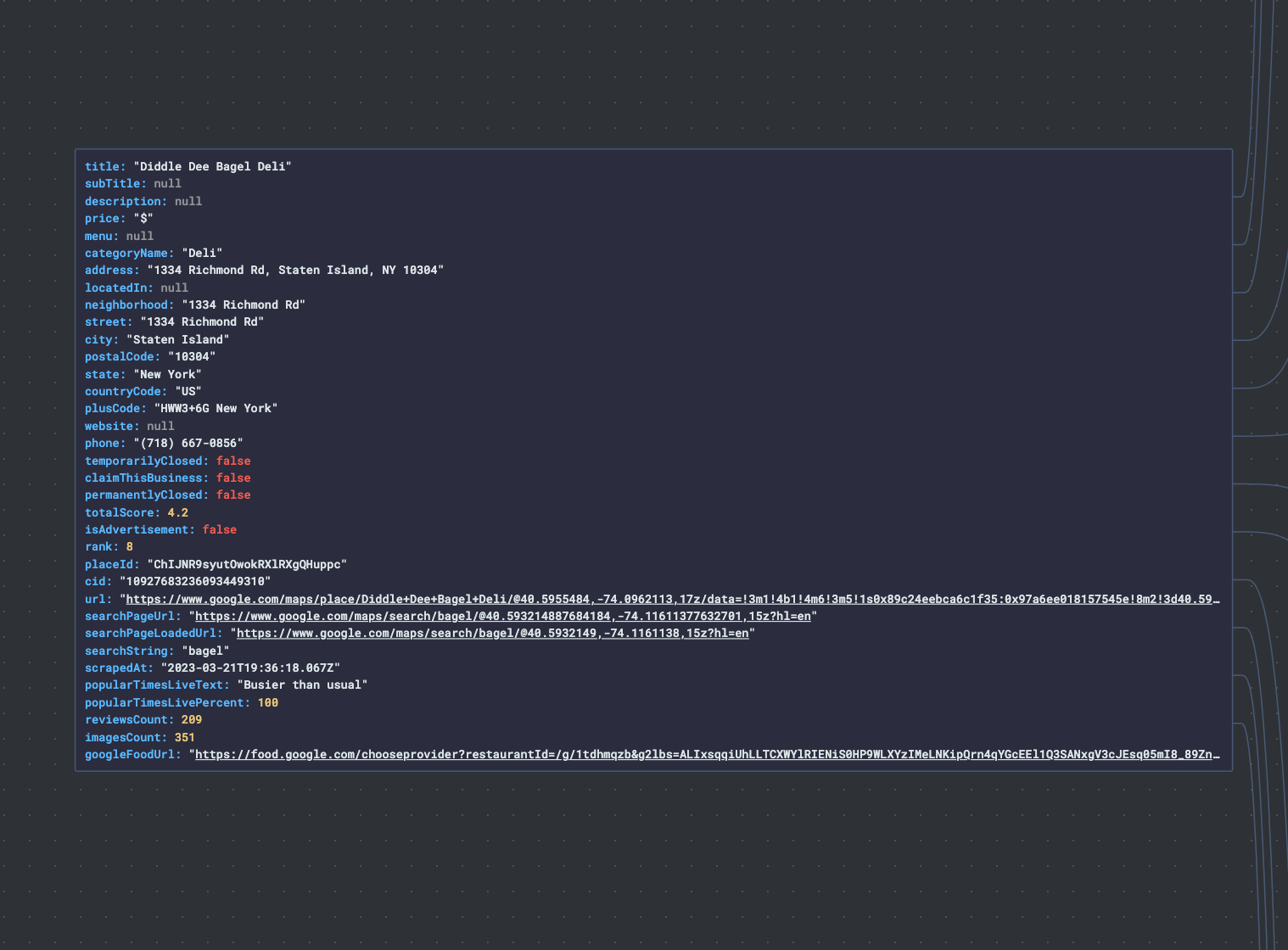

To preview and download the dataset, go to the Storage tab or click the Export X results button. The dataset is available in various formats, including HTML table, JSON, CSV, Excel, XML, and RSS feed. Besides the format, you can also choose which part of the dataset to download (all data, rating, contact info, or reviews) and which specific fields to keep or leave out.
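The same export can be scripted. Below is a minimal sketch that builds a download URL for the Apify API's dataset items endpoint, using its `format` and `fields` query parameters. The dataset ID placeholder and the field names `title`, `address`, and `phone` are assumptions for illustration; use the ID shown in your Storage tab and the field names from your own results. Private datasets also need an API token, which this sketch leaves out.

```python
from urllib.parse import urlencode

# Formats mentioned in this step, written the way the API expects them.
SUPPORTED_FORMATS = {"json", "csv", "xlsx", "html", "xml", "rss"}


def dataset_export_url(dataset_id, fmt="csv", fields=None):
    """Build a dataset items URL for the given format and optional fields."""
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    params = {"format": fmt}
    if fields:
        params["fields"] = ",".join(fields)  # keep only these fields
    return f"https://api.apify.com/v2/datasets/{dataset_id}/items?{urlencode(params)}"


# "DATASET_ID" is a placeholder; the field names are hypothetical.
export_url = dataset_export_url("DATASET_ID", "csv", ["title", "address", "phone"])
print(export_url)
```

Opening the printed URL in a browser, or fetching it from your own code, should give you the same file as the Export button, limited to the fields you listed.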

Part of the Google Maps dataset - basic info about the restaurant

Finally, if this particular scraper seems too advanced for your needs, have no fear. There is also Fast Google Maps Scraper. It has an easier interface and is great for quickly scraping Google Maps.

🦄 Choosing the best tool for Google Maps scraping

Scraping the Google Maps website can serve many different purposes, which is why we've developed several Google Maps scrapers to get the job done. They are all equally powerful but suited to different use cases. Explore this table to find the best fit for your needs:

| | 📍 Google Maps Scraper | 🏎 Google Maps Data Extractor | 💼 Google Maps Business Scraper |
|---|---|---|---|
| Scrape by: Location 📍 + search term 🔍 | ✅ | ✅ | ❌ |
| Scrape by: Location 📍 + Google Maps category 🏛 | ✅ | ✅ | ❌ |
| Scrape by: Google Maps search URLs 🔗 | ✅ | ✅ | ❌ |
| Scrape by: Google place URLs 🔗 or placeIDs 🆔 | ✅ | ❌ | ✅ |
| Define location: by city, country, state, US county, or postal code | ✅ | ✅ | ❌ |
| Define geolocation and coordinates (polygons, multipolygons, circles) | ✅ | ✅ | ❌ |
| Scrape all places from the area, no search term needed | ✅ | ❌ | ❌ |
| Speed | 🚙 Slower | 🏎💨 Extremely fast | 🏎 Very fast |
| Extracts place details: contact details, prices, opening hours, rating, etc. | ✅ Full list here | ✅ Full list here | ✅ Full list here |
| Extracts images | ✅ | ❌ | ❌ |
| Extracts reviews | ✅ | ❌ | ❌ |
| Extracts reviewsDistribution | ✅ | ❌ | ✅ |
| Extracts web results, order by info, Q&A and updates | ✅ | ❌ | ❌ |
| Output formats | Excel, CSV, JSON, HTML, XML | Excel, CSV, JSON, HTML, XML | Excel, CSV, JSON, HTML, XML |
| API, webhooks and integrations | ✅ | ✅ | ✅ |
| Language options for search | ✅ | ✅ | ✅ |
| Visualization of scraped results on a map | ✅ | ✅ | ✅ |
| Pricing for 1,000 results | ⚠️ depends on complexity of your search | $12 for 1,000 places | $4 for 1,000 places |
| Free results per month with Free plan | approx. 2,000 results | 400 results | 1,250 results |


❓ FAQ

🧑‍⚖️ Is it legal to extract data from Google Maps?

Anybody can get information from publicly available websites - web scraping is just a faster and more efficient way to get the job done. It is therefore legal, as long as you respect personal data and copyright laws. To learn more about the legality of web scraping, read our blog post on the subject.

⭐️ Is it legal to scrape Google Maps reviews?

Yes, but with caveats. Public reviews posted on Google Maps often contain personal information such as a name, profile image, and review ID. Combined, that information could be used to identify the reviewer, and personal data of this kind is protected by GDPR in the EU and by other laws around the world, for good reason.

You should only scrape personal data if you have a legitimate reason to do so, and you should also factor in Google's Terms of Use. We are aware of that and built our Google Reviews Scraper so that it can avoid extracting personal data. You can read the basics of ethical web scraping in our expert piece on the legality of web scraping.

💳 Is Google Maps API free?

No. Google Places APIs used to have a free tier, but in 2018, Google introduced a new billing model that reduced the number of free API calls available. After the free credits are used up, costs can rise quickly, especially if you use certain features like Embed API or Autocomplete.

📇 Can I scrape Google Place IDs?

Yes. Google Maps Scraper 🔗 extracts Google Place IDs, among other things like place name, address, contact details like website and phone number, reviews, opening hours, popular times, open/closed status, menu, and prices. If you want to scrape Google Maps using place ID as an input, you should try 💼 Google Maps Business Scraper.

⛽️ Can I scrape gas prices from Google Maps?

Yes. Any data that you can see on the Google Maps website you can also extract. That data includes gas prices, gas type, gas station location and details, price update time, etc. Check out our tutorial on how you can use a Gas Prices Scraper to that end.

🎯 Need more Google scraping tools?

If you have a specific scraping case for Google data extraction (or Google API), check out these simple scrapers. They're designed to handle Google scraping and data extraction from Google Trends, News, Lens, Images, and even Google Search. Take a peek and see if any of them fit the bill.

✉️ How do I extract emails from Google Maps?

You can use a web scraper tool created specifically to extract contact details from Google Business Profile (formerly Google My Business). Google Maps Email Extractor will get you G Maps business details such as place name, location, website, and phone number. You can also get info on rating, number of reviews, popular times, category, ads if available, opening hours, and URLs. It can also include detailed reviews of every place as well as their translations.

👷‍♀️ Can I build my own Google Maps data scraper?

If you want to make a map scraper of your own, you can do it, but these days you don't have to start from scratch. Start off with a simple web scraping template in Python, JavaScript, or TypeScript. You can host your scraper on the Apify platform, which will cover proxies, monitoring, and scheduling.

🛡 Do I need proxies to scrape Google Maps?

It's preferable to use proxies when scraping a website as huge as Google Maps, because that way it's faster and more efficient. As a result, if you get serious about scraping Maps, it will eventually start costing you at least a little cash. In the meantime, you can get one month of Apify Proxy free as soon as you sign up at Apify.

🤖 Can I use AI to scrape Google Maps?

AI is currently unable to scrape websites directly, but it can help generate code for scraping Google Maps if you prompt it with the target elements you want to scrape. Note that the code may not be functional, and changes to the website's structure and design may affect the targeted elements and attributes.

📲 Can I integrate data from Google Maps with my app?

Yes, though the data has to be in a machine-readable format. The Apify platform stores data in universal formats such as JSON, XML, or CSV, which makes it easy to plug extracted Google Maps data into other apps. The platform also integrates with tools such as Zapier, Make, Airbyte, Keboola, and others. To make this happen, all you need to know is the dataset's ID.

🙋‍♂️ How do I scrape my location on Google Maps?

You can get G Maps data from the exact location by indicating coordinates or postal address, including your current location. Here's how to do it in 4 steps with Google Maps Scraper:

- Head over to the 🛰 Define the search area section and indicate your postal details (country, state, city, zip code).

- Alternatively, indicate your exact coordinates (longitude and latitude).

- Begin data collection by clicking 'Start'.

- Download your Google Maps data in CSV format.

✋ How to scrape data from Google Maps without getting blocked?

The best way is to use proxies. The vast majority of websites use anti-bot protections of varying caliber to fend off bots. That's understandable: traffic that comes from real visitors is more valuable than bot traffic (even if the bot comes at the command of a real human). To avoid getting blocked while scraping Google Maps, or any other website for that matter, you should first try using datacenter or residential proxies along with your web scraper.

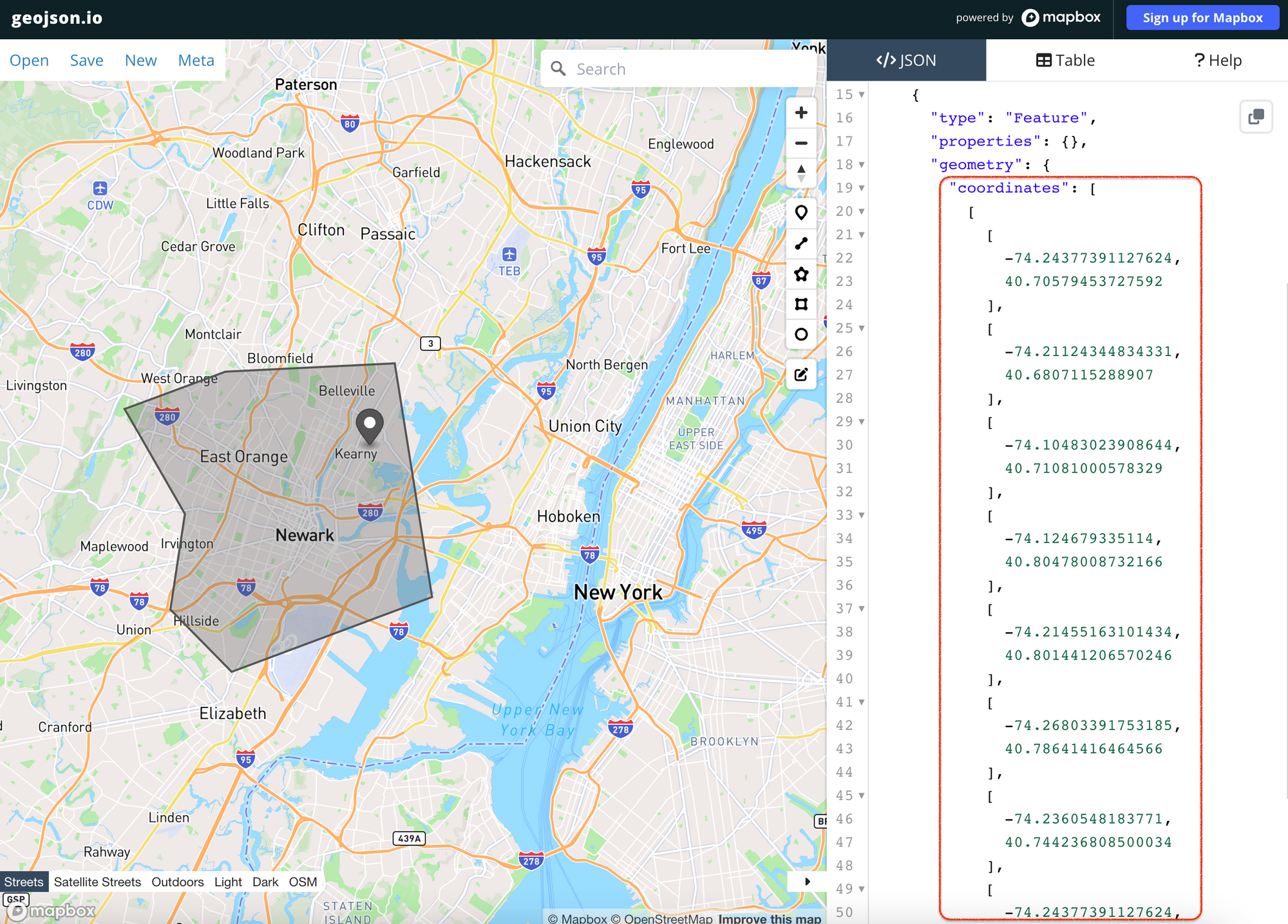

🛰 Can I scrape Google Maps by geolocation?

Yes. Extracting data by geolocation can be very handy because you can create various irregular shapes for specific areas on the map. We recommend using Geojson.io to define the coordinates easily and following our guide to see how to apply those coordinates in Google Maps Scraper.
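If you want to see what such a shape looks like under the hood, here is a minimal Python sketch that builds a GeoJSON polygon by hand. It relies on two GeoJSON conventions: coordinates are written as longitude first, then latitude, and a polygon ring must end on the same point it starts with. The corner values are a rough, made-up box used only for illustration, and the helper name `make_polygon` is hypothetical; how the result is pasted into the scraper is covered by the guide mentioned above.

```python
import json


def make_polygon(corners):
    """Build a GeoJSON Polygon from (longitude, latitude) corner pairs."""
    if len(corners) < 3:
        raise ValueError("a polygon needs at least three corners")
    ring = [[float(lng), float(lat)] for lng, lat in corners]
    for lng, lat in ring:
        if not (-180 <= lng <= 180 and -90 <= lat <= 90):
            raise ValueError(f"coordinate out of range: {(lng, lat)}")
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))  # GeoJSON rings must end where they start
    return {"type": "Polygon", "coordinates": [ring]}


# Rough, illustrative box (made-up corner values, longitude first).
area = make_polygon([(-74.02, 40.70), (-73.97, 40.70), (-73.97, 40.74), (-74.02, 40.74)])
print(json.dumps(area))
```

Swapping latitude and longitude is the most common mistake with hand-written coordinates, which is why the range check is there.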

📍 How to use data extracted from Google Maps

These days, without a digital presence on Google Maps, a local business can count itself as pretty much invisible. That's why Google Maps is a colossal free data repository for businesses and organizations, which makes it a goldmine for extracting all kinds of invaluable data: from reviews, geolocation, and gas prices to contact data such as emails, phone numbers, addresses, and websites of companies in the vicinity. Here are just a couple of examples of how you can use this extracted data:

☎️ Generate leads. By scraping Google Maps, you can build your own database of emails, phone numbers, reviews, ratings, and businesses for a targeted place, industry, or country.

🧸 Monitor brand sentiment. You can analyze collected Google Maps reviews for positive/negative sentiment, quality of service, and specific phrases. Our Google Maps Reviews Scraper focuses specifically on Google Maps reviews, including reviewer name, reviewer ID & URL, reviewer number of reviews, and Is Local Guide parameter.

🧑‍💻 Create a potential customer base. Websites and contact information are scattered all over the internet, but trying to find them takes a lot of time. Google Maps is one place where many of your prospects are gathered together.

👁 Search, monitor, and analyze the competitors' offers. Scraping Google Maps reviews of competitors gives you a useful benchmark for your own business and can help you provide your clients with a better marketing service.

🗺 Analyze geospatial data for scientific or engineering work, for instance when working with satellite data and geolocation or analyzing territorial changes before and after an event, as has been thoroughly done in this Bellingcat study.

⛽️ Get gas prices. Google Maps also provides an opportunity to get recent gas prices from the area, along with details on fuel type, supplier, and more. You can use Gas Stations Scraper to that end.

🕵️ Carry out a product hunt. Find businesses offering the product you're searching for and choose the best option out of the pool of results.

📀 Benefit from big data, even if you're a small player. Find opportunities for expanding your business or organization and developing a working market strategy.
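As a small illustration of the brand sentiment use case above, the Python sketch below summarizes a handful of review records into an average rating and a star distribution. The record shape (a `stars` number and a `text` string per review) is an assumption for illustration, and the sample reviews are made up; real records come from your exported dataset and may use different field names.

```python
from collections import Counter


def summarize_reviews(reviews):
    """Return the count, average stars, and 1-5 star distribution."""
    stars = [r["stars"] for r in reviews if isinstance(r.get("stars"), (int, float))]
    if not stars:
        return {"count": 0, "average": None, "distribution": {}}
    counts = Counter(int(s) for s in stars)
    return {
        "count": len(stars),
        "average": round(sum(stars) / len(stars), 2),
        "distribution": {n: counts.get(n, 0) for n in range(1, 6)},
    }


# Made-up sample records; real items come from the exported dataset.
sample = [
    {"stars": 5, "text": "Best bagels in town"},
    {"stars": 4, "text": "Good, but a long queue"},
    {"stars": 1, "text": "Closed when I arrived"},
    {"stars": None, "text": "No rating left"},
]
summary = summarize_reviews(sample)
print(summary)
```

Reviews without a rating are skipped instead of being counted as zero, so a few missing values do not drag the average down.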

There are many other ways to use that Google Maps data. For example, custom mapping solutions might be made out of it. With that, your business will not just exist; it'll stand out on digital maps and provide tailored experiences for your customers.

However, to create a whole database, you may need to automate the process of data collection. One way to do that is via the official Google Maps API, but it won't be easy, as you know by now. You can always opt for a different Google Maps data extraction tool that provides a full range of results, such as this free Google Maps Scraper or other Google scraping tools.