Apify recently decided to develop an enterprise support assistant to answer common customer questions, which typically concern the best Apify Actors for specific tasks. We chose the newly introduced OpenAI Assistants because of their versatility in retrieving knowledge from files and executing custom functions. However, we found that updating an assistant’s knowledge base by hand is cumbersome and error-prone. The OpenAI and Apify integration makes this process straightforward and efficient.

About the Apify enterprise support assistant

Apify is constantly exploring more efficient ways to assist our customers and users in solving their problems. With the advent of custom ChatGPTs, we introduced Apify Adviser GPT to handle general inquiries about the Apify platform and Actors and to help users apply these tools to a variety of tasks (not just web scraping).

However, there are two significant challenges with custom GPTs. First, accessing them requires a paid version of ChatGPT. Second, as our platform and its Actors evolve, the assistant must continuously update its knowledge from apify.com, which requires manual effort. To address these issues, we decided to utilize Apify's scraping capabilities together with the OpenAI Assistant. We employed Website Content Crawler to crawl apify.com and upload the data directly to the OpenAI Assistant vector store. Thanks to Apify's crawling capabilities and integration with the OpenAI vector store, setting up and running this process is quite straightforward.

OpenAI Assistant V2

OpenAI Assistants received a major update in April 2024. The assistants are more adaptable because they now integrate several tools, including code interpreter, file search, and function calling. Furthermore, you can control the assistant's behavior using parameters such as temperature (to manage randomness), top-p (to control diversity), and tool choice (to specify which tool the assistant should use, like file search). With these parameters, you can create robust applications such as coding support for developers, technical documentation search, and enterprise knowledge management.
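As a rough sketch of how these parameters fit together, the helper below collects them into keyword arguments for a run. The function name `build_run_options` and its `force_file_search` flag are hypothetical; `temperature`, `top_p`, and `tool_choice` are the run parameters described above, and the ranges checked here (0 to 2 and 0 to 1) are assumed from OpenAI's documentation at the time of writing.

```python
from typing import Any, Dict, Optional


def build_run_options(
    temperature: Optional[float] = None,
    top_p: Optional[float] = None,
    force_file_search: bool = False,
) -> Dict[str, Any]:
    """Collect optional run settings into a dict of keyword arguments."""
    options: Dict[str, Any] = {}
    if temperature is not None:
        # Assumed valid range: 0 (most deterministic) to 2 (most random)
        if not 0.0 <= temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        options["temperature"] = temperature
    if top_p is not None:
        # Assumed valid range: 0 to 1 (share of probability mass considered)
        if not 0.0 <= top_p <= 1.0:
            raise ValueError("top_p must be between 0 and 1")
        options["top_p"] = top_p
    if force_file_search:
        # Ask the assistant to use the file search tool for this run
        options["tool_choice"] = {"type": "file_search"}
    return options


# Hypothetical usage with an existing client, thread, and assistant:
# run = client.beta.threads.runs.create_and_poll(
#     thread_id=thread.id,
#     assistant_id=assistant.id,
#     **build_run_options(temperature=0.2, force_file_search=True),
# )
```

Keeping the settings in one place makes it easier to try different values without touching the code that creates the run.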

How does the OpenAI Assistant work?

OpenAI Assistants are built on several core components:

- Large language models: including GPT-4, GPT-4 Turbo, GPT-4 32k, and GPT-3.5 Turbo, among others

- Instructions: define the assistant’s persona, capabilities, and task-specific guidance

- Tools: code interpreter to write and run Python code, file search for knowledge base retrieval, and function calling for interaction with the external world

- Vector store: acts as a knowledge base for the assistant

The main objects involved in running the assistant:

- Thread: Session between an assistant and end-user (contains messages)

- Message: Created either by the assistant or the user (may include text, images, or files)

- Run: Calls the assistant on a thread, and appends new messages to the thread

How to create an Assistant (API) with vector store

You can easily create an Assistant and a vector store on the OpenAI platform, or you can use the following steps in Python.

First, let’s install the OpenAI package:

pip install openai

Then import the package, specify your OpenAI API token, and create your assistant:

from openai import OpenAI

client = OpenAI(api_key="YOUR API KEY")

my_assistant = client.beta.assistants.create(
    instructions="As a customer support agent at Apify, your role is to assist customers with their web data extraction or automation needs.",
    name="Sales Advisor",
    tools=[{"type": "file_search"}],
    model="gpt-3.5-turbo",
)

print(my_assistant)

The output is the ID and description of your assistant:

Assistant(id='asst_DHTjx7Ij7K6jYdkfyClnIhgj', created_at=1714390792, description=None, instructions='As a customer support agent at Apify … ')

Next, create a vector store, upload a file, and add it to the vector store. Let's assume you already have the necessary data from a website on hand; we'll cover how to update files automatically later.

# Create a vector store
vector_store = client.beta.vector_stores.create(name="Sales Advisor")

# Create a file
file = client.files.create(file=open("dataset_apify-web.json", "rb"), purpose="assistants")

# Add the file to vector store
vs_file = client.beta.vector_stores.files.create_and_poll(
    vector_store_id=vector_store.id,
    file_id=file.id,
)

print(vs_file)

The ID of the newly created file with the completed status is:

VectorStoreFile(id='file-toBbgLcoQNXNxPzb6RStRBpf', created_at=1714391086, object='vector_store.file', status='completed')
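Polling can also end with a status other than completed, so it may be worth checking the result before pointing the assistant at the vector store. The sketch below is one way to do that; `ensure_indexed` is a hypothetical helper, and it assumes the returned object exposes `id`, `status`, and an optional `last_error` with a `message`, as the Python SDK did at the time of writing.

```python
from types import SimpleNamespace


def ensure_indexed(vs_file) -> str:
    """Return the file ID if indexing completed, otherwise raise an error."""
    if vs_file.status == "completed":
        return vs_file.id
    # `last_error` may be missing or None, e.g. when the file was cancelled
    error = getattr(vs_file, "last_error", None)
    detail = getattr(error, "message", None) or "no error details available"
    raise RuntimeError(f"File {vs_file.id} has status '{vs_file.status}': {detail}")


# Stand-in object for illustration only; in practice, pass the `vs_file`
# returned by `create_and_poll`.
example = SimpleNamespace(id="file-123", status="completed", last_error=None)
print(ensure_indexed(example))  # file-123
```

Failing loudly here avoids an assistant that silently answers from an empty or partial knowledge base.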

Update the assistant to use the new vector store:

# Update the assistant to use the new vector store
assistant = client.beta.assistants.update(
    assistant_id=my_assistant.id,
    tool_resources={"file_search": {"vector_store_ids": [vector_store.id]}},
)

Now, you can ask your assistant about Apify, for example, "How can I scrape a website using Apify?"

# Create a thread and a message
thread = client.beta.threads.create()

message = client.beta.threads.messages.create(
    thread_id=thread.id, role="user", content="How can I scrape a website?"
)

# Run thread with the assistant and poll for the results
run = client.beta.threads.runs.create_and_poll(
    thread_id=thread.id,
    assistant_id=assistant.id,
    tool_choice={"type": "file_search"},
)

for m in client.beta.threads.messages.list(thread_id=run.thread_id):
    print(m)

With the following output in reverse order (newest message first):

- To scrape a website you can use Apify's Website Content Crawler …

- How can I scrape a website?

That’s it! In just a few steps, we've created a powerful enterprise assistant capable of answering questions about the Apify platform and its Actors. Now, it's also essential to keep the assistant's knowledge base up to date, so let's look at that process.
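One practical note on the loop above: it prints whole message objects rather than just the reply. A minimal sketch for pulling out the answer text follows. The helper name `latest_assistant_text` is hypothetical, and the code assumes each message has a `role` and a `content` list whose text blocks expose `type == "text"` and `text.value`, which matches the Python SDK at the time of writing.

```python
from typing import Iterable, Optional


def latest_assistant_text(messages: Iterable) -> Optional[str]:
    """Return the text of the first assistant message in a newest-first list."""
    for message in messages:
        if message.role != "assistant":
            continue
        # A message can mix text with other content such as images; keep only text
        parts = [block.text.value for block in message.content if block.type == "text"]
        if parts:
            return "\n".join(parts)
    return None


# Hypothetical usage with the thread from the example above:
# answer = latest_assistant_text(
#     client.beta.threads.messages.list(thread_id=run.thread_id)
# )
```

Because the list is returned newest first, the first assistant message encountered is the most recent reply.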

Updating the OpenAI vector store using Apify Actors

A simple approach would be to update the assistant's knowledge manually, but manually maintained knowledge quickly becomes outdated. Instead, we can use the Apify platform and one of the 2,000+ Apify Actors on Apify Store to update the OpenAI vector store. The first step involves setting up an Actor for OpenAI vector store integration.

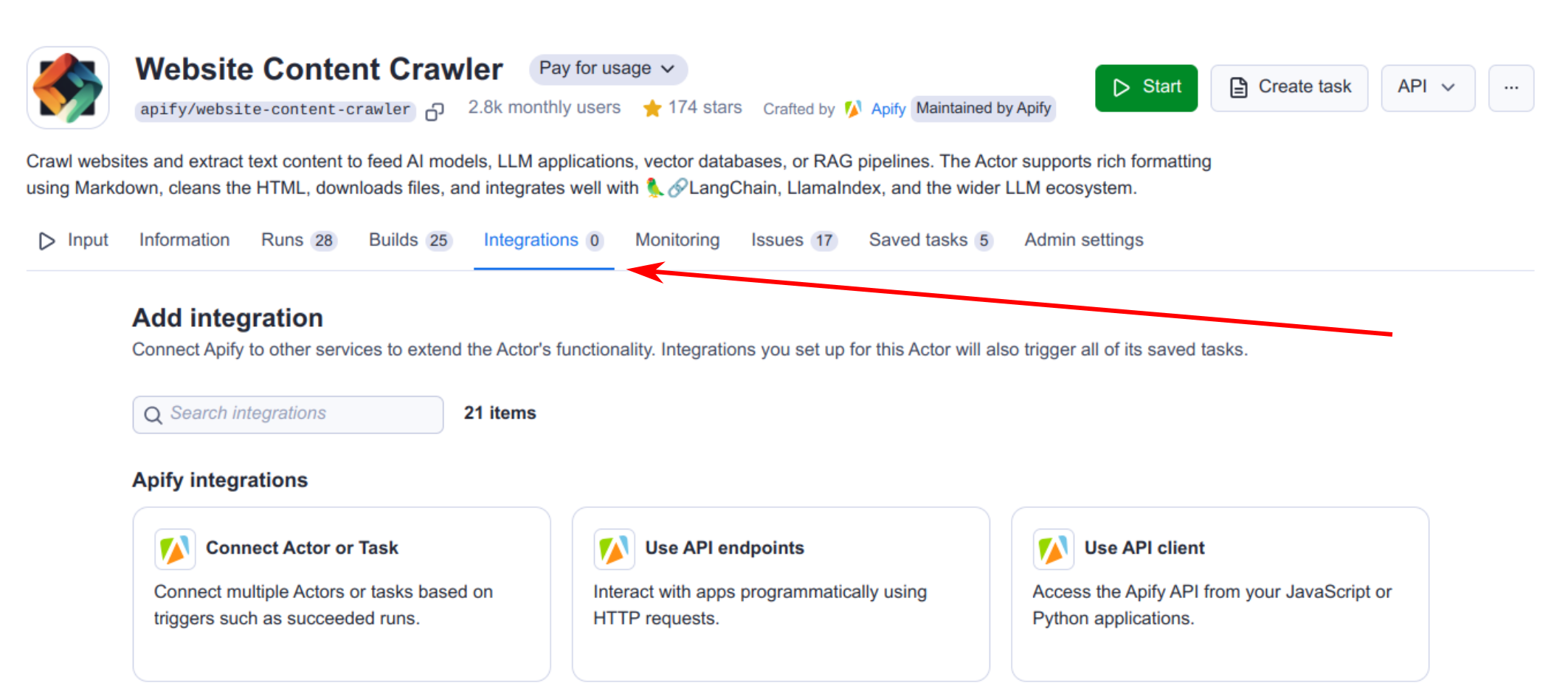

We will use Website Content Crawler. Refer to this detailed guide to setting up the crawler for your project. The best approach is to create a Task that can be scheduled to ensure periodic updates.
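For readers who prefer code to the Apify Console, the same crawl can be sketched with the `apify-client` Python package. This is an illustration under assumptions, not the setup used in this article: `crawl_to_json` is a hypothetical helper, the `startUrls` and `maxCrawlPages` input fields are assumed from the Website Content Crawler input schema, and the client object is passed in so the function itself does not depend on a particular token or installation.

```python
import json
from typing import Any, Dict, List


def crawl_to_json(apify_client: Any, start_url: str, output_path: str, max_pages: int = 100) -> int:
    """Run Website Content Crawler and save its dataset items as a JSON file."""
    run_input: Dict[str, Any] = {
        "startUrls": [{"url": start_url}],
        "maxCrawlPages": max_pages,
    }
    # Start the Actor and wait for the run to finish
    run = apify_client.actor("apify/website-content-crawler").call(run_input=run_input)
    if run is None:
        raise RuntimeError("The Actor run did not return any run details")
    # Read the crawled pages from the run's default dataset
    dataset = apify_client.dataset(run["defaultDatasetId"])
    items: List[Dict[str, Any]] = list(dataset.iterate_items())
    with open(output_path, "w", encoding="utf-8") as f:
        json.dump(items, f, ensure_ascii=False)
    return len(items)


# Hypothetical usage (requires `pip install apify-client` and an Apify API token):
# from apify_client import ApifyClient
# crawl_to_json(ApifyClient("YOUR APIFY TOKEN"), "https://apify.com", "dataset_apify-web.json")
```

The resulting file can be uploaded with `client.files.create` as in the earlier example, although the integration described next removes the need for that manual step.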

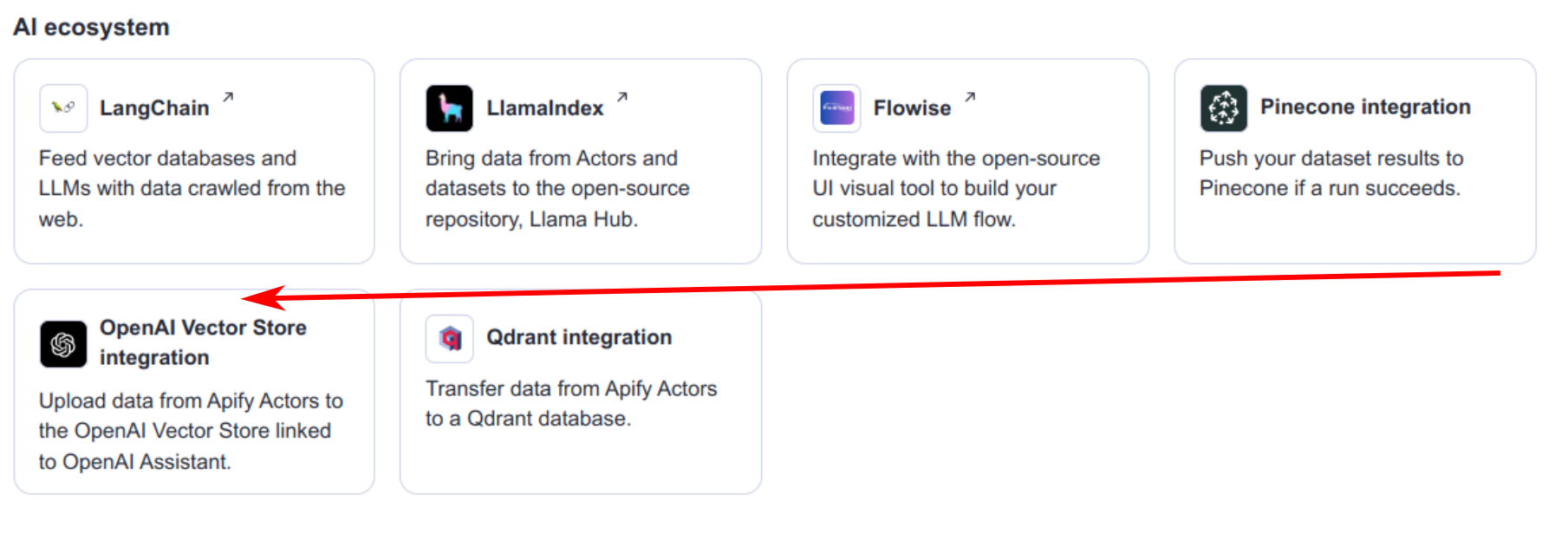

Once you have the crawler ready, go to the integration section and in the AI ecosystem category select the OpenAI vector store integration.

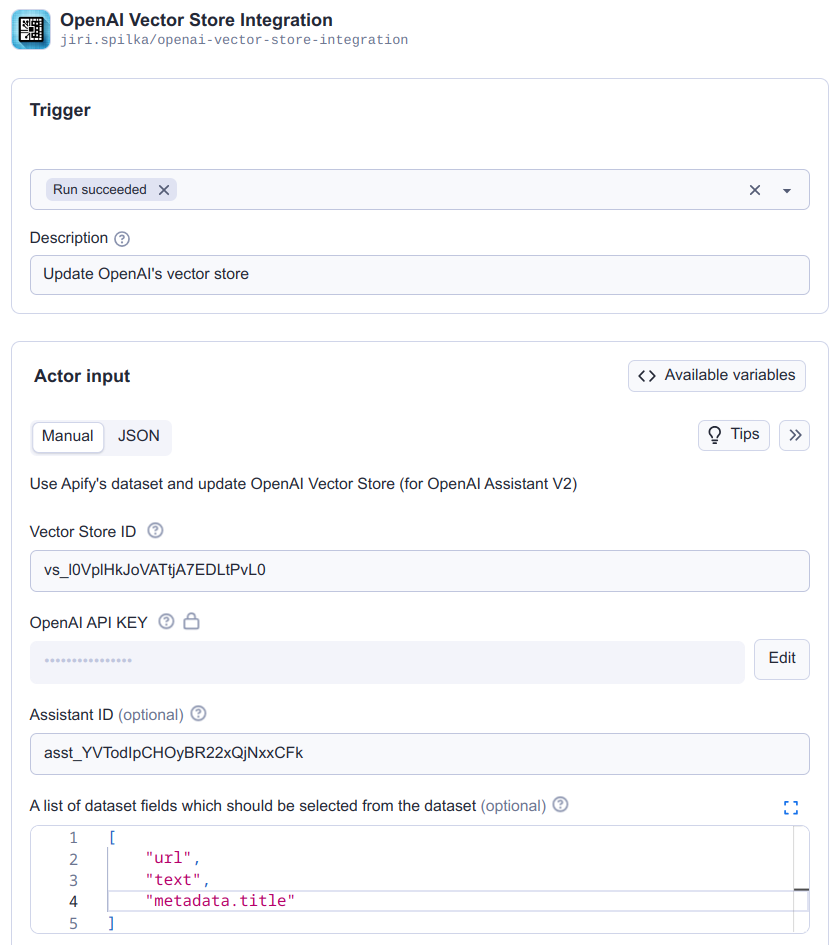

Next, select when to trigger this integration (typically when a run succeeds) and fill in all the required fields for the OpenAI integration:

- OpenAI vector store ID

- OpenAI API key

- Assistant ID (Optional): This parameter is required only when a file exceeds the OpenAI size limit of 5,000,000 tokens (as of July 2024). When necessary, the model associated with the assistant is used to count tokens and split the large file into smaller, manageable segments.

- Array of vector store file ids to delete: Deletes the specified file IDs from the vector store. This is useful for removing files that are no longer needed.

- Delete/Create vector store files with a prefix: Using a file prefix streamlines the management of vector store file updates by eliminating the need to track each file's ID. For instance, if you set the file_prefix to dataset_apify_web_, the Actor will initially locate all files in the vector store with this prefix. Subsequently, it will delete these files and create new ones, also prefixed accordingly.
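The prefix-based update described in the last option can be illustrated with a short sketch. This is not the Actor's actual source code: `files_to_replace` is a hypothetical function, and the file IDs and names below are invented for the example.

```python
from typing import Dict, List


def files_to_replace(existing_files: List[Dict[str, str]], file_prefix: str) -> List[str]:
    """Return IDs of stored files whose names start with the given prefix."""
    if not file_prefix:
        # An empty prefix would match every file, so treat it as "delete nothing"
        return []
    return [f["id"] for f in existing_files if f["filename"].startswith(file_prefix)]


# Invented example data: two files from earlier crawls and one unrelated file
stored = [
    {"id": "file-1", "filename": "dataset_apify_web_2024-06-01.json"},
    {"id": "file-2", "filename": "pricing_notes.txt"},
    {"id": "file-3", "filename": "dataset_apify_web_2024-07-01.json"},
]
print(files_to_replace(stored, "dataset_apify_web_"))  # ['file-1', 'file-3']
```

Only the files carrying the prefix are selected for deletion, so unrelated files in the same vector store are left alone when fresh, identically prefixed files are created.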

Read our guide to Actor-to-Actor integrations for a detailed explanation of how to combine Actors to achieve more complex tasks.

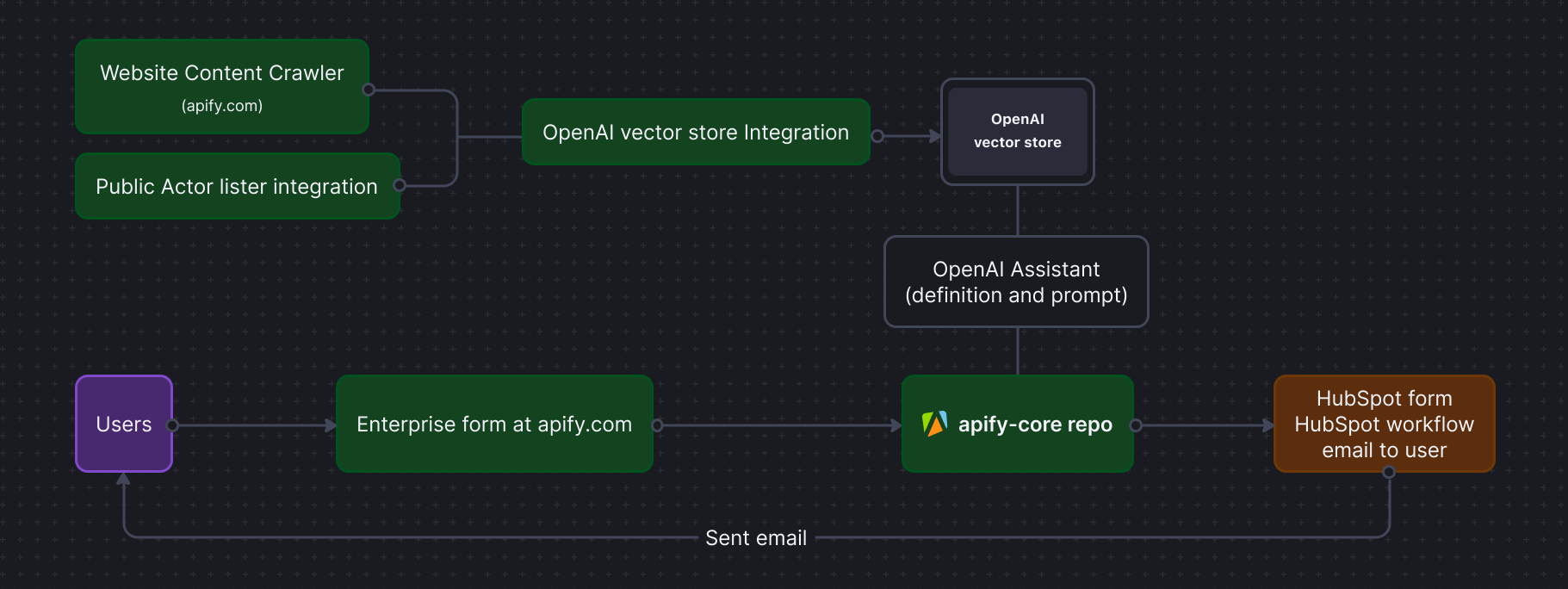

Here's a diagram showing how the process works:

Schedule a task

As the final step, schedule your task to ensure that the data remains up to date.

How to try out Apify's enterprise support assistant

The assistant is capable of answering a wide range of questions, such as, "I would like to scrape a generic website – how can I do that?"

And here's the answer from our AI assistant:

- To scrape a generic website, you can use the Website Content Crawler Actor.

- This Actor is free to start, and it's very effective for scraping and extracting text content from various types of websites, including blogs, knowledge bases, and help centers.

- If you have other specific needs or websites in mind, you might find more specialized Actors in Apify Store.

Try it out by submitting a query on our Apify Enterprise form. You'll get an email with general recommendations along with query-specific suggestions from our AI assistant. Let us know what you think!
