With the rise of AI agents, there's growing interest in how they are built. In this article, we'll break down the key components, operational modules, and main types of agent architecture.

What is AI agent architecture?

AI agent architecture refers to the modules that make up an agent's pipeline and how they connect. Whether an agent is interacting with users, automating workflows, or collaborating with other AI agents, its architecture determines how it operates and adapts.

Core components of AI agent architecture

The fundamental building blocks remain largely the same, from early rule-based systems like ELIZA to modern LLM-powered AI agents. Let's break them down.

1. Perception module

The perception module is the “eyes and ears” of the agent. It can be a proper set of cameras for robotic agents or just a text input for a chatbot. A generic workflow is:

- Sensing: Collecting the raw data, such as text from a keyboard or frames from a camera.

- Preprocessing: The collected data is cleaned using text normalization, digital signal processing (DSP) algorithms, or other techniques, depending on the data.

- Feature extraction: The preprocessed data is represented in a meaningful form, for example through tokenization for text and speech or object detection for images.

- Interpretation: The features are given a meaning, which depends on the data and the desired agentic behavior. For example, a TV remote picks up speech signals and converts them into text, or a self-driving agent recognizes the person in front of the car as a pedestrian.

After interpretation, the output will be relayed to the next module, depending on the agent’s type.
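The four steps above can be sketched for a text-only agent. This is a minimal illustration, not a reference implementation: the `Percept` type, the function names, and the keyword rules in `interpret` are all assumptions made up for this example.

```python
import re
from dataclasses import dataclass


@dataclass
class Percept:
    """Structured output handed to the next module (hypothetical type)."""
    intent: str
    tokens: list[str]


def sense(raw_input: str) -> str:
    # Sensing: for a chatbot, the "sensor" is just the text the user typed.
    return raw_input


def preprocess(text: str) -> str:
    # Preprocessing: lowercase, replace punctuation with spaces, collapse whitespace.
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def extract_features(text: str) -> list[str]:
    # Feature extraction: naive whitespace tokenization.
    return text.split()


def interpret(tokens: list[str]) -> Percept:
    # Interpretation: map tokens to a coarse intent using made-up keyword rules.
    if "weather" in tokens:
        intent = "weather_query"
    elif {"hi", "hello"} & set(tokens):
        intent = "greeting"
    else:
        intent = "unknown"
    return Percept(intent=intent, tokens=tokens)


def perceive(raw_input: str) -> Percept:
    # Chain the four stages in order.
    return interpret(extract_features(preprocess(sense(raw_input))))


print(perceive("What's the WEATHER in Prague?"))
```

A robotic agent would follow the same shape, with camera frames in place of text and an object detector in place of the tokenizer.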

2. Cognitive module

As its name suggests, the cognitive module does the reasoning. A simple example is a chess-playing agent: the perception module gives it the current board position, including the opponent's last move, and the cognitive module decides the next move.

A cognitive module involves both reasoning and decision-making. The algorithms implemented in the cognitive module can be classified as:

- Reasoning: The agent can use different reasoning approaches, either classical ones, like deductive or inductive reasoning, or more advanced ones, like probabilistic or analogical reasoning. A common example is a banking system flagging a transaction as suspicious based on some reasoning (e.g., the user is outside their usual geographical location, so the account may be compromised, or a large withdrawal at 3 AM is probably fraudulent).

- Knowledge retrieval: The module can pull stored knowledge from different kinds of stores, from simple JSON files to vector databases.

- Optimization: Techniques such as genetic algorithms or decision trees can help the cognitive module determine the most feasible action(s).

- Planning: Path-finding algorithms, like A* search, can be used to plan a sequence of actions.
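As an illustration of the planning item, here is a small A* search over a grid. It is a sketch under stated assumptions: the grid encoding (`#` for walls), 4-directional unit-cost moves, and the Manhattan-distance heuristic are choices made for this example, not something a particular agent framework prescribes.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest path from start to goal as a list of (row, col), or None.

    grid is a list of strings; '#' marks a wall. Moves are 4-directional with cost 1.
    """
    def heuristic(cell):
        # Manhattan distance: never overestimates for 4-directional unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was found later
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


grid = [
    "..#.",
    "..#.",
    "....",
]
path = a_star(grid, (0, 0), (0, 3))
print(path)
```

The wall in the third column forces the path down to the bottom row and back up, which is the kind of detour a greedy "move toward the goal" rule would miss.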

3. Action module

The action module acts only on the cognitive module's output, and its role is the same for every type of agent; only the form of the output differs. For chatbots and many other agents, it's just text; for robotic agents, it can also be audio signals (or even images in some cases).

4. Learning module

Some agents can improve their performance over time based on feedback. ChatGPT is a good example: OpenAI used reinforcement learning from human feedback (RLHF) to adapt a GPT-3.5-series model for conversation.

It's not always necessary to have humans in the loop. A good example is Retrieval-Augmented Generation (RAG), where an agent is augmented with a knowledge base and no human involvement is needed once the knowledge has been curated.
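To show the retrieval half of RAG in isolation, here is a toy sketch. The knowledge base entries are invented, and the word-count "embedding" is a deliberate simplification standing in for a learned embedding model and a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical, hand-curated knowledge base.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Support is available Monday to Friday, 9 AM to 5 PM.",
    "Premium plans include priority support and extra storage.",
]


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real system would use a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    # Rank every document by similarity to the query and keep the top k.
    query_vec = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    # The retrieved text is placed in the prompt that would be sent to the LLM.
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How long do refunds take?"))
```

Updating the agent's knowledge then only means editing `KNOWLEDGE_BASE`; the model itself is untouched.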

Features of AI agent architecture

With the rising focus on AI, especially LLM agents, some components deserve better coverage.

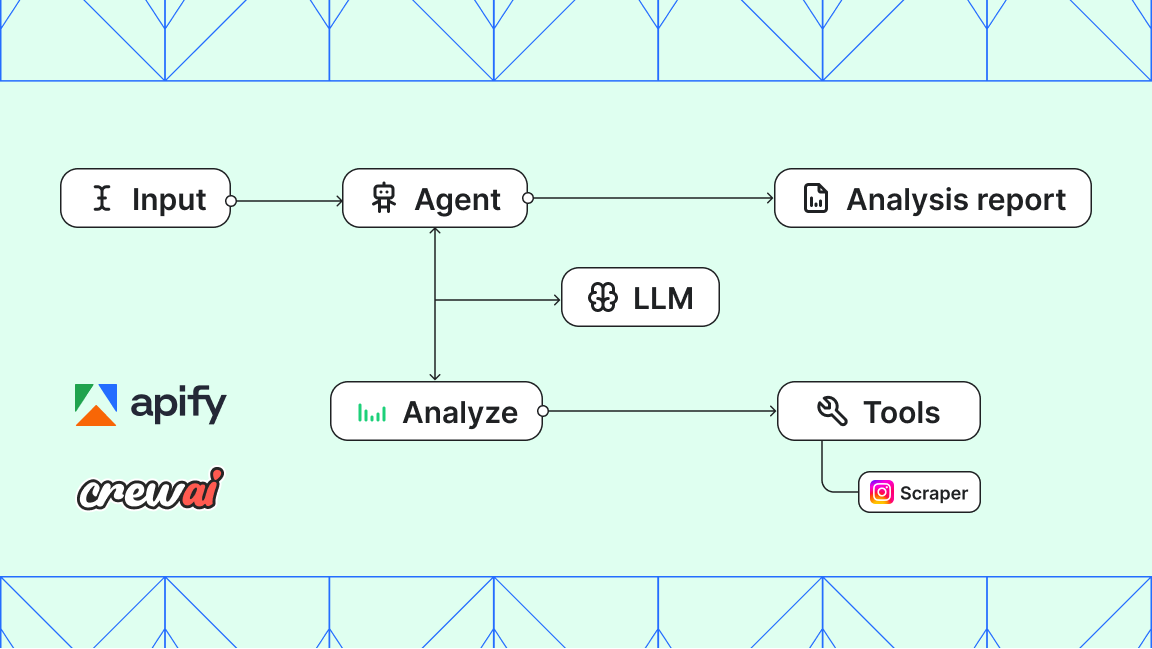

Tool integration

At times, the functionality we want requires deterministic behavior. For example, a query about the weather can lead an LLM agent to respond in one of these ways:

- It tries to answer from its own knowledge, which will most probably be imprecise.

- It performs some web searches, which gives a better result, but the diversity of web sources still leaves us uncertain.

- It calls a specific weather API that has already been tested.

In such scenarios, all we need is an integration, be it an API, a user-defined function, or a database.
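A tool integration can be as simple as a registry of functions the agent is allowed to call. The sketch below assumes a hypothetical `get_weather` function with canned data and a made-up tool-call format (`{"name": ..., "argument": ...}`); real frameworks define their own schemas.

```python
from typing import Callable


def get_weather(city: str) -> str:
    # Hypothetical stand-in for a tested weather API; returns canned data here.
    fake_data = {"prague": "12 C, cloudy", "cairo": "31 C, sunny"}
    return fake_data.get(city.lower(), "no data")


# Tool registry: tool name -> callable the agent is allowed to invoke.
TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def run_tool_call(tool_call: dict) -> str:
    """Execute a tool call of the form {"name": ..., "argument": ...}."""
    tool = TOOLS.get(tool_call["name"])
    if tool is None:
        return f"Unknown tool: {tool_call['name']}"
    return tool(tool_call["argument"])


# In a real agent, the LLM would emit this structure; here it is hard-coded.
result = run_tool_call({"name": "get_weather", "argument": "Prague"})
print(result)
```

Because the tool is ordinary code, the same input always produces the same output, which is the deterministic behavior the weather example calls for.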

Memory mechanisms

Agents often need context, such as earlier turns of a conversation, before they reason or react. While this context can live in any database, vector databases are more common because they store embeddings and support fast similarity search. This is an example of using LangChain to add conversation memory that keeps the 3 most recent exchanges:

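```python
# Legacy LangChain import paths; they may differ in newer releases.
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.chat_models import ChatOpenAI
from langchain.memory import ConversationBufferWindowMemory

# Keep only the 3 most recent exchanges in memory.
window_memory = ConversationBufferWindowMemory(k=3)

agent1 = initialize_agent(
    tools=load_tools(["arxiv"]),  # arXiv search tool
    llm=ChatOpenAI(temperature=0.3),
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
    memory=window_memory,
)
```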
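To make the windowing behavior concrete without any dependencies, here is a plain-Python sketch of the same idea. The `WindowMemory` class is hypothetical and is not how LangChain implements its memory; it only illustrates what "keep the k most recent exchanges" means.

```python
from collections import deque


class WindowMemory:
    """Keeps only the k most recent (user, agent) exchanges."""

    def __init__(self, k: int = 3):
        # A bounded deque drops the oldest exchange automatically when full.
        self.exchanges = deque(maxlen=k)

    def save(self, user_message: str, agent_reply: str) -> None:
        self.exchanges.append((user_message, agent_reply))

    def as_context(self) -> str:
        # Render the remembered exchanges as text that could be prepended to a prompt.
        return "\n".join(f"Human: {u}\nAI: {a}" for u, a in self.exchanges)


memory = WindowMemory(k=3)
for i in range(1, 6):
    memory.save(f"question {i}", f"answer {i}")

print(memory.as_context())
```

After five exchanges, only exchanges 3 to 5 remain, so the prompt stays short at the cost of forgetting older turns.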

Types of AI agent architectures

AI agent architectures can be further classified, mainly as single vs. multi-agent.

1. Single-agent architecture

The most common architecture involves a single agent. While we have already covered the general architecture in detail, a couple of recent single-agent architectures deserve some coverage.

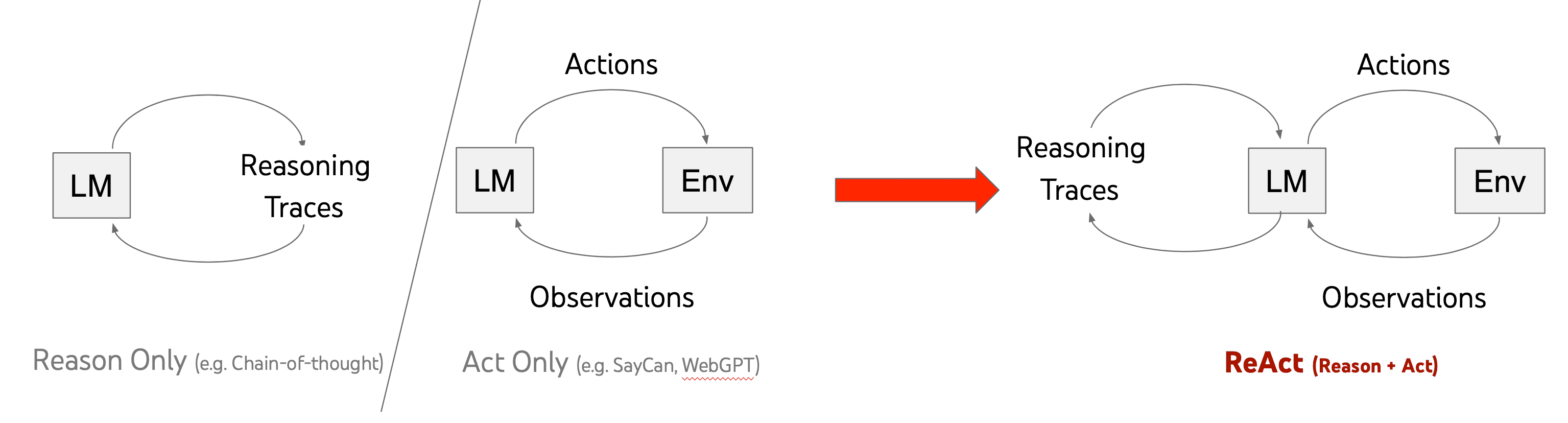

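ReAct: Behind ReAct, there's a simple philosophy: why keep reasoning and acting separate instead of combining them? The agent alternates between a reasoning step and an action, and feeds each observation back into the next reasoning step. ReAct is as good in practice as it looks on paper, outperforming approaches that only reason (such as chain-of-thought prompting) or only act.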
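The reason-then-act loop can be sketched in a few lines. Everything here is an assumption for illustration: `scripted_llm` is a stand-in that returns fixed replies instead of calling a model, the `calculator` tool is made up, and the `Action: tool[input]` text format is one common convention rather than a fixed standard.

```python
import re


def calculator(expression: str) -> str:
    # Hypothetical tool: adds two integers written as "a + b".
    a, b = (int(part) for part in expression.split("+"))
    return str(a + b)


TOOLS = {"calculator": calculator}


def scripted_llm(transcript: str) -> str:
    # Stand-in for a real LLM call: the reply depends on whether an observation exists yet.
    if "Observation:" not in transcript:
        return "Thought: I need to add the numbers.\nAction: calculator[2 + 3]"
    return "Thought: I have the result.\nFinal Answer: 5"


def react(question: str, llm=scripted_llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)  # reason: the model sees everything so far
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*?)\]", reply)
        if match is None:
            break  # the model produced neither an action nor an answer
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)  # act, then feed the result back
        transcript += f"\nObservation: {observation}"
    return "No answer found"


print(react("What is 2 + 3?"))
```

The `max_steps` cap is a practical safeguard so that a model that never produces a final answer cannot loop forever.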

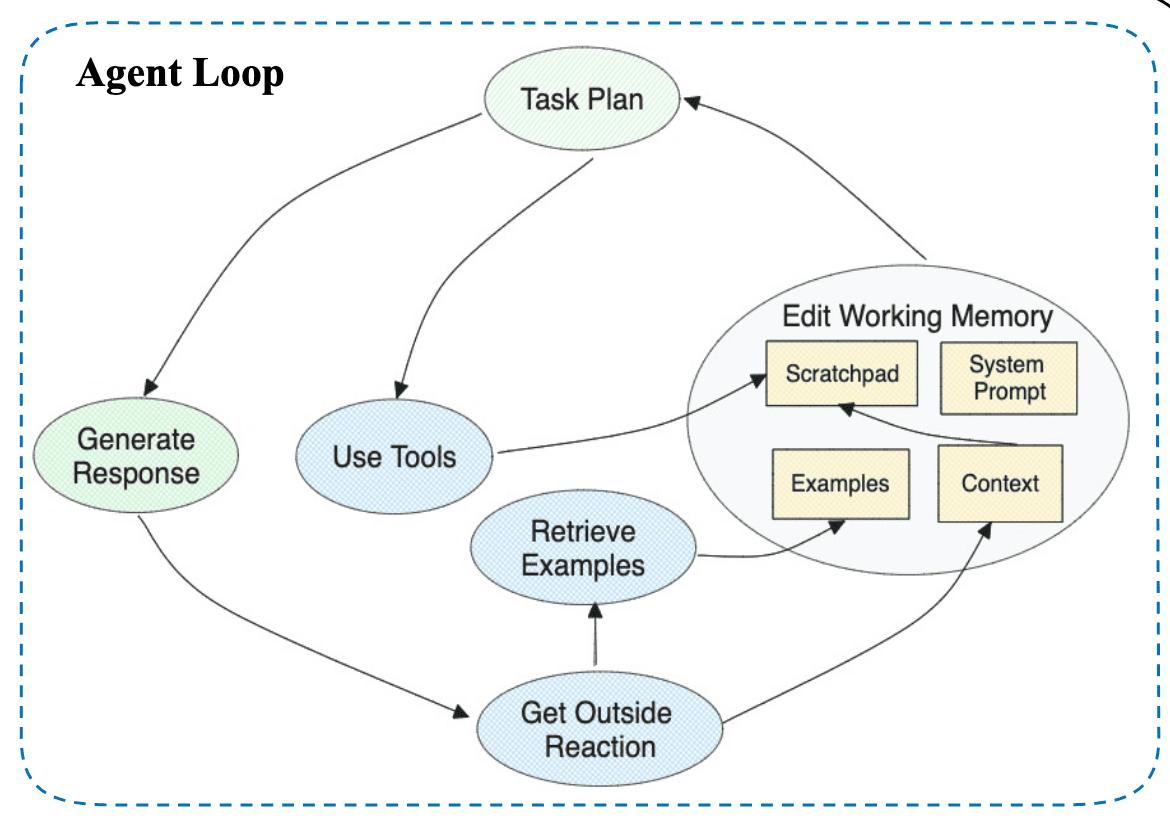

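RAISE: RAISE (Reasoning and Acting through Scratchpad and Examples) builds on the already good performance of ReAct by adding a memory mechanism: a scratchpad acts as short-term memory and retrieved examples act as long-term memory, helping the agent keep context as it decides its next steps.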

2. Multi-agent architecture

In more complex systems, there's often a network of agents. These multi-agent systems can have different architectures:

- Centralized vs. decentralized

In centralized architectures, a “nucleus” node oversees all the agents. This provides greater control but makes the system overreliant on the central agent. Decentralized multi-agent systems provide better flexibility; they are mostly built on hybrid architectures, like holonic and coalition.

- Holonic

In holonic architectures, the agents (holons) are largely autonomous but work towards a collective objective. For example, in a Boeing factory, separate robotic agents for painting, soldering, and many other tasks work independently, yet all are aimed at the final product (while following shared quality protocols).

- Coalition

Coalition architectures are formed as needed: when a situation or emergency arises, different agents temporarily join forces to handle it. A common example is AI-enabled cybersecurity defense systems.
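To make the centralized case concrete, here is a minimal sketch of a "nucleus" node that routes tasks to worker agents. The `Agent` and `Orchestrator` classes and the skill names are hypothetical; real multi-agent frameworks add messaging, state, and error handling on top of this idea.

```python
class Agent:
    """A worker agent that handles one kind of task (hypothetical roles)."""

    def __init__(self, name: str, skill: str):
        self.name = name
        self.skill = skill

    def handle(self, task: str) -> str:
        return f"{self.name} completed '{task}'"


class Orchestrator:
    """Central node: every task passes through it, so it is also a single point of failure."""

    def __init__(self, agents: list[Agent]):
        # Index the workers by the skill they provide.
        self.agents_by_skill = {agent.skill: agent for agent in agents}

    def dispatch(self, skill: str, task: str) -> str:
        agent = self.agents_by_skill.get(skill)
        if agent is None:
            return f"No agent available for skill '{skill}'"
        return agent.handle(task)


orchestrator = Orchestrator([Agent("Painter", "paint"), Agent("Welder", "solder")])
report = orchestrator.dispatch("paint", "paint the left wing")
print(report)
```

In a decentralized design, the workers would instead discover each other and negotiate tasks directly, removing the dependency on `Orchestrator`.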

Conclusion

We’ve provided a concise yet comprehensive overview of AI agents' core components and architectural types. As AI continues to evolve, refining these architectures will be key to developing more capable and adaptable agents.

More about AI agents

- How to build an AI agent - A complete step-by-step guide to creating, publishing, and monetizing AI agents on the Apify platform.

- AI agent orchestration with OpenAI Agents SDK - Learn to build an effective multiagent system with AI agent orchestration.

- AI agent workflow - building an agent to query Apify datasets - Learn how to extract insights from datasets using simple natural language queries without deep SQL knowledge or external data exports.

- 11 AI agent use cases (on Apify) - 10 practical applications for AI agents, plus one meta-use case that hints at the future of agentic systems.

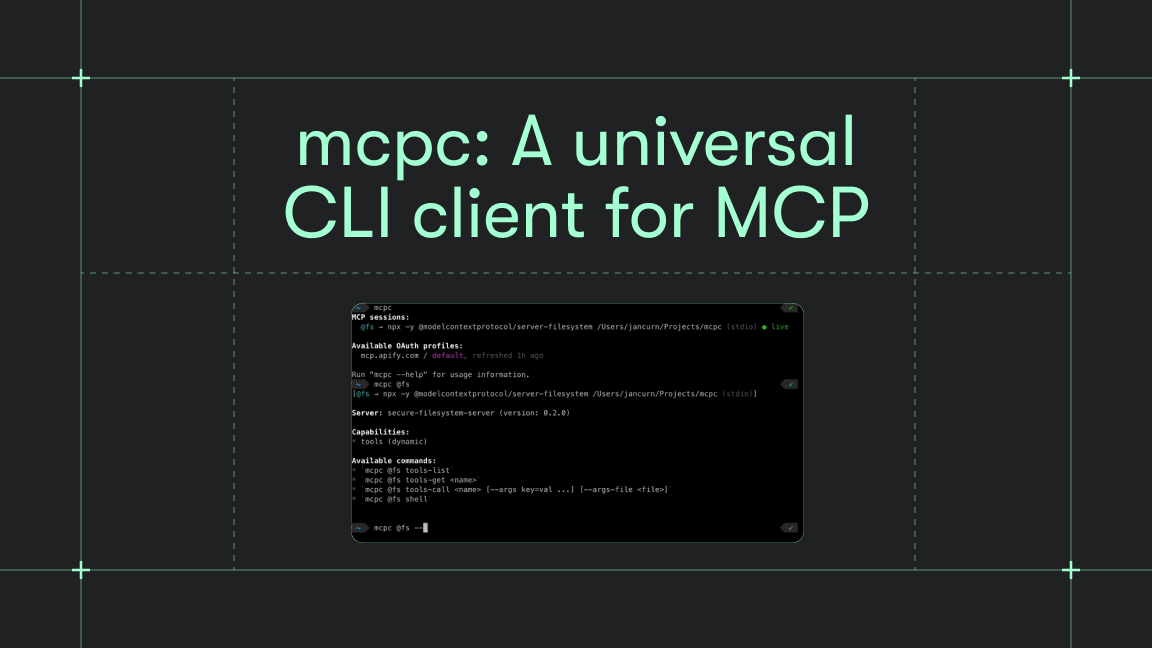

- The state of MCP - The latest developments in Model Context Protocol and solutions to key industry challenges.

- How to use MCP with Apify Actors - Learn how to expose over 4,500 Apify Actors to AI agents like Claude and LangGraph, and configure MCP clients and servers.

- 10 best AI agent frameworks - 5 paid platforms and 5 open-source options for building AI agents.

- 5 open-source AI agents on Apify that save you time - These AI agents are practical tools you can test and use today, or build on if you're creating your own automation.

- What are AI agents? - The Apify platform is turning the potential of AI agents into practical solutions.

- 7 real-world AI agent examples in 2025 you need to know - From goal-based assistants to learning-driven systems, these agents are powering everything from self-driving cars to advanced web automation.

- LLM agents: all you need to know in 2025 - LLM agents are changing how we approach AI by enabling interaction with external sources and reasoning through complex tasks.

- 7 types of AI agents you should know about - What defines an AI agent? We go through the agent spectrum, from simple reflexive systems to adaptive multi-agent networks.