For all the hype about large language models, they’re not very good at providing specific answers to questions that demand deep knowledge or expertise. Ask an LLM such as ChatGPT about a complex field like programming, medicine, or law, and you often won’t get the nuanced, or even reliable, answer that a specialist could provide.

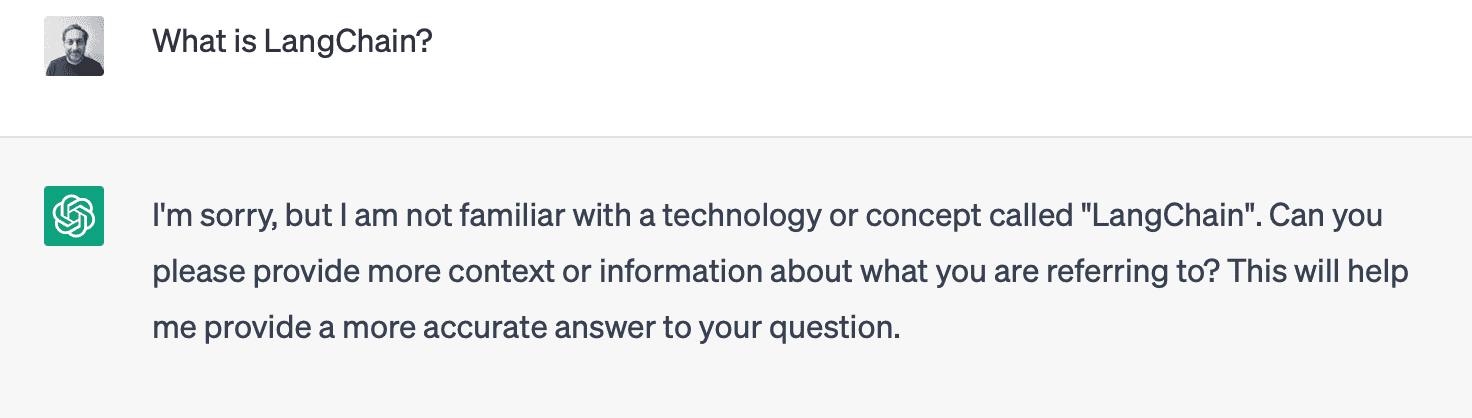

Another glaringly obvious issue with “vanilla” ChatGPT, for example, is this:

Hardly surprising. LangChain was released in October 2022, while ChatGPT has been trained on data from no later than 2021.

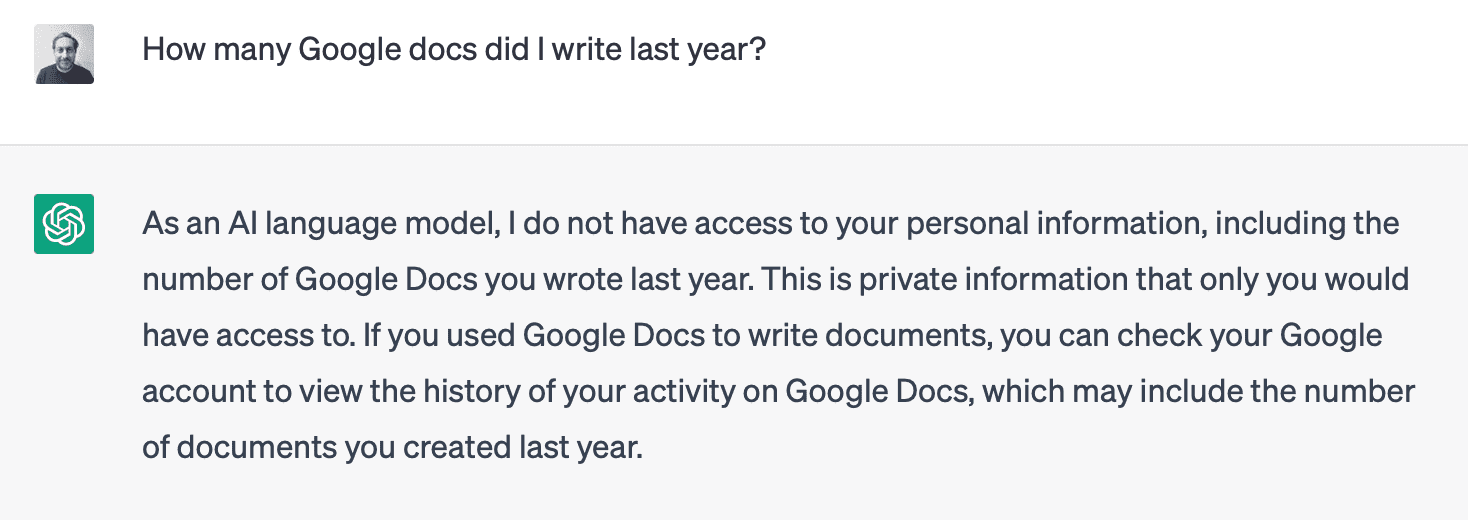

Here’s another limitation of ChatGPT:

It is precisely this limitation that LangChain overcomes.

LangChain is a powerful open-source framework for developing applications powered by language models. It connects to the models you want to use, such as those from OpenAI or Hugging Face, and links them with external data sources such as Google Drive, Notion, Wikipedia, or even your Apify Actors. That means you can chain components together so the model has the context and instructions it needs to produce the answers or perform the tasks you require.

This video tutorial shows you how to use LangChain with Apify Blog Scraper. The same approach applies to integrating LangChain with any scraper you choose.

Why use LangChain?

You can create advanced use cases around LLMs with LangChain because it lets you chain together multiple components from several modules:

🤖 Large language models

LangChain provides a standard interface through which you can interact with a variety of LLMs.

📜 Prompt templates

LangChain provides several classes and functions to make constructing and working with prompts easy.

🧠 Memory

LangChain provides memory components to manage and manipulate previous chat messages and incorporate them into chains. This is crucial for chatbots, which need to remember prior interactions.

🕵🏻 Agents

LangChain provides agents that have access to a suite of tools. Depending on the user’s input, an agent can decide which tools to call.

All of this means that LangChain makes it possible for developers to build remarkably powerful applications by combining LLMs with other sources of computation and knowledge.
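To make “chaining” concrete, here is a toy sketch in plain Python with no LangChain dependency. It wires a prompt template, a memory component, and a stand-in model into a single call. The names fake_llm, ConversationMemory, and run_chain are hypothetical and only illustrate the idea; they are not LangChain classes, and agents are left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM: a function from prompt text to reply text.
# A real application would call a model provider here instead.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Toy 'model' that just echoes the last line of the prompt it received."""
    last_line = prompt.strip().splitlines()[-1]
    return f"(model saw: {last_line})"


@dataclass
class ConversationMemory:
    """Keeps previous turns so they can be fed back into the next prompt."""
    turns: List[str] = field(default_factory=list)

    def as_text(self) -> str:
        return "\n".join(self.turns)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append(f"User: {user}")
        self.turns.append(f"Assistant: {assistant}")


# Prompt template with two slots: the chat history and the new question.
TEMPLATE = "You are a helpful assistant.\n{history}\nUser: {question}"


def run_chain(llm: LLM, memory: ConversationMemory, question: str) -> str:
    # 1. Fill the prompt template, pulling prior turns from memory.
    prompt = TEMPLATE.format(history=memory.as_text(), question=question)
    # 2. Call the model.
    answer = llm(prompt)
    # 3. Store the exchange so the next call can see it.
    memory.save(question, answer)
    return answer
```

Swapping fake_llm for a real model call, or ConversationMemory for a different store, leaves run_chain untouched; that interchangeability is the point of a common interface between components.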

How to get started with LangChain

To begin using LangChain, install it with either pip install langchain or conda install langchain -c conda-forge.

Most applications also need integrations with model providers, data stores, or APIs. The LangChain documentation provides an example of setting up the environment for using OpenAI’s API:

- Install the OpenAI SDK:

pip install openai

- Then set the environment variable in the terminal:

export OPENAI_API_KEY="..."

- Another option is to set it from inside a Jupyter notebook or Python script:

import os

os.environ["OPENAI_API_KEY"] = "..."
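A missing or empty key often surfaces only later, as an authentication error from the first API call. A small guard like the one below fails early with a clearer message. require_env is a hypothetical helper for illustration, not part of LangChain or the OpenAI SDK.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message.

    Call it right after setting OPENAI_API_KEY (or APIFY_API_TOKEN) to catch a
    missing or blank key before any API request is made.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set or is empty")
    return value
```

For example, require_env("OPENAI_API_KEY") returns the key if it is set and raises RuntimeError otherwise.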

From there, you can follow the documentation in Python or in JavaScript/TypeScript and start building applications with LLMs, chat models, or text embedding models using the many modules LangChain provides.

Why integrate LangChain with Apify Actors?

Apify is a web scraping and automation platform. Apify Actors are serverless cloud programs that can do almost anything a human can do in a web browser. By integrating them with LangChain, you can feed Actor results directly into LangChain’s vector indexes and build apps such as query interfaces over crawled documentation or knowledge bases, customer-support chatbots, and a lot more.

Take Website Content Crawler, for example. This Actor can deeply crawl websites and extract text content from their pages. You can use its results to fine-tune or train your LLMs, or to provide context for ChatGPT prompts.
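What does “extracting text content” mean in practice? The toy sketch below uses only Python’s standard html.parser to pull visible text and links out of a single HTML page; a deep crawl would repeat this for each discovered link on the same site. It illustrates the concept under those assumptions and is not how Website Content Crawler is implemented. TextAndLinks and extract are hypothetical names.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class TextAndLinks(HTMLParser):
    """Collects visible text and href links from one HTML page."""

    SKIP = {"script", "style"}  # tags whose contents are not visible text

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: List[str] = []
        self.links: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)  # candidates for the next crawl step

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside <script> or <style>.
        if self._skip_depth == 0 and data.strip():
            self.text_parts.append(data.strip())


def extract(html: str) -> Tuple[str, List[str]]:
    """Return (visible text joined by spaces, list of link targets)."""
    parser = TextAndLinks()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text_parts), parser.links
```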

LangChain’s Apify integration lets you feed these results directly into a LangChain vector store, so you can easily create ChatGPT-like query interfaces for websites.

How to integrate Apify with LangChain

🔗 1. Install all dependencies

pip install apify-client langchain openai chromadb

🔗 2. Import os, Document, VectorstoreIndexCreator, and ApifyWrapper into your source code

import os

from langchain.document_loaders.base import Document
from langchain.indexes import VectorstoreIndexCreator
from langchain.utilities import ApifyWrapper

🔗 3. Find your Apify API token and OpenAI API key and set them as environment variables

os.environ["OPENAI_API_KEY"] = "Your OpenAI API key"
os.environ["APIFY_API_TOKEN"] = "Your Apify API token"

🔗 4. Run the Actor, wait for it to finish, and fetch its results from the Apify dataset into a LangChain document loader

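# Create the Apify wrapper (it reads APIFY_API_TOKEN from the environment)
apify = ApifyWrapper()

loader = apify.call_actor(
    actor_id="apify/website-content-crawler",
    run_input={"startUrls": [{"url": "https://python.langchain.com/en/latest/"}]},
    # Map each dataset item to a LangChain Document
    dataset_mapping_function=lambda item: Document(
        page_content=item["text"] or "", metadata={"source": item["url"]}
    ),
)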

If you already have some results in an Apify dataset, you can load them directly using ApifyDatasetLoader, as shown in this notebook. In that notebook, you'll also find an explanation of the dataset_mapping_function, which is used to map fields from the Apify dataset records to LangChain Document fields.
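To make the role of dataset_mapping_function concrete, here is a minimal stand-alone sketch that needs no LangChain install. Doc is a hypothetical stand-in for LangChain’s Document that only mimics its page_content and metadata fields, and load_documents plays the part of the loader by applying the mapping to every record. Unlike the lambda in step 4, map_item uses item.get(...), a slightly more defensive variant that also tolerates records missing a key.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Iterable, List


@dataclass
class Doc:
    """Hypothetical stand-in for LangChain's Document: text plus metadata."""
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def map_item(item: Dict[str, Any]) -> Doc:
    # `or ""` turns a None (or missing) text value into an empty string,
    # so every Doc ends up with a str page_content.
    return Doc(page_content=item.get("text") or "", metadata={"source": item.get("url")})


def load_documents(
    items: Iterable[Dict[str, Any]], mapping: Callable[[Dict[str, Any]], Doc]
) -> List[Doc]:
    """Apply the mapping function to every dataset record, as a loader would."""
    return [mapping(item) for item in items]
```

The records passed in would be the dataset items an Actor produced; the mapping decides which field becomes searchable text and which fields travel along as metadata (here, the URL used later as the source).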

🔗 5. Initialize the vector index from the crawled documents

index = VectorstoreIndexCreator().from_loaders([loader])
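VectorstoreIndexCreator hides several steps. By default, in the LangChain versions used here, it splits each document into chunks, turns each chunk into an embedding vector with OpenAI embeddings, and stores the vectors in a Chroma vector store, which is why step 1 installs openai and chromadb. At query time the question is embedded the same way and the closest chunks are retrieved. The toy sketch below illustrates only that retrieval idea: it replaces real embeddings with simple word counts and compares them with cosine similarity. ToyVectorIndex and its methods are hypothetical names, not LangChain’s API.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Real indexes use learned dense vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorIndex:
    def __init__(self) -> None:
        # Each entry: (source URL, original text, vector)
        self._entries: List[Tuple[str, str, Counter]] = []

    def add(self, source: str, text: str) -> None:
        self._entries.append((source, text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[Tuple[float, str]]:
        """Return the k (score, source) pairs most similar to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), source) for source, _text, vec in self._entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```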

🔗 6. Query the vector index

query = "What is LangChain?"
result = index.query_with_sources(query)
print(result["answer"])
print(result["sources"])

The query produces an output like this:

LangChain is a framework for developing applications powered by language models. It is designed to connect a language model to other sources of data and allow it to interact with its environment.
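Where do result["answer"] and result["sources"] come from? Roughly, the retrieved chunks are pasted into the prompt together with their source URLs, and the model is asked to end its reply with a line listing the sources it used; that line is then split off into the sources field. The sketch below is a simplified illustration of that idea. The prompt wording and the build_prompt and split_sources helpers are hypothetical, not LangChain’s exact prompt or parser.

```python
import re
from typing import Dict, List, Tuple


def build_prompt(question: str, chunks: List[Tuple[str, str]]) -> str:
    """Label each retrieved (text, source) chunk so the model can cite it."""
    context = "\n\n".join(f"Content: {text}\nSource: {source}" for text, source in chunks)
    return (
        "Answer the question using only the content below. "
        "End your reply with a line starting with 'SOURCES:'.\n\n"
        f"{context}\n\nQUESTION: {question}\nFINAL ANSWER:"
    )


def split_sources(reply: str) -> Dict[str, str]:
    """Split a model reply into the answer text and the trailing SOURCES line."""
    parts = re.split(r"SOURCES:\s*", reply, maxsplit=1)
    answer = parts[0].strip()
    sources = parts[1].strip() if len(parts) > 1 else ""
    return {"answer": answer, "sources": sources}
```

Because the source URL is stored as metadata on each document (via the mapping function), it can be attached to every chunk in the prompt and echoed back in the answer.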

Website Content Crawler + LangChain example

Here’s an example of Website Content Crawler with LangChain in action (from the Website Content Crawler README):

- First, install LangChain with common LLMs, the Apify API client for Python, and chromadb (needed by the default Chroma vector store that VectorstoreIndexCreator uses):

pip install langchain[llms] apify-client chromadb

- Then create a ChatGPT-powered answering machine:

from langchain.document_loaders.base import Document
from langchain.indexes import VectorstoreIndexCreator
from langchain.utilities import ApifyWrapper
import os

# Set up your Apify API token and OpenAI API key
os.environ["OPENAI_API_KEY"] = "Your OpenAI API key"
os.environ["APIFY_API_TOKEN"] = "Your Apify API token"

apify = ApifyWrapper()

# Run the Website Content Crawler on a website, wait for it to finish, and save
# its results into a LangChain document loader:
loader = apify.call_actor(
    actor_id="apify/website-content-crawler",
    run_input={"startUrls": [{"url": "https://docs.apify.com/"}]},
    dataset_mapping_function=lambda item: Document(
        page_content=item["text"] or "", metadata={"source": item["url"]}
    ),
)

# Initialize the vector database with the text documents:
index = VectorstoreIndexCreator().from_loaders([loader])

# Finally, query the vector database:
query = "What is Apify?"
result = index.query_with_sources(query)
print(result["answer"])
print(result["sources"])

The query produces an answer like this:

Apify is a cloud platform that helps you build reliable web scrapers, fast, and automate anything you can do manually in a web browser.

And that, in a nutshell, is why Apify and LangChain are a great combination!

If you want to see what other GPT and AI-enhanced tools you could integrate with LangChain, have a browse through Apify Store.