What is cURL?

cURL, short for "Client for URL," is a powerful and versatile command-line tool and library that allows you to transfer data over various network protocols. It's a popular choice among developers for its simplicity, flexibility, and wide range of features. It can download files, scrape websites, make API requests, test web applications, automate tasks, and much more.

cURL can send and receive data over a wide range of protocols, including HTTP, HTTPS, FTP, FTPS, SMTP, POP3, IMAP, LDAP, DICT, TELNET, and many more, which makes it a valuable tool for downloading files, making HTTP requests, uploading data, and automating various network-related operations.

A brief history of cURL

cURL was started in 1996 by Daniel Stenberg, a Swedish software engineer. His motivation was practical: he wanted an IRC bot he was working on to fetch currency exchange rates from the web for chat users, and existing tools were too complex for the job. That narrow starting point laid the foundation for cURL's later adaptability and versatility.

cURL gained popularity as an open-source project and thrived on contributions from developers worldwide. Today, cURL is a reliable and widely used tool for data transfer and manipulation.

One reason for cURL's popularity is its cross-platform compatibility, which offers flexibility. However, integrating directly with cURL using the libcurl library isn't always the most convenient approach for HTTP communication (e.g., making API calls) in programming languages. The optimal method depends on the programming language (e.g., built-in functions in PHP and Java, libraries like requests in Python), project needs, and desired level of control.

Not just individuals, but organizations and companies also use cURL for tasks like automated testing, data retrieval, and web scraping. Its command-line interface (CLI) and extensive capabilities make it essential for developers and system administrators.

What does cURL do?

cURL's primary function is to transfer data between your computer and a server via various protocols, including HTTP, HTTPS, FTP, and more. It allows you to:

- Download files: This is cURL's original purpose, and it remains a widely used feature for downloading files from websites, servers, or other online sources.

- Upload files: You can use cURL to upload files to servers for storage, sharing, or other purposes.

- Get website content: cURL can retrieve the HTML code of a website, which can be used for various purposes, such as web scraping or building web automation tools.

- Scrape websites: cURL fetches the pages, and a parser such as Beautiful Soup (or a full scraping framework such as Scrapy) extracts the data, which you can then use for purposes such as building data-driven applications or market research.

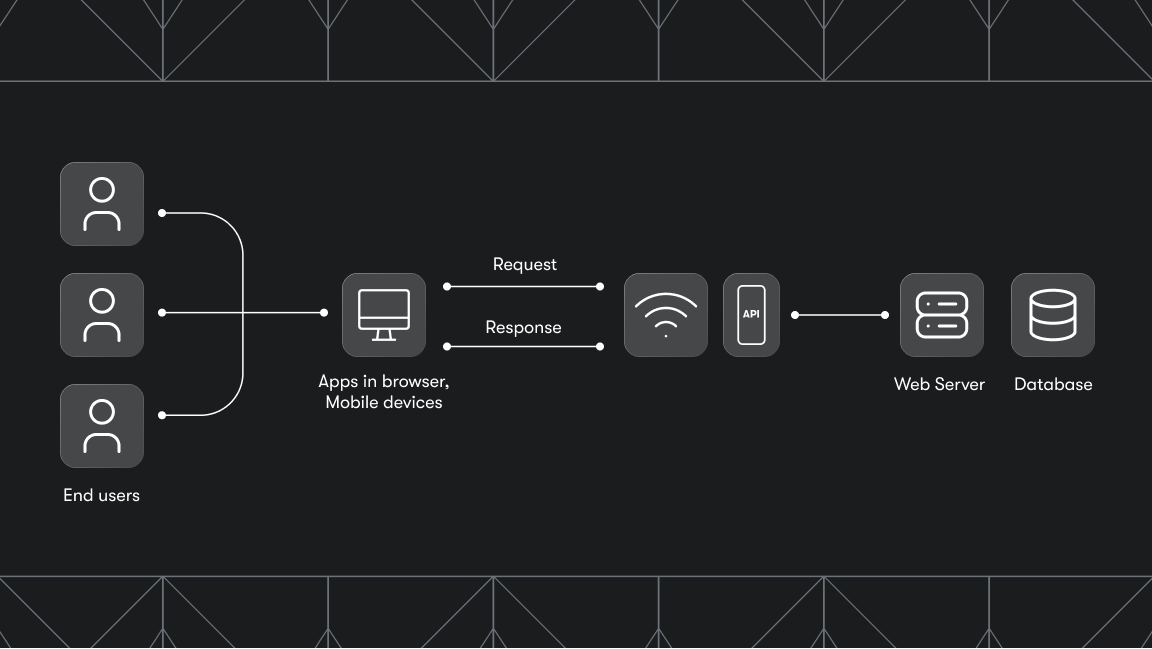

- Make API requests: Many modern applications rely on APIs to exchange data. cURL can be used to make HTTP requests to APIs and retrieve or send data in various formats, like JSON or XML.

- Test web applications: cURL can reproduce the requests a browser or client application would send, such as calls to web APIs, making it a valuable tool for web development and testing.


How to use cURL

cURL is primarily used through the command line. It ships with macOS, Windows 10 and 11, and most Linux distributions. (In Windows PowerShell 5.1, curl is an alias for Invoke-WebRequest, so type curl.exe to run the real tool.)

Remember: cURL doesn't have a fancy GUI; it's all about typing commands. But don't worry, it's not as scary as it sounds!

Start by confirming if cURL is available:

curl https://apify.com

If you see the Apify website response, you're good to go! Now, you can use the curl command followed by various options and arguments to perform different tasks.
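If you'd prefer a check that doesn't depend on any website, curl --version works offline. The short script below is a minimal sketch that assumes a POSIX shell (sh, bash, or zsh); it prints the curl version and the list of protocols your particular build supports.

```shell
#!/bin/sh
# Minimal availability check (assumes a POSIX shell such as sh, bash, or zsh).
if command -v curl >/dev/null 2>&1; then
  # First line: curl's version plus the libcurl and TLS library versions it uses.
  curl --version | head -n 1
  # The "Protocols:" line lists every URL scheme this build supports.
  curl --version | grep '^Protocols:'
else
  echo "curl was not found on PATH" >&2
  exit 1
fi
```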

Here's a basic example of how to use cURL to download a file:

curl -o file.txt https://example.com/file.txt

This command tells cURL to download the file located at the URL https://example.com/file.txt and save it locally as file.txt.
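To see the difference between -o (choose your own file name) and -O (keep the name from the URL) without needing a server, you can point cURL at a local file through its file:// protocol. This is a sketch that assumes a Unix-like shell with mktemp; the file names are arbitrary examples.

```shell
#!/bin/sh
set -eu
# Scratch directory; source.txt plays the role of the remote file.
workdir=$(mktemp -d)
printf 'hello from curl\n' > "$workdir/source.txt"

# -o saves the response under a name you choose.
curl -sS -o "$workdir/copy.txt" "file://$workdir/source.txt"

# -O saves it under the last path segment of the URL ("source.txt") in the
# current directory, so run it from a separate folder to avoid overwriting.
mkdir "$workdir/downloads"
cd "$workdir/downloads"
curl -sS -O "file://$workdir/source.txt"

# Both copies should contain the original text.
cat "$workdir/copy.txt" "$workdir/downloads/source.txt"
```

With a real website, the same two flags work unchanged; only the URL differs.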

But cURL offers much more: for more complex tasks, a wide range of options and arguments lets you customize your requests. These include:

- Specifying the HTTP method: You can use various HTTP methods like GET, POST, PUT, and DELETE to interact with web servers.

- Setting headers: You can send custom headers with your request to provide additional information to the server.

- Sending data with the request: For POST and PUT requests, you can send data along with the request body. This can be done using various formats like JSON, XML, or form data.

The following table lists some frequently used cURL command options:

| Option | Description |
| --- | --- |
| -o | Write the output to the given file name |
| -X | HTTP method (e.g., GET, POST) |
| -d | Data to send in the request body |
| -H | Header to send with the request |
| -u | Username and password for authentication, separated by a colon |
| -L | Follow redirects |
| -A | User-Agent string to send with the request |
| -v | Verbose output |

You can find a complete list of cURL options and their detailed descriptions in the official documentation.

Related: How to use cURL in Python

Examples of using cURL

Here are some examples of how you can use cURL:

- Download the Python 3.12.1 installer for 64-bit Windows (-L follows any redirects):

curl -L https://www.python.org/ftp/python/3.12.1/python-3.12.1-amd64.exe -o python-3.12.1-amd64.exe

- Get the current weather:

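curl "https://api.openweathermap.org/data/2.5/weather?q=YOUR_LOCATION&appid=YOUR_API_KEY"

This example requires you to replace "YOUR_LOCATION" with a city name and "YOUR_API_KEY" with your own OpenWeatherMap API key. Keep the quotes around the URL: without them, the shell treats & as "run in the background" and cuts the URL short.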

- Send data to a web form:

curl -X POST -d "name=John Doe&email=johndoe@example.com" https://example.com/form

This example sends a POST request to the URL https://example.com/form with the data name=John Doe&email=johndoe@example.com.

- Download a file as a different filename:

curl -o screenshot.jpg https://example.com/image.jpg

This example downloads the file https://example.com/image.jpg and saves it locally as screenshot.jpg. cURL saves the bytes unchanged, so keep the extension that matches the file type.

Advanced examples of using cURL

Let's look at more sophisticated examples to demonstrate cURL's versatility:

- File upload with cURL

curl -X POST -H "Authorization: Bearer YOUR_API_KEY" \
  -F "file=@/path/to/yourfile.txt" \
  https://yourserver.com/upload

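This example uploads /path/to/yourfile.txt to https://yourserver.com/upload. The -F option sends it as a multipart/form-data field named file, and the @ prefix tells cURL to read the field's contents from that path.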

- Sending JSON data with a POST request to an API

For example, a request to OpenAI's text completions API, which accepts and returns JSON, might look like this:

curl -X POST -H "Content-Type: application/json" \
  -d '{"model": "gpt-3.5-turbo-instruct", "prompt": "Translate the following English text to French: \"Hello, world!\"", "max_tokens": 60}' \
  -H "Authorization: Bearer YOUR_OPENAI_API_KEY" \
  https://api.openai.com/v1/completions

This would send a text translation prompt to OpenAI's language model.

- Retrieving JSON with a GET request

curl -X GET \
  -H "Accept: application/json" \
  -H "Authorization: Bearer YOUR_APIFY_TOKEN" \
  "https://api.apify.com/v2/datasets/datasetId/items"

Replace "YOUR_APIFY_TOKEN" with your Apify API token and "datasetId" with the ID of the dataset you want to retrieve items from.

In this command, the -X GET flag makes the method explicit (GET is also cURL's default). The -H "Accept: application/json" header tells the server you expect JSON back; a GET request carries no body, so a Content-Type header would have nothing to describe. The -H "Authorization: Bearer YOUR_APIFY_TOKEN" header includes the authorization token in the request. The URL specifies the endpoint for retrieving items from a dataset in the Apify platform.

- Making requests to the Apify API

To start a crawler on Apify using cURL, you'd structure a POST request like this:

curl -X POST -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_APIFY_TOKEN" \
  -d '{"startUrls":[{"url":"https://wikipedia.org/wiki/Artificial_intelligence"}]}' \
  "https://api.apify.com/v2/acts/ACTOR_ID_HERE/runs"

This command starts a run of an Actor on Apify and passes Wikipedia's Artificial intelligence page as its start URL; what gets scraped from it depends on the Actor you run. Replace "ACTOR_ID_HERE" with the ID of the Actor you want to run and "YOUR_APIFY_TOKEN" with your Apify API token. Remember, always keep your API tokens secret to prevent unauthorized access.

These are just a few examples of how you can use cURL. The possibilities are endless, limited only by your imagination and technical skills.

cURL advanced functionalities

Headers and cookies

You can send custom headers for authentication or to change the user agent, and manage cookies for persistent logins or scraping. Example: to log in to a forum with cURL, send a POST request with your login credentials in the request body, save the session cookie with -c cookies.txt, and send it back on later requests with -b cookies.txt.

Progress monitoring

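Keep track of transfers with cURL's default progress meter, which shows transfer speed and estimated time remaining, or switch to a simple bar with -# (--progress-bar). The -w (--write-out) option prints statistics once a transfer finishes. Example: downloading a large file? Add -w "%{speed_download} bytes/s\n" to print the average download speed when it completes.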
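Here is a minimal sketch of -w in action. To keep it runnable without a network connection, it assumes a Unix-like shell and "downloads" a locally generated 1 MiB file through file://; the file name big.bin is arbitrary.

```shell
#!/bin/sh
set -eu
# Build a 1 MiB local file to "download" via file://, so no network is needed.
workdir=$(mktemp -d)
dd if=/dev/zero of="$workdir/big.bin" bs=1024 count=1024 2>/dev/null

# -s hides the live progress meter, -o /dev/null discards the body, and -w prints
# statistics after the transfer: size in bytes, average speed in bytes per second,
# and total time in seconds.
curl -s -o /dev/null \
  -w 'size: %{size_download} bytes, speed: %{speed_download} B/s, time: %{time_total} s\n' \
  "file://$workdir/big.bin"

# For a live progress bar on a real download, use -# (--progress-bar) instead:
#   curl -# -o big-copy.bin https://example.com/big.bin
```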

Authentication techniques

Accessing protected APIs? cURL supports Basic (the default with -u), Digest (--digest), and NTLM (--ntlm) authentication, and can send OAuth 2.0 bearer tokens (--oauth2-bearer). Example: accessing a company's internal API? Pass -u username:password and cURL builds the Basic authentication header for you. Keep in mind that Basic credentials are only Base64-encoded, not encrypted, so send them over HTTPS.
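Base64 is an encoding, not encryption: anyone who can see the header can decode it, which is why Basic authentication belongs on HTTPS only. The sketch below needs only a POSIX shell and the base64 utility and builds the same header that curl -u would send; user, pass, and api.example.com are placeholders.

```shell
#!/bin/sh
set -eu
# Placeholder credentials: substitute your own, ideally read from an environment
# variable or a .netrc file rather than typed into scripts.
user='user'
pass='pass'

# Basic authentication sends "Authorization: Basic <base64 of user:pass>".
# tr strips the newline (and any line wrapping) that base64 adds.
token=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
echo "Authorization: Basic $token"

# With a real endpoint these two requests would send the same header
# (api.example.com is a placeholder, so they are not run here):
#   curl -u "$user:$pass" https://api.example.com/private
#   curl -H "Authorization: Basic $token" https://api.example.com/private
```

Running it prints Authorization: Basic dXNlcjpwYXNz, which decodes straight back to user:pass.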

Data formats and parsing

Work with formats like JSON and XML. Pipe cURL's output into tools such as jq for JSON, or use regular expressions, to navigate and extract the information you need. Example: scraping product prices from an online store? If the store serves its product data as JSON (for example, from an API endpoint its pages call), fetch that with cURL and use jq to extract the prices; plain HTML needs an HTML parser instead.

Security testing

Ethical hackers use cURL for vulnerability assessments: checking for misconfigured TLS/SSL certificates (cURL verifies certificates by default and reports problems), probing for exposed directory listings, or testing how login endpoints handle weak passwords, to help websites identify and patch security weaknesses. Remember: always act responsibly and with proper authorization.

Monitoring and alerting

Monitor server health and API uptime by running cURL from a script or a scheduler such as cron, and trigger alerts when things go south. Imagine receiving an SMS notification if your website goes down, giving you precious time to react.
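A minimal uptime check might look like the sketch below. It assumes a POSIX shell; check_url and alert are hypothetical helper names, and alert only prints a message where a real setup would call an SMS, email, or chat service.

```shell
#!/bin/sh
# check_url URL: exit status 0 if the URL can be fetched, non-zero otherwise.
#   -f         treat HTTP 4xx/5xx responses as failures
#   -sS        stay quiet, but still print errors
#   --max-time give up after 10 seconds so a hung server can't stall the check
check_url() {
  curl -fsS -o /dev/null --max-time 10 "$1"
}

# alert MESSAGE: hypothetical hook; replace the echo with your SMS/email/chat call.
alert() {
  echo "ALERT: $1" >&2
}

# Check every URL passed on the command line, e.g.
#   ./check.sh https://example.com https://api.example.com/health
for url in "$@"; do
  if check_url "$url"; then
    echo "OK: $url"
  else
    alert "$url is down"
  fi
done
```

Scheduling the script with cron (for example, every five minutes) turns it into basic monitoring.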

Integration with other tools

Pipe cURL's output to grep for data filtering, use it within Python scripts, or even send data directly to your database.
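As a small example of piping, the sketch below pulls every link target out of a page. It assumes a Unix-like shell and uses a locally created page.html, fetched via file://, as a stand-in for a real website.

```shell
#!/bin/sh
set -eu
# A small local HTML page stands in for a real website (served via file://).
workdir=$(mktemp -d)
cat > "$workdir/page.html" <<'EOF'
<html><body>
<a href="https://apify.com">Apify</a>
<a href="https://curl.se">curl</a>
</body></html>
EOF

# -s hides the progress meter so only the page body reaches the pipe;
# grep -o prints each href="..." match on its own line.
curl -s "file://$workdir/page.html" | grep -o 'href="[^"]*"'
```

For anything beyond quick filtering, a real HTML parser is more robust than grep.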

Escaping user input

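Avoid shell injection vulnerabilities by always escaping user input. On the command line, quote every variable and let --data-urlencode percent-encode values sent as form data or query parameters; in C programs that use libcurl, curl_easy_escape does the URL-encoding.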
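The shell side of this advice can be shown without a server. In the sketch below, user_input is a hypothetical untrusted value and count_args is a hypothetical helper that reports how many arguments it received. Because the variable is quoted and never re-parsed by a shell, the ; and & inside it stay part of the data. The curl lines are comments because example.com/search is a placeholder.

```shell
#!/bin/sh
set -eu
# Hypothetical untrusted input, e.g. taken from a web form.
user_input='cats & dogs; echo INJECTED'

# Hypothetical helper: prints how many arguments it was given.
count_args() { echo "$#"; }

# Safe: the quoted expansion arrives as exactly one argument, so ";" and "&"
# are plain characters, not shell syntax. Prints 1.
count_args "q=$user_input"

# Unsafe (do not do this): gluing input into a string that a shell re-parses, e.g.
#   sh -c "curl https://example.com/search?q=$user_input"
# would run "echo INJECTED" as a separate command.

# With curl, keep the value quoted and let --data-urlencode percent-encode it
# (-G appends it to the URL as a query string):
#   curl -G --data-urlencode "q=$user_input" https://example.com/search
```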

HTTPS over HTTP

Don't send your data on postcards! Use HTTPS whenever possible for secure, encrypted communication.
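You can also make cURL refuse unencrypted transfers outright. In this sketch, --proto '=https' allows only HTTPS for the command, so the plain http:// URL (example.com is just a placeholder) is rejected before any connection is attempted; --proto-redir '=https' applies the same rule to redirects followed with -L.

```shell
#!/bin/sh
# Only HTTPS is allowed, so this http:// request is refused up front and
# curl exits with a non-zero status instead of sending anything in clear text.
status=0
curl --proto '=https' --proto-redir '=https' -sS -L -o /dev/null \
  http://example.com/ || status=$?
echo "curl exit code: $status"
```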

cURL alternatives

While cURL is a powerful and popular tool, there are other alternatives available for specific needs. Here are some popular alternatives:

- Wget: A free and open-source command-line tool similar to cURL, primarily used for downloading files.

- HTTPie: A user-friendly command-line HTTP client.

- Insomnia: A comprehensive GUI-based API client for developers.

- Postman: A popular desktop application for testing APIs and making HTTP requests. It provides a user-friendly interface and advanced features for managing API requests and responses.

- REST Assured: A Java library for testing REST APIs. It provides a fluent and expressive API for making HTTP requests and validating responses.

- Paw: A paid desktop application for making HTTP requests and testing APIs. It offers a user-friendly interface and advanced features like environment variables, data mocks, and pre-request scripts.

FAQ

What's the difference between GET and POST requests?

A GET request is used to retrieve data from a server, while a POST request is used to send data to a server.

What is the difference between HTTP and HTTPS?

HTTP is the unencrypted version of the protocol, while HTTPS is the encrypted version. HTTPS is more secure and should be used whenever possible.

What are some security considerations when using cURL?

Always be careful when using cURL with sensitive data. It is important to use the HTTPS protocol whenever possible and to avoid sending sensitive information in plain text.