70 years of scientific labor, countless careers, billions upon billions of dollars of investment, hundreds of thousands of scientific papers, and AI supercomputers running at top speed for months. And the AI that the world finally gets is...prompt completion.

-- Dr. Michael Wooldridge, author of A Brief History of Artificial Intelligence

I need to start with a disclaimer: I’m not an AI expert and never will be. I’m just a writer standing in front of an LLM, asking it to stop calling itself an AI language model. However far removed from artificial intelligence my background in late antiquity may be, I’m interested enough in machine learning to know this much: large language models, like the ones behind ChatGPT, are not real AI. Of course, we all have to call them that because that’s how they’ve been marketed, and there’s nothing we can do to unload that smoking gun. But that doesn’t mean we always have to pretend we don’t know any better, right?

Why are LLMs called AI?

Marketing hype and histrionics aside, one possible reason the term AI has become irreversibly linked with LLMs is the fact that ChatGPT is the product of OpenAI. It’s right there in the name, after all.

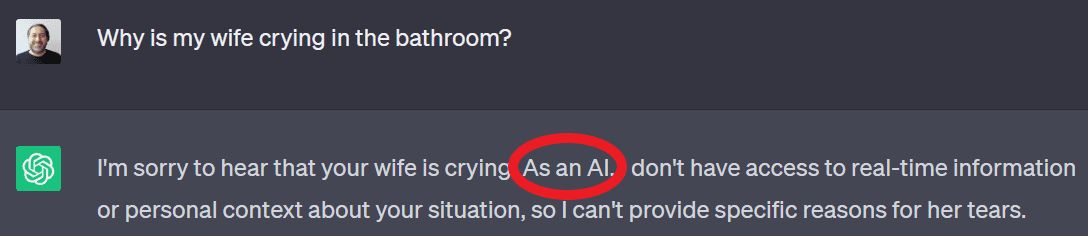

It doesn't help that ChatGPT identifies as an AI whenever it can't answer your question. For example:

Another reason is that people tend to confuse machine learning (ML) and its sub-field, deep learning (DL), with artificial intelligence. Though ML is considered an area of AI, there’s a key difference.

AI encompasses the notion of machines imitating human intelligence, while machine learning is about teaching machines to perform specific tasks with accuracy by identifying patterns. LLMs fall into the second category.

Why LLMs are not AI

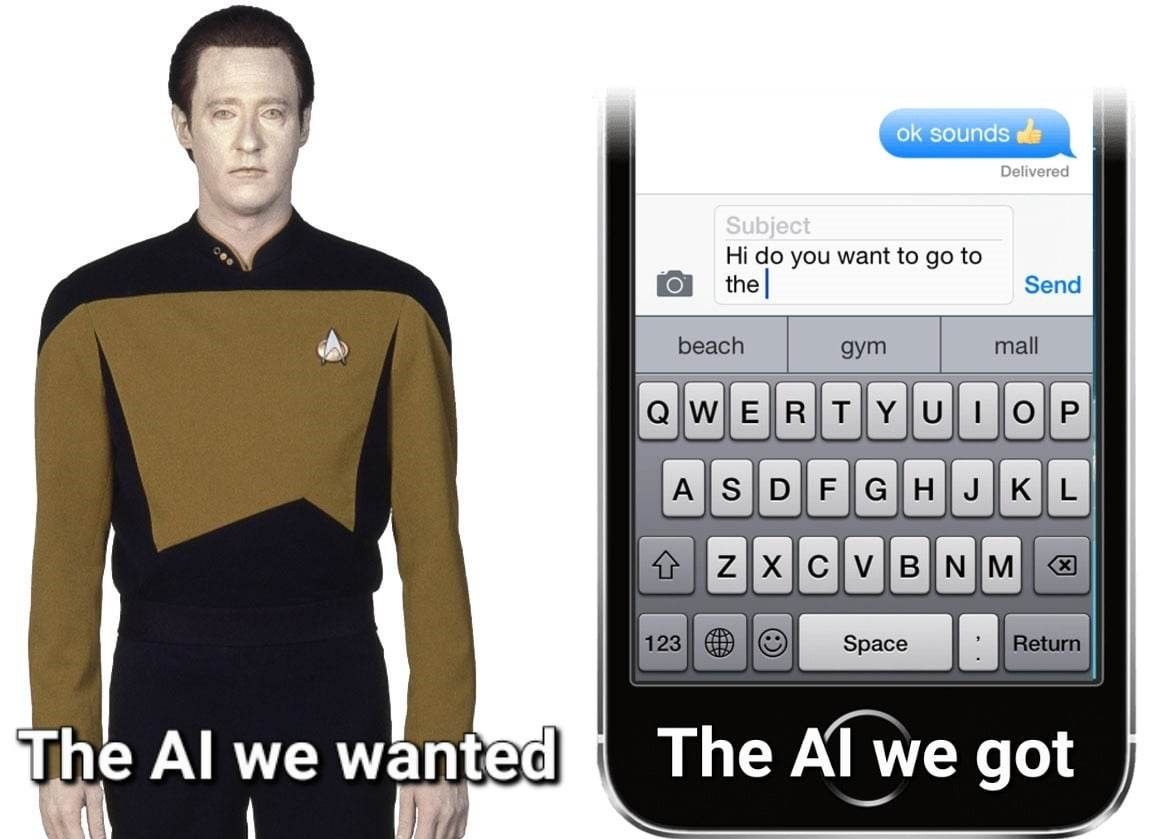

It might appear that LLMs have learned to imitate the human trait of overconfidence. The plethora of amusing examples of ChatGPT giving false information with singular self-certainty suggests that. But in reality, LLMs are nothing more than text predictors. They generate natural text by predicting the most likely next word, much like your smartphone does when it offers to finish your sentences for you. Only they do it on a massive scale thanks to advancements in LLM development services. They perform this single task quite creditably a lot of the time. Yes, there are many cases of epic ChatGPT fails, but on the whole, it does its job very well. That’s because it’s what we’ve trained it to do, and it learned to do it by ingesting enormous amounts of text.
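To make the smartphone comparison concrete, here is a toy sketch of next-word prediction in Python. It is not how ChatGPT works internally: real LLMs use neural networks with billions of parameters rather than a lookup table of word counts. The function names (`train_bigram_model`, `predict_next`, `complete`) and the tiny corpus are invented for illustration. Still, the basic loop of "look at the text so far, pick a likely next word, repeat" is the same idea.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def complete(model, prompt, max_words=5):
    """Extend the prompt one predicted word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break  # the model has no data for this word, so it stops
        words.append(nxt)
    return " ".join(words)


# Hypothetical miniature "training set"; a real model ingests hundreds of gigabytes.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "sat"))
print(complete(model, "the dog", max_words=3))
```

Note that the sketch has no idea what a dog or a rug is. It only knows which words tended to follow which in the text it was given, which is the point of the comparison.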

Why ChatGPT knows more than you

People might be under the impression that the reason AI has progressed so fast in the last few decades is that machines have become intelligent. However, what is behind this progress is a lot more boring than that. Yes, neural networks have vastly improved in recent years, but the main reason for this is the sheer volume of data that has become available and the computing power needed to train neural networks.

The data used to train GPT-3, for example, came to roughly 570 gigabytes of filtered text (more than any of us could ever read in a lifetime). Where did this text come from? Nearly all of it came from the web - that is to say, it came from us - and it was retrieved and downloaded for natural language processing through a technique called web scraping.

Considering that ChatGPT was trained on one of the largest volumes of text data ever assembled, with the help of the best data labeling tools, it’s not surprising that it can be very convincing. But it is only an illusion of intelligence. Competence in written communication does not mean that it is capable of anything else.

What has surprised many, however, is its ability to do things we didn’t expect it to learn from natural language processing. When you train a large language model with 175 billion parameters in its neural network on a huge swath of the web (that was GPT-3; OpenAI has not disclosed the size of GPT-4, though unofficial estimates put it at around 1 trillion), you’re bound to be surprised by just how good it is. But let’s not get carried away by interpreting that as a sign of intelligence.

Is this as good as it gets?

For sure, large language models are going to get even better over time. Some speculate that LLMs mark the beginning of a dystopian future ruled by machines, and others see them as a promising road to AGI (artificial general intelligence), but there are also those who consider them an unfortunate detour. With so much time, money, and resources being invested in competing with OpenAI and all the possibilities it has opened up, let’s hope that LLMs don’t slow down progress in other worthwhile AI pursuits.