Among the dozens of scraping tools that have appeared in the last two years, two stand out for their AI‑centric design philosophies:

- Crawl4AI – an open‑source Python library that lets you host and extend every part of the crawler yourself.

- Firecrawl – an API‑first crawler that turns any URL into LLM‑ready Markdown/JSON in seconds.

We’ll compare the two stacks across architecture, developer experience, scaling, extraction intelligence, ecosystem, and pricing.

At the end, we'll explain why you should consider Apify as an alternative to both.

Crawl4AI vs. Firecrawl at a glance

| Capability | Crawl4AI | Firecrawl |
|---|---|---|
| Core model | OSS Python library you can run anywhere | API‑first SaaS (credits) + AGPL self‑host fork |
| Extraction style | Selector‑based or auto‑learned (“Adaptive Crawling”) | Zero‑selector natural‑language prompts (“Extract”) |
| Dynamic‑content handling | Playwright browsers with Virtual Scroll support | Pre‑warmed headless Chromium; service decides HTTP fetch vs. browser on the fly |
| Built‑in intelligence | Adaptive link scoring, async URL seeder, PDF parsing | Automatic JS detection, markdown token reduction, FIRE‑1 navigation agent |
| Scaling model | You control Docker/K8s, queues, proxies | Global fleet of browser workers; per‑plan concurrency caps |
| Vector/RAG loaders | LangChain, LlamaIndex community adapters | Official LangChain & LlamaIndex loaders with chunking + metadata |
| Pricing headline | Free, OSS; infra costs only | 1 page = 1 credit; 100k pages = $83/mo on annual Standard plan |
| License | MIT | Hosted API: commercial; core engine: AGPL‑3.0 |
| Latest release | v0.7.1 (17 Jul 2025) | v1.15.0 (4 Jul 2025) |

Philosophy and architecture

Crawl4AI is tailored for engineers building AI-centered data pipelines. It was born in the open‑source community as “Scrapy for LLMs.” Everything — task queues, browser management, storage layers — lives in your repo or cluster. Recent “Adaptive Intelligence” releases add pattern‑learning so the crawler learns reliable selectors over time, but the guiding idea is total transparency and hackability.

Firecrawl comes from the opposite direction: it hides the crawling machinery behind a single call to one of its /scrape, /crawl, /map, or /extract endpoints. The service guesses whether a simple HTTP fetch will do or a browser is needed, runs the job in its own fleet, caches the result, and returns LLM‑friendly markdown or JSON. It can even generate an llms.txt file that collapses an entire site into a single document for downstream embedding.
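Firecrawl doesn't publish its exact fetch‑vs‑browser logic, but a minimal sketch shows the kind of signal such a decision can rest on. Everything here is hypothetical: `needs_browser`, the 200‑character threshold, and the "little text plus scripts" rule are our own illustration, not Firecrawl's implementation. It uses only Python's standard library.

```python
from html.parser import HTMLParser


class _PageStats(HTMLParser):
    """Counts visible text characters and <script> tags in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.text_chars = 0
        self.script_tags = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text a reader would see, not script or style bodies
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def needs_browser(html: str, min_text_chars: int = 200) -> bool:
    """Hypothetical heuristic: little server-rendered text plus at least one
    script suggests a client-side app that only renders in a real browser."""
    stats = _PageStats()
    stats.feed(html)
    stats.close()
    return stats.text_chars < min_text_chars and stats.script_tags > 0
```

A real service would likely combine several signals (framework markers, response headers, past results for the same domain); this single rule will misclassify some pages.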

Developer experience and customization

Crawl4AI is a Python‑first library with a clean async API and a CLI. Its behavior is driven by configuration objects such as CrawlerRunConfig, VirtualScrollConfig, and LinkPreviewConfig, which let you bolt on deep features without subclass soup, and custom hooks let you run JavaScript before a crawl or manage session state via cookies and local storage. Because it’s a library, you can inject your own Playwright scripts, add bespoke back‑off logic, or wire the crawler into Celery/Redis queues. Extraction strategies include CSS selectors, XPath, regex, and LLM‑based parsing, so you can mix and match depending on the complexity of your domain.
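Here's a minimal sketch of the kind of bespoke back‑off logic you might wrap around a crawl call. It is plain `asyncio` and doesn't depend on Crawl4AI itself; `with_backoff` and its parameters are hypothetical names chosen for this example.

```python
import asyncio
import random
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def with_backoff(
    fetch: Callable[[], Awaitable[T]],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
) -> T:
    """Call `fetch` until it succeeds, sleeping with exponential back-off
    and full jitter between failed attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return await fetch()
        except Exception:
            if attempt == max_attempts:
                raise  # out of retries: surface the last error to the caller
            # Ceiling doubles per attempt (base, 2x, 4x, ...) up to max_delay;
            # a random delay below it spreads retries from many workers apart.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            await asyncio.sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

With Crawl4AI you might pass something like `lambda: crawler.arun(url=url, config=run_config)`. Check the current docs, though: a failed crawl can come back as a result object with `success` set to `False` rather than as an exception, in which case you'd wrap the call in a small coroutine that raises when that flag is false.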

Firecrawl is language-agnostic by design, accessible via a REST API, and has official SDKs in Python and Node.js (with community support for Go, Rust, and C#). The /extract endpoint lets users define schemas in plain English — no selectors needed. You might say “Extract the title and price from this page,” and get structured JSON back. A playground interface and template exports help developers rapidly iterate before dropping code into production.
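To show what "schemas in plain English" looks like on the wire, here's a sketch that builds (but doesn't send) an /extract request with Python's standard library. The endpoint URL and the `urls`, `prompt`, and `schema` field names reflect our reading of Firecrawl's v1 REST docs and may change between API versions; `build_extract_request` and the API key are placeholders.

```python
import json
import urllib.request
from typing import List, Optional

# Assumed endpoint based on Firecrawl's public v1 REST docs; verify before use.
FIRECRAWL_EXTRACT_URL = "https://api.firecrawl.dev/v1/extract"


def build_extract_request(
    api_key: str,
    urls: List[str],
    prompt: str,
    schema: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks for structured
    data described in plain English."""
    payload: dict = {"urls": urls, "prompt": prompt}
    if schema is not None:
        payload["schema"] = schema  # optional JSON Schema for the output shape
    return urllib.request.Request(
        FIRECRAWL_EXTRACT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it is a matter of `urllib.request.urlopen(req)`. Extraction can run as an asynchronous job, so check the API reference for how results are returned before relying on the response shape.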

Infrastructure and autoscaling

Crawl4AI gives you full control over infrastructure, which is ideal for teams that want deep observability and tight integration with internal systems. You run the browsers yourself, typically Playwright‑driven Chromium in Docker containers or Kubernetes pods. That flexibility lets you tailor everything from headless Chrome flags to CPU allocation, but it also means managing retry logic, scaling policies, and proxy pools yourself.
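A minimal sketch of the proxy‑pool bookkeeping you'd own in a Crawl4AI deployment: round‑robin rotation that benches a proxy for a cooldown after a failure. `ProxyPool` is a hypothetical helper, not part of Crawl4AI, and any proxy URLs you feed it are placeholders.

```python
import itertools
import time
from typing import Callable, Dict, List, Optional


class ProxyPool:
    """Round-robin proxy rotation that benches a proxy for a cooldown
    period after it fails."""

    def __init__(
        self,
        proxies: List[str],
        cooldown_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        if not proxies:
            raise ValueError("need at least one proxy")
        self._size = len(proxies)
        self._cycle = itertools.cycle(list(proxies))
        self._benched_until: Dict[str, float] = {}
        self._cooldown = cooldown_s
        self._clock = clock  # injectable so tests can control time

    def acquire(self) -> Optional[str]:
        """Return the next healthy proxy, or None if all are cooling down."""
        now = self._clock()
        for _ in range(self._size):
            proxy = next(self._cycle)
            if self._benched_until.get(proxy, 0.0) <= now:
                return proxy
        return None

    def report_failure(self, proxy: str) -> None:
        """Take a proxy out of rotation until its cooldown expires."""
        self._benched_until[proxy] = self._clock() + self._cooldown
```

Round‑robin with a fixed cooldown is the simplest policy; production pools often add per‑domain rate limits, health scores, or geo selection on top.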

Firecrawl, on the other hand, is fully managed. Browser infrastructure is abstracted away: you send a URL, and the Firecrawl platform decides whether a basic HTTP fetch or a full browser session is needed. It runs headless Chromium instances as required, using a global browser fleet that scales horizontally based on your concurrency plan. Proxy rotation, geo-targeting, and stealth techniques are built in — no config required. The system includes per-request logs, p95 latency tracking, and automatic retries. FIRE‑1, Firecrawl’s built-in navigation agent, can even solve simple captchas autonomously. For teams that want zero infrastructure headaches, this is a compelling trade-off.

| Aspect | Crawl4AI | Firecrawl |
|---|---|---|
| Who manages the browsers? | You (Docker images or K8s pods) | Firecrawl |
| Proxy rotation & geotargeting | Bring your own; middleware examples in docs | Built‑in stealth proxy network with geo‑hints |
| Monitoring | Expose Prometheus metrics or hook into ELK | Dashboard with per‑request logs, p95 latency |
| Retries & captchas | Library helpers + your own back‑off code | Automatic, plus FIRE‑1 agent can solve basic captchas |
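Whichever side runs the dashboard, "p95 latency" is just a percentile over per‑request timings. This sketch uses the nearest‑rank definition; the function name is ours. Prometheus, for example, estimates quantiles from histogram buckets with `histogram_quantile`, so its numbers can differ slightly from an exact computation like this one.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are less than or equal to it."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]
```

With only a handful of requests, p95 collapses onto the single slowest one, which is why latency percentiles are usually computed over a rolling window of many requests.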

Extraction intelligence

Crawl4AI blends classic scraping precision with emerging adaptive techniques. It’s built on the idea that reliable extraction often needs human‑guided heuristics, so you can either hardcode CSS/XPath selectors or let the crawler learn its own over time. The “Adaptive Crawling” engine tracks selector confidence scores, detects breakages, and evolves with each run. It’s particularly strong for domains with stable layout patterns, where investing in selector tuning pays dividends. Full Playwright support means it handles SPAs and infinite scrolling natively, and its PDF pipeline extracts rich text from documents. If scraped pages link to documents that must be archived to a standard such as PDF/A, plan a separate validation and conversion step before storage. However, token management and conversion efficiency are up to you: markdown output is optional, and no token budget is applied by default.
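To make "selector confidence scores" concrete, here's a minimal sketch of one way to track them. It is not Crawl4AI's Adaptive Crawling algorithm, whose internals we don't reproduce here; `SelectorTracker`, the Laplace‑smoothed score, and the 0.5 threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SelectorStats:
    hits: int = 0
    misses: int = 0

    @property
    def confidence(self) -> float:
        # Laplace-smoothed success rate: an untried selector starts at 0.5
        return (self.hits + 1) / (self.hits + self.misses + 2)


@dataclass
class SelectorTracker:
    """Hypothetical tracker that ranks candidate selectors for one field
    by how often they have matched in past runs."""

    candidates: List[str]
    stats: Dict[str, SelectorStats] = field(default_factory=dict)

    def record(self, selector: str, matched: bool) -> None:
        s = self.stats.setdefault(selector, SelectorStats())
        if matched:
            s.hits += 1
        else:
            s.misses += 1

    def confidence(self, selector: str) -> float:
        return self.stats.get(selector, SelectorStats()).confidence

    def best(self, min_confidence: float = 0.5) -> Optional[str]:
        """Most trusted selector, or None when every candidate looks broken
        (a signal to re-learn selectors or alert a human)."""
        # sorted() is stable, so ties keep the original candidate order
        ranked = sorted(self.candidates, key=self.confidence, reverse=True)
        if ranked and self.confidence(ranked[0]) >= min_confidence:
            return ranked[0]
        return None
```

Smoothing keeps one lucky (or unlucky) run from swinging a selector's score to 0 or 1; a real system might also weight recent runs more heavily so it notices layout changes sooner.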

Firecrawl flips the paradigm: selectors are replaced with natural-language prompts like “Extract the blog title and author.” Its models interpret the DOM and return structured JSON, skipping brittle markup dependencies. This makes Firecrawl ideal for domains with varied layouts or rapid change — the crawler adapts with no code updates. Pre‑warmed browsers mean dynamic JS sites load quickly, and the service automatically downgrades to lightweight fetches when possible. Token-wise, Firecrawl outputs markdown by default, aggressively minimizing noise and cutting LLM token loads by up to 67%. Its format‑agnostic engine also parses PDFs, DOCX files, and Google Docs without plugins or extra config.
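Why does markdown output cut tokens so sharply? Most bytes in raw HTML are markup, not content. This sketch strips tags (a simpler step than full Markdown conversion, which also keeps headings and links) and compares rough token estimates. The ~4‑characters‑per‑token ratio is a common rule of thumb for English text, not any specific tokenizer, and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects visible text, dropping tags, attributes, scripts, and styles."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list = []
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def visible_text(html: str) -> str:
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def approx_tokens(text: str) -> int:
    # Rule of thumb of ~4 characters per token for English text;
    # real tokenizers vary, so treat this as a rough estimate only.
    return (len(text) + 3) // 4
```

On real pages, navigation menus, ads, and inline scripts inflate the HTML side further; the ≈ 67% figure quoted above is Firecrawl's own measurement, not something this toy reproduces.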

| Feature | Crawl4AI | Firecrawl |
|---|---|---|
| Dynamic JS sites | Full Playwright; Virtual Scroll grabs infinite feeds | Service decides fetch vs. browser; browser instances pre‑warmed for latency |
| Selectors | Adaptive Crawling learns and adjusts CSS/XPath selectors (confidence scores, history) | Skip selectors entirely; ask in plain English, and models parse the DOM into your schema |
| Link prioritization | 3‑layer relevance scoring before queueing | /crawl heuristics favor in‑domain links; /search endpoint can spider SERP results in one call |
| Document formats | PDF parsing pipeline added in v0.7.0 | Built‑in PDF, DOCX, Google Docs handlers |
| Token efficiency | Optional HTML→Markdown conversion; no token budgeting | Markdown by default; p75 speeds improved and token count cut by ≈ 67% for RAG |
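Crawl4AI's 3‑layer link scoring isn't reproduced here, but the general pattern (score each discovered link, then crawl the best first) fits in a few lines. The three layers below (same host, keywords in the URL path, keywords in the anchor text) and their weights are hypothetical.

```python
import heapq
from typing import Iterable, List, Tuple
from urllib.parse import urlparse


def score_link(url: str, anchor: str, seed_host: str, keywords: Iterable[str]) -> float:
    """Hypothetical 3-layer score: host match, URL-path keywords, anchor-text keywords."""
    kws = [k.lower() for k in keywords]
    parsed = urlparse(url)
    score = 0.0
    if parsed.hostname == seed_host:  # layer 1: stay on the seed site
        score += 1.0
    path = parsed.path.lower()
    score += 0.5 * sum(k in path for k in kws)  # layer 2: keywords in the URL path
    text = anchor.lower()
    score += 0.25 * sum(k in text for k in kws)  # layer 3: keywords in anchor text
    return score


def prioritize(
    links: Iterable[Tuple[str, str]], seed_host: str, keywords: Iterable[str], limit: int
) -> List[str]:
    """Return up to `limit` URLs, highest score first (ties keep discovery order)."""
    kws = list(keywords)
    heap: List[Tuple[float, int, str]] = []
    for order, (url, anchor) in enumerate(links):
        # heapq is a min-heap, so push the negated score
        heapq.heappush(heap, (-score_link(url, anchor, seed_host, kws), order, url))
    return [heapq.heappop(heap)[2] for _ in range(min(limit, len(heap)))]
```

Scoring before queueing means low‑value links never consume a browser slot, which matters most when each page costs a full Playwright render.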

AI pipeline integration

Both Crawl4AI and Firecrawl have loaders for LangChain and LlamaIndex, but Firecrawl’s are official, include chunk sizing and metadata, and handle pagination transparently. Crawl4AI’s loaders live in community repos: perfectly usable, but you maintain them.
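Firecrawl's official loaders do the chunking for you; with a community Crawl4AI loader, this step may be yours to maintain. Here is a minimal sketch of fixed‑size chunking with overlap and source metadata. The names are hypothetical, and production splitters (LangChain's `RecursiveCharacterTextSplitter`, for instance) prefer to break on separators such as paragraphs and headings rather than at arbitrary characters.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    text: str
    metadata: Dict[str, object]


def chunk_markdown(
    markdown: str, source_url: str, chunk_size: int = 1000, overlap: int = 200
) -> List[Chunk]:
    """Split text into overlapping fixed-size character windows and tag
    each window with where it came from."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    if not markdown:
        return []
    step = chunk_size - overlap
    # Skip a final window that would hold only text already covered
    # by the previous window's overlap
    starts = range(0, max(len(markdown) - overlap, 1), step)
    return [
        Chunk(
            text=markdown[start:start + chunk_size],
            metadata={"source": source_url, "chunk_index": i, "start_char": start},
        )
        for i, start in enumerate(starts)
    ]
```

The overlap repeats the tail of each chunk at the head of the next, so a sentence cut at a boundary still appears whole in at least one chunk when it fits within the overlap.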

Firecrawl also surfaces Zapier/Make blocks, so non‑developers can drop scraped data into Sheets or Slack; Crawl4AI relies on your code.

Ecosystem and community

Crawl4AI has a vibrant community on GitHub and Discord. It doesn’t offer enterprise support SLAs, but the project is well‑loved by developers and receives frequent feature releases and documentation contributions.

Firecrawl is backed by an ecosystem of SDKs, documentation, and user templates. Support comes through community Discord channels, with SLAs on paid plans and enterprise‑level integrations. Developers point to its speed and productivity gains.

| Metric | Crawl4AI | Firecrawl |
|---|---|---|
| GitHub stars (July 2025) | 49k ★ | 43k ★ |
| Release cadence | Weekly pre‑releases + monthly tagged versions | Bi‑weekly SaaS deploys; monthly open‑source sync |
| Self‑hosting | Docker image & Helm chart; MIT license | firecrawl-simple fork strips billing logic; AGPL‑3.0 |
| Community hangouts | Discord, monthly meet‑ups | Discord, open office hours, YC alumni Slack |

Pricing and licensing

Crawl4AI is fully open source under the MIT license. There are no platform fees, but you must supply your own infrastructure, proxy service, LLM models, and DevOps support, so you still carry variable operational costs.

Firecrawl uses a predictable credit model: 1 page ≈ 1 credit. Paid plans start at $16/month. Extraction tasks that go beyond simple scraping consume additional credits or tokens, and Firecrawl offers dedicated “Extract” plans ranging from $89 to $719/month, depending on token volume.

Firecrawl's hosted service is closed source. The core engine is licensed under AGPL‑3.0, so if you self‑host a modified version, you must make your modified source available both when you redistribute it and when users interact with it over a network.

| Monthly volume | Crawl4AI cost | Firecrawl cost |
|---|---|---|
| 3k pages | Infra cost only (≈ $2 of AWS on a micro‑instance) | $16 (Hobby plan) |
| 100k pages | EC2 c5.xlarge + proxies ≈ $70 (DIY) | $83 (Standard plan) |
| 500k pages | K8s cluster + proxies ≈ $250, but labor‑intensive | $333 (Growth plan) |
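The table is easier to compare as an effective price per 1,000 pages. This worked example simply divides each monthly figure by its volume; it assumes the full volume is used every month and, like the table, leaves out engineering time on the DIY side.

```python
# Monthly figures from the pricing table above (July 2025). The Crawl4AI
# column is a rough DIY infrastructure estimate that excludes engineering time.
MONTHLY_COST_USD = {
    3_000: {"crawl4ai": 2.0, "firecrawl": 16.0},
    100_000: {"crawl4ai": 70.0, "firecrawl": 83.0},
    500_000: {"crawl4ai": 250.0, "firecrawl": 333.0},
}


def cost_per_1k_pages(monthly_cost: float, pages: int) -> float:
    """Effective USD per 1,000 pages, assuming the whole volume is used."""
    return monthly_cost / pages * 1000


for pages, costs in MONTHLY_COST_USD.items():
    per_1k = {tool: round(cost_per_1k_pages(c, pages), 2) for tool, c in costs.items()}
    print(f"{pages:>7,} pages: {per_1k}")
```

At 100k pages, Firecrawl works out to about $0.83 per 1,000 pages against roughly $0.70 for the DIY estimate; at 500k the figures are about $0.67 and $0.50. At 3k pages, the $16 Hobby plan costs about $5.33 per 1,000 pages, while the DIY micro‑instance is about $0.67.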

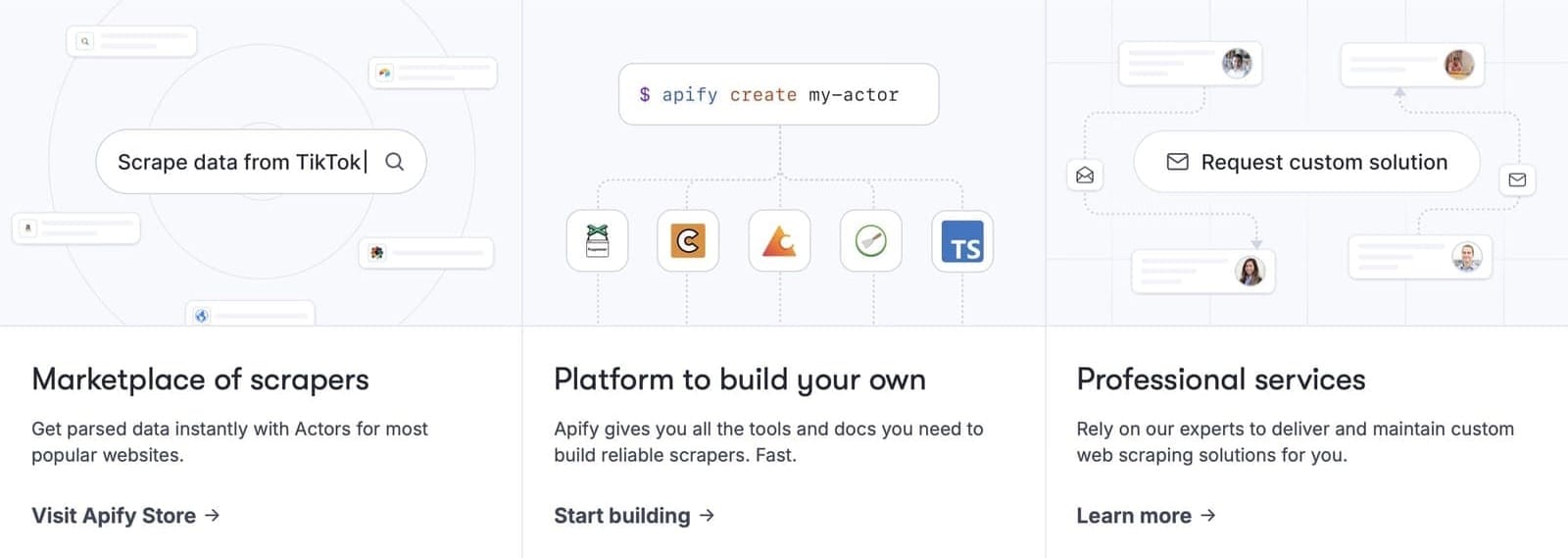

A flexible alternative to Crawl4AI and Firecrawl

If Crawl4AI is DIY and Firecrawl is API‑first, Apify sits in the middle: a platform plus a marketplace.

Here's why you should consider Apify as an alternative:

- 10,000+ ready‑made scrapers cover every kind of website, such as Amazon, Google Maps, LinkedIn, Apollo, TikTok, Reddit, X, Instagram, Facebook, and many more. All can be used with an intuitive UI (no coding needed).

- Managed global proxy network and CAPTCHA‑solving. Scrapers on the Apify platform have proxy rotation, browser fingerprinting, and CAPTCHA-solving baked in. No need to pay for third-party services.

- Serverless execution. You can code a scraper in JS/TS or Python, deploy it to the cloud, and Apify auto‑scales it much like AWS Lambda, with no servers to patch.

- First‑party export and integrations (S3, Firestore, Airtable, Kafka). Firecrawl ships LangChain/LlamaIndex loaders, but with Apify, you can also push to object storage or message queues.

- Multiple pricing modes. Classic compute‑unit billing plus a pay‑per‑event model, in which runs are billed for specific events such as “run started” rather than only for results, which can make large‑scale scraping cheaper.

- Free on‑ramp. $5 credits every month forever; pay a subscription only once you outgrow the free tier.

Note: This evaluation is based on our understanding of information available to us as of July 2025. Readers should conduct their own research for detailed comparisons. Product names, logos, and brands are used for identification only and remain the property of their respective owners. Their use does not imply affiliation or endorsement.