We’ve been scraping the web at Apify for almost eight years now. We’ve built our cloud platform, a popular open-source web scraping library, and hundreds of web scrapers for companies large and small. Thousands of developers from all over the world use our tech to build reliable scrapers faster, and many even sell them on Apify Store.

But we also failed countless times. We lost very large customers to dumb mistakes, and we struggled to unlock value for many of our users because of misaligned expectations. Perhaps we were naive, or just busy building a startup, but we often forgot that our customers were not experts in web scraping and that the things we thought obvious were new and unexpected to them.

The following 6 things you should know before buying or building a web scraper are a concentrated summary of what we’ve learned over the years and what we should’ve been telling our customers from Apify’s day one.

If you don’t have a lot of experience with web scraping, you might find some of these things unexpected, or even shocking. But trust me, it’s better to be shocked now than two months later, when your expensive scraper suddenly stops working.

1. Every website is different

Even though, to the human eye, a website looks like a website, underneath the buttons, images, and tables they’re all very different. These differences often arise from how data containers are structured within the website's code. That makes it hard to estimate how long a web scraping project will take, or how expensive it will be, before taking a thorough look at the target websites.

With regular web applications, the complexity of a project is determined by your requirements and the features you need. With web scrapers, it’s driven mostly by the complexity of the target website, which you have no control over. To determine the features the scraper will need to have, and also to identify potential roadblocks, developers must first analyze the website.

Common factors of web scraper complexity

Some of the most important factors that influence the cost and time to completion of a web scraping project are the following:

Anti-scraping protections

Even though web scraping is perfectly legal, websites often try to block traffic they identify as coming from a web scraper. It’s therefore essential for the scraper to appear as human-like as possible. This can be achieved using headless browsers and clever obfuscation techniques, but many of them increase the price of a project by orders of magnitude. A good initial analysis will identify the protections and provide a cost-efficient plan for overcoming them. Great web scraper developers are already familiar with most of the protections out there and they can reliably avoid being blocked.

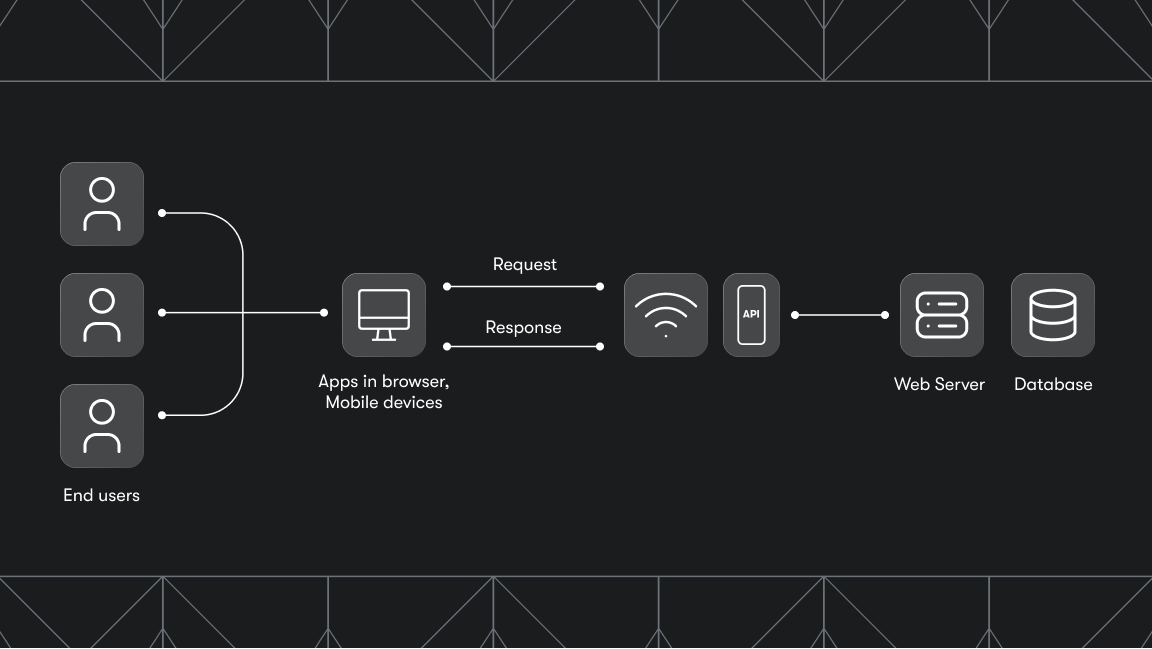

Architecture of the website

Some websites can be scraped very quickly and cheaply with simple HTTP requests and HTML or JSON parsing. Other websites require a headless browser to access their data. Headless browser scrapers need a lot of CPU power and memory to operate, which makes them 10-20 times more expensive to run than HTTP scrapers. A great web scraper developer will always try to find a way to use an HTTP scraper by reverse engineering the website’s architecture, but unfortunately, it’s not always possible.
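To make the HTTP approach more concrete, here’s a minimal sketch of the parsing half of an HTTP scraper, using only Python’s standard library. The `<h2 class="product-name">` structure and the sample HTML are assumptions made up for illustration; a real scraper would first download the page with an HTTP request and would have to match the target website’s actual markup.

```python
from html.parser import HTMLParser


class ProductNameParser(HTMLParser):
    """Collects the text of every <h2 class="product-name"> element.

    The tag and class name are hypothetical; a real website will differ.
    """

    def __init__(self):
        super().__init__()
        self._inside = False
        self.names = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None.
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "product-name" in classes:
            self._inside = True
            self.names.append("")

    def handle_data(self, data):
        if self._inside:
            self.names[-1] += data

    def handle_endtag(self, tag):
        if tag == "h2":
            self._inside = False


def extract_product_names(html):
    """Return the product names found in an HTML string."""
    parser = ProductNameParser()
    parser.feed(html)
    parser.close()
    return [name.strip() for name in parser.names]


# Stand-in for a page that an HTTP request would normally download.
SAMPLE_HTML = """
<div><h2 class="product-name">Blue mug</h2><span>$8</span></div>
<div><h2 class="product-name featured">Red mug</h2><span>$9</span></div>
"""

print(extract_product_names(SAMPLE_HTML))
```

No browser is started here, which is why this kind of scraper is so much cheaper to run. It only works when the data is already present in the HTML or JSON the server returns.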

How fast and often do you need the data

Speed and frequency of web scraping have a profound impact on complexity. The faster you scrape, the more difficult it is to appear like a human user. Not only do you need more IP addresses and more device fingerprints, but with very large-scale scraping, you also take on the non-trivial engineering overhead of managing and synchronizing tens or hundreds of concurrently running web scrapers.

Finally, there’s the issue of overloading the target website. Amazon.com can handle significantly more scraping than your local dentist’s website, but in many cases, it’s not easy to figure out how much traffic a website can handle safely if you want to scrape ethically. Great web scraper developers know how to pace their scrapers. And when they make a mistake, they can quickly identify it and immediately downscale the scraping operation.
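Pacing can be as simple as enforcing a minimum delay between requests and widening it when something looks wrong. The sketch below is one possible way to do that, not a description of any particular library; the `Throttle` class, its `slow_down` method, and the example URLs are all hypothetical.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now

    def slow_down(self, factor=2.0):
        """Downscale the scrape, e.g. after the website starts returning errors."""
        self.min_interval *= factor


# Hypothetical URLs; the actual HTTP request is left out on purpose.
throttle = Throttle(min_interval=0.01)
for url in ["https://example.com/a", "https://example.com/b"]:
    throttle.wait()
    print("would fetch", url)
```

The right interval depends entirely on the target website, so treat the number above as a placeholder rather than a recommendation.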

2. Websites change without asking

Web scrapers are different from traditional software applications because even the best web scraper can stop working at any moment, without warning. The question is not if, but when.

Web scrapers break because they are programmed to understand the structure of the websites they visit, and if the structure changes, the scraper will no longer be able to find the data it’s looking for. Sometimes a human can’t even spot the difference, but any time the website’s HTML structure, APIs, or other components change, it can cause a scraping disruption.

Professional web scraper programmers can reduce the chances of a scraper breaking, but never to zero. When a brand launches a full website redesign, the web scraper will need to be programmed again, from scratch. Reliable web scraping therefore requires constant monitoring of the target websites and of the scrapers’ performance.

How can you handle website changes?

First, think about how critical the data is for you on a scale from 1 to 3, with 1 being nice-to-have and 3 mission-critical. Think both in terms of how fast you need the data as well as about its importance. Do you need the data for a one-off data analysis? A monthly review? A real-time notification system? If you can’t get the data in the expected quality, can you postpone the analysis, or will your production systems and integrations fail?

1: Nice-to-have data

That’s data you can either wait for or don’t care about missing out on. For those projects, it’s best to simply accept as a fact that the websites can change before your next scrape and that you might have to fix the scraper. Regular maintenance often does not make sense, because it adds extra recurring costs that could exceed the price of a new scraper. The best course of action is to check whether the scraper still works, and if it doesn’t, order an update or fix it yourself. This might take a few hours, days, or weeks, depending on the complexity of the scraper and how much money you want to spend.

2: Business-critical data

This is data that’s important for your project, but not having it at the right time and in the right quality won’t threaten the existence of the project itself. For example, data for a weekly competitive pricing analysis. You won’t be able to price as efficiently without it, but your business will continue. Most web scraping projects fall into this category. Here the best practice is to set up a monitoring system around the scrapers and to have developers ready to start fixing issues right away.

The monitoring system must serve two functions. First, it must notify you in real time when something is wrong. Some scrapers can run for hours or days, and you don’t want to wait until the end of the scrape to learn that all your data is useless. Second, it must provide detailed information about what is wrong. Which pages failed to be scraped, how many items are invalid, which data points are missing, and so on.
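As a rough illustration of the second function, here’s a small sketch that summarizes what is wrong with a batch of scraped items and decides whether to raise an alert. The field names, the 10% threshold, and the function names are assumptions chosen for the example; a real monitoring system would use the fields and tolerances of your own project.

```python
# Hypothetical list of fields every scraped item must contain.
REQUIRED_FIELDS = ("name", "price", "url")


def summarize_items(items, required_fields=REQUIRED_FIELDS):
    """Count invalid items and how often each required field is missing."""
    missing_by_field = {field: 0 for field in required_fields}
    invalid = 0
    for item in items:
        # A field counts as missing when it is absent, None, or an empty string.
        missing = [f for f in required_fields if item.get(f) in (None, "")]
        if missing:
            invalid += 1
            for field in missing:
                missing_by_field[field] += 1
    return {
        "total": len(items),
        "invalid": invalid,
        "missing_by_field": missing_by_field,
    }


def should_alert(report, max_invalid_ratio=0.1):
    """Alert on an empty result or when too many items are invalid."""
    if report["total"] == 0:
        return True
    return report["invalid"] / report["total"] > max_invalid_ratio


items = [
    {"name": "Blue mug", "price": 8, "url": "https://example.com/blue"},
    {"name": "Red mug", "price": None, "url": "https://example.com/red"},
    {"name": "", "url": "https://example.com/unknown"},
]
report = summarize_items(items)
print(report)
print("alert:", should_alert(report))
```

Running a check like this on every batch of results, rather than once at the end, is what lets you stop a long scrape before all of its output turns out to be useless.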

A robust monitoring system gives developers an early warning and valuable information to fix the web scraper as soon as possible. Still, if you don’t have developers at hand to start debugging right away, the monitoring itself can’t save you. It doesn’t matter if you source your developers in-house or from a vendor, but ideally, you should have a dedicated developer (or a team) ready to jump in within a matter of hours or a day. Any experienced developer will do, but if you want a quick and reliable fix, you need a developer that’s familiar with your scraper and the website it’s scraping. Otherwise, they’ll spend most of their time learning how the scraper works, which dramatically increases the cost of the update.

3: Mission-critical data

This category includes data that your business simply can’t live without, as well as high-frequency analytics data that has strict timing requirements. For example, data that needs to be scraped every day and delivered the same day before 5 a.m. If the scrape usually takes 2 hours, the scraper starts at midnight, and your monitoring system reports that the scraper is broken, who’s going to fix it between 3 a.m. and 5 a.m.?

The best practice for high-quality mission-critical data is not only regular monitoring and maintenance of the scrapers but also proactive testing and performance analytics. Many issues with web scrapers can be caught early by regular health checks. Those are small scrapes that test various features of the target website. Depending on the requirements of the project, they can run every day, hour, or minute, and will give developers the earliest possible warning about issues they need to investigate.
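A health check doesn’t have to be complicated. The sketch below simply verifies that a page still contains the pieces of markup the scraper relies on. The check names, the markers, and both sample pages are hypothetical, and a real health check would download a live page instead of using a hard-coded string.

```python
from dataclasses import dataclass


@dataclass
class HealthCheck:
    name: str
    marker: str  # text the page must contain for the scraper to work


# Hypothetical checks for a hypothetical product page.
CHECKS = [
    HealthCheck("product title", 'class="product-name"'),
    HealthCheck("price", 'class="price"'),
]


def failed_checks(html, checks=CHECKS):
    """Return the names of the checks whose marker is missing from the page."""
    return [check.name for check in checks if check.marker not in html]


# The same page before and after the website renamed its price element.
page_before = '<h2 class="product-name">Blue mug</h2><span class="price">$8</span>'
page_after = '<h2 class="product-name">Blue mug</h2><span class="cost">$8</span>'

print(failed_checks(page_before))
print(failed_checks(page_after))
```

To a human visitor, both pages look identical, but the second one would silently break a scraper that looks for the price by its class name.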

3. Small changes in web scraper specifications can cause dramatic changes in cost

This applies to all software development, but maybe even more so to web scrapers, because the architecture of the scraper is largely dictated by the architecture of the target website, and not by your software architects. Let’s illustrate this with an example.

You want to build a web scraper that collects product information such as name, price, description, and stock availability from an e-commerce store. Your developer then finds that there are 1,000,000 products in the store. To scrape the information you need, the scraper has to visit ~1,000,000 pages. Using a simple HTTP crawler this will cost you, let’s say, $100. Assuming you want fresh data weekly, the project will cost you ~$400 a month.

Then you think it would be great to also get the product reviews. But to get all of them, the scraper needs to visit a separate page for each product. This doubles the scraping cost because the scraper must visit 2 million pages instead of 1 million. That’s an extra ~$400 for a total of ~$800 a month.

Finally, you realize that you would also like to know the delivery cost estimate that’s displayed right under the price. Unfortunately, this estimate is computed dynamically on the page using a third-party service, and your developer tells you that you will need a headless browser to do the computation. This increases the price of scraping product details ~20 times. From $100 to $2,000.

In total, those two relatively small adjustments pushed the monthly price of scraping from ~$400 to ~$8,400.
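The arithmetic behind those numbers can be written down in a few lines. This sketch uses the illustrative prices from the example above ($100 per million pages with an HTTP crawler, a 20x multiplier for a headless browser, four runs a month); the function and constant names are my own.

```python
RUNS_PER_MONTH = 4                  # weekly scrapes
HTTP_COST_PER_MILLION_PAGES = 100   # USD, the illustrative price from the example
HEADLESS_MULTIPLIER = 20            # headless browser vs. HTTP crawler


def monthly_cost(detail_pages_m, review_pages_m, headless_details):
    """Monthly cost in USD; page counts are given in millions of pages."""
    detail_rate = HTTP_COST_PER_MILLION_PAGES
    if headless_details:
        detail_rate *= HEADLESS_MULTIPLIER
    # Review pages stay on the cheap HTTP crawler in this example.
    per_run = (detail_pages_m * detail_rate
               + review_pages_m * HTTP_COST_PER_MILLION_PAGES)
    return per_run * RUNS_PER_MONTH


print(monthly_cost(1, 0, headless_details=False))  # product details only
print(monthly_cost(1, 1, headless_details=False))  # plus reviews
print(monthly_cost(1, 1, headless_details=True))   # plus delivery estimates
```

The three calls give 400, 800, and 8400, matching the steps in the example: the reviews double the page count, while the delivery estimate moves the product detail pages to the far more expensive headless browser.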

4. There are legal limits to what you can scrape

Even though web scraping is perfectly legal, there are rules and regulations every web scraper must follow. In short, you need to be careful when you’re scraping:

- personal data (emails, names, photos of people, birthdates …)

- copyrighted content (videos, images, news, blog posts …)

- data that’s only available after signing up (accepting terms of use)

Web scraper consultants and developers can give you guidance on your project, but they can’t replace professional advice from your local lawyer. Laws and regulations are very different across the world. Web scraping professionals have seen a fair share of projects, and they can reasonably guess whether your own project will be regulated or not, but they’re not globally certified lawyers.

Keep in mind that, within the United States, laws also differ by state, so understanding your specific obligations is important. For example, companies doing business in California may have to comply with strict data privacy laws such as the CCPA, which call for extra caution when handling personal or sensitive information. In contrast, many other states impose fewer data privacy obligations and rely more on federal regulations.

5. Start with a proof of concept for your web scraper

Even though you might want to kick off your new initiative and reap the benefits of web data at full scale right away, I strongly recommend starting with a proof of concept or an MVP.

As I explained earlier, all web scraping projects venture into uncharted territory. Websites are controlled by third parties and they don’t guarantee any sort of uptime or data quality. Sometimes you’ll find that they’re grossly over-reporting the number of available products. Other times the website changes so often that the web scraper maintenance costs become unbearable. Remember Twitter (now X) and their shenanigans with the public availability of tweets.

The inherent unpredictability of web scraping can be mitigated by approaching it as an R&D project. Build the minimal first version, learn, and iterate. If you’re looking to scrape 100 competitors, start by validating your ideas on the first 5. Choose the most impactful ones or the ones that your developers view as the most difficult to scrape. Make sure the ROI is there, and empowered by the learnings, start building the next batch of websites. You will get better results, faster, and at a more competitive price this way – I promise.

6. Prepare for turning data into insights

Mining companies are experts in mining ore. Web scraping companies and developers are experts in mining data. And just like mining companies aren’t the best vendors for building bridges or car engines using the mined ore, web scraping companies often have limited experience with banking, automotive, fashion, or any other complex business domain.

Before you buy or build a web scraper, you must ask yourself whether your vendor or your team has the skills and the capacity to turn the raw data into actionable insights. Web scraping is only the first part of the process that unlocks new business value.

From time to time, our customers simply weren’t ready to process the vast amount of data that web scraping offered. This led to sunk costs and the downscaling of their projects over time. They had the data, but they could not find the insights, which led to poor ROI.

Anything else?

Yes, there are about a million things that can go wrong in a web scraping project. But that’s true in any field of human activity. Whether you choose to develop with open-source tools, use ready-made web scrapers, or buy a fully managed service, a little due diligence will go a long way. And if you understand and follow the 6 recommendations above, I’m confident that your web scrapers will be set up for long-term success.