If you think web crawling and web scraping are the same, you're not alone - but you're also not right. In this article, we’ll map out the differences and use cases for both processes, so that you can choose the best approach for your needs.

Web crawling vs. web scraping

Web crawling is the process of browsing the internet to discover and index content. Crawlers automatically follow links from one page to another, mapping the structure of websites and gathering URLs. It’s typically used by search engines to build searchable web indexes.

Web scraping means extracting specific datasets from websites, often by parsing the HTML content of a page to collect targeted information (e.g., product prices, contact details, or reviews). It’s used to gather structured data from unstructured web content for analysis, automation, or integration into other applications.

Key differences

| | Web crawling | Web scraping |
|---|---|---|
| Purpose | Indexing and understanding the structure of websites. | Extracting specific data from web pages for analysis, monitoring, or storage. |
| Primary output | List of indexed URLs. | Structured data in formats like CSV or JSON. |
| Scope | Covers a broader range of web content, often the entire web or a large part of a website. | Targeted focus on specific elements of specific web pages. |
| Process | Starts with seed URLs, identifies hyperlinks, adds new links to a queue, repeats process. | Targets web pages, downloads HTML, parses HTML to locate and extract data, saves data. |

A web crawler's main job is to build a structured map of what’s out there. Crawlers produce a list of indexed URLs that represent the web pages they’ve discovered.

A web scraper zooms in on particular elements (like product prices, reviews, or contact details). It delivers outputs as structured data in formats such as CSV or JSON.
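To make the "structured data in formats such as CSV or JSON" output concrete, here is a minimal sketch using only Python's standard library. The records, field names, and values are hypothetical and stand in for whatever a scraper would have extracted.

```python
import csv
import io
import json

# Hypothetical records a scraper might have extracted (illustrative values only).
records = [
    {"product": "Example kettle", "price": 24.99, "reviews": 112},
    {"product": "Example toaster", "price": 39.5, "reviews": 87},
]


def to_json(rows):
    """Serialize the records as a JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_json(records))
print(to_csv(records))
```

The same list of dictionaries feeds both formats, which is why scrapers commonly offer several export options for one dataset.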

What is web scraping?

Web scraping gathers structured data from unstructured web content. It’s a focused process: a scraper targets specific elements on specific pages and delivers the extracted information as structured datasets.

Simplified process example for scraping a website:

- Starting point: Choosing specific web pages (URLs) containing the data of interest.

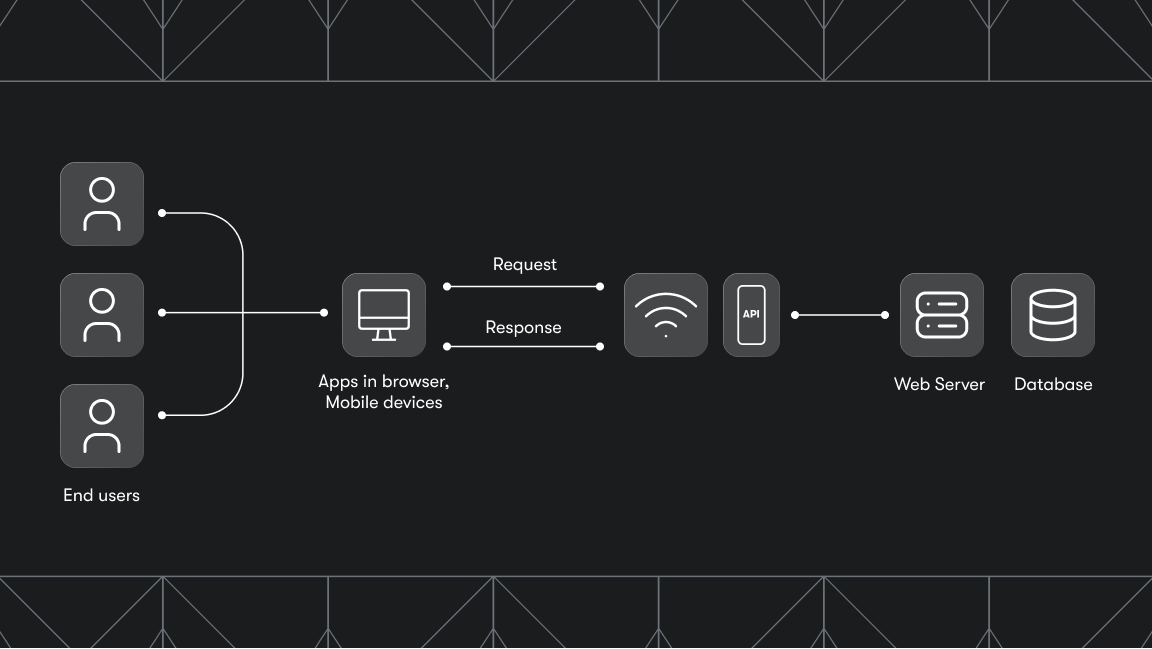

- The scraper sends HTTP requests to a web server.

- The server responds with the page's HTML code.

- The scraper parses the code to locate particular HTML tags, classes, or attributes that contain the data to be scraped.

- The scraper delivers output datasets.
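The steps above can be sketched in a few lines of Python with the standard library. This is an illustration under stated assumptions, not how any particular scraping tool works: the `price` class name, the sample HTML, and the helper names `fetch_html` and `scrape_prices` are all hypothetical, and the parser assumes each price element contains only text.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class PriceParser(HTMLParser):
    """Collects the text of every element whose class list includes "price"."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Simplification: assumes price elements have no nested tags.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def fetch_html(url):
    """Send an HTTP request and return the HTML (not called in this example)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def scrape_prices(html):
    """Parse the HTML and return the targeted data points."""
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


# Hypothetical page content standing in for a server response.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Kettle</span><span class="price">$24.99</span></li>
  <li><span class="name">Toaster</span><span class="price">$39.50</span></li>
</ul>
"""

print(scrape_prices(SAMPLE_HTML))
```

In a real run, `scrape_prices(fetch_html(url))` would chain the request and parsing steps; the sample HTML keeps the sketch runnable offline.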


What can a web scraper be used for?

Web scrapers are used across various use cases, wherever large datasets are needed. Some examples include:

- Market research. Scrapers can facilitate the collection of customer reviews, social media posts, and news articles to track sentiment trends and competitor activities.

- Lead generation. You can gather business emails, phone numbers, and company profiles from directories and social platforms. The collected data can be pushed into CRMs via API, and recurring scrapes can be scheduled to keep lead lists current.

- Data for generative AI. Scrapers extract text, images, and media from websites. This data can be used to feed AI pipelines, ensuring that AI models are trained on fresh, domain-specific data.

- Competitive intelligence. By monitoring competitor locations, reviews, and offerings, you can conduct sentiment analysis to understand competitors' strengths and weaknesses.

- Price comparison. You can scrape product prices and stock levels across global and local e-commerce sites. Results can be integrated with analytics stacks.

- Product development. You can scrape competitor catalogs, social feedback, and search trends to inform feature prioritization and UX design. Scraped data can be fed into your roadmap tools or analytics pipelines for prototyping.

- Machine learning. Scrapers can help you build large‑scale datasets from thousands of web sources. With clean, labeled data, scrapers can power your NLP, computer vision, or RAG workflows.

- Stock market. Scrapers help you monitor stock prices, market trends, and financial news for investment decisions.

- Social media monitoring. Scrapers can help you monitor how content or brand mentions spread across unexpected forums and social media platforms.

What is a web crawler?

A web crawler (aka spider or spiderbot) is a bot that maps the structure of websites and gathers URLs. Crawlers index websites and make sense of their structure, covering a broader range of web content, often the entire web or a substantial part of a website. They typically deliver results as a list of indexed URLs. Examples of crawlers:

- Googlebot - Google Search’s web crawler.

- Amazonbot - Amazon’s web crawler.

- Bingbot - Microsoft Bing’s search engine crawler.

Simplified process for crawling a website:

- Starting point: The crawler begins with a list of starting pages (called seed URLs).

- The crawler visits each URL and identifies all hyperlinks.

- The crawler adds newly discovered links to a queue of URLs to be visited next.

- The crawler continues the process recursively to create a complete picture.
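As a rough illustration of this loop, the sketch below crawls a tiny in-memory "website" so that it runs without network access. The `FAKE_SITE` pages, the `fetch` callback, and the `max_pages` limit are assumptions made for the example; a production crawler would also restrict itself to chosen domains, throttle its requests, and handle errors.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(base_url, html):
    """Return absolute URLs for all links on the page, without #fragments."""
    parser = LinkParser()
    parser.feed(html)
    return [urldefrag(urljoin(base_url, href)).url for href in parser.links]


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl: visit a URL, queue its unseen links, repeat."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be retrieved
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical site: maps each URL to its HTML.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c#top">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "No links here",
}

print(crawl(["https://example.com/"], FAKE_SITE.get))
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, and the queue gives the breadth-first visiting order.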

When to use a web crawler?

Here are some scenarios where you'd typically need the web crawling process:

- Search engine optimization (SEO). Crawling can show you how search engines view your website. For example, you can improve how Google evaluates your site for search engine results pages (SERPs) by checking backlinks as authority signals, spotting orphan pages, and detecting redirects or loops that confuse Googlebot.

- Market research. You can identify competitors and get an overview of the dynamics of a specific industry online. Crawlers can map how competitors organize their content: with categories, product pages, blog posts, and landing pages.

- Content aggregation. Crawlers can collect URLs from different sources for later extraction. They can also help you with content gaps and discover topics your site hasn’t covered.

- Link auditing. You can check the validity of links on your website to make sure they aren't broken. Crawl results can also help you improve your link flow so that high-priority pages get more SEO “juice”.

- Web archiving. Crawlers can help you identify web pages to be preserved for historical records or compliance. Crawlers can also run periodically to capture snapshots of a site over time.

- Plagiarism detection. By indexing web pages, you can compare them against your own content quickly and find out whether it was copied or used without permission.

- Geo-targeted content. If a site has locale-adaptive pages, crawling lets you compare how its content differs by geographic location.

- News and event tracking. You can follow trending topics and current events by crawling news sites and collecting URLs of articles and blog posts.
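Two of the checks mentioned in this list, broken links and orphan pages, reduce to simple set operations once a crawl has produced a link graph. The sketch below assumes you already have that graph plus a list of pages that are known to exist (for instance from a sitemap or CMS export, which is an assumption of this example); the paths and the `audit_links` helper are hypothetical.

```python
def audit_links(link_graph, known_pages):
    """Find broken links and orphan pages.

    link_graph maps each crawled page to the pages it links to.
    known_pages is the set of pages that actually exist.
    """
    # Every page that some *other* page links to.
    linked_to = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source
    }
    broken = sorted(linked_to - known_pages)   # linked, but does not exist
    orphans = sorted(known_pages - linked_to)  # exists, but nothing links to it
    return broken, orphans


# Hypothetical crawl result for a small site.
link_graph = {
    "/": ["/pricing", "/blog"],
    "/pricing": ["/", "/signup"],
    "/blog": ["/", "/old-post"],
}
known_pages = {"/", "/pricing", "/blog", "/signup", "/landing-2019"}

broken, orphans = audit_links(link_graph, known_pages)
print("Broken links:", broken)
print("Orphan pages:", orphans)
```

Here `/old-post` is reported as broken because it is linked but not among the known pages, and `/landing-2019` is an orphan because no crawled page points to it.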

Which one do I need?

Choosing between a crawler and a scraper depends entirely on the outcome you want to achieve. If you need to explore, monitor, map, or archive websites, you need a crawler. If you need to extract specific data for analysis or applications, use a scraper.

Web crawling and web scraping can work together

Web crawling indexes the web and maps its structure, while web scraping pulls out targeted data, so a single data collection project can make use of both.

A hybrid approach works well when you need to identify the landscape and find the URLs of interest first (crawling), followed by extracting the detailed data later (scraping).
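A minimal sketch of this crawl-then-scrape pattern is shown below. It runs against a hypothetical in-memory shop (`FAKE_SITE`), and the `/products/` URL pattern, the `price` class name, and all function names are assumptions chosen for illustration.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects outgoing links and the text of class="price" elements."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])
        self._in_price = "price" in (attrs.get("class") or "").split()

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def parse(html):
    parser = PageParser()
    parser.feed(html)
    return parser


def crawl(seed, fetch):
    """Phase 1 (crawling): discover every reachable URL, breadth-first."""
    queue, seen, order = deque([seed]), {seed}, []
    while queue:
        url = queue.popleft()
        order.append(url)
        for href in parse(fetch(url) or "").links:
            link = urljoin(url, href)
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


def crawl_then_scrape(seed, fetch, is_target):
    """Phase 2 (scraping): extract prices only from the URLs of interest."""
    urls = crawl(seed, fetch)
    return {u: parse(fetch(u) or "").prices for u in urls if is_target(u)}


# Hypothetical shop: maps each URL to its HTML.
FAKE_SITE = {
    "https://shop.example/": '<a href="/products/kettle">Kettle</a> <a href="/about">About</a>',
    "https://shop.example/about": '<a href="/products/toaster">Our bestseller</a>',
    "https://shop.example/products/kettle": '<h1>Kettle</h1><span class="price">$24.99</span>',
    "https://shop.example/products/toaster": '<h1>Toaster</h1><span class="price">$39.50</span>',
}

results = crawl_then_scrape(
    "https://shop.example/", FAKE_SITE.get, lambda url: "/products/" in url
)
print(results)
```

Note that the toaster page is only reachable through the "About" page, so it would be missed by a scraper that was given the homepage links alone; the crawl phase is what surfaces it.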

The next time you're involved in a data extraction project, take a moment to consider your objectives. Are you looking to understand the broader structure of a website or the entire web? You need web crawling. Do you need specific pieces of web data for analysis or decision-making? Time for web scraping. These tools are often most effective when used together, offering a comprehensive solution for extracting data.

Conclusion

Understanding the distinction between web crawling and web scraping is essential for anyone working with web data. While crawlers excel at mapping website structures and discovering content at scale, scrapers focus on extracting precise data points for immediate use. Neither approach is inherently superior - they simply serve different purposes.

The key is matching your tool to your objective. Need to understand how a website is organized or find all relevant pages in a domain? Start with crawling. Looking to extract product prices, contact information, or customer reviews for analysis? Web scraping is your answer. You can also use a combined solution: crawl first to discover your targets, then scrape to extract the specific data you need.

FAQ

Is web crawling legal?

Like web scraping, web crawling is generally legal when it accesses publicly available data. Crawling is a standard practice for search engines like Google and Bing, enabling them to index and organize the vast amount of information available on the internet. However, it’s important to crawl ethically, as with any other data collection process. Collecting personal data without consent can violate privacy laws, so always be mindful when processing sensitive information.

Is web scraping the same as web crawling?

No. Web crawling is the process of browsing the internet to discover and index content. Web scraping means extracting specific datasets from websites.

What is the main difference between web crawling and web scraping?

The two processes have different use cases and outputs: web crawling is used to index websites and understand their structure, while web scraping extracts specific data from web pages for analysis, monitoring, or storage.