So you're aware of web scraping and web crawling, and you've heard about some convincing advantages, but you're a little worried about the disadvantages. We think we can help you see that the pros outweigh the cons. To be honest, we're a bit biased: after more than six successful years of extracting data, Apify is a global web scraping expert (even SaaSHub thinks so). But it's also fair to say that we've seen both sides of the coin, and we understand your dilemma.

If you're in a rush, here's a handy shareable infographic to give you an overview of the pros and cons:

Alright, let's get started with the good side of web scraping, and we promise that this won't get too technical.

Advantages of web scraping

Speed

First and foremost, the best thing about web scraping technology is the speed it provides. Everyone who knows about web scraping associates it with speed. Web scraping tools (programs, software, or techniques) essentially put an end to the manual collection of data from websites. They let you rapidly scrape many websites at the same time without having to watch and control every single request. You can set a scraper up just once, and it will scrape a whole website in an hour or much less, a job that would take a single person a week. This is the main problem web scraping was created to solve. And if you want to change the scraping parameters, go ahead and tailor them: scrapers are not set in stone.
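If you're curious what "many websites at the same time" looks like in practice, here's a minimal Python sketch using only the standard library. It assumes plain pages that can be decoded as UTF-8; `fetch` and `fetch_all` are illustrative names of ours, not part of any Apify API, and a real scraper would also add retries, rate limits, and error handling.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fetch(url: str, timeout: float = 10.0) -> str:
    """Download one page and decode it as UTF-8 (an assumption; real pages vary)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def fetch_all(
    urls: list[str],
    fetcher: Callable[[str], str] = fetch,
    max_workers: int = 8,
) -> dict[str, str]:
    """Fetch many URLs concurrently; the result dict keeps the order of `urls`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs fetcher in worker threads but yields results in input order
        return dict(zip(urls, pool.map(fetcher, urls)))
```

For example, `fetch_all(["https://example.com/a", "https://example.com/b"])` would download both pages in parallel threads. Threads suit this job because most of the time is spent waiting on the network rather than computing.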

Web scraping is quick not only because of how fast it scans web pages and extracts data from them, but also because it's easy to incorporate into your routine. It's fairly easy to get started with ready-made web scrapers because you don't have to worry about building, downloading, integrating, or installing them. Once you've gone through the setup, you're all set to start scraping. Now imagine what you can get done with a speedy scraper: information about 1,000 products from an online store in five minutes, wrapped into a neat little Excel table, for instance. And we have dozens of those in our Apify Store, some quicker, some a bit slower, but always efficient.
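To make the "neat little Excel table" idea concrete, here's a minimal sketch that turns product markup into CSV, which Excel opens directly. The markup is hypothetical (every store structures its HTML differently), so the `product`, `name`, and `price` class names are assumptions you would adapt to the site you're scraping.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Optional

# Hypothetical markup: each product is a <div class="product"> holding
# a <span class="name"> and a <span class="price">. Real stores differ.
SAMPLE_HTML = """
<div class="product"><span class="name">Monitor A</span><span class="price">199.99</span></div>
<div class="product"><span class="name">Monitor B</span><span class="price">249.00</span></div>
"""


class ProductParser(HTMLParser):
    """Collects the text of name/price spans into a list of dicts."""

    def __init__(self) -> None:
        super().__init__()
        self.products: list[dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text we are currently reading

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "product" in classes:
            self.products.append({})  # start a new product record
        elif tag == "span" and self.products:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.products:
            record = self.products[-1]
            record[self._field] = record.get(self._field, "") + data.strip()


def to_csv(products: list[dict[str, str]]) -> str:
    """Render records as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(products)
    return buf.getvalue()
```

Feeding `SAMPLE_HTML` to a `ProductParser` and passing its `products` to `to_csv` gives a header row followed by one row per product, ready to save as a `.csv` file.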

Web scraping also supports forecasting. Because scraped data can reveal consumer attitudes, needs, and desires, you can even use it for extensive predictive analysis. A thorough understanding of consumer preferences is a blessing, and it helps businesses plan for the future effectively.

Data extraction at scale

This one is easy: humans 0, robots 1, and there's nothing wrong with that. With so much data out there, it's hard to imagine handling it manually. Web scraping tools provide you with data at a much greater volume than you could ever collect by hand. If your challenge is, say, checking the prices of your competitor's products and services every week, doing it manually would probably take ages. It also wouldn't be very efficient, because even a strong, motivated team can't keep that pace up. Instead, you can run a scraper that collects all the data you need every hour, costs comparatively little, and never gets tired. To put it into perspective: with an Amazon API scraper, you can get information about all products on Amazon (okay, that use case might take a while, as Amazon.com sells a total of 75,138,297 products as of March 2021). Now imagine how much time and effort it would take a human team to gather and compile all that data. Not the most efficient way to do things when an automated alternative is available. You could even go so far as to call web scraping the dawn of a new phase in the post-industrial revolution.

Here's how the investment industry benefits from web scraping. Hedge funds sometimes use web scraping to collect alternative data and avoid bad bets. It helps them detect unexpected threats as well as prospective investment opportunities. Investment decisions are complex, since they normally involve a series of steps, from developing a hypothesis to testing and research, before a smart decision can be made. Researching historical data is one of the most effective ways to assess an investment idea. It gives you insight into the underlying reasons for past failures and successes, avoidable mistakes, and potential future returns.

Web scraping is an effective way to extract historical data, which can then be used to train machine learning models. As a result, investment firms that use big data can improve the accuracy of their analysis and make better decisions. You can check out more cases of using web scraping for real estate and investment opportunities here.

Cost-effective

One of the best things about web scraping is that it delivers a complex service at a relatively low cost. A simple scraper can often do the whole job, so you won't need to invest in building a complex system or hiring extra staff. Time is money: as the web evolves and speeds up, a professional data extraction project would be impossible without automating repetitive tasks. For example, you could employ a temporary worker to run analyses, check websites, and carry out mundane tasks, but all of this can be automated with simple scripts (which, by the way, can run on the Apify platform tirelessly and repetitively). When deciding how to execute a web scraping project, using a web scraping tool is usually a more viable option than outsourcing the whole process. People have better things to do than collect data from the web like digital librarians.

Another thing is that once the core data extraction mechanism is up and running, you can crawl the whole domain, not just one or a few pages. So the return on that one-time investment in building a scraper is pretty high, and these tools have the potential to save you a lot of money. Overall, choosing a web scraping API has significant advantages over outsourcing web scraping projects, which can get expensive. Building or ordering APIs may not be the cheapest option, but given the benefits they provide to developers and businesses, they are still on the less expensive side. Prices vary based on the number of API requests, the scale, and the capacity you need. Either way, the returns make web scraping APIs a worthwhile investment in an increasingly automated future.

Web scraping can even make sentiment analysis more affordable. Thousands of consumers publish their product and service experiences on online review sites every day. This massive amount of data is open to the public and can simply be scraped for information about businesses, competitors, opportunities, and trends. Combined with NLP (natural language processing), web scraping can also reveal how customers react to a company's products and services, as well as what they think of its campaigns.
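As a deliberately simplified illustration of scoring scraped reviews, here's a toy word-list approach in Python. It is not real NLP: the word lists are hypothetical, and production sentiment analysis would use a trained model instead, but the shape of the job (reviews in, sentiment summary out) is the same.

```python
import re

# Toy word lists for illustration only; real NLP uses trained models.
POSITIVE = {"great", "love", "excellent", "fast", "reliable"}
NEGATIVE = {"broken", "slow", "terrible", "refund", "disappointed"}


def score(review: str) -> int:
    """Positive minus negative word count: >0 leans positive, <0 leans negative."""
    words = re.findall(r"[a-z']+", review.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def summarize(reviews: list[str]) -> dict[str, int]:
    """Count how many reviews lean positive, neutral, or negative."""
    counts = {"positive": 0, "neutral": 0, "negative": 0}
    for review in reviews:
        s = score(review)
        label = "positive" if s > 0 else "negative" if s < 0 else "neutral"
        counts[label] += 1
    return counts
```

For instance, "Great monitor, I love it" scores 2, while "Arrived broken and support was slow" scores -2.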

Flexibility and systematic approach

Flexibility is perhaps the only advantage that can compete with speed, because scrapers are inherently adaptable. Web scraping scripts (APIs) are not hard-coded solutions, so they are highly modifiable, open, and compatible with other scripts. So here's the drill: create a scraper for one big task, then reshape it to fit many different tasks by making only small changes to the core. You can set up a scraper, a deduplication Actor, a monitoring Actor, and an app integration, all within one system. All of these work together without limitations, extra costs, or the need to implement a new platform.

Here's an example of a workflow built out of four different APIs, for when you want to scrape all available monitors from Amazon and compare them with the ones in your online store. The first step is data collection with a scraper, the next is another Actor that uploads the data into your database, another could be a scraper that checks the price differences, and the API chain can go as far as your workflow requires.
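Here's a rough sketch of how the later stages of such a chain could look once the scraped data is in hand. Each function stands in for a separate Actor; the record fields (`url`, `name`, `price`) and the catalog dictionary are hypothetical, and matching products by exact name is a simplifying assumption.

```python
from typing import Iterable


def deduplicate(items: Iterable[dict], key: str = "url") -> list[dict]:
    """Keep the first record seen for each value of `key` (the deduplication stage)."""
    seen = set()
    unique = []
    for item in items:
        if item[key] not in seen:
            seen.add(item[key])
            unique.append(item)
    return unique


def compare_prices(scraped: list[dict], catalog: dict[str, float]) -> list[dict]:
    """Report products found in both sources, with our price minus theirs."""
    report = []
    for item in scraped:
        own_price = catalog.get(item["name"])
        if own_price is not None:
            report.append({
                "name": item["name"],
                "competitor": item["price"],
                "ours": own_price,
                "difference": round(own_price - item["price"], 2),
            })
    return report
```

Chaining them is just `compare_prices(deduplicate(scraped_items), my_catalog)`; on a platform, each stage could run as its own Actor and pass its output dataset to the next.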

This approach forms an ecosystem of well-tuned APIs that fit right into your workflow. What does this mean for users and businesses? It means that a single investment provides a flexible, adaptable solution that collects as much data as you need. A web scraping API lets users customize the data collection and analysis process and take full advantage of its features to fulfill all their web scraping ambitions. And those could be anything, from email notifications and price drop alerts to contact detail collection and tailored scrapers.

Performance reliability and robustness

Done right, web scraping is a process that delivers highly accurate data. Set up your scraper correctly once, and it will collect data directly from websites with a very low chance of errors. How does that work? Well, monotonous and repetitive tasks often lead to errors because they are simply boring for humans. If you're dealing with financials, pricing, time-sensitive data, or good old sales, inaccuracies and mistakes can take a lot of time and resources to find and fix, and if they go unnoticed, the problems snowball from there. This applies to any kind of data, so it is essential not only to collect data but also to have it in a clean, readable format. In the modern world, that is a task for a machine, not a human. A scraper will only make the errors that humans have written into its code. If you write the script correctly, you can largely eliminate human error and get better-quality data every single time you collect it. That consistency is what allows the information to be seamlessly integrated with other databases and used in data analysis tools.

Low maintenance costs

This advantage follows from flexibility. Like anything that evolves, websites change over time with new designs, categories, and page layouts, and most of those changes need to be reflected in the way the scraper does its job. The cost of keeping up with such changes is often severely underestimated when a new SaaS is introduced. People tend to think about it in an old-school way, as if once you install something, updates will somehow appear and apply automatically, with no need to keep an eye on them. But our fast-paced internet world requires adaptive solutions, so it's no wonder that maintenance and servicing costs can make a budget skyrocket. Luckily, with web scraping software, maintenance expenses tend to stay manageable: when necessary, these changes can be implemented by slightly tweaking the scraper. That way, (a) there's no need to develop new tools for a website every time it changes, (b) any change can be fixed in a reasonable time, and (c) fixes are affordably priced.

Scraping the web for lead generation or general search queries not only saves B2B businesses a great deal of time but can also kick-start your success online. Finding contact info for businesses in a geographic area or niche in an automated way cuts down on human error and makes lead generation quick and easy. Scraping the web for content topics can improve your SEO ranking if the data is used properly, saving you a lot of time-consuming manual work. You can also use data scraping to get in touch with local and nationwide brands and help boost their presence online.

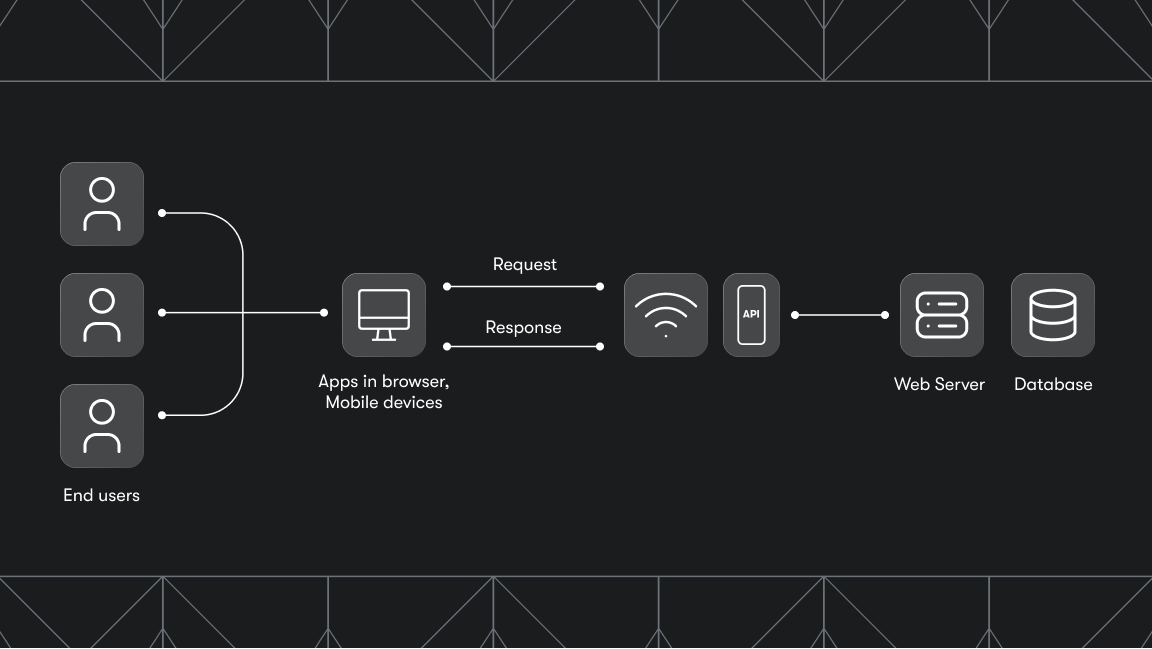

Automatic delivery of structured data

This one may sound a bit trickier, but well-scraped data arrives in a machine-readable format by default, so simple values can often be used immediately in other databases and programs. Effortless integration with other applications through APIs is one of web scraping's most likeable features for pros and non-pros alike. You are in charge of defining the format, depth, and structure of the output dataset, so it can easily be linked to your next program or tool. All you need for that is a set of credentials and a basic understanding of the API documentation. That makes web scraping the first (albeit important) step in a data analysis workflow that can involve other built-in solutions, such as data visualization tools. On top of that, using automated software and programs to store scraped data can help keep your information secure.
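Defining the output structure yourself can be as simple as declaring a schema. The sketch below, assuming a hypothetical `Product` record, serializes scraped items to JSON that another program or API can load directly, and reads them back into typed objects.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Product:
    # Hypothetical output schema; choose whatever fields your workflow needs.
    name: str
    price: float
    currency: str = "USD"
    in_stock: bool = True


def to_json(products: list[Product]) -> str:
    """Serialize records so another program or API can load them directly."""
    return json.dumps([asdict(p) for p in products], indent=2)


def from_json(text: str) -> list[Product]:
    """Load records back into typed objects, failing loudly on unexpected fields."""
    return [Product(**row) for row in json.loads(text)]
```

Because `Product(**row)` raises a `TypeError` on unknown or missing fields, a consumer notices right away if the dataset's structure drifts from what it expects.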

Because web scraping is a complex task involving multiple programming languages and software packages, it is often challenging for companies to do it on their own. Since web scraping is automated and requires almost no supervision, you won't have to worry about it disrupting your core business. The best part is that a web scraping company will also handle the necessary maintenance for your account, including basic troubleshooting, upgrades, and backups. This helps ensure you are always using the resource to its full potential.

Another significant benefit of using web scraping is the assurance that your data is protected. Most companies have strict security policies for handling confidential data, including privacy and confidentiality agreements. For example, a reverse phone number lookup company might collect people's phone numbers and personal details to create a grey pages directory. Traditional research methods, such as collecting information from people one by one, would be time-consuming and expensive. Web scraping offers a more convenient way to solve this problem: by collecting data from all the major sources via their web pages, you can get the most accurate information and find the lowest rates faster.

Disadvantages of web scraping

Web scraping has a learning curve

Sometimes, when users get excited about the advantages of web scraping, they also get intimidated by all the coding it seems to imply. Please don't be. Looking at a scraper is just like looking at a car engine under the hood: some seem more complicated or impressive than others, but in the end, each is just a machine that's tunable, customizable, and, most of all, logical. These days, everything is moving towards more user-friendly and intuitive interfaces, with less and less manual coding involved. So both programmers and non-techies can take advantage of web scraping with ready-made solutions for hundreds of websites. And don't worry: once you get the hang of it, your web scraping skills will never leave you.

However, if you're up for a challenge, be aware that creating your own scraper can be time-consuming, especially if you start from scratch. With a great toolkit and well-documented processes at hand, though, the task is entirely achievable. One of the most helpful things about web scraping with Apify is having a community of like-minded people who know exactly what they're doing and whom you can always ask for help if you're facing an issue or have questions. More often than not, if you're up against a specific issue, chances are that somebody else has dealt with it as well, so ask away. Alternatively, you can always throw in the towel and order a custom solution for the website you want to scrape, but try building your own first. Seriously, it's not as daunting as it looks.

Web scraping needs perpetual maintenance

Another thing to keep in mind is that in the world of SaaS, the service is just the beginning of the journey. The real deal is product maintenance. The reason we mention maintenance is simple: since your scraper's work is intrinsically tied to an external website, you have no control over when that website changes its HTML structure or content. Developers therefore have to react to those changes, or the scraper breaks or becomes outdated and can't keep up. Some details may get updated automatically, but any scraper usually needs regular attention to keep it up and running. This holds true whether you're using great web scraping software or building your own scraper: you have to keep the scraper's gears well oiled so that it mirrors the changes of the website you're scraping.
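One common way to notice a broken scraper early is to check each run's output for missing fields. Here's a minimal sketch of that idea; the required field names and the 20% threshold are assumptions, and in practice you'd hook the result up to an alert such as an email notification.

```python
# Hypothetical schema: fields every scraped record is expected to contain.
REQUIRED_FIELDS = ("name", "price")


def missing_field_rate(
    records: list[dict], fields: tuple[str, ...] = REQUIRED_FIELDS
) -> dict[str, float]:
    """Share of records in which each required field is missing or empty."""
    if not records:
        # An empty run is treated as fully broken.
        return {f: 1.0 for f in fields}
    return {f: sum(1 for r in records if not r.get(f)) / len(records) for f in fields}


def looks_broken(
    records: list[dict],
    threshold: float = 0.2,
    fields: tuple[str, ...] = REQUIRED_FIELDS,
) -> bool:
    """Flag a run when any field is missing from more than `threshold` of records."""
    return any(rate > threshold for rate in missing_field_rate(records, fields).values())
```

A sudden jump in the missing rate for a single field is often the first sign that the target website changed its HTML and the scraper's selectors no longer match.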

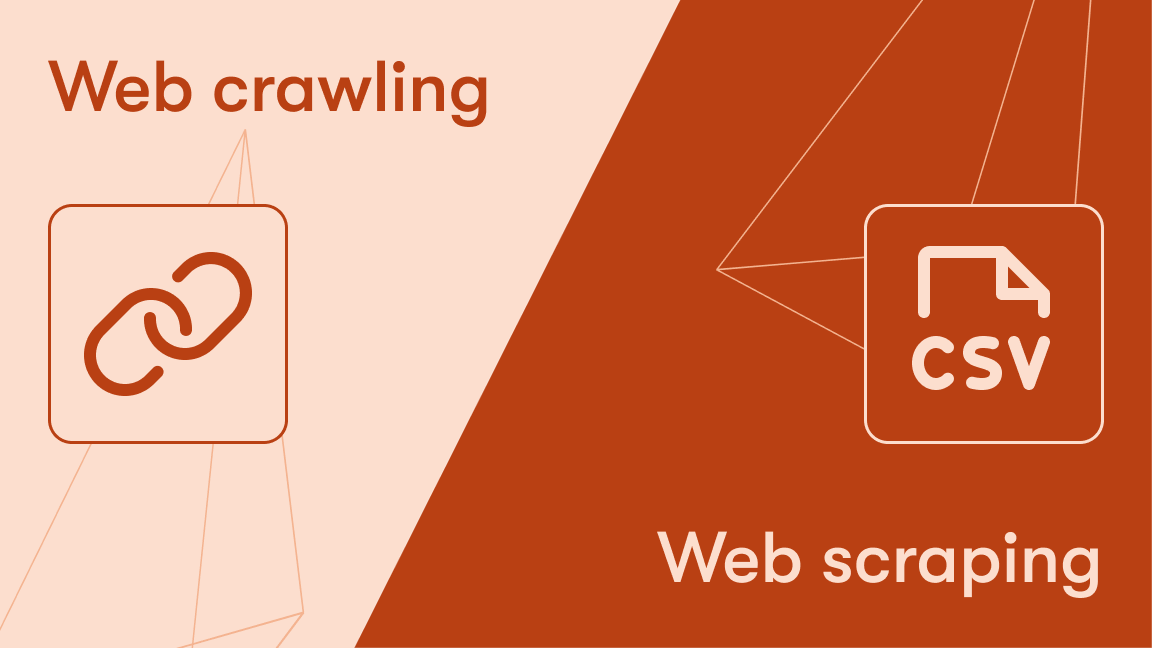

Data extraction is not the same as data analysis

When dealing with matters as complicated as data extraction and data processing, it is important to set the right expectations. No matter how good your web scraping tool is, in most cases it will not do the data analysis for you. A scraper's job is generally to collect the requested type of data, wrap it in the format you need, and save it to your computer or database without loss. The data will arrive in a structured format; however, more complex data will need further processing before it can be used in other programs. This whole process can be quite resource-intensive and time-consuming, so be prepared for that if you're facing a big data analysis project.

Scrapers can get blocked

This brings us to the last disadvantage on our list, and it can be a real blocker. Some websites just don't like to be scraped. This might be because they believe that scrapers consume their resources, or simply because they don't want to make it easy for other companies to compete with them. In some cases, access is blocked because of the scraper's origin, so requests coming from a particular country or IP address are not permitted.

This kind of IP blocking can often be worked around with proxy servers or by taking measures against browser or device fingerprinting. In many cases, these strategies let scraping bots operate under the radar. But sometimes even these solutions are not enough to deal with aggressive blocking, and a website can't be scraped: the ultimate disadvantage on our list.
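For illustration, here's a minimal sketch of round-robin proxy rotation with Python's standard library. The proxy URLs are placeholders, not real endpoints, and rotation alone won't defeat fingerprinting or aggressive blocking; always check a site's terms before scraping it.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; replace with your own provider's URLs.
PROXIES = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
    "http://proxy3.example.com:8000",
]

# Endless round-robin iterator over the proxy list.
_proxy_cycle = itertools.cycle(PROXIES)


def next_opener() -> tuple[str, urllib.request.OpenerDirector]:
    """Return the next proxy URL and an opener that routes HTTP(S) traffic through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


def fetch_via_proxy(url: str, timeout: float = 10.0) -> bytes:
    """Fetch one URL, sending each call through the next proxy in turn."""
    _, opener = next_opener()
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()
```

Spreading requests across several IP addresses this way addresses blocks based on a single address or location; it does nothing about fingerprinting, which needs separate measures.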

However, as web scraping has become a more widespread tool for many businesses, websites are becoming less suspicious of scraping and lowering some of their resistance to it. So even if a website has blocked scrapers in the past, that may change over time.

What about the legality of web scraping?

You might have expected to see something here about web scraping and the law, or you might be worried that web scraping is illegal. But that's just one of the many myths surrounding the legality of web scraping. Scraping publicly available data is generally legal, and web scraping is rapidly becoming one of the most important tools that legitimate businesses use to get the data they need.

What next? What can web scraping do for you?

Now that you have the full picture, we think that you can make a well-informed decision on how you can benefit from web scraping for research, business, or personal use. There are some potential disadvantages, but they're just challenges and nothing to be afraid of.

If you're still not sure whether you should use web scraping, try experimenting with it yourself: we have plenty of scrapers to choose from, and most of them are free to use. If you're not sure how exactly you can make use of web scraping, just check out our Industries page for inspiration and real use cases.

And if your scraping projects need something special, tell us more and we'll figure out a solution for whatever your needs might be.

In this video, learn about web scraping, its various use cases, and the Apify Platform.