We're Apify. We've created 1,600+ data extraction tools and unofficial APIs for popular websites, including Twitter (X). Check us out.

Starting off as a simple ‘microblogging’ system for users to share short posts called tweets, Twitter (now X.com) has more than 368 million users, and more than 500 million tweets are posted every day. As you might imagine, that means that there’s a lot of useful data behind those 280 characters just sitting around on Twitter.

With that much data on offer, writing a Python script to collect it can seem intimidating at first, especially given the recent changes to the Twitter API. But with the right steps, building a Python Twitter scraper can be a fairly straightforward process. And if you don't want to build your own Twitter scraper from scratch, you can use one of Apify's Twitter scrapers and start collecting data from X.com in less than 2 minutes.

This tutorial will walk you through creating a web scraper using an open-source Python library. We'll start by setting up your environment, then move on to logging in and retrieving Twitter data, and finally storing and exporting this data.

🐦 Is Twitter scraping a good alternative to the official Twitter API?

Even though the official Twitter API provides structured access to data, it also enforces rate limits and requires registration, authentication, and an API key. Since 2023, it also comes with a hefty price tag. A Python library like Tweepy can simplify the authentication part. But what about the rest?

With the Twitter API having become less accessible, it makes more sense to rely on alternative methods to get data from Twitter. Web scraping lets you work with Twitter data without being bound by the API's pricing and access restrictions.

Independent research has also found that web scraping has advantages over the Twitter API in terms of speed and flexibility. As the paper "Web Scraping versus Twitter API: A Comparison for a Credibility Analysis" puts it: "Web scraping is faster than Twitter API, and it is more flexible in terms of obtaining data."

So what are our options for web scraping here? Well, we'll be exploring these two in our guide:

- Build your own Twitter crawler in Python. It will act as an unofficial API.

- Use a ready-made web crawler like a Twitter scraper. It already works as an unofficial API.

We're not looking for easy ways out. So let's get into the thick of it with the first one. Let's build a Python crawler for Twitter.

1. Pick the right web scraping library for X.com

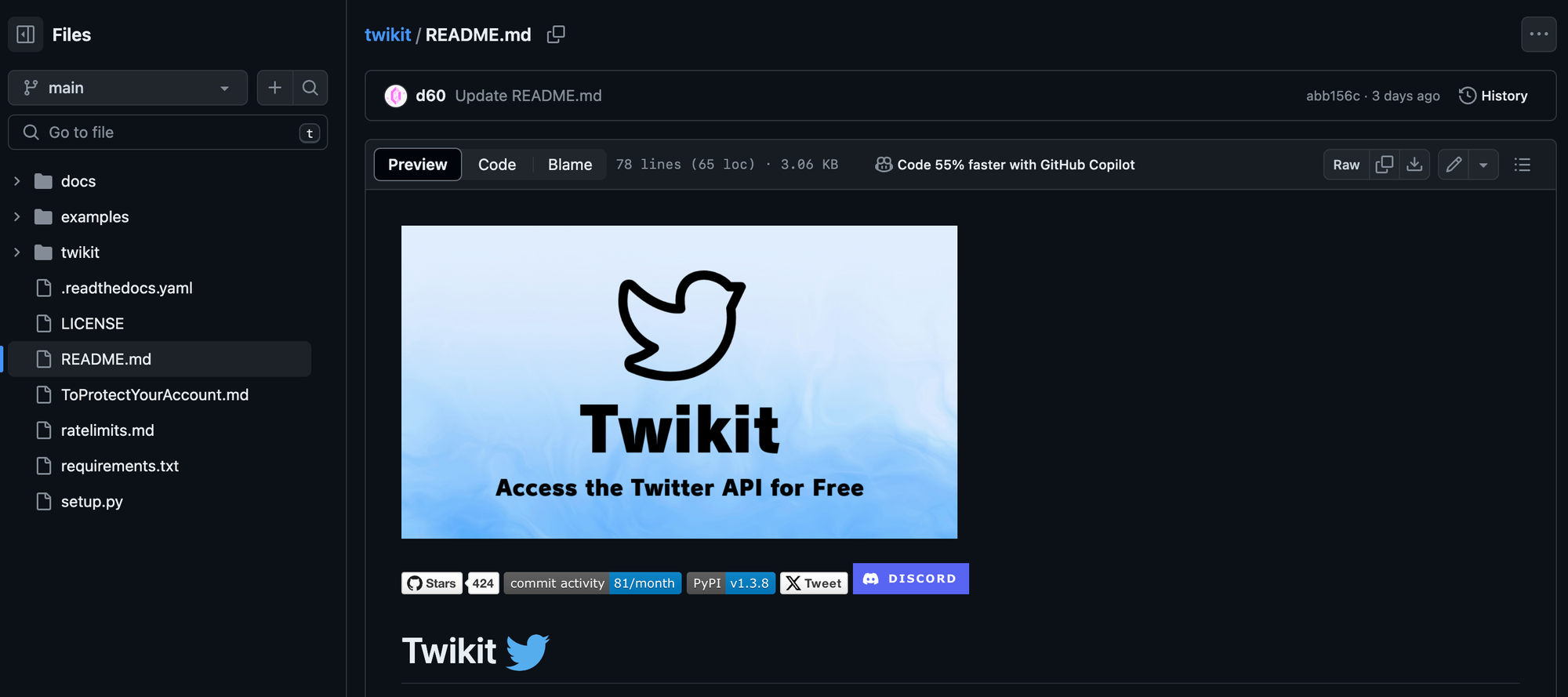

Let's start with the basics: Twikit. Twikit is an open-source library for scraping Twitter data in Python. It's essentially an unofficial Python Twitter API built with multiple purposes in mind: scraping tweets and user info, but also posting tweets, liking tweets, and following users.

Now, you might be wondering: why are we starting off with a library? Aren't we creating a web scraper from scratch? Of course, you can write a Python Twitter scraper from scratch. But web scraping these days requires far more than a few actions on a page.

If you want your Python Twitter scraper to succeed at its task, you need to back it with a whole infrastructure for talking to the website and avoiding blocks (proxies, headers, IP address rotation, and so on). Twikit takes care of the request and login plumbing for us, so all we have left to do is build the scraper itself. Proxies and IP rotation are a separate concern that we'll come back to in step 11. So let's get started.

2. Set up your environment

Before you dive into writing the script, make sure you have Python installed on your computer. We also highly recommend creating a virtual environment for the new project.

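To install the libraries for this project, run this pip command:

```shell
pip install twikit pandas
```

Note that recent Twikit releases document an asynchronous Client, in which calls such as login() have to be awaited. The code in this tutorial follows the synchronous style of older releases, so check which version you have installed.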
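Because Twikit's interface has changed between releases, it helps to know which version pip actually installed. The sketch below reads it with the standard library's importlib.metadata. The rule that major version 2 and later use the awaitable (async) Client is our assumption, not something this tutorial's code was tested against, so confirm it in Twikit's release notes. installed_version and looks_async are hypothetical helper names.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def looks_async(twikit_version: str) -> bool:
    """Guess whether a Twikit release uses the awaitable Client.

    ASSUMPTION: the async Client starts at major version 2.
    Check this against Twikit's release notes before relying on it.
    """
    major = int(twikit_version.split(".")[0])
    return major >= 2


if __name__ == "__main__":
    v = installed_version("twikit")
    if v is None:
        print("twikit is not installed in this environment")
    elif looks_async(v):
        print(f"twikit {v}: calls like client.login() probably need to be awaited")
    else:
        print(f"twikit {v}: the synchronous calls in this tutorial should apply")
```

Run it inside the same virtual environment you installed Twikit into; otherwise it reports the version (or absence) from a different interpreter.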

3. Import libraries

Now that the installation is done, the first part of your script imports the libraries you'll be using: twikit for interacting with Twitter data, json for JSON processing, and pandas for data handling.

```python
from twikit import Client
import json
import pandas as pd
```

4. Initialize the client

To interact with Twitter data, you'll need to initialize a Client object from twikit. This object allows you to perform various operations like logging in, fetching tweets, etc. Make sure to replace 'en-US' with the relevant language/locale if necessary.

```python
client = Client('en-US')
```

5. Login with provided user credentials

These days, to access most data on Twitter, you'll need to log in. Logging in with the sole purpose of scraping is frowned upon and discouraged by Twitter, but it's impossible to get any real Twitter data from behind Twitter's login wall without logging in. So for educational purposes, we're going to show you that it's possible with this open-source library:

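```python
client.login(auth_info_1='yourusername', password='yourpassword')  # first run only
client.save_cookies('cookies.json')  # first run only: persist the session
client.load_cookies(path='cookies.json')  # later runs: reuse the saved session
```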

You will need to replace 'yourusername' and 'yourpassword' with your Twitter credentials.

To avoid endangering your account by logging in every time you scrape Twitter, save your cookies once and reuse them. After logging in and saving the cookies the first time, comment out the login and save_cookies lines and load the cookies directly in subsequent runs. (If you keep save_cookies without logging in, it would overwrite your saved session.) Reusing a session this way also helps you avoid overloading the website, getting flagged as suspicious, or getting blocked.
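If you'd rather not comment code in and out, the first-run/later-run logic can be automated. A minimal sketch, assuming the synchronous login(), save_cookies(), and load_cookies() calls shown above. ensure_session is a hypothetical helper name, and it treats the mere existence of the cookie file as a valid session, so it won't detect expired cookies.

```python
import os


def ensure_session(client, cookies_path, username, password):
    """Reuse saved cookies when available; otherwise log in once and save them.

    `client` is expected to behave like the twikit Client used in this
    tutorial (synchronous login / save_cookies / load_cookies calls).
    Returns a short string describing what happened.
    """
    if os.path.exists(cookies_path):
        # Later runs: skip the login request entirely
        client.load_cookies(cookies_path)
        return "loaded cookies"
    # First run: log in, then persist the session for next time
    client.login(auth_info_1=username, password=password)
    client.save_cookies(cookies_path)
    return "logged in"
```

For example, ensure_session(client, 'cookies.json', 'yourusername', 'yourpassword') logs in on the first run and only loads cookies afterwards. Delete cookies.json to force a fresh login.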

6. Begin X.com scraping – scrape tweets with Python

Once you have an initialized Client, you can pick what data you want to scrape. Besides reading data, the library offers methods for creating new tweets, sending messages, and more. We're only interested in the methods that get data.

To scrape tweets from a specific user, you'll need to use the get_user_by_screen_name method with the user's screen name as the parameter.

Then, you can fetch a given number of tweets with the get_tweets method, whose first argument ('Tweets') selects which of the user's timelines to read. In our example, we're getting the 5 most recent tweets from the public account zelenskyyua.

```python
user = client.get_user_by_screen_name('zelenskyyua')
tweets = user.get_tweets('Tweets', count=5)
```
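A get_tweets call typically gives you a single page of results. To collect more, you can keep requesting the next page until you have enough. The helper below is a sketch under an assumption: that Twikit's result object is iterable and has a next() method returning the following page, as the Twikit README shows for search results in its synchronous releases. collect_tweets is a hypothetical name, and the stopping rules (limit reached, or an empty page) are our own choice.

```python
def collect_tweets(first_page, limit):
    """Gather up to `limit` items by following a paginated result.

    ASSUMPTION: `first_page` is iterable and has a `next()` method that
    returns the following page. An empty page ends the loop.
    """
    collected = []
    page = first_page
    while True:
        items = list(page)
        if not items:
            break  # no more results
        collected.extend(items)
        if len(collected) >= limit:
            break  # stop before requesting a page we don't need
        page = page.next()
    return collected[:limit]


# Example wiring (not executed here):
# user = client.get_user_by_screen_name('zelenskyyua')
# tweets = collect_tweets(user.get_tweets('Tweets', count=20), limit=50)
```

Each next() call is another request to X, so combine this with the pacing advice in step 11 when you ask for many pages.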

7. Store scraped X.com data

After getting the list of tweets from Twitter, you can loop through them and store the scraped tweet properties. Our example collects such properties as the creation date, favorite count, and full text of each tweet.

```python
tweets_to_store = []

for tweet in tweets:
    tweets_to_store.append({
        'created_at': tweet.created_at,
        'favorite_count': tweet.favorite_count,
        'full_text': tweet.full_text,
    })
```
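If you plan to collect more fields, it can help to move the loop into a small function. This sketch reads attributes with getattr() and a None default, so a tweet object that lacks a field doesn't crash the whole run. Any field you add beyond the three above is an assumption to check against your Twikit version, and tweets_to_records is a hypothetical helper, not part of Twikit.

```python
# Attributes to copy from each tweet object. These three are the ones used
# above; add others only after confirming they exist in your Twikit version.
FIELDS = ("created_at", "favorite_count", "full_text")


def tweet_to_record(tweet, fields=FIELDS):
    """Turn one tweet object into a plain dict, using None for missing attributes."""
    return {name: getattr(tweet, name, None) for name in fields}


def tweets_to_records(tweets, fields=FIELDS):
    """Same result as the loop above, reusable for any list of tweet-like objects."""
    return [tweet_to_record(t, fields) for t in tweets]
```

Calling tweets_to_records(tweets) produces the same list as the loop above, and passing a different fields tuple changes the columns without touching the loop.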

8. Analyze and save tweet data

Now that you have your tweet data, you can use pandas, which we imported earlier, to convert it into a DataFrame. This makes it easier to sort, filter, and analyze the extracted Twitter data. Let's first save the data to a CSV file and then print it sorted by favorite count.

```python
df = pd.DataFrame(tweets_to_store)
df.to_csv('tweets.csv', index=False)
print(df.sort_values(by='favorite_count', ascending=False))
```
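The created_at values are stored as text, so before filtering by date it helps to parse them. This standard-library sketch assumes they use the classic Twitter timestamp layout, for example "Wed Oct 10 20:19:24 +0000 2018"; check a row of your tweets.csv to confirm. recent_top_tweets is a hypothetical helper that keeps tweets from the last N days and sorts them by likes. In pandas, pd.to_datetime(df['created_at'], format=TWITTER_DATE_FORMAT) converts the whole column the same way.

```python
from datetime import datetime, timedelta, timezone

# ASSUMPTION: created_at uses Twitter's classic timestamp layout,
# e.g. "Wed Oct 10 20:19:24 +0000 2018". %a and %b expect English
# day/month names, which is what the default C locale provides.
TWITTER_DATE_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def parse_created_at(value):
    """Convert a created_at string into a timezone-aware datetime."""
    return datetime.strptime(value, TWITTER_DATE_FORMAT)


def recent_top_tweets(records, days, now=None):
    """Keep records newer than `days` days and sort them by likes, highest first."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    recent = [r for r in records if parse_created_at(r["created_at"]) >= cutoff]
    return sorted(recent, key=lambda r: r["favorite_count"], reverse=True)
```

Because the parsed datetimes carry their UTC offset, comparing them against a timezone-aware cutoff works without any manual conversion.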

9. Export X.com data as JSON (or other format)

If you prefer the data in JSON format — say, for integration into web applications — you can convert your list to a JSON string and print it.

```python
print(json.dumps(tweets_to_store, indent=4))
```

You can export the data as CSV, JSON, or HTML; with pandas, that's df.to_csv(), df.to_json(), or df.to_html(). From there, you can load the scraped data into other tools, such as a time-series database like QuestDB.
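By default, json.dumps escapes non-ASCII characters, so non-English tweets turn into \u sequences. To save the list to disk with the original characters, you can pass ensure_ascii=False and open the file as UTF-8. The default=str argument is a fallback for values json can't serialize natively, such as datetime objects if you parsed created_at. The function names and file names here are illustrative.

```python
import json


def save_json(records, path):
    """Write records as a pretty-printed JSON array, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=4, default=str)


def save_json_lines(records, path):
    """Write one JSON object per line (JSON Lines), handy for appending new batches."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False, default=str) + "\n")
```

For example, save_json(tweets_to_store, 'tweets.json') writes the same data the print statement shows, but to a file you can hand to a web application.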

10. See the full code for building an X.com scraper in Python

So this is what the full code looks like. Pretty neat, isn't it? It would have been much longer without these libraries. And that's one of the great things about programming: you can share your tools and build even better tools together.

```python
from twikit import Client
import json
import pandas as pd

client = Client('en-US')

# After the first run (once cookies.json exists), comment out the login
# and save_cookies calls; otherwise an empty session could overwrite the file
client.login(
    auth_info_1='yourusername',
    password='yourpassword',
)
client.save_cookies('cookies.json')

# Load the saved session cookies
client.load_cookies(path='cookies.json')

# Fetch the 5 most recent tweets from a public account
user = client.get_user_by_screen_name('zelenskyyua')
tweets = user.get_tweets('Tweets', count=5)

tweets_to_store = []
for tweet in tweets:
    tweets_to_store.append({
        'created_at': tweet.created_at,
        'favorite_count': tweet.favorite_count,
        'full_text': tweet.full_text,
    })

# We can make the data into a pandas DataFrame and store it as a CSV file
df = pd.DataFrame(tweets_to_store)
df.to_csv('tweets.csv', index=False)

# Pandas also allows us to sort or filter the data
print(df.sort_values(by='favorite_count', ascending=False))

# We can also print the data as a JSON object
print(json.dumps(tweets_to_store, indent=4))
```

So there you have it, a step-by-step guide to scraping, storing, and exporting Twitter data using Python. Keep in mind that this script is simply a great starting point for developing more complex data analysis tools or integrating Twitter data into your applications.
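If you installed a recent Twikit release, its Client is asynchronous: according to Twikit's README, calls such as login() have to be awaited. A minimal sketch of steps 6 and 7 in that style follows. It assumes get_user_by_screen_name() and get_tweets() keep the names used in this tutorial and become coroutines, so double-check them against your version. fetch_recent_tweets is a hypothetical helper that takes the client as a parameter, which also lets you try it without network access.

```python
import asyncio


async def fetch_recent_tweets(client, screen_name, count=5):
    """Async version of steps 6-7, assuming Twikit's coroutine-based Client."""
    user = await client.get_user_by_screen_name(screen_name)
    tweets = await user.get_tweets('Tweets', count=count)
    return [
        {
            'created_at': tweet.created_at,
            'favorite_count': tweet.favorite_count,
            'full_text': tweet.full_text,
        }
        for tweet in tweets
    ]


# Example wiring (not executed here; assumes an async Twikit release and an
# existing cookies.json from a previous login):
#
# from twikit import Client
# client = Client('en-US')
# client.load_cookies('cookies.json')
# records = asyncio.run(fetch_recent_tweets(client, 'zelenskyyua'))
```

The returned list has the same shape as tweets_to_store, so the pandas and JSON steps work unchanged.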

11. Avoid getting blocked

So we're done, right? The issue with scraping nowadays is that it requires much more than just a scraper. Websites are more than happy to block you for visiting way too often, even if all you're doing is just copying some tweets into an Excel spreadsheet. That means you will need to gear up your Python scraper.
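One simple way to gear up is to slow down: pause between requests and back off when a request fails. The sketch below wraps any call in retries with exponential backoff plus random jitter. It catches every exception for simplicity; in real code you'd narrow that to the rate-limit or network errors your library raises (check Twikit's docs for its exception classes). The delays are illustrative, not limits that X publishes, and call_with_backoff and polite_pause are hypothetical helper names.

```python
import random
import time


def call_with_backoff(fn, *args, retries=4, base_delay=2.0, max_delay=60.0,
                      sleep=time.sleep, **kwargs):
    """Call fn(*args, **kwargs), retrying with exponential backoff on failure.

    The wait before retry n is min(max_delay, base_delay * 2**n) plus up to
    one second of random jitter, so parallel jobs don't retry in lockstep.
    """
    for attempt in range(retries + 1):
        try:
            return fn(*args, **kwargs)
        except Exception:  # narrow this to rate-limit / network errors in real code
            if attempt == retries:
                raise  # out of retries: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt) + random.uniform(0, 1)
            sleep(delay)


def polite_pause(min_s=2.0, max_s=5.0, sleep=time.sleep):
    """Sleep a random interval between consecutive requests."""
    sleep(random.uniform(min_s, max_s))
```

For example, user = call_with_backoff(client.get_user_by_screen_name, 'zelenskyyua') retries a failed lookup a few times before giving up, and calling polite_pause() between pages spaces out your requests.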

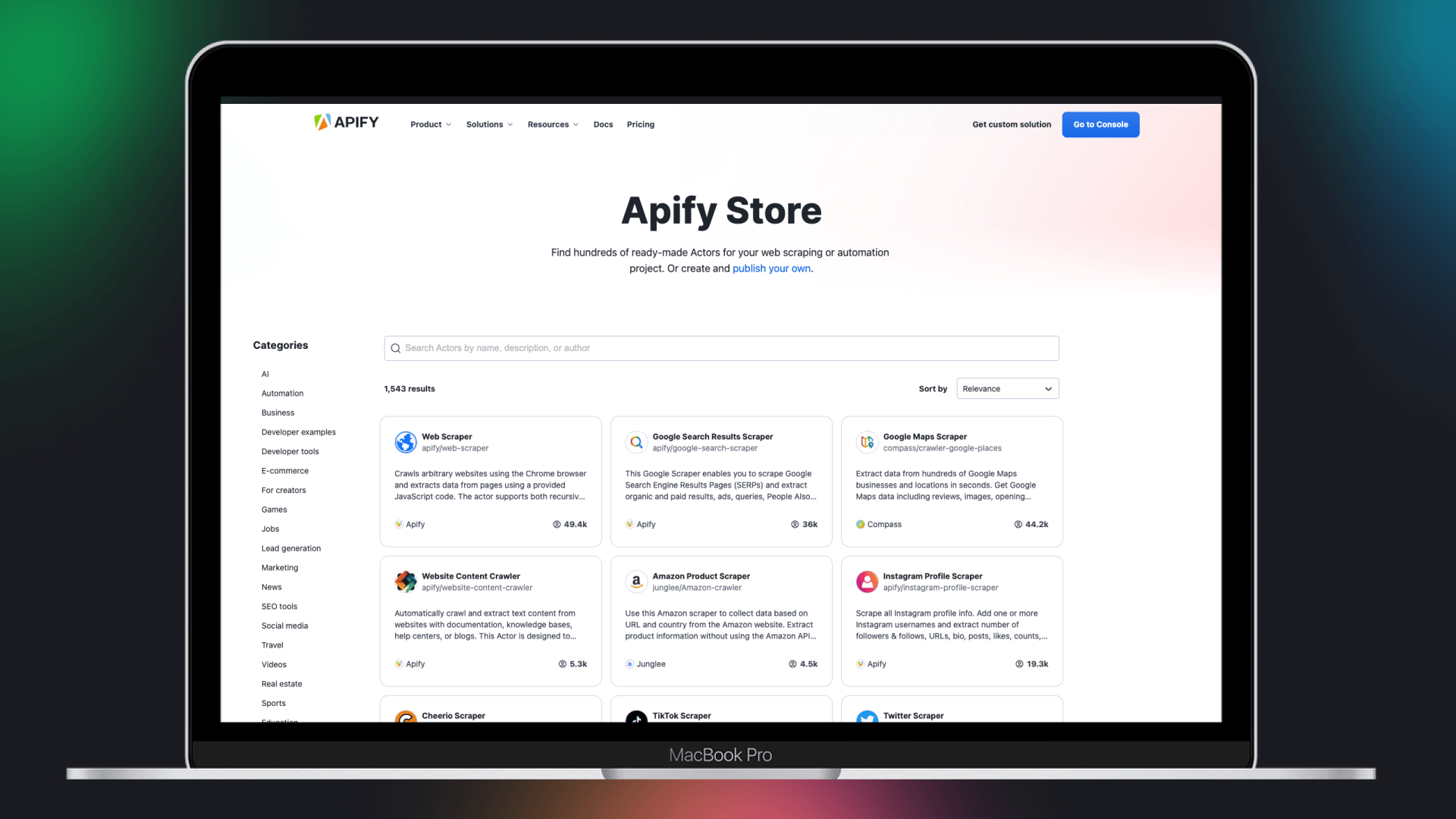

💡 How to scrape X.com data with a ready-made scraper

For reliable and convenient web scraping, you'll need proxies; for monitoring results and scheduling your scraper's runs, some webhooks; and for plugging in scraped data without too much hassle, your own API or integrations. Luckily, such comprehensive solutions already exist. Any scraper that lives on the Apify platform gets multiple lives.

With plenty of ready-made scraping tools at your fingertips, just hop over to Apify Store, choose a profile from X.com to scrape, and kick off the scraper. Once it's done, grab your extracted data in Excel, JSON, HTML, or CSV format, take a quick peek, and download it or stash it away for later. Feel free to tweak the input settings and explore the vast world of data waiting to be uncovered from tweets and other content on X.com!

Here's a short video demo showing you how to go about scraping Twitter the easy way:

❓FAQ

Can I scrape Twitter data using Python with the Twitter API?

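Yes, although strictly speaking, using the API isn't scraping. If you need reliable, structured access and can pay for it, you can use the official Twitter API together with a client library such as Tweepy, which handles authentication and turns API responses into Python objects. Here's a basic example of using the Twitter API in Python with Tweepy to fetch recent tweets from a user's timeline. It calls the v1.1 user_timeline endpoint, which may not be available on every API access tier; for the v2 API, Tweepy provides tweepy.Client and its get_users_tweets() method.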

```python
import tweepy

# Authenticate to the Twitter API with OAuth 1.0a user credentials
consumer_key = 'your_consumer_key'
consumer_secret = 'your_consumer_secret'
access_token = 'your_access_token'
access_token_secret = 'your_access_token_secret'

# OAuth1UserHandler is the current name of the older tweepy.OAuthHandler
auth = tweepy.OAuth1UserHandler(
    consumer_key, consumer_secret, access_token, access_token_secret
)
api = tweepy.API(auth)

# Use the API to fetch recent tweets from a user
tweets = api.user_timeline(screen_name='zelenskyyua', count=5)

for tweet in tweets:
    print(tweet.text)
```

Can you scrape Twitter with Python?

Yes. You can build an independent web scraper or web crawler using Python for any website, including Twitter.

Is web scraping Twitter legal?

You're not the first person to ask if Twitter scraping is legal, and you probably won't be the last. Scraping automates tasks that a person could also do manually, and collecting publicly available data this way is generally legal. So provided that you are only obtaining data that is openly available, the answer is yes.

On top of that, in 2022 the US Ninth Circuit Court of Appeals ruled in hiQ Labs v. LinkedIn that scraping publicly accessible data likely does not violate the Computer Fraud and Abuse Act. Note that the ruling concerned that federal statute; it does not override a website's terms of service. Web scraping also made it into the public eye during the Johnny Depp v. Amber Heard defamation trial, where it was used as a method of investigation.

Does Twitter ban scrapers?

Yes. X.com prohibits scraping its platform without permission and actively bans scrapers that violate its terms of service. Scraping isn't illegal in itself, but under Twitter's Terms of Use, unauthorized scraping may result in the suspension or termination of your access.

What can Twitter data be used for?

For a Twitter user or marketer, access to data about how others engage with their tweets can be vital for developing a brand. For companies, gathering data across Twitter can help them gain a competitive advantage. Academic researchers and journalists can use the data to understand how people interact and to identify trends before they rise to the surface. Once you have the data, what you do with it is up to you.

🐦 Want to scrape X.com data in other ways?

Apify Store also offers other Twitter (X.com) scrapers to carry out smaller scraping tasks. You only need to insert a keyword or a URL and start your run to extract your results, including Twitter followers, profile photos, usernames, tweets, images, and more.

The full range of Twitter scrapers includes:

- 🅇 X Twitter
- 🐦 Twitter Scraper
- 📱 Tweet Scraper
- 🔗 Twitter URL Scraper
- 🎥 Twitter Video Downloader
- ❌ Best Twitter Scraper
- 🧞‍♂️ Twitter Profile Scraper
- 🛰️ Twitter Spaces Scraper
- 🧭 Twitter Explorer