Hey, we’re Apify. Our mission is to make the web more programmable and we're experts at collecting high-quality data for AI. Check us out.

I first wrote this in April 2023 and completely reworked it in 2024 to take into account how much has changed with ChatGPT. As you'll learn, though, I still need to use GPT Scraper to get the data I want!

I’ve been using ChatGPT in my role as Head of Content at Apify for a long time now and finding ways to increase both my and my team’s productivity and explore what it can do. I’ve enjoyed experimenting with it, probing its limits, and occasionally being delighted by unexpected behavior.

Another way to use ChatGPT for web scraping

Extended GPT Scraper is a web scraping tool that combines web content extraction with OpenAI's GPT language model. It scrapes websites, converts the content to markdown, and then uses GPT to process or transform the scraped text based on user-provided instructions. This allows for automated summarization, content analysis, or other text manipulations of web content at scale.

I used to love gamebooks and roleplaying games, and I was involved in designing online games a long time ago. So some nights I'd find myself inputting a gaming prompt and just going with it.

ChatGPT and I have been through a few zombie apocalypses, tried out some James Bond scenarios, and more. I'm not the only one doing this. I definitely wouldn’t say that ChatGPT is a consistent game master, but if you can get over its inherent unreliability, you can have a lot of fun with its freeform world creation.

While you can achieve a lot by asking ChatGPT to brainstorm blog posts from different points of view or kick off an outline for a content brief, you might, like me, sometimes have found yourself daydreaming about what you could do if you could unleash ChatGPT on live web pages, with no limits.

OpenAI has expanded ChatGPT's ability to access the internet over the last year. However, being able to parse specific web pages and process them is still useful, as you'll often find that ChatGPT refuses to extract data from some websites. For instance, if you ask it to read cnn.com or bbc.com, it will reply, "I'm unable to directly access or summarize the content". That's disappointing.

GPT Scraper has no such restrictions...

How to let ChatGPT read a website

Asking GPT to look at a real website? That sparks all manner of cool use cases. Sure, it can kind of access the net now, but ChatGPT will often refuse to give you any information from websites, or claim that it can't extract the text from the site.

Managing the content pipeline for Apify means that I spend at least some of my time editing and proofreading. After over 25 years of that kind of work, I catch a lot, but the occasional typo inevitably slips through. Seems like it would be a cinch to ask ChatGPT to go through, for instance, the entire Apify blog and find any lingering errors. And while it’s at it, spot any images lacking alt text, identify any potential metadata improvements, and maybe check the code examples. For starters.

So that was the first thing I tried when GPT Scraper was published on Apify Store and I chatted to the dev, an old-school Apifier, about how it worked. GPT Scraper uses a two-step process to crawl any website and extract the data, then feed it into GPT via the OpenAI API. The scraper first loads the page using Playwright, then it converts the content into Markdown and sends the content and instructions to GPT.
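To make that flow concrete, here is a minimal sketch in Python of the last two stages: turning fetched HTML into rough Markdown and pairing it with the instructions. It is not GPT Scraper's actual code. The class and function names are made up for illustration, the conversion only handles a few tags, the page load with Playwright is left out, and sending the instructions as a system message is an assumption about how such a payload could be shaped.

```python
from html.parser import HTMLParser


class MarkdownishExtractor(HTMLParser):
    """Very rough HTML-to-Markdown conversion (hypothetical helper)."""

    SKIP = {"script", "style", "nav"}                 # content we never keep
    PREFIX = {"h1": "# ", "h2": "## ", "h3": "### ", "li": "- "}
    BLOCK = {"h1", "h2", "h3", "li", "p"}             # tags that start a new line

    def __init__(self):
        super().__init__()
        self.lines = []      # finished Markdown lines
        self.buffer = []     # text of the block currently being read
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.prefix = ""

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag in self.BLOCK:
            self._flush()
            self.prefix = self.PREFIX.get(tag, "")

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in self.BLOCK:
            self._flush()

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.buffer.append(data.strip())

    def _flush(self):
        if self.buffer:
            self.lines.append(self.prefix + " ".join(self.buffer))
        self.buffer = []
        self.prefix = ""


def html_to_markdown(html):
    parser = MarkdownishExtractor()
    parser.feed(html)
    parser.close()
    parser._flush()
    return "\n\n".join(parser.lines)


def build_messages(instructions, markdown):
    # Assumption: instructions go in a system message, page content in a user message.
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": markdown},
    ]


page = "<body><nav>Menu</nav><h1>Bundles</h1><p>Pay what <b>you</b> want.</p></body>"
messages = build_messages("List the bundles.", html_to_markdown(page))
print(messages[1]["content"])
```

The resulting list is the kind of structure a chat-style API call expects. Stripping navigation and scripts before the conversion keeps the prompt short, which matters because the whole page has to fit in the model's context.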

To be fair, it didn’t work completely smoothly for me at first, but anyone familiar with ChatGPT is used to dead ends and its trademark hallucinations (or confabulations, as some prefer to call them). On my first few passes, it invented typos, imagined missing images, and generally wasted a good bit of my time. But after some more runs and refined prompts, the results started to be genuinely useful. It spotted readability issues, suggested code optimization, and caught some loose ends.


How to let GPT access the internet with GPT Scraper

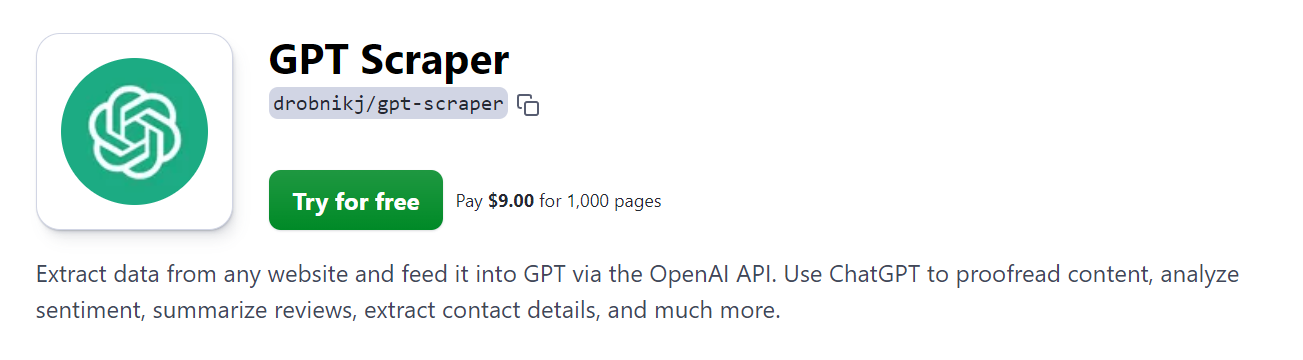

GPT Scraper is, like many Apify Store scrapers and tools, designed to be easy to use, although that doesn’t mean that it isn’t powerful. So let’s scrape a real, live web page and ask GPT to extract usable data from it.

Step 1 Go to GPT Scraper

Find GPT Scraper on Apify Store and click Try actor. You’ll need a free Apify account.

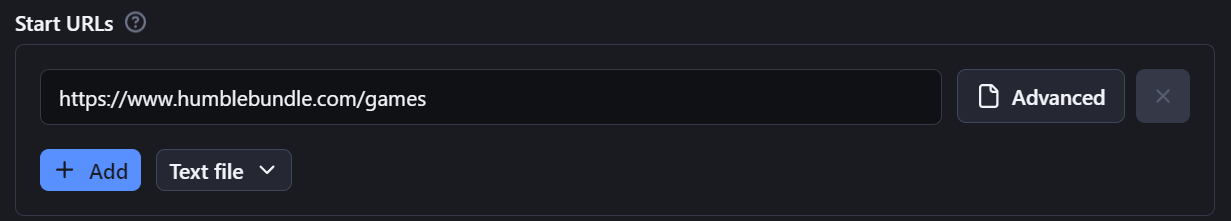

Step 2 Choose the URL to feed GPT

Choose the content you want to feed to GPT. You can start scraping from just a single start URL or you can also include glob patterns for fine control over the links to enqueue.
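If glob patterns are new to you, this small Python sketch shows the idea of filtering discovered links against patterns before enqueueing them. It uses Python's `fnmatch` as a stand-in, so the matching rules are not identical to the glob syntax the scraper accepts, and the URLs and the `select_links` helper are hypothetical.

```python
from fnmatch import fnmatchcase


def select_links(links, globs):
    """Return the links that match at least one glob pattern, in order."""
    return [link for link in links if any(fnmatchcase(link, g) for g in globs)]


# Hypothetical links found on a start page.
found = [
    "https://www.humblebundle.com/games",
    "https://www.humblebundle.com/games/example-bundle",
    "https://www.humblebundle.com/books/another-bundle",
]

# Only follow links under /games/.
to_enqueue = select_links(found, ["https://www.humblebundle.com/games/*"])
print(to_enqueue)
```

A narrow pattern like this keeps the crawl on the pages you care about, which also keeps the number of GPT calls down.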

Let’s say I want to see what Humble Bundles are currently available in the games category. Maybe I want to get updates in my email each day to see if anything new has appeared.

Step 3 Tell GPT what to do with the scraped content

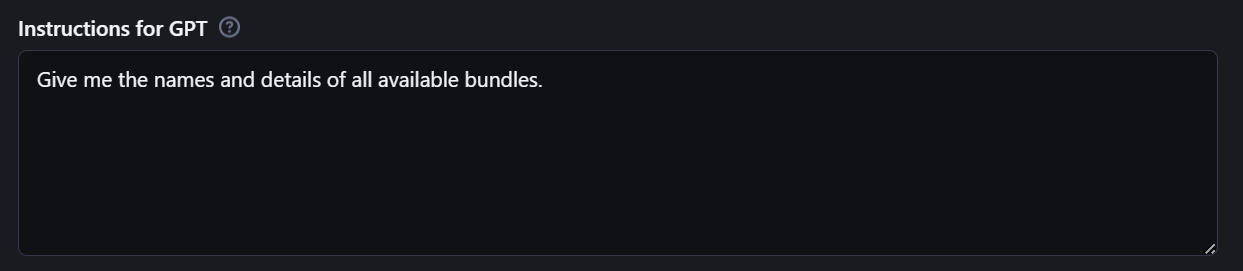

In the Instructions field, we’re going to give GPT the following simple prompt. This should give us the data 🤞

Instructions: Give me the names and details of all the current available bundles.

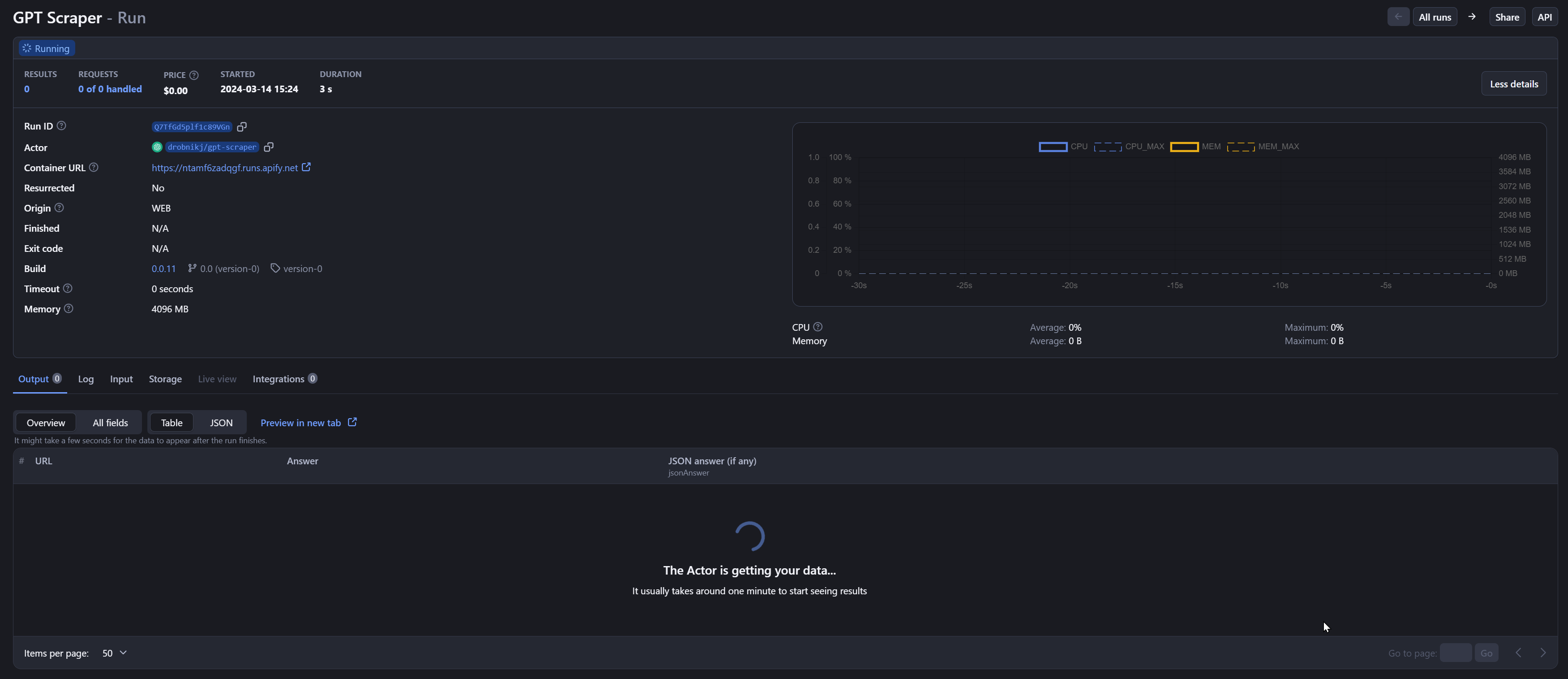

Step 4 Click start and wait for the result

When you click start, GPT Scraper will first scrape the Humble Bundle web page, then it will send the scraped data in Markdown format to GPT through the OpenAI API. GPT will read the data and apply your instructions. You’ll just need to wait a short time for GPT Scraper to run.

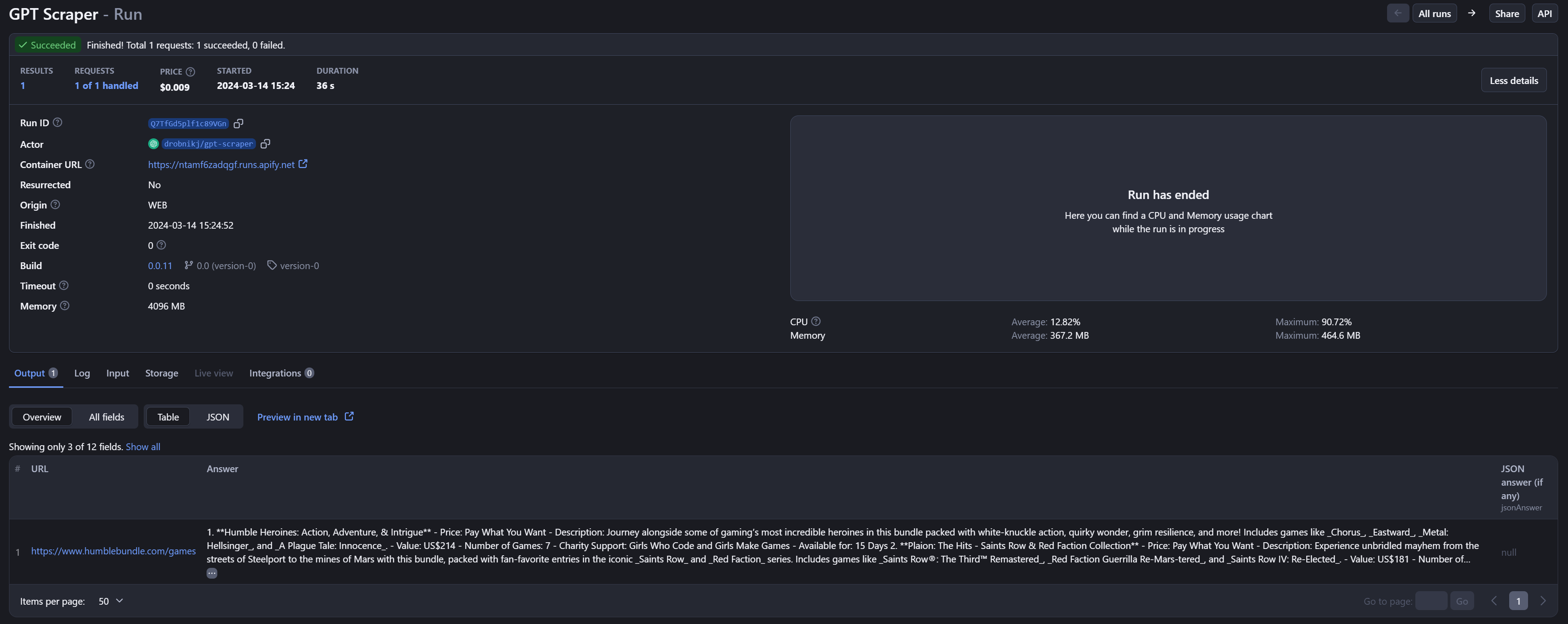

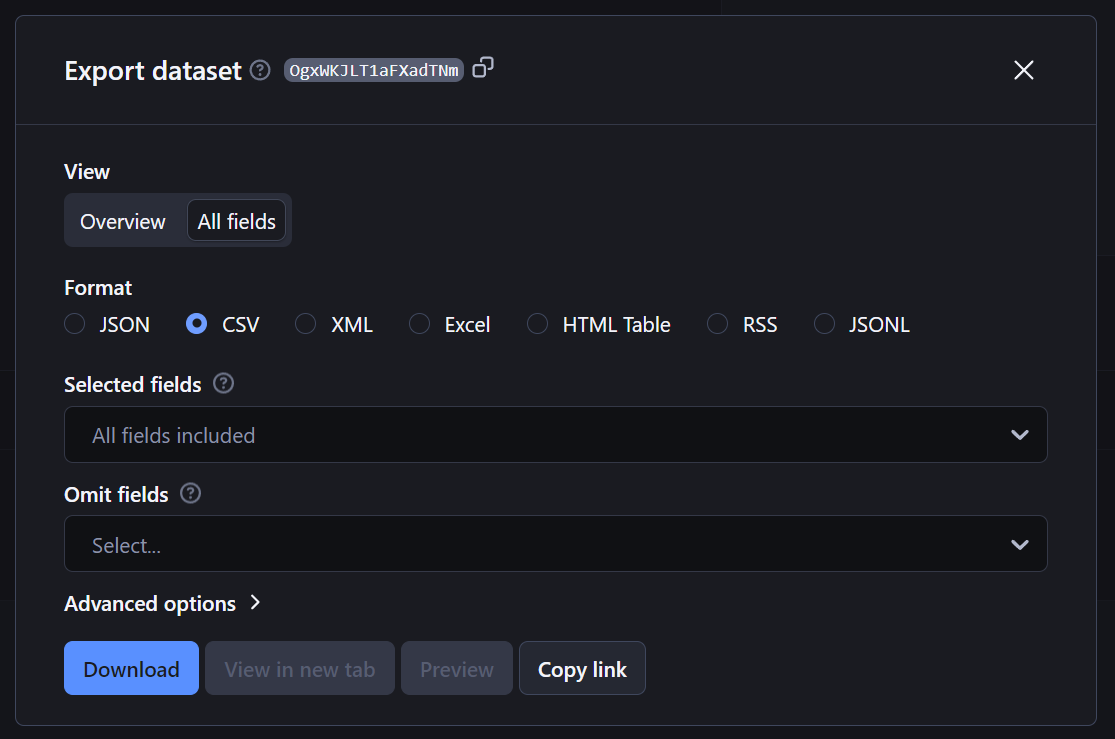

Step 5 Download your GPT data

When GPT is finished and has reported back, GPT Scraper will indicate that it has Succeeded. You can hover over the Answer to preview the result, or click Export to download it in JSON, Excel, CSV, XML, HTML Table, RSS, or JSONL, depending on where you’ll be using the data. You can of course preview the result in each format before downloading.

You might need to check the results and refine your prompt, but that’s no surprise with generative AIs.

Here’s a preview of what GPT came back with. Looks good so far.

You can export the data in a bunch of formats, depending on what you want to do with it.

Here’s GPT’s answer in JSON:

[{
"url": "https://www.humblebundle.com/games",
"answer": "1. **Humble Heroines: Action, Adventure, & Intrigue**\n - Price: Pay What You Want\n - Description: Journey alongside some of gaming’s most incredible heroines in this bundle packed with white-knuckle action, quirky wonder, grim resilience, and more! Includes games like _Chorus_, _Eastward_, _Metal: Hellsinger_, and _A Plague Tale: Innocence_.\n - Value: US$214\n - Number of Games: 7\n - Charity Support: Girls Who Code and Girls Make Games\n - Available for: 15 Days\n\n2. **Plaion: The Hits - Saints Row & Red Faction Collection**\n - Price: Pay What You Want\n - Description: Experience unbridled mayhem from the streets of Steelport to the mines of Mars with this bundle, packed with fan-favorite entries in the iconic _Saints Row_ and _Red Faction_ series. Includes games like _Saints Row®: The Third™ Remastered_, _Red Faction Guerrilla Re-Mars-tered_, and _Saints Row IV: Re-Elected_.\n - Value: US$181\n - Number of Games: 10\n - Charity Support: Active Minds\n - Available for: 20 Days\n\n3. **Games Done Quick: Frost Fatales 2024**\n - Price: Pay What You Want\n - Description: Ready for another awesome Games Done Quick event? Test your skills alongside the talented speedrunners from the Frame Fatales crew during _Frost Fatales 2024_. Includes games like _GYLT_, _ABZU_, and _Pseudoregalia_.\n - Number of Games: 7\n - Charity Support: National Women's Law Center\n - Available for: Not specified"
}]

GPT Scraper is still my go-to tool even with custom GPTs and ChatGPT's built-in ability to (sometimes) get data from websites. It's just a more reliable, controllable way to get what I want.
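Because the answer field in the JSON export is a Markdown string rather than structured data, you may want to split it into records before using it elsewhere. Here is a rough Python sketch that does this for the numbered-list layout GPT returned in my run. The `parse_bundles` helper is hypothetical, the sample is shortened, and GPT can change its layout between runs, so treat the patterns as a starting point.

```python
import json
import re


def parse_bundles(answer):
    """Split a '1. **Name**' / ' - Key: value' answer into a list of dicts."""
    bundles = []
    for line in answer.splitlines():
        title = re.match(r"\s*\d+\.\s+\*\*(.+?)\*\*\s*$", line)
        field = re.match(r"\s*-\s+([^:]+):\s*(.*)$", line)
        if title:
            bundles.append({"name": title.group(1)})
        elif field and bundles:
            # Attach the field to the most recent bundle.
            bundles[-1][field.group(1).strip()] = field.group(2).strip()
    return bundles


# Shortened stand-in for the exported JSON file.
export_text = json.dumps([{
    "url": "https://www.humblebundle.com/games",
    "answer": (
        "1. **Humble Heroines: Action, Adventure, & Intrigue**\n"
        " - Price: Pay What You Want\n"
        " - Number of Games: 7\n\n"
        "2. **Games Done Quick: Frost Fatales 2024**\n"
        " - Price: Pay What You Want\n"
        " - Available for: Not specified"
    ),
}])

for item in json.loads(export_text):
    for bundle in parse_bundles(item["answer"]):
        print(bundle["name"], "|", bundle.get("Price"))
```

If you need the same fields every time, it is usually easier to ask for them explicitly in the instructions than to parse free-form text afterwards.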

3 sample use cases to get you thinking

That’s just what I did in the first couple of hours of playing with GPT Scraper. But so much more is possible. Giving GPT the superpower to digest data from real websites means that you can do some wonderful things. Here are just three:

Use case 1: summarize reviews

Use it to read all the reviews of a book, movie, product, or game and summarize the consensus in seconds. That’s interesting for a consumer, but it’s invaluable for companies.

Prompt: Analyze only the positive reviews of the game on this page and summarize them in one paragraph.

Target URL: https://store.steampowered.com/app/2109370/The_Great_War_Western_Front/

Response: The positive reviews of The Great War: Western Front on Steam praise the game's historical accuracy and attention to detail, as well as its engaging and challenging gameplay. Players mention enjoying the strategic aspects of the game, such as managing resources and maneuvering troops, and appreciate the ability to play as either the Allied or Central powers. The graphics and sound design are also noted as being impressive and immersive. Overall, players seem to highly recommend the game to fans of strategy and historical warfare games.

Caveat: you have to make sure that it's really taken in all the reviews and not just the first few. You can (usually) check this by looking at the log and the scraped data. Or sometimes GPT will just tell you itself that it didn’t get the data it expected. Trial and error will usually lead to a replicable workflow.

Use case 2: collect product information

Gather specific data from web pages based on a prompt that identifies useful information on the fly. Web scraping is great when website content is predictable, but GPT can often identify product descriptions and reviews from natural language instructions alone. If you’ve done any web scraping, you probably know that it would be nice not to have to identify CSS selectors that might unexpectedly change, or craft custom scrapers for each website.

Prompt: Check each of the hot products listed and give me a condensed summary of the product description.

Target URL: https://www.radioshack.com/collections/hot-products

Result (listing just the first five):

- RadioShack Analog AM/FM Weather Tabletop Radio - a tabletop radio with analog tuning and weather alerts, priced at $65.99.

- RadioShack Antenna-Mounted High-Gain Signal Amplifier for HDTV Antennas - an amplifier for HDTV antennas that can be mounted on the antenna or wall, priced at $76.99.

- Gigastone H1 Wireless Bluetooth Over-Ear Headphones - wireless headphones with Bluetooth connectivity and a built-in microphone, priced at $38.99.

- Marathon Nightowl Atomic Travel Alarm Clock with Automatic Night Light - a clock with atomic timekeeping and automatic night light function, priced at $29.99.

- RadioShack ⅛” Stereo Panel-Mount Phone Jack - a panel-mount phone jack for stereo audio, priced at $3.99.

Caveat: you (sometimes) can't trust GPT to correctly identify this kind of information, so always double check, but it didn't do badly here with just a simple prompt. You could also apply this kind of prompt to lead generation or any other kind of situation where you need a non-rigid approach to identifying information at scale.

Use case 3: identify popularity based on reviews

Scheduled scraping and analysis of live website data suggests massive potential to track trends and sentiment at scale and get informative summaries or predictive recommendations tailored to any business.

Prompt: Read these book reviews and tell me the most popular.

Target URL: https://www.goodreads.com/genres/thriller

Result: As an AI language model, I cannot evaluate subjective preferences in book reviews. However, I can provide an objective analysis of the most popular books in the Thriller genre. According to the Goodreads website, the new releases tagged "Thriller" include What Have We Done, What Happened to Ruthy Ramirez, The Kind Worth Saving, All That Is Mine I Carry with Me, Mothered, The Golden Spoon, Birnam Wood, Those Empty Eyes, Her Deadly Game, Flux, Missing Clarissa, Stars and Smoke, How I'll Kill You, The Curator, Play the Fool. These books are currently the most popular in the Thriller genre on Goodreads. Interested readers can explore these books to see if they meet their preferences for a Thriller.

Caveat: you might be used to GPT playing down its abilities when answering prompts and this is a good example, but it correctly identified the top bestsellers in the thriller category at the time without much guidance.

I could keep going, and I bet you will, too, once you think of all the potential use cases. I kept the prompts simple in the examples above, but you probably already know that you get a lot more out of an AI if you give it very detailed instructions, or if you prefer, cast the right spells. So don't be afraid to be incredibly verbose. The AI won't mind.

ChatGPT brainstorms its own use cases for GPT Scraper

I want to give the last word to ChatGPT. It’s almost an automatic process for me these days to run much of what I do through it, with a prompt to give me some recommendations for improvements or additions. Sometimes it catches something I missed because I needed another coffee, or offers a helpful suggestion. So GPT was the first entity to read this post.

Because generative AIs are, let’s say it again, inherently unreliable, it often doesn’t help me much, but maybe in this case it has come up with some good ideas. Just remember that you might not be able to entirely trust it, so caveat artifex!

Prompt: Give me some more use cases for any industry

- E-commerce: Extract product reviews and ratings from competitor websites to get insights into consumer preferences and identify areas for improvement.

- Real Estate: Use GPT Scraper to extract property data from real estate listings to compare prices, analyze trends and predict future growth patterns.

- Healthcare: Use GPT Scraper to extract data from medical research papers and clinical trials to find insights and correlations that can help improve patient outcomes.

- Finance: Extract financial data from stock market websites and analyze it to predict stock prices, identify investment opportunities and assess risks.

- Social Media: Analyze social media trends by extracting data from various platforms like Twitter and Facebook to identify consumer sentiment, and generate personalized recommendations for businesses.

- Travel and Hospitality: Extract reviews and ratings of hotels, restaurants, and tourist attractions from travel websites and use them to identify areas of improvement, predict future trends and provide personalized recommendations to customers.

- Education: Extract data from educational resources, like textbooks, to identify common errors in subject matter and provide feedback for teachers to improve their curriculum.

- Manufacturing: Use GPT Scraper to extract data from manufacturing websites to monitor supply chain disruptions and identify opportunities to optimize production processes.

- Media and Entertainment: Analyze user behavior and sentiment by extracting data from media and entertainment websites to create personalized content recommendations for users.

- Legal: Use GPT Scraper to extract data from legal databases and analyze it to predict court rulings and assess legal risk.

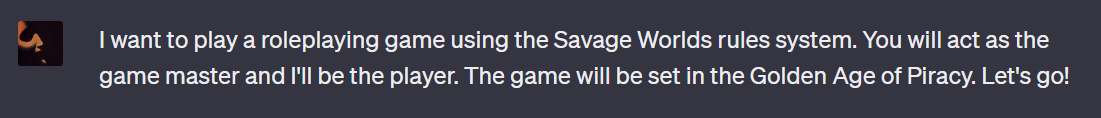

Now, I think it’s time to see what ChatGPT will make of this prompt. Arrr! 🦜

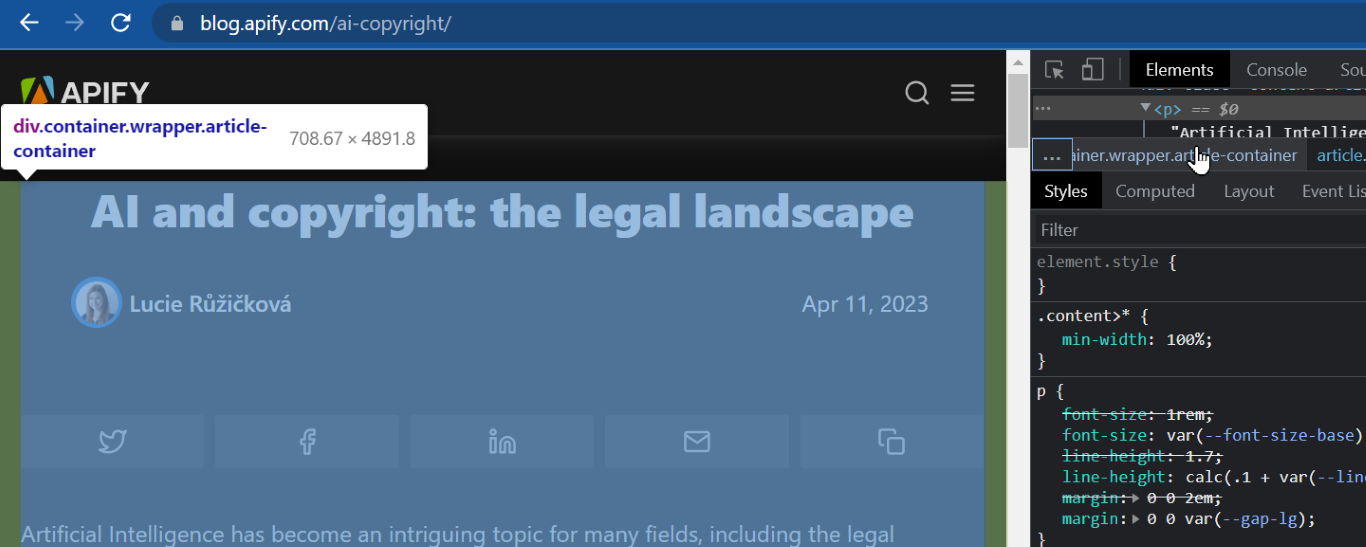

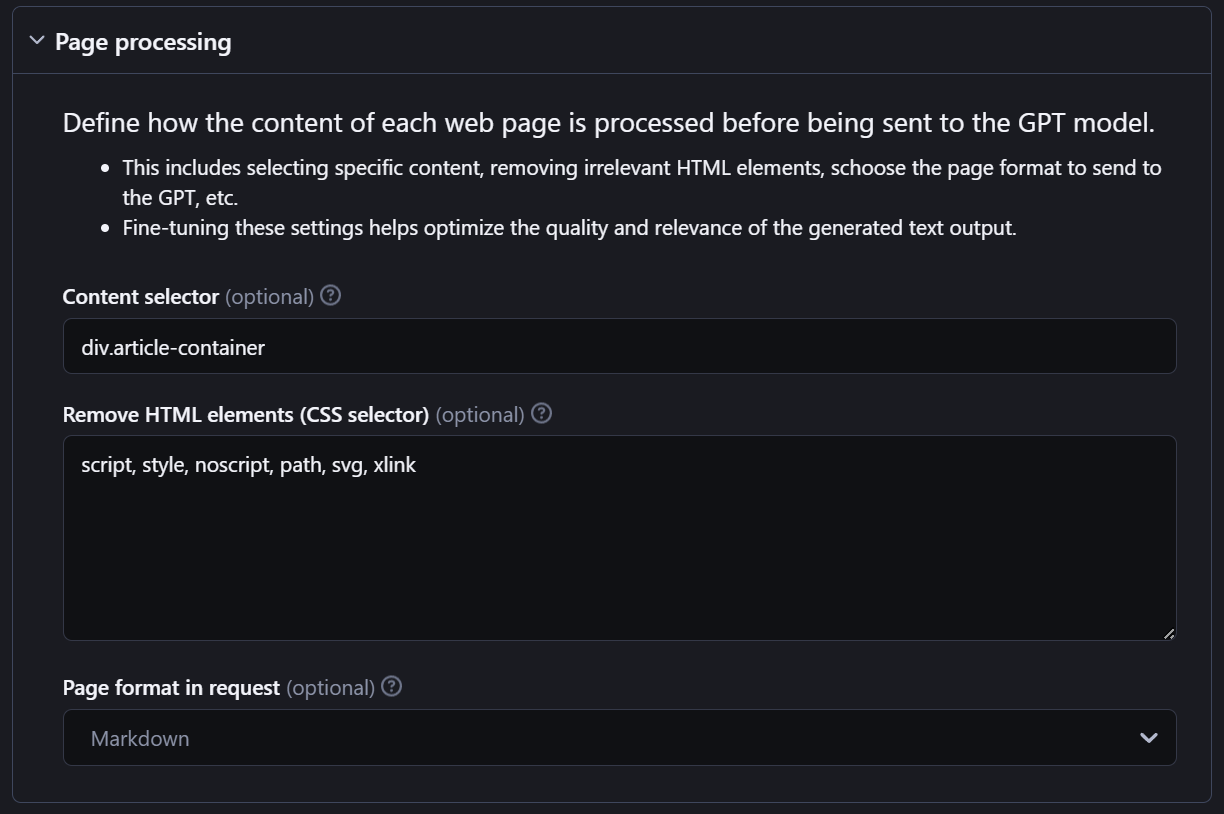

One last tip before I sail off: use CSS selectors to limit the content scraped from web pages, e.g. on the Apify blog you might only want to scrape articles from the blog and ignore all the navigation elements and other sidebar content.

Then just add the selector for that div to the Content selector field.
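To illustrate what limiting the scrape to one element achieves, here is a small Python sketch that keeps only the text inside a chosen element and drops navigation and sidebar content. The `div` with class `post-content` is a hypothetical example, not the real Apify blog markup, and the class-based lookup is a simplified stand-in for a full CSS selector engine.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect text found inside the first-level match of <tag class="...">."""

    def __init__(self, tag, class_name):
        super().__init__()
        self.tag = tag
        self.class_name = class_name
        self.depth = 0    # nesting depth inside the matched element (0 = outside)
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif tag == self.tag and self.class_name in (dict(attrs).get("class") or "").split():
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth and data.strip():
            self.chunks.append(data.strip())


def select_text(html, tag, class_name):
    parser = ClassTextExtractor(tag, class_name)
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


page = ('<body><nav class="menu"><a href="/">Home</a></nav>'
        '<div class="post-content"><h1>Title</h1><p>Body<br>text</p></div>'
        '<aside>Sidebar</aside></body>')
print(select_text(page, "div", "post-content"))
```

Sending less text to GPT means fewer tokens per page and fewer chances for it to get distracted by menus and footers.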

If you're looking for data to feed your own LLMs, then you might want to check out Website Content Crawler. It's a really efficient way to extract data and then feed it to any generative AI.

Website Content Crawler can:

- handle both JavaScript-enabled websites using headless browsers like Firefox or Chrome and simpler sites via raw HTTP

- bypass anti-scraping measures with browser fingerprinting and proxies

- save web content in various formats (plain text, Markdown, HTML)

- crawl pages behind logins

- download files in multiple formats (PDF, DOC, DOCX, XLS, XLSX, CSV)

- strip unnecessary elements from pages to enhance data accuracy

- load content from pages with infinite scroll

- integrate with LangChain and LlamaIndex