Manually downloading or saving images from a website is an exhausting task, especially when it’s done in bulk. The best solutions are to scrape all the images from a website at once using a programming language like Python or to use a ready-made scraper from a platform like Apify.

In this tutorial, we’ll guide you through all the steps of scraping images from a single website/URL using both code and a pre-built no-code scraper, from A to Z.

How to scrape images from a website

This section takes you from zero to hero in this context, explaining how to scrape every image from a website or a single URL using Crawlee for Python, along with beautifulsoup .

Once you follow the below steps, you’ll have an image scraper ready to use and also to be deployed on a platform like Apify for automated scraping.

- Set up the environment.

- Writing the code

- Deploying on Apify (optional)

If you don’t like to write the code from scratch, however, you can use one of the ready-made scrapers on Apify; we’ll guide you through that process at the end of this tutorial.

1. Setting up the environment

Here's what you’ll need to continue with this tutorial:

- Python 3.9+: Make sure you have installed Python 3.9 or higher.

- Create a virtual environment (optional but recommended): It’s always a good practice to create a virtual environment (

venv) to isolate dependencies and avoid conflicts between packages.

python -m venv myenv

source myenv/bin/activate # On macOS/Linux

myenv\\Scripts\\activate # On Windows

- Required libraries: You’ll only need to install

crawleeandhttpxfor this program.

pip install 'crawlee[beautifulsoup]'

To import the libraries, use the following code:

import asyncio

from urllib.parse import urljoin # built-in

import httpx

from crawlee.crawlers import BeautifulSoupCrawler, BeautifulSoupCrawlingContext

from crawlee.storages import KeyValueStore, Dataset

If you want to avoid local downloads and set-up, skip the process and deploy on Apify.

2. Writing the code

Unlike scraping other elements, scraping images is a pretty straightforward task, and it doesn’t require much inspection of elements.

All you need to know about scraping images from websites is this:

- Images are typically contained in

<img>tags with the source URL in thesrcattribute - The image URLs may be relative (e.g.,

/images/photo.jpg) or absolute (e.g.,https://example.com/images/photo.jpg) - To extract all the relative URLs, you need to convert all the relative URLs to absolute URLs.

- When downloading images, you should:

- Send proper headers like User-Agent to avoid being blocked.

- Handle possible HTTP errors.

- Save the image with an appropriate file extension (

webp,png, and such.)

With that in mind, you can write the code, starting with the configuration:

START_URL = "https://apify.com/store"

Crawlee automatically manages storage via KeyValueStore and Dataset, which Apify relies on for data access. Therefore, you do not need to create manual directories for storage of data.

Then, create an async function to initiate the crawler like below:

async def main():

crawler = BeautifulSoupCrawler(

max_requests_per_crawl=100,

max_request_retries=2, # Allow retries for failed requests

)

kv_store = await KeyValueStore.open()

dataset = await Dataset.open()

# Create single httpx client for reuse

async with httpx.AsyncClient(headers={"User-Agent": "Mozilla/5.0"}) as client:

@crawler.router.default_handler

Next, a nested function to extract the images:

async def request_handler(context: BeautifulSoupCrawlingContext) -> None:

context.log.info(f"Processing {context.request.url}")

soup = context.soup

image_urls = []

# Extract image URLs

for img_tag in soup.find_all("img"):

img_src = img_tag.get("src")

if img_src:

absolute_url = urljoin(context.request.url, img_src)

image_urls.append(absolute_url)

context.log.info(f"Found {len(image_urls)} images on {context.request.url}")

# Process images

for idx, img_url in enumerate(image_urls):

try:

response = await client.get(img_url)

response.raise_for_status()

# Get file extension from Content-Type

content_type = response.headers.get("Content-Type", "image/jpeg")

file_extension = content_type.split("/")[-1].split(";")[0]

file_name = f"image_{idx}_{context.request.id}.{file_extension}"

# Store image in KeyValueStore

await kv_store.set_value(file_name, response.content, content_type=content_type)

context.log.info(f"Stored: {file_name}")

# Store metadata in Dataset

dataset_entry = {

"image_url": img_url,

"file_key": file_name,

"source_page": context.request.url

}

await dataset.push_data(dataset_entry)

except httpx.HTTPError as e:

context.log.error(f"Failed to download {img_url}: {e}")

# Run the crawler

await crawler.run([START_URL])This request_handler function works as follows:

- Parses the HTML with

beautifulsoup - Locates all img tags and extracts their src attributes

- Converts these image URLs to absolute URLs (using

urljoins). Note that this is done regardless of whether it’s an absolute URL or a relative one, simplifying the process.

For each image URL, it downloads the content asynchronously with the user agent, saves it with the appropriate file extension, logs success, records metadata in JSON format, and continues the crawling process from the specified starting URL.

Finally, call the function as this and run the script.

if __name__ == "__main__":

asyncio.run(main())

Once Crawlee shows the following results, the scraping is finished, and the images are available in your selected directory.

And voila! You have successfully built an image scraper using Crawlee for Python.

The complete code

import asyncio

from urllib.parse import urljoin

import httpx

from crawlee.crawlers import BeautifulSoupCrawler, BeautifulSoupCrawlingContext

from crawlee.storages import KeyValueStore, Dataset

START_URL = "https://apify.com/store"

async def main():

crawler = BeautifulSoupCrawler(

max_requests_per_crawl=100,

max_request_retries=2, # Allow retries for failed requests

)

kv_store = await KeyValueStore.open()

dataset = await Dataset.open()

# Create single httpx client for reuse

async with httpx.AsyncClient(headers={"User-Agent": "Mozilla/5.0"}) as client:

@crawler.router.default_handler

async def request_handler(context: BeautifulSoupCrawlingContext) -> None:

context.log.info(f"Processing {context.request.url}")

soup = context.soup

image_urls = []

# Extract image URLs

for img_tag in soup.find_all("img"):

img_src = img_tag.get("src")

if img_src:

absolute_url = urljoin(context.request.url, img_src)

image_urls.append(absolute_url)

context.log.info(f"Found {len(image_urls)} images on {context.request.url}")

# Process images

for idx, img_url in enumerate(image_urls):

try:

response = await client.get(img_url)

response.raise_for_status()

# Get file extension from Content-Type

content_type = response.headers.get("Content-Type", "image/jpeg")

file_extension = content_type.split("/")[-1].split(";")[0]

file_name = f"image_{idx}_{context.request.id}.{file_extension}"

# Store image in KeyValueStore

await kv_store.set_value(file_name, response.content, content_type=content_type)

context.log.info(f"Stored: {file_name}")

# Store metadata in Dataset

dataset_entry = {

"image_url": img_url,

"file_key": file_name,

"source_page": context.request.url

}

await dataset.push_data(dataset_entry)

except httpx.HTTPError as e:

context.log.error(f"Failed to download {img_url}: {e}")

# Run the crawler

await crawler.run([START_URL])

if __name__ == "__main__":

asyncio.run(main())

3. Deploying on Apify

Even though you have built an image scraper now, it lacks 2 important features: automated scraping and convenient storage. To solve this, you can deploy the scraper on a platform like Apify.

Follow these steps to deploy this script on Apify:

#1. Create an account on Apify

- You can get a forever-free plan - no credit card is required.

#2. Create a new Actor

- First, install Apify CLI using NPM or Homebrew in your terminal.

- Create a new Actor with Apify’s Crawlee + Beautifulsoup template as the base so that you don’t have to create every file from scratch.

apify create my-images-actor -t python-crawlee-beautifulsoup

- Change the directory to the Actor:

cd my-images-actor

#3. Change the main.py file

In order to deploy on Apify, you’ll have to make some minor changes in the main.py script, such as below:

- Using

from apify import Actorandasync with Actorcontext. This is because it requiresActorfor the scraper’s lifecycle management (at lines 7 and 14). - A bit more advanced error logs, and pushing metadata for failed downloads to

Datasetwithstatus: "failed_download”, for better error tracking (at lines 69-83). - The dataset_entry now also includes status and prefixes

file_keywith"images/"for more structured output (at lines 62-67) - Using

Dataset.push_datafor metadata storage on Apify (at line 84)

The main.py script can be found in the src found in your actor. Change it to the script with the above changes, which can be also found on GitHub.

#4. Deploy

- Type

apify loginto log in to your account. You can either use the API token or verify through your browser. - Once logged in, type

apify push, and you’re good to go.

Once deployed, head over to Apify Console > Your Actor > Click “Start” to run the scraper on Apify.

Once run, the output would be something like below:

Scrape images with a ready-made Actor

Building a scraper from scratch has its own advantages, like its flexibility, but it’s not without its downsides either. As you continue to use the scraper, you’ll have to work with more advanced error handling, storage issues, and such.

The best solution to this would be to use a ready-made scraper on Apify. They are built with error handling and other advanced features, and you don’t have to worry about storage either.

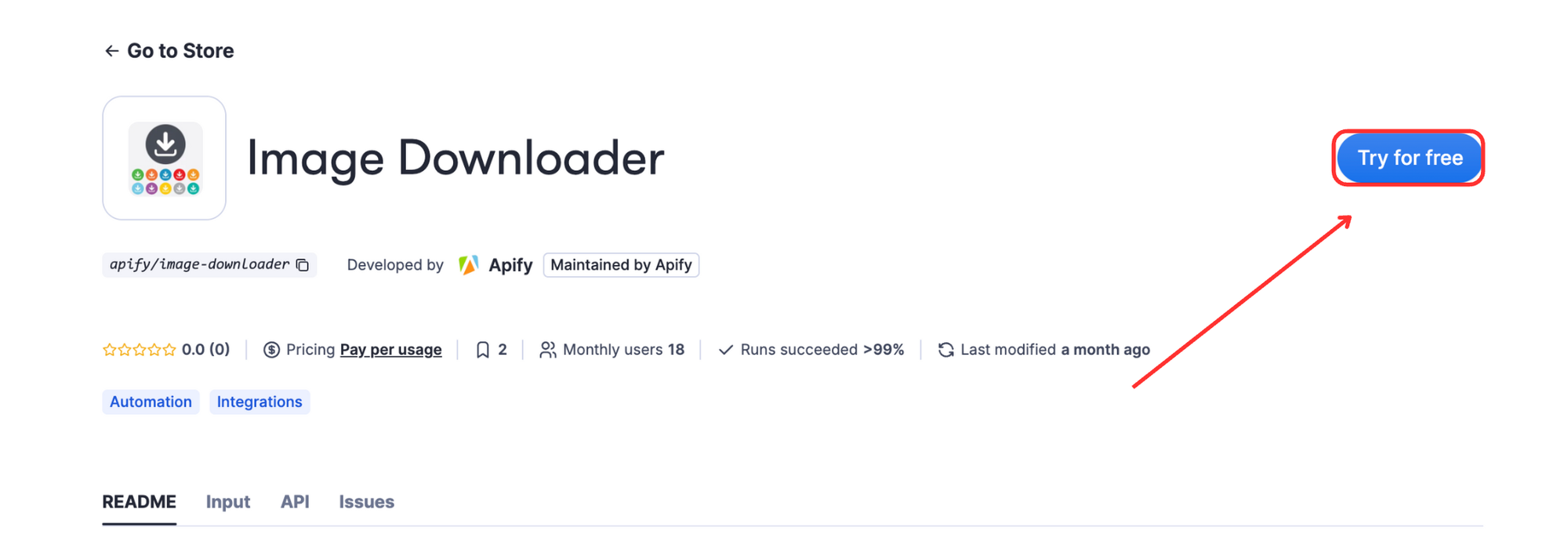

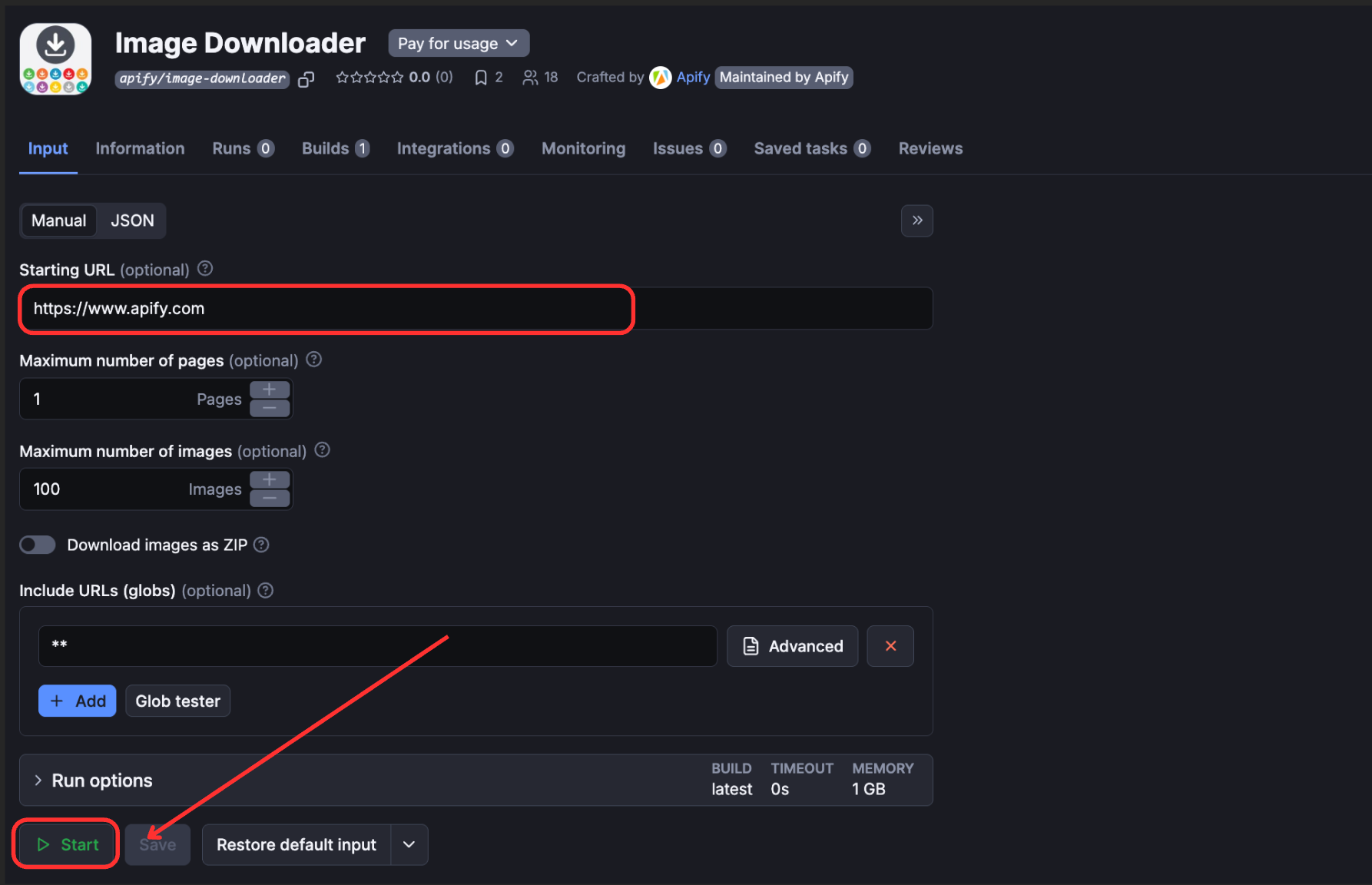

To try it out, you can go to Image Download Scraper on Apify > Try for free, and run the Actor.

In the console, fill out the entries as desired (URL, number of images or pages, etc.)

Once run, you’ll see an output like below:

Recap

Now you know how to build a web scraper to collect images from a URL using Crawlee for Python and the beautiful soup library, and deploy it on the Apify platform for easier management of the data. For an easier way to run a scraper without coding, use Apify’s ready-made scraper with advanced features.

Frequently Asked Questions (FAQs)

Can you scrape images from a website?

Yes, you can scrape images from a website by using programming libraries like BeautifulSoup or Selenium to locate image tags, extract their source URLs, and download them using HTTP requests. Or else, you can use a ready-made scraper on Apify.

Is it legal to scrape images from a website?

Yes, it is legal to scrape images from a website in certain contexts, but it comes with important limitations. Always check the website's terms of service, robots.txt file, and copyright status of images.

How to scrape images from a website?

To scrape images from a website, use a library like BeautifulSoup to parse the HTML, locate all tags, extract their src attributes, convert relative URLs to absolute ones, and download each image using HTTP requests with appropriate headers.