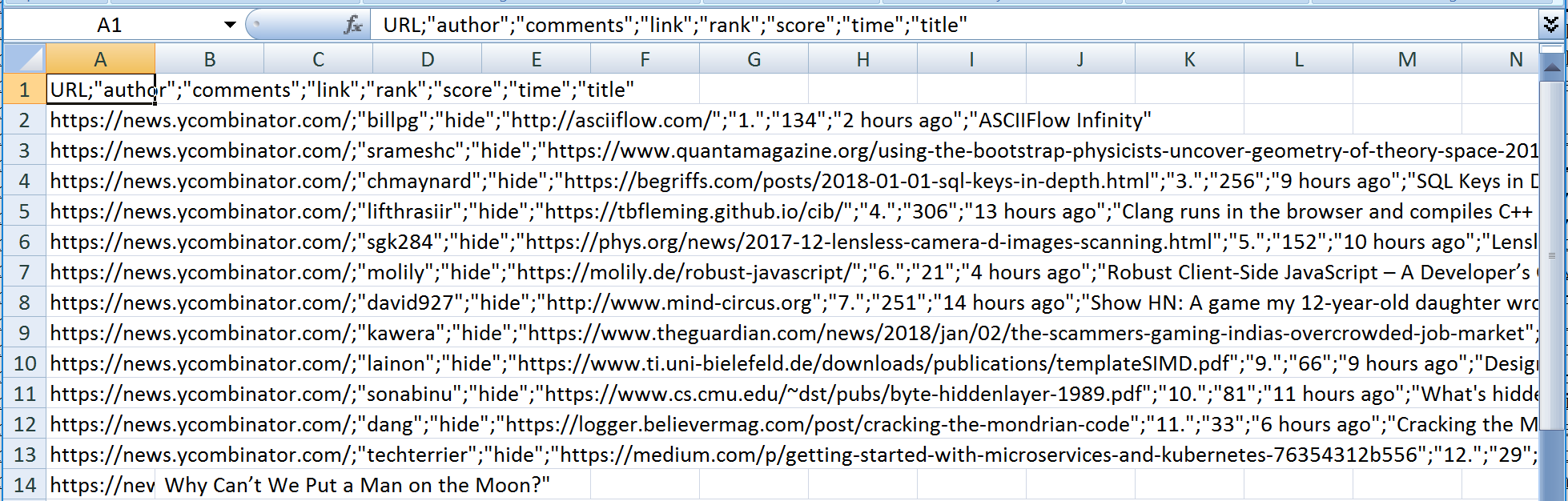

Since the early days of Apify, we have enabled our users to export crawling or web scraping results in tabular form as comma-separated values (CSV) files. Microsoft Excel can open CSV files in theory, but in practice it often fails. Depending on the version of Excel, the local settings of the computer, and probably the weather outside, Excel sometimes opens CSV files completely scrambled.

Although it is sometimes possible to make Excel open the CSV file correctly, e.g. by changing the encoding of the file from UTF-8 to UTF-16, by adding a byte order mark, or by changing the value-separator character, this was a huge pain for our users. So we decided to add native support for the Excel format (i.e. XLSX files).
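To make two of those workarounds concrete, here is a minimal Python sketch that writes CSV data with a UTF-8 byte order mark and a semicolon as the value separator. The function name `csv_bytes_for_excel` and the sample rows are made up for illustration. Whether Excel then opens the file correctly still depends on its version and locale, which is exactly the pain described above.

```python
import csv
import io


def csv_bytes_for_excel(rows, delimiter=";"):
    """Encode rows as CSV bytes prefixed with a UTF-8 byte order mark."""
    # newline="" lets the csv module control line endings itself.
    text = io.StringIO(newline="")
    csv.writer(text, delimiter=delimiter).writerows(rows)
    # The "utf-8-sig" codec prepends the BOM bytes EF BB BF when encoding.
    return text.getvalue().encode("utf-8-sig")


# Hypothetical sample data containing non-ASCII characters.
data = csv_bytes_for_excel([["name", "price"], ["Café crème", "3,50"]])
```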

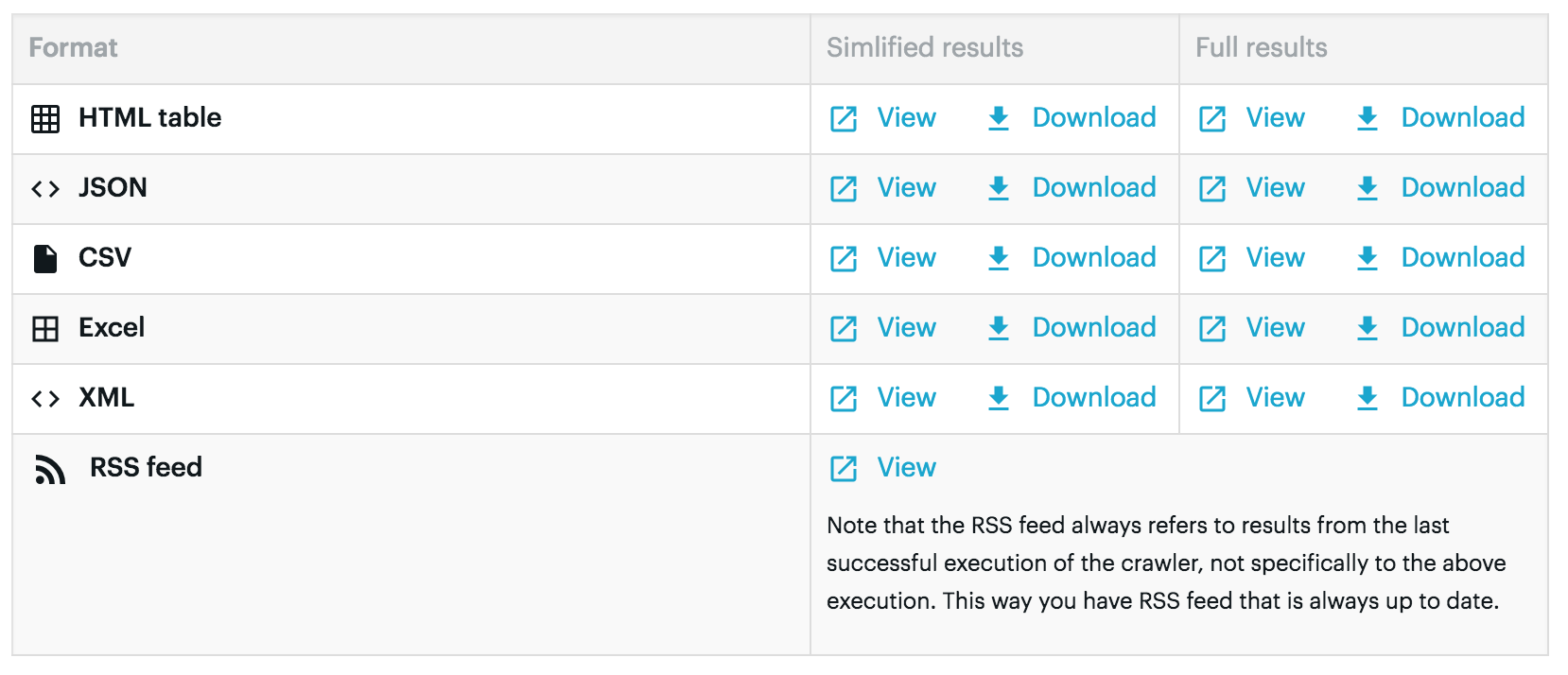

To export crawler results in Excel format, simply add the format=xlsx query parameter to the crawler run results API endpoint.

For example:

https://api.apify.com/v1/execs/tMuxNxqgPobnd7CqQ/results?attachment=1&format=xlsx&simplified=1

You can also find links to Excel files on the crawler run details page.
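The export URL shown above can also be assembled and downloaded from a script. The sketch below is only an illustration: the helper names `results_url` and `download_results` are hypothetical, and the `attachment` and `simplified` parameters are copied from the example URL without assuming anything about their meaning beyond what the URL shows.

```python
import shutil
from urllib.parse import urlencode
from urllib.request import urlopen

# Base of the crawler run results endpoint, taken from the example URL.
API_BASE = "https://api.apify.com/v1/execs"


def results_url(execution_id, fmt="xlsx", attachment=True, simplified=True):
    """Build the results URL for one crawler run (hypothetical helper)."""
    params = {
        "attachment": int(attachment),
        "format": fmt,
        "simplified": int(simplified),
    }
    return f"{API_BASE}/{execution_id}/results?{urlencode(params)}"


def download_results(execution_id, path):
    """Stream the export to a local file without loading it into memory."""
    with urlopen(results_url(execution_id)) as response, open(path, "wb") as out:
        shutil.copyfileobj(response, out)


url = results_url("tMuxNxqgPobnd7CqQ")
```

Calling `download_results("tMuxNxqgPobnd7CqQ", "results.xlsx")` would fetch the file, assuming the run exists and is accessible.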

From a technical perspective, adding support for Excel files (XLSX) was quite an interesting task. Although there are many open-source packages that enable the generation of XLSX files, most of them create the file in memory or on disk. That becomes a problem when the crawler results are large, because allocating a large amount of memory on your web server might choke it and degrade performance for other users.

Looking at the structure of the XLSX file format, it is simply a bunch of XML files compressed together into a single ZIP file. As both XML and ZIP files can be streamed on the fly, we thought it should be possible to export data to an XLSX file in streaming fashion so that the export would only consume a limited amount of memory, regardless of the data size.
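The idea can be sketched with Python's standard `zipfile` module. This is not how xlsx-write-stream is implemented, just a minimal illustration of the same principle under simplifying assumptions: a single worksheet, every value stored as an inline string, and no styles. Rows come from a generator and are written into the ZIP entry one at a time, so the full sheet XML is never held in memory.

```python
import io
import zipfile
from xml.sax.saxutils import escape

XML_DECL = '<?xml version="1.0" encoding="UTF-8" standalone="yes"?>'
PKG = "http://schemas.openxmlformats.org/package/2006"
DOC = "http://schemas.openxmlformats.org/officeDocument/2006/relationships"
MAIN = "http://schemas.openxmlformats.org/spreadsheetml/2006/main"
SHEET_TYPE = "application/vnd.openxmlformats-officedocument.spreadsheetml"

# Small fixed parts that every minimal workbook needs.
STATIC_PARTS = {
    "[Content_Types].xml": (
        f'{XML_DECL}<Types xmlns="{PKG}/content-types">'
        '<Default Extension="rels" ContentType="application/'
        'vnd.openxmlformats-package.relationships+xml"/>'
        '<Default Extension="xml" ContentType="application/xml"/>'
        '<Override PartName="/xl/workbook.xml" '
        f'ContentType="{SHEET_TYPE}.sheet.main+xml"/>'
        '<Override PartName="/xl/worksheets/sheet1.xml" '
        f'ContentType="{SHEET_TYPE}.worksheet+xml"/></Types>'
    ),
    "_rels/.rels": (
        f'{XML_DECL}<Relationships xmlns="{PKG}/relationships">'
        f'<Relationship Id="rId1" Type="{DOC}/officeDocument" '
        'Target="xl/workbook.xml"/></Relationships>'
    ),
    "xl/workbook.xml": (
        f'{XML_DECL}<workbook xmlns="{MAIN}" xmlns:r="{DOC}">'
        '<sheets><sheet name="Sheet1" sheetId="1" r:id="rId1"/></sheets>'
        '</workbook>'
    ),
    "xl/_rels/workbook.xml.rels": (
        f'{XML_DECL}<Relationships xmlns="{PKG}/relationships">'
        f'<Relationship Id="rId1" Type="{DOC}/worksheet" '
        'Target="worksheets/sheet1.xml"/></Relationships>'
    ),
}


def write_xlsx(rows, out):
    """Write an iterable of rows to `out` as XLSX, one row at a time."""
    with zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as archive:
        for name, xml in STATIC_PARTS.items():
            archive.writestr(name, xml)
        # Opening a member in "w" mode lets us compress it incrementally.
        with archive.open("xl/worksheets/sheet1.xml", "w") as sheet:
            sheet.write(f'{XML_DECL}<worksheet xmlns="{MAIN}"><sheetData>'.encode())
            for row in rows:
                cells = "".join(
                    f'<c t="inlineStr"><is><t>{escape(str(value))}</t></is></c>'
                    for value in row
                )
                sheet.write(f"<row>{cells}</row>".encode("utf-8"))
            sheet.write(b"</sheetData></worksheet>")


# Usage: a generator stands in for a large result set; BytesIO stands in
# for a file or network stream.
rows = ([f"page {i}", f"https://example.com/{i}"] for i in range(3))
buf = io.BytesIO()
write_xlsx(rows, buf)
```

In a real export the `out` argument would be a file or response stream rather than an in-memory buffer, which is what keeps memory use bounded regardless of the number of rows.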

As we couldn’t find any suitable library, we decided to build a new one. And because we’re getting so much from the open-source community, we want to give something back and make this tool open source. You can find the library published on npm as xlsx-write-stream, and the source code is on GitHub.

If you have any problem with the library, please create an issue on GitHub.