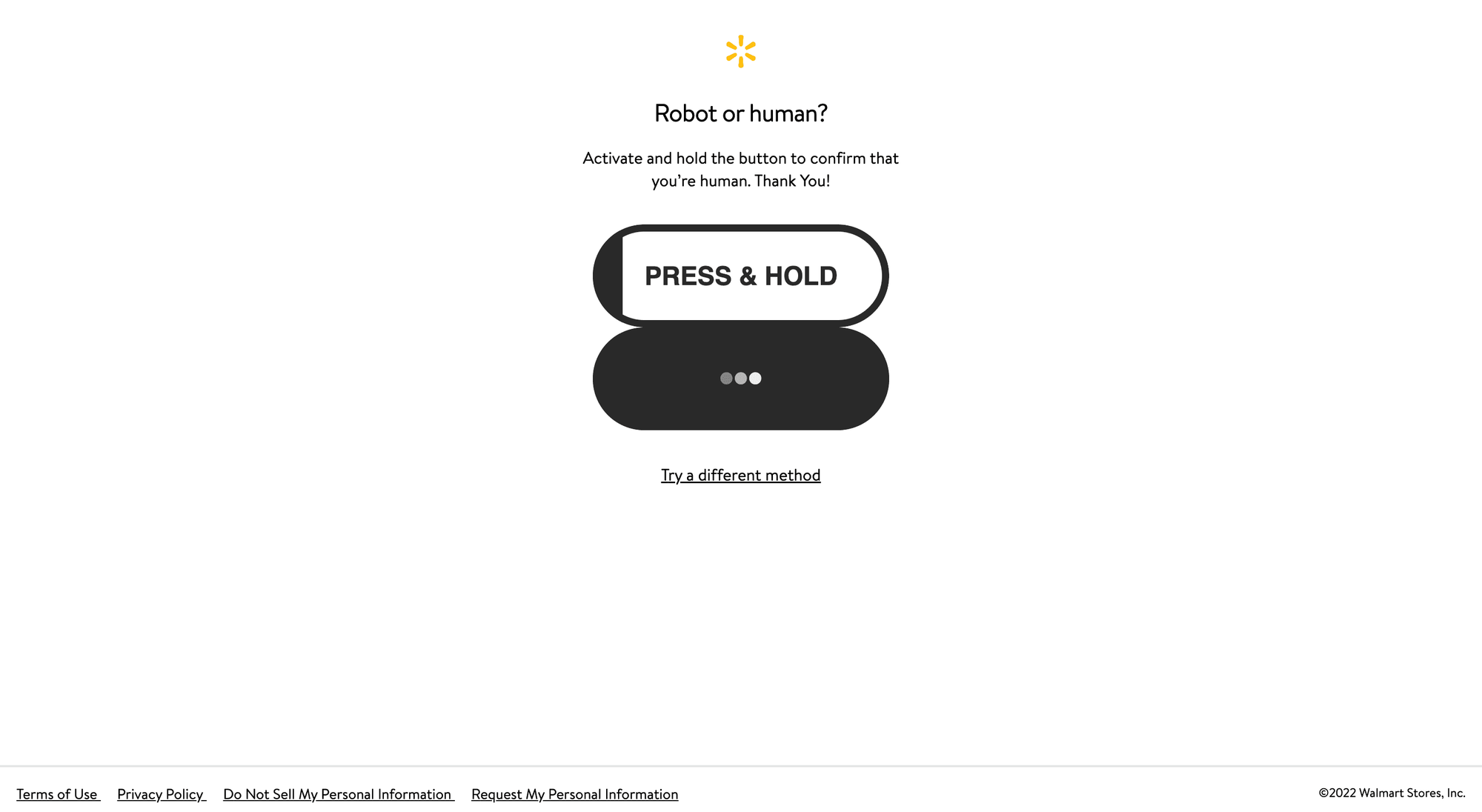

Scraping e-commerce data from Walmart’s product pages is difficult: The page structures vary, causing scrapers to fail when they encounter unexpected layouts, so you need to account for numerous edge cases.

On top of that, anti-bot defenses such as Web Application Firewalls, rate limiters, CAPTCHAs, and JavaScript challenges further complicate automated extraction.

Luckily, an all-in-one solution like Apify’s E-commerce Scraping Tool helps overcome these challenges by providing a reliable, multi-platform API for retrieving Walmart data with a simple Python script.

Apify’s E-commerce Scraping Tool for scraping Walmart

E-commerce Scraping Tool extracts product information, pricing, reviews, seller details, and more from virtually any online retail platform, marketplace, or catalog - including Amazon, Walmart, eBay, and many others.

This tool is developed and maintained by Apify as an Actor - a cloud-based automation tool that runs on the Apify platform. Actors support both no-code workflows and API integration, giving you flexibility in how you collect data.

How to scrape data from Walmart via Python API

In this step-by-step tutorial, you’ll learn how to use E-commerce Scraping Tool to scrape Walmart product data via API in Python. Here's a process overview:

- Initialize your Python project

- Select E-commerce Scraping Tool

- Set up the API integration

- Prepare your Python script for Walmart e-commerce data scraping via API

- Retrieve and configure your Apify API token

- Configure E-commerce Scraping Tool

- Complete code

- Test the scraper

Prerequisites

To follow this guide section, verify that you have:

- An Apify account

- A basic understanding of how Apify Actors work when called via API, since the E-commerce Scraping Tool’s API-based mode works in the same way

- Python 3.10+ installed on your machine

- A Python IDE (e.g., PyCharm or Visual Studio Code with the Python extension)

- Familiarity with Python syntax and making HTTP requests

1. Initialize your Python project

If you’re starting from scratch, follow these steps to set up a Python project. Begin by creating a new directory for your Walmart e-commerce scraper:

mkdir walmart-e-commerce-scraper

Then, enter the project’s folder in the terminal and initialize a virtual environment inside it:

cd walmart-e-commerce-scraper

python -m venv .venv

Load the project in your favorite Python IDE and create a new file called scraper.py. This is where you’ll place the API-based e-commerce scraping logic.

On Windows, activate the virtual environment with this command:

.venv\Scripts\activate

Equivalently, on Linux/macOS, execute:

source .venv/bin/activate

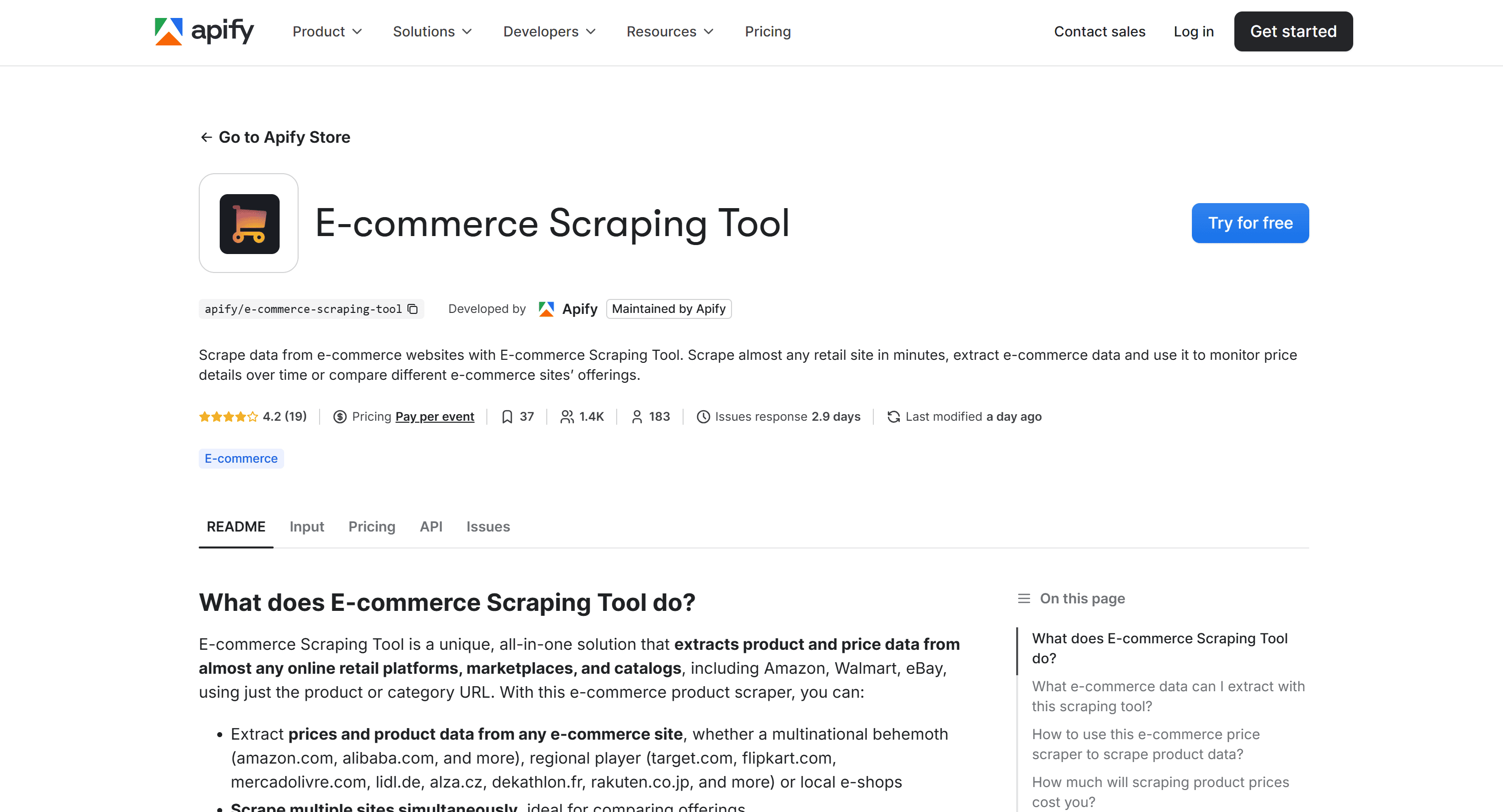

2. Select E-commerce Scraping Tool

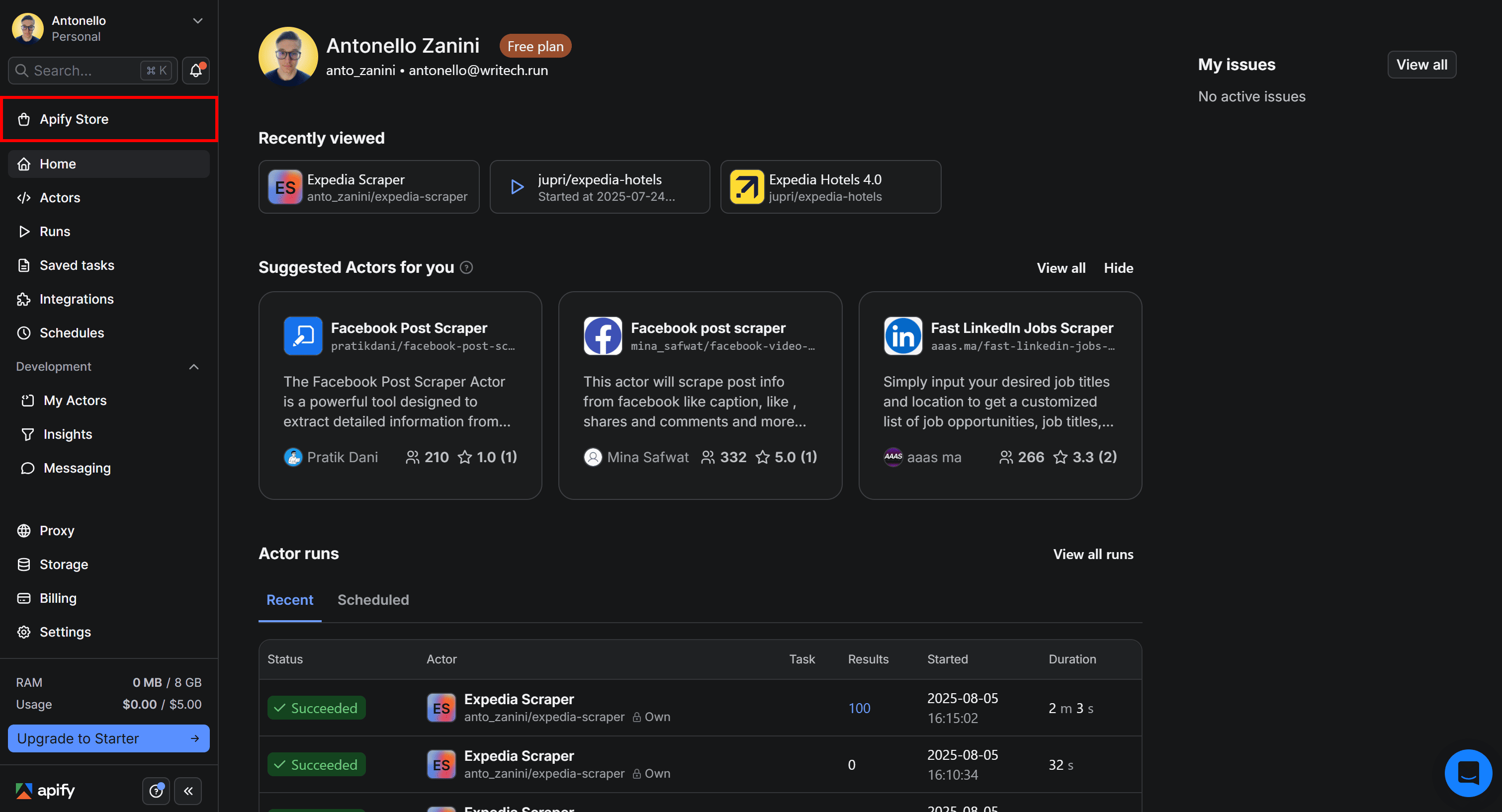

To get started with Walmart data collection via API using E-commerce Scraping Tool, log in to your Apify account. Open Apify Console and select Apify Store from the left-hand menu:

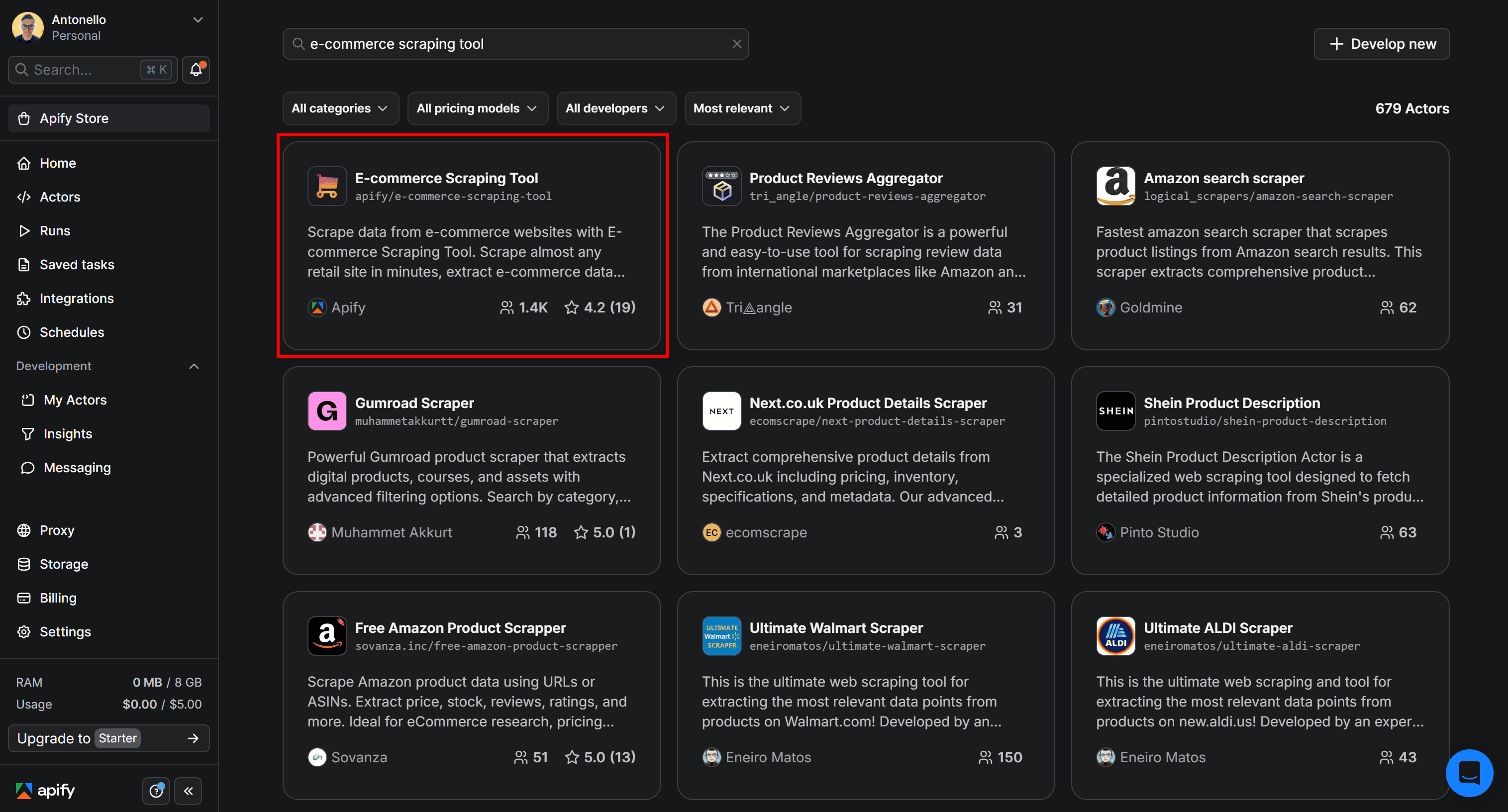

On Apify Store, search for “e-commerce scraping tool,” and select it from the list:

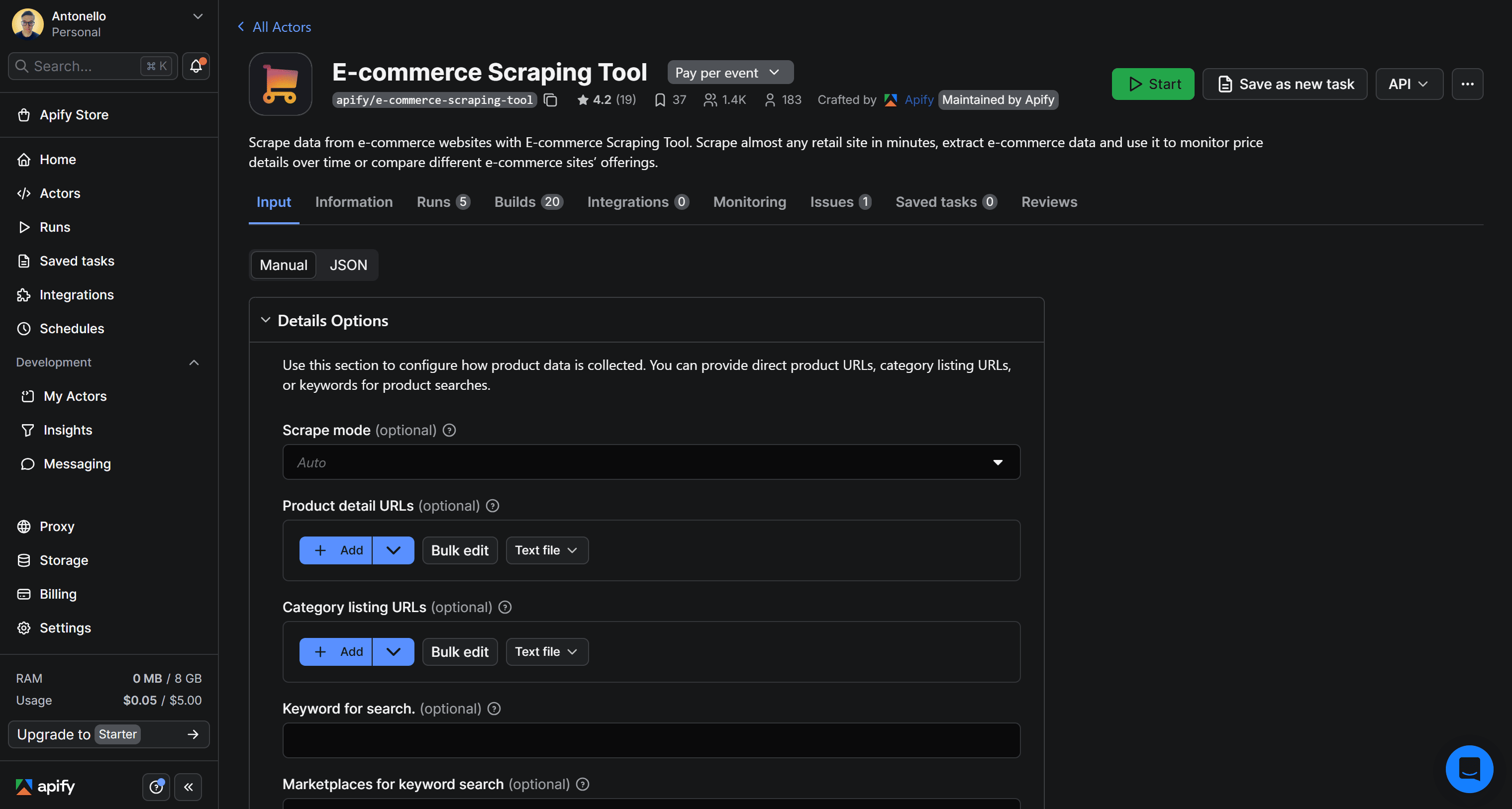

You’ll reach the E-commerce Scraping Tool Actor page:

3. Set up the API integration

Now's the time to configure the scraper for API access.

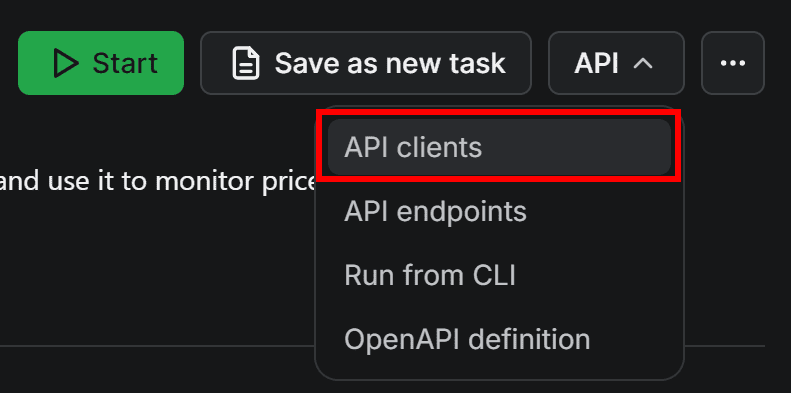

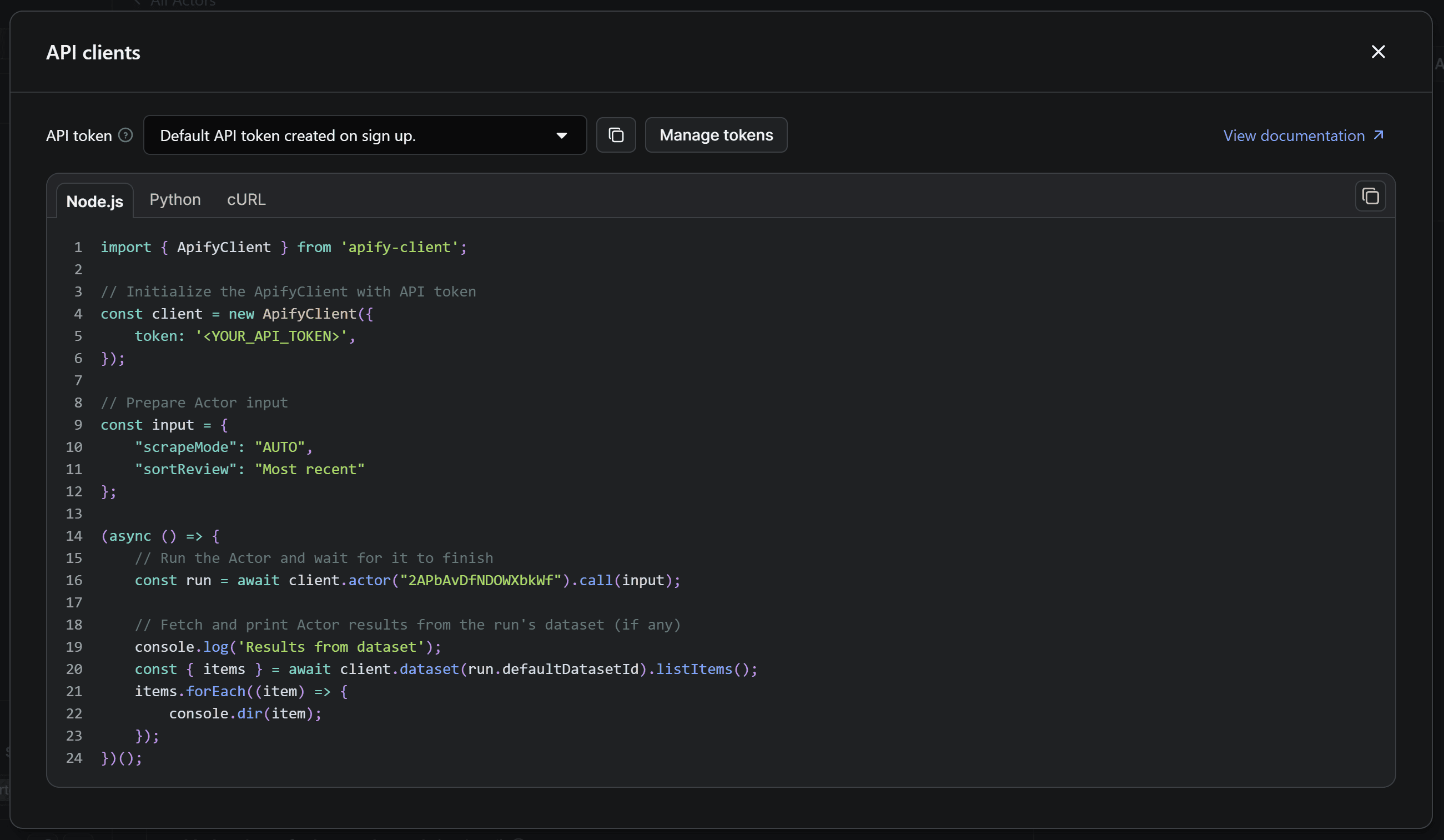

Open the API dropdown in the top-right corner and select the API clients option:

This will open the following modal:

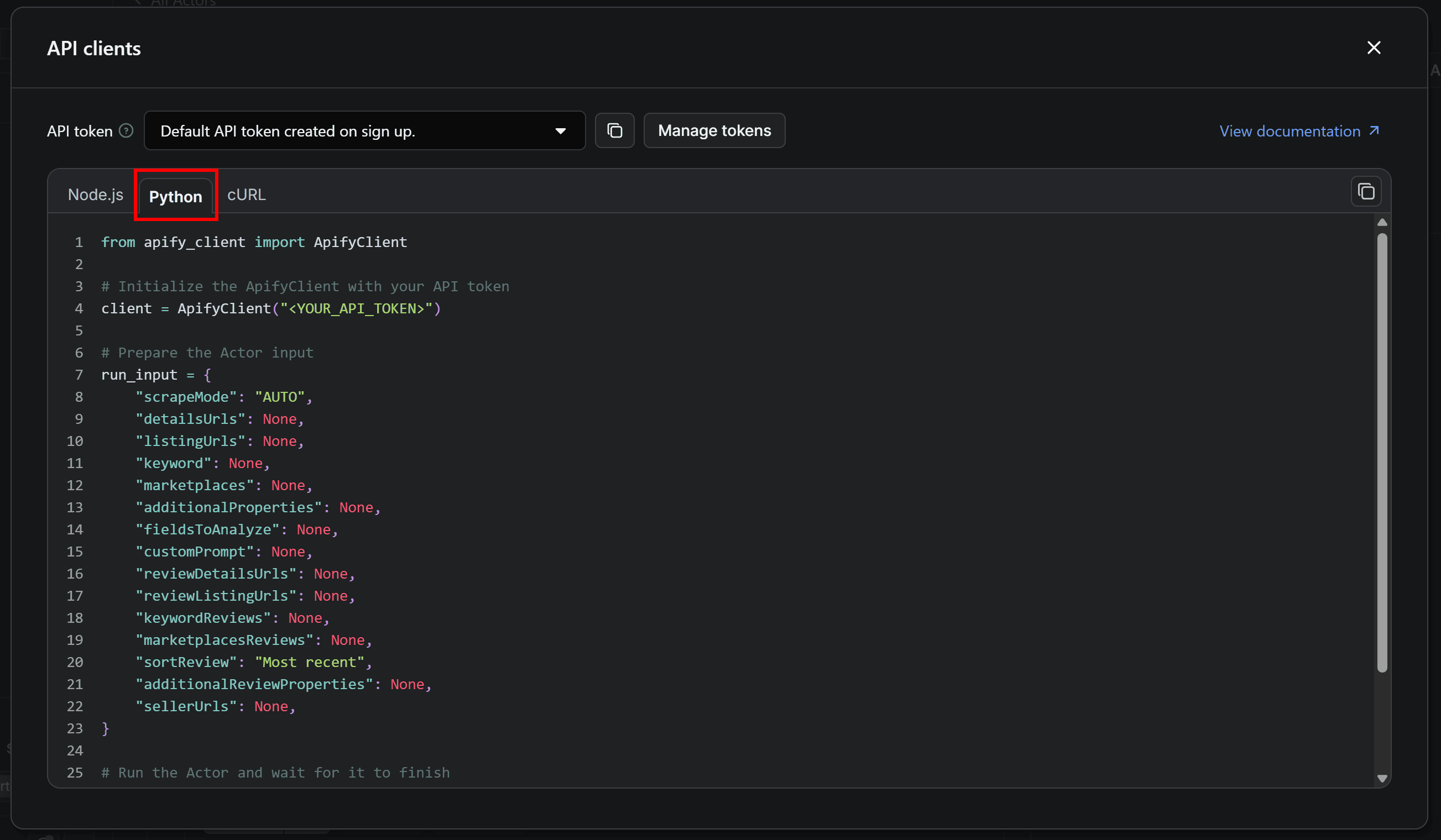

The modal contains ready-to-use code snippets for calling the selected Actor via the Apify API client. By default, it shows a Node.js snippet. Since you’ll be coding in Python, switch to the Python tab:

Copy the Python snippet shown in the modal and paste it into your scraper.py script. Keep the modal open, as we’ll get back to it shortly.

4. Prepare your Python script for Walmart e-commerce data scraping via API

The code snippet you copied from the API clients modal uses the apify_client Python library. To install it in your Python project, run the following in your activated virtual environment:

pip install apify_client

In the snippet, you’ll also notice a placeholder for the Apify API token (i.e., the "<YOUR_API_TOKEN>" string). That’s a secret required to authenticate API calls made by the Apify client.

To avoid hardcoding that secret in your script, you can use the python-dotenv library, which allows you to load environment variables from a .env file.

In your activated virtual environment, install python-dotenv with:

pip install python-dotenv

Next, add a .env file to your project’s root folder, which should now contain:

walmart-e-commerce-scraper/

├── .venv/

├── .env # <-----

└── scraper.py

Now, define the APIFY_API_TOKEN environment variable in the .env file:

APIFY_API_TOKEN="YOUR_APIFY_API_TOKEN"

"YOUR_APIFY_API_TOKEN" is a placeholder for your actual Apify API token, which you’ll retrieve and set in the next step.

Update scraper.py to load environment variables and read the API token from the environment variable you just defined:

from dotenv import load_dotenv

import os

# Load environment variables from .env file

load_dotenv()

# Read the required environment variable

APIFY_API_TOKEN = os.getenv("APIFY_API_TOKEN")

The load_dotenv() function from python-dotenv loads the variables defined in the .env file into the process environment, and os.getenv() lets you read them in your code.

Lastly, pass the API token to the ApifyClient constructor:

client = ApifyClient(APIFY_API_TOKEN)

# ...

Your scraper.py script is now set up for secure authentication with the E-commerce Scraping Tool API.
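If the .env file is missing or the variable name is misspelled, os.getenv() returns None, and the problem only surfaces later as an authentication failure. A minimal sketch of a fail-fast check follows. The helper name require_env is hypothetical (it is not part of python-dotenv or the Apify client), and the demo uses a throwaway variable rather than a real token:

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or raise a clear error."""
    value = os.getenv(name)
    if not value:
        # Covers both an unset variable and an empty string
        raise RuntimeError(f"Missing environment variable {name}; check your .env file")
    return value


# Demo with a throwaway variable so that no real token is needed
os.environ["DEMO_TOKEN"] = "abc123"
print(require_env("DEMO_TOKEN"))  # abc123
```

In scraper.py, you could call require_env("APIFY_API_TOKEN") right after load_dotenv() instead of using os.getenv() directly.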

5. Retrieve and configure your Apify API token

To complete the Actor's integration for API-based Walmart data scraping, you need to obtain your Apify API token and add it to your .env file.

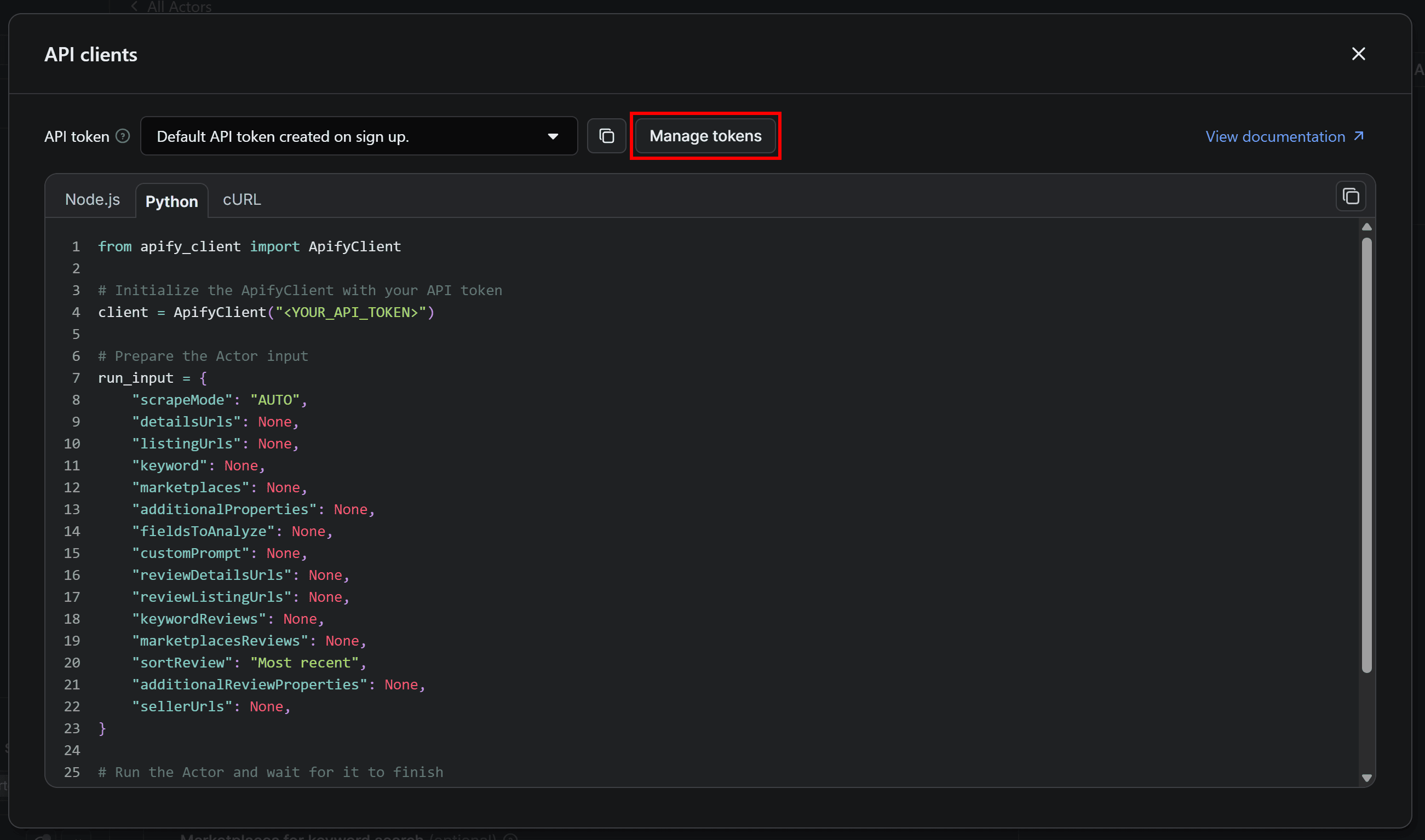

Go back to the E-commerce Scraping Tool Actor’s page in your browser. In the API clients modal, click the Manage tokens button:

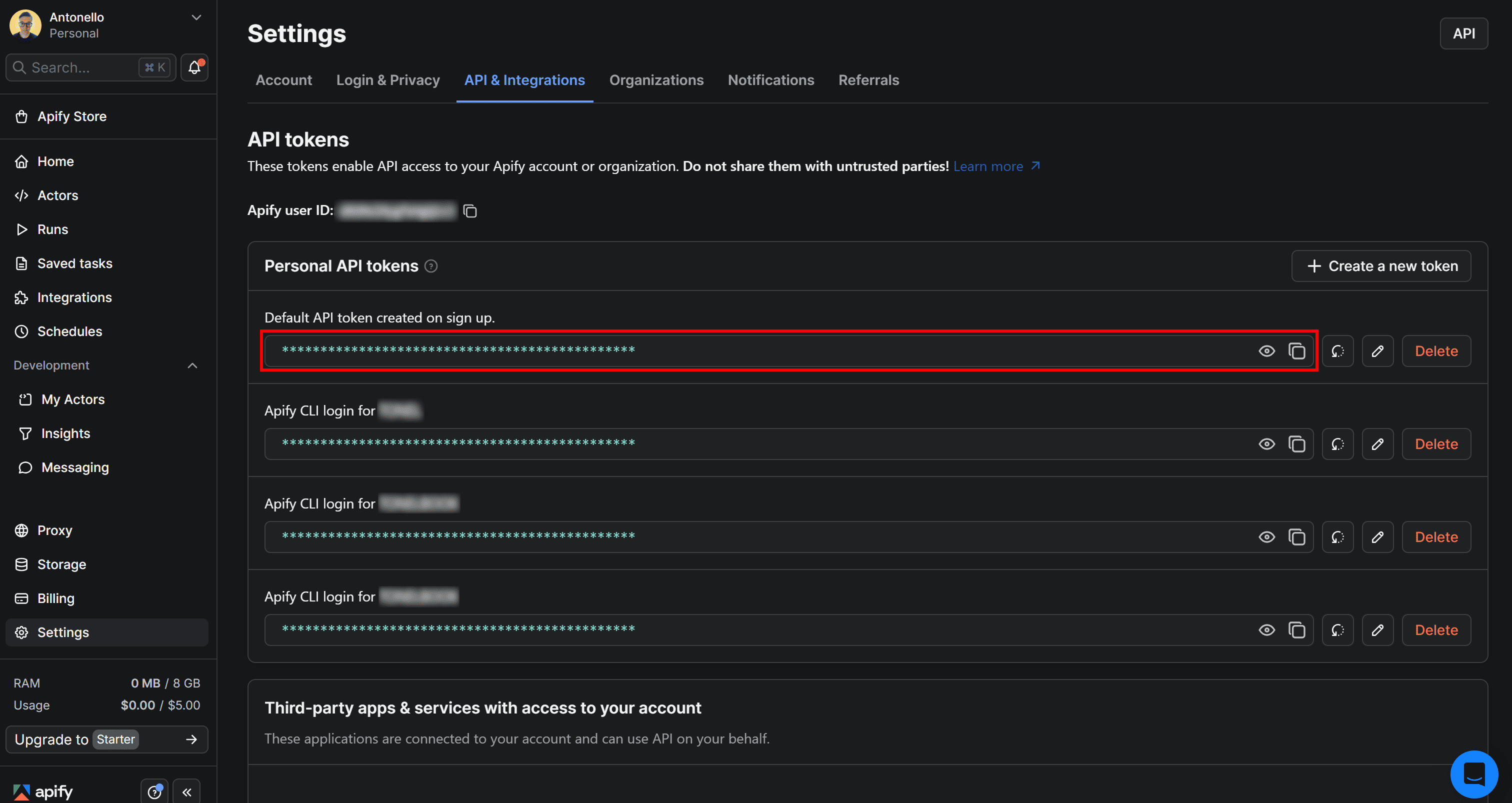

You’ll be taken to the API & Integrations section of the Settings page. Here, copy your Apify API token from the "Default API token created on sign up" entry:

Now, paste the token into the value of the APIFY_API_TOKEN env in your .env file:

APIFY_API_TOKEN=PASTE_YOUR_TOKEN_HERE

Make sure to replace PASTE_YOUR_TOKEN_HERE with the actual token you just copied.

6. Configure E-commerce Scraping Tool

Like any other Apify Actor, E-commerce Scraping Tool needs the correct input parameters to retrieve the data you’re interested in. When you call the Actor through ApifyClient, these inputs specify which pages it should fetch data from.

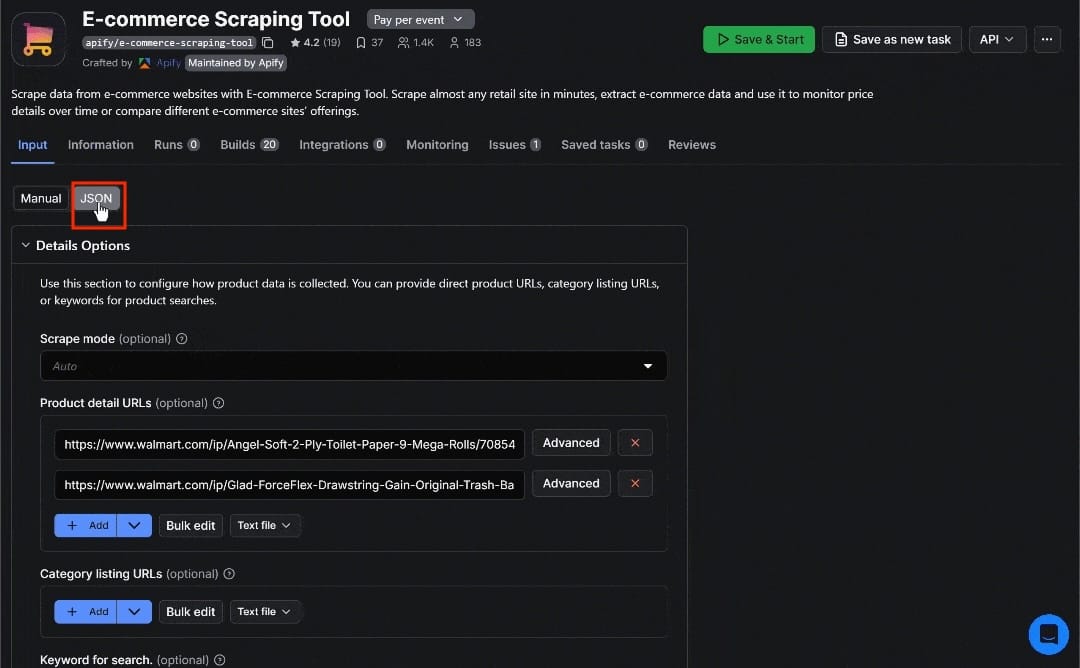

In this example, the target website is Walmart. Assume you want to scrape data from the following two product pages:

https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578

https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681

A simple way to understand the input configuration is to experiment with the Input section on the Actor’s page. Paste the two product URLs into the Product detail URLs field and then switch to the JSON view:

As you can see, the two URLs are added to the detailsUrls array with the following structure:

{

// ...

"detailsUrls": [

{

"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578"

},

{

"url": "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681"

}

]

}

Now, convert that structure into Python syntax and call E-commerce Scraping Tool using the following Python code:

run_input = {

"scrapeMode": "AUTO",

"detailsUrls": [

{

"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578"

},

{

"url": "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681"

}

]

}

run = client.actor("2APbAvDfNDOWXbkWf").call(run_input=run_input)

With this configuration, the Actor will be properly set up to scrape data from the two specified Walmart product pages.
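When the list of product pages grows, writing each {"url": ...} object by hand becomes tedious. The following is a minimal sketch of a helper that builds the same input from plain URL strings. The function name build_run_input is an illustrative assumption, not part of the Apify client:

```python
def build_run_input(urls, scrape_mode="AUTO"):
    """Build the Actor input dict from plain product URL strings."""
    return {
        "scrapeMode": scrape_mode,
        # Each URL is wrapped in an object, matching the JSON view of the Input section
        "detailsUrls": [{"url": url} for url in urls],
    }


run_input = build_run_input([
    "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578",
    "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681",
])
print(len(run_input["detailsUrls"]))  # 2
```

The resulting dictionary has the same shape as the handwritten run_input above, so it can be passed to the Actor call unchanged.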

7. Complete code

Here’s the final code for your API-based Walmart data scraper powered by Apify’s E-commerce Scraping Tool:

from dotenv import load_dotenv

import os

from apify_client import ApifyClient

# Load environment variables from .env file

load_dotenv()

# Read the required environment variable

APIFY_API_TOKEN = os.getenv("APIFY_API_TOKEN")

# Initialize the ApifyClient with your Apify API token

client = ApifyClient(APIFY_API_TOKEN)

# Prepare E-commerce Scraping Tool input for Walmart scraping

run_input = {

"scrapeMode": "AUTO",

"detailsUrls": [

{

"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578"

},

{

"url": "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681"

}

]

}

# Run E-commerce Scraping Tool and wait for the scraping task to finish

run = client.actor("2APbAvDfNDOWXbkWf").call(run_input=run_input)

# Fetch and print the Walmart product data results from the run’s dataset (if any)

for item in client.dataset(run["defaultDatasetId"]).iterate_items():

    print(item)

With just about 30 lines of code, you’ve built a fully functional Walmart scraper. Now's the time to test it.
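The script above only prints each item to the terminal. If you would rather keep the results on disk, one option is to write them as JSON Lines (one JSON object per line). This is a sketch under stated assumptions: the save_items helper and the file names are hypothetical, and the demo uses a made-up sample item instead of a real dataset:

```python
import json
import tempfile
from pathlib import Path


def save_items(items, path):
    """Write each scraped item as one JSON object per line; return the item count."""
    count = 0
    with Path(path).open("w", encoding="utf-8") as f:
        for item in items:
            # ensure_ascii=False keeps characters such as the registered sign readable
            f.write(json.dumps(item, ensure_ascii=False) + "\n")
            count += 1
    return count


# In scraper.py, the iterable would be the dataset iterator from the complete code:
# save_items(client.dataset(run["defaultDatasetId"]).iterate_items(), "walmart_products.jsonl")
sample_items = [{"name": "Example product", "offers": {"price": "$1.00"}}]
demo_path = Path(tempfile.gettempdir()) / "sample_products.jsonl"
print(save_items(sample_items, demo_path))  # 1
```

Because the helper accepts any iterable of dictionaries, it streams items to the file without first loading the whole dataset into memory.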

8. Test the scraper

In the terminal, run your Walmart e-commerce scraper with:

python scraper.py

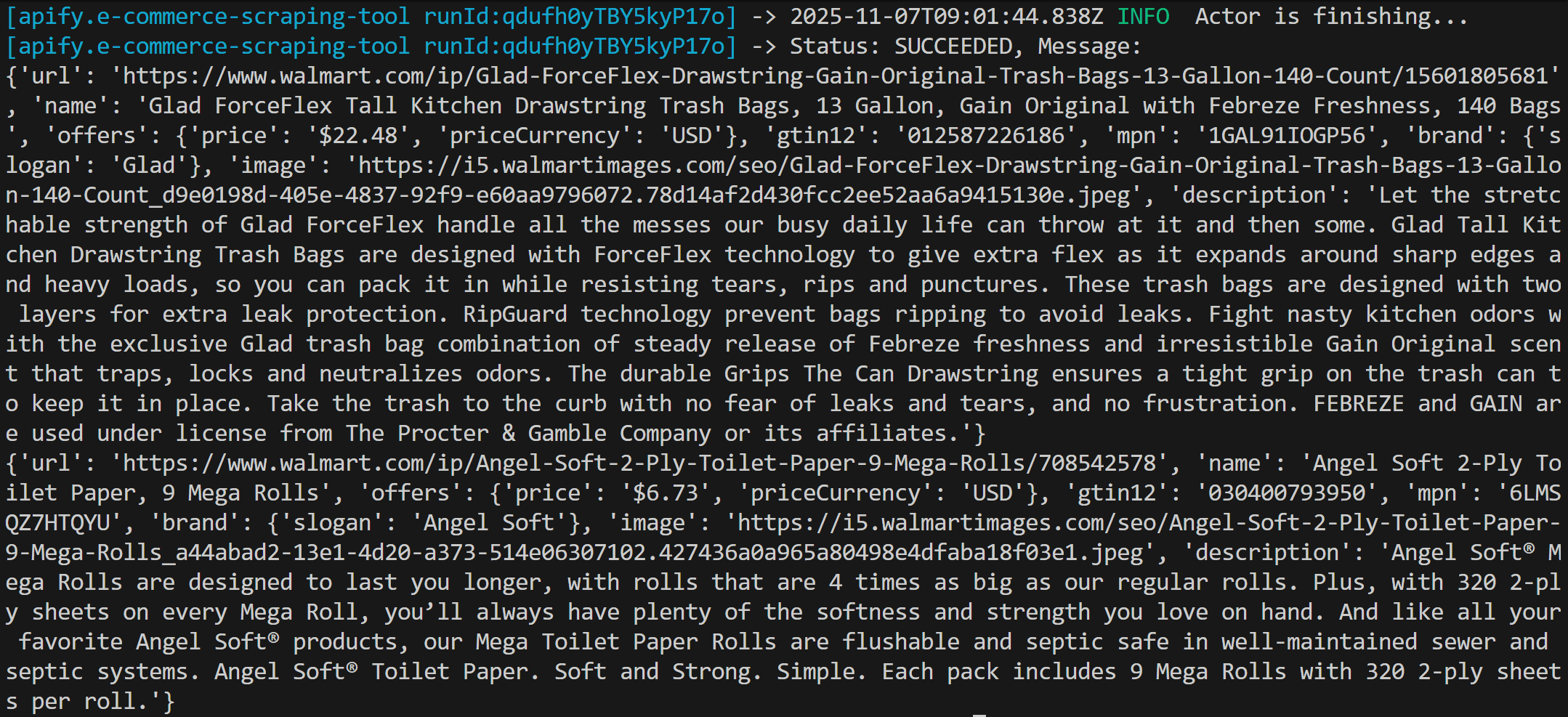

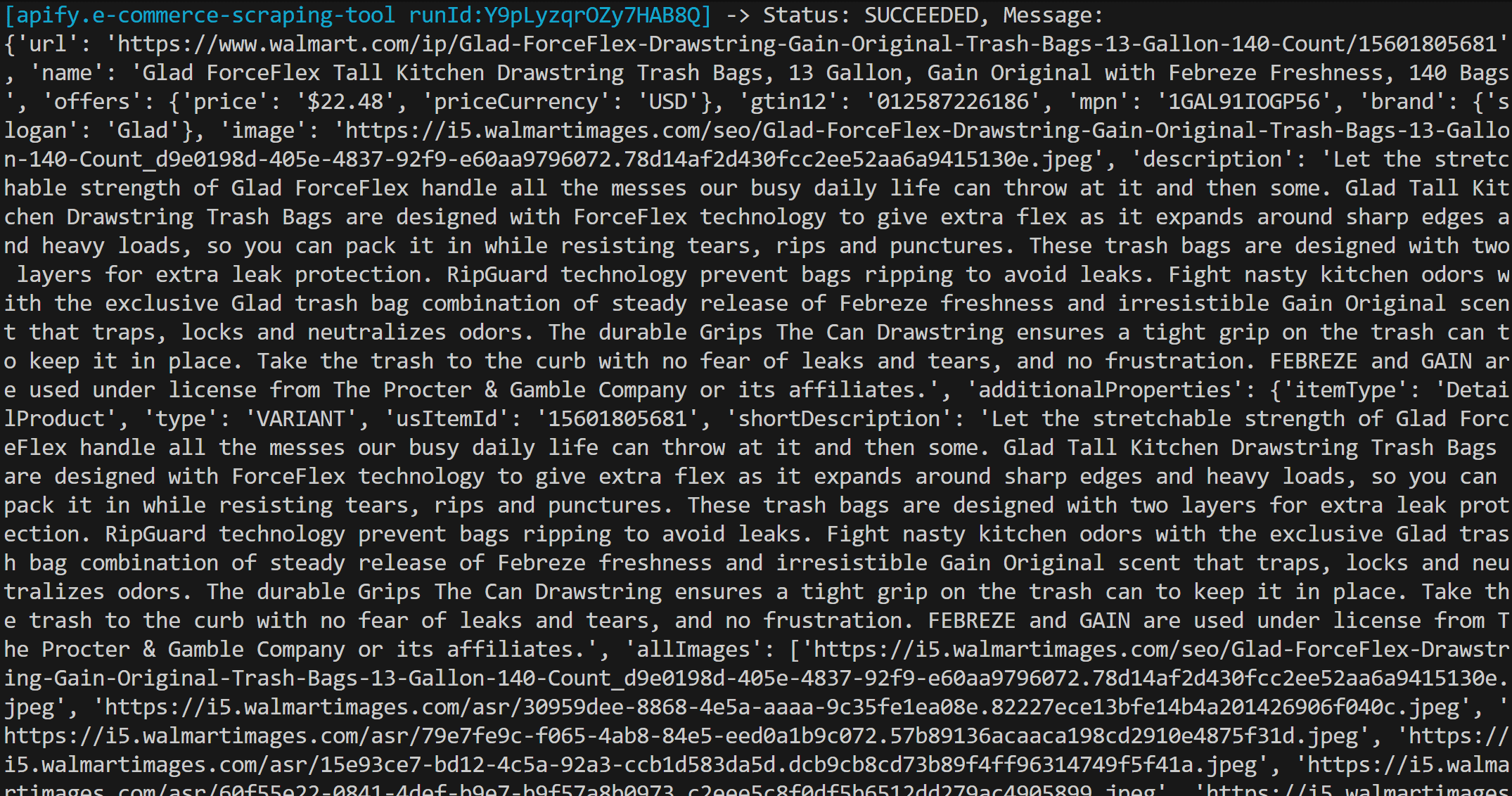

You’ll see the script connect to the configured Actor and produce output similar to this:

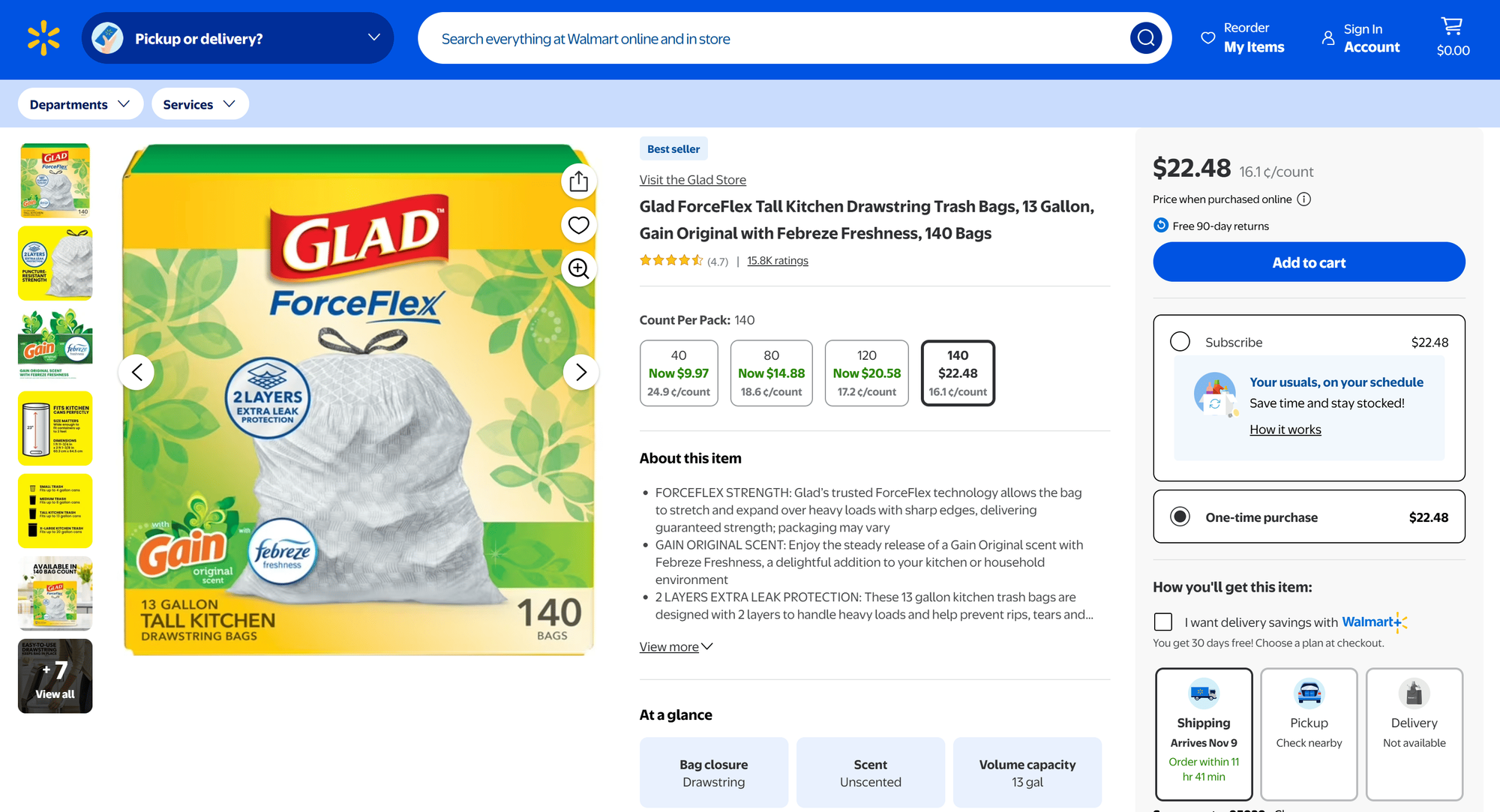

Focus on the two returned objects and note that each one contains the scraped product data. Below is the first result, which corresponds to the Glad ForceFlex product:

{

"url": "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681",

"name": "Glad ForceFlex Tall Kitchen Drawstring Trash Bags, 13 Gallon, Gain Original with Febreze Freshness, 140 Bags",

"offers": {

"price": "$22.48",

"priceCurrency": "USD"

},

"gtin12": "012587226186",

"mpn": "1GAL91IOGP56",

"brand": {

"slogan": "Glad"

},

"image": "https://i5.walmartimages.com/seo/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count_d9e0198d-405e-4837-92f9-e60aa9796072.78d14af2d430fcc2ee52aa6a9415130e.jpeg",

"description": "Let the stretchable strength of Glad ForceFlex handle all the messes our busy daily life can throw at it and then some. Glad Tall Kitchen Drawstring Trash Bags are designed with ForceFlex technology to give extra flex as it expands around sharp edges and heavy loads, so you can pack it in while resisting tears, rips and punctures. These trash bags are designed with two layers for extra leak protection. RipGuard technology prevent bags ripping to avoid leaks. Fight nasty kitchen odors with the exclusive Glad trash bag combination of steady release of Febreze freshness and irresistible Gain Original scent that traps, locks and neutralizes odors. The durable Grips The Can Drawstring ensures a tight grip on the trash can to keep it in place. Take the trash to the curb with no fear of leaks and tears, and no frustration. FEBREZE and GAIN are used under license from The Procter & Gamble Company or its affiliates."

}

As you can tell, the above data accurately reflects the information on the target Walmart product page:

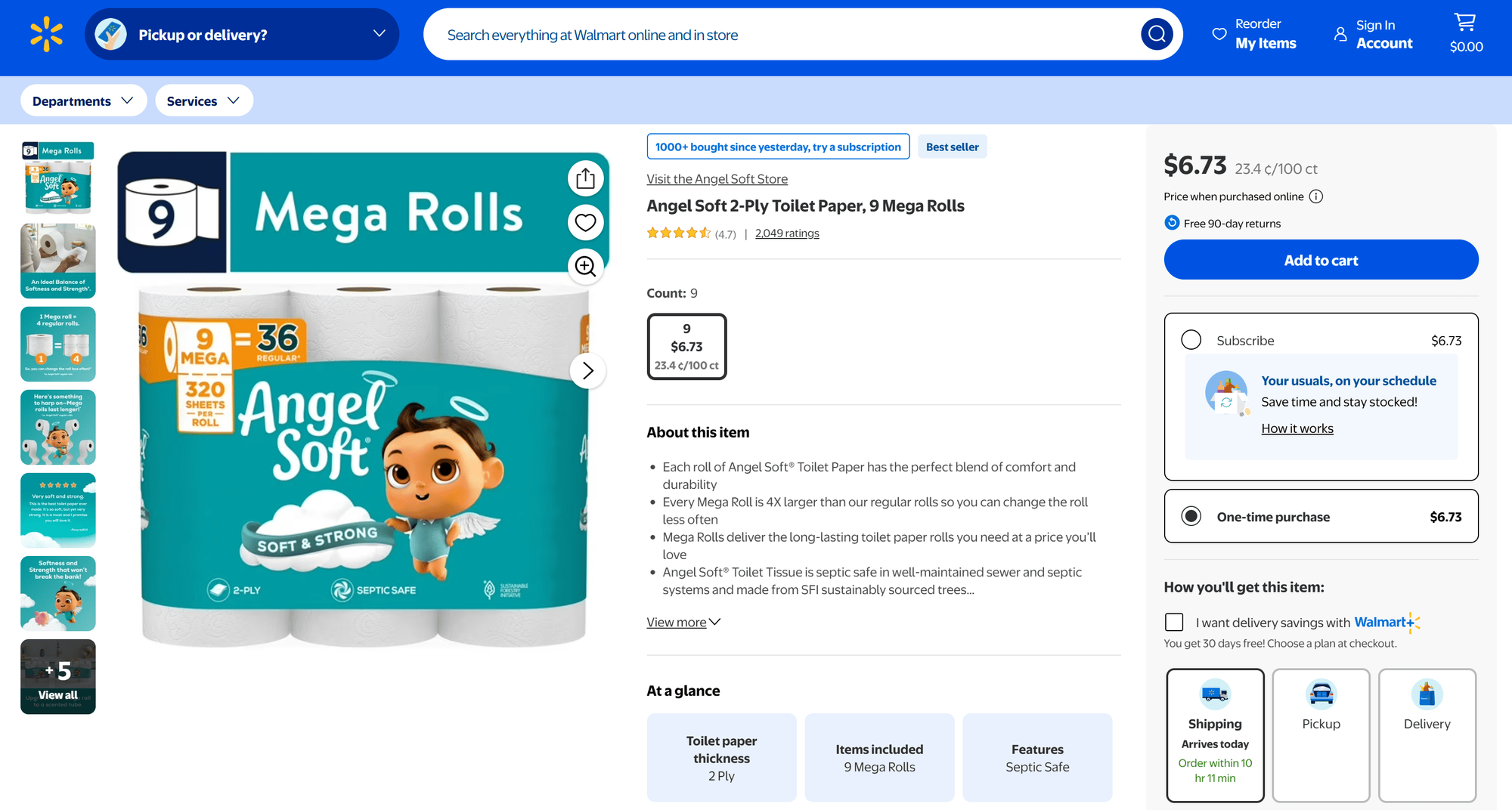

Then, inspect the second result, which corresponds to the Angel Soft product:

{

"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578",

"name": "Angel Soft 2-Ply Toilet Paper, 9 Mega Rolls",

"offers": {

"price": "$6.73",

"priceCurrency": "USD"

},

"gtin12": "030400793950",

"mpn": "6LMSQZ7HTQYU",

"brand": {

"slogan": "Angel Soft"

},

"image": "https://i5.walmartimages.com/seo/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls_a44abad2-13e1-4d20-a373-514e06307102.427436a0a965a80498e4dfaba18f03e1.jpeg",

"description": "Angel Soft® Mega Rolls are designed to last you longer, with rolls that are 4 times as big as our regular rolls. Plus, with 320 2-ply sheets on every Mega Roll, you’ll always have plenty of the softness and strength you love on hand. And like all your favorite Angel Soft® products, our Mega Toilet Paper Rolls are flushable and septic safe in well-maintained sewer and septic systems. Angel Soft® Toilet Paper. Soft and Strong. Simple. Each pack includes 9 Mega Rolls with 320 2-ply sheets per roll."

}

And again, it perfectly matches what you see on the product page:

In both cases, the original pages contain more data than what’s returned by default. This depends on how you configure E-commerce Scraping Tool, which offers several parameters for deep scraping, including reviews, sellers, AI overviews, and additional product properties.

Your Walmart scraper works correctly.
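Note that the price in each result is a string such as "$22.48", so it needs converting before you can compare or sum values. Here is a minimal sketch, assuming the price is always a dollar-prefixed string as in the two results above (other formats are not handled). The parse_price helper is hypothetical:

```python
from decimal import Decimal


def parse_price(offers):
    """Convert an offers object like {"price": "$22.48", ...} to (Decimal, currency)."""
    raw = offers["price"]
    # Strip the currency symbol and any thousands separators before converting
    amount = Decimal(raw.replace("$", "").replace(",", "").strip())
    return amount, offers.get("priceCurrency")


glad = {"price": "$22.48", "priceCurrency": "USD"}
angel_soft = {"price": "$6.73", "priceCurrency": "USD"}
total = parse_price(glad)[0] + parse_price(angel_soft)[0]
print(total)  # 29.21
```

Decimal is used instead of float so that monetary values are added without binary rounding errors.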

Next step: Collect different Walmart data

Now that your Walmart scraper is up and running, try adjusting the input parameters to extract more or different data. For example, start by setting the "additionalProperties" field to True:

run_input = {

"scrapeMode": "AUTO",

"additionalProperties": True, # <----------

"detailsUrls": [

{

"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578"

},

{

"url": "https://www.walmart.com/ip/Glad-ForceFlex-Drawstring-Gain-Original-Trash-Bags-13-Gallon-140-Count/15601805681"

}

]

}

This enables the scraper to return richer product data:

Also, explore other supported input parameters of E-commerce Scraping Tool to expand your data extraction even further:

| Parameter Name | Key | Type | Description | Value Options / Default |
|---|---|---|---|---|
| Scrape mode | scrapeMode | Enum | Defines how data should be scraped: via browser rendering, lightweight HTTP requests, or automatically chosen mode. | "AUTO" (default), "BROWSER", "HTTP" |
| Product detail URLs | detailsUrls | Array | Direct URLs to individual product detail pages. | - |
| Category listing URLs | listingUrls | Array | URLs to category or listing pages containing multiple products. | - |
| Keyword for search | keyword | String | Keyword used to search for products across marketplaces. | - |
| Marketplaces for keyword search | marketplaces | Array | Marketplaces where the keyword search should be performed. | - |
| Include additional properties | additionalProperties | Boolean | Includes extra product-related properties in the response. | - |
| AI summary – data points | fieldsToAnalyze | Array | Data points to pass to AI for generating a summary. | - |
| AI summary – custom prompt | customPrompt | String | Custom AI prompt for processing data points; default prompt used if not provided. | - |
| Review detail URLs | reviewDetailsUrls | Array | Direct URLs to product detail pages for extracting reviews. | - |
| Review listing URLs | reviewListingUrls | Array | URLs containing multiple product reviews to be scraped. | - |
| Keyword for reviews | keywordReviews | String | Keyword used to search for product reviews across marketplaces. | - |
| Marketplaces for review search | marketplacesReviews | Array | Marketplaces where review keyword searches are performed. | - |
| Review sort type | sortReview | Enum | Defines how product reviews should be sorted; falls back to default if unsupported. | "Most recent" (default), "Most relevant", "Most helpful" |
| Include additional review properties | additionalReviewProperties | Boolean | Includes extra review-related properties in the response. | - |
| Seller profile URLs | sellerUrls | Array | Direct URLs to seller or store profile pages for extracting seller info. | - |

These options enable the following features:

- All-in-one e-commerce scraper: Extracts structured product and pricing data from major online retailers, marketplaces, and catalogs - even across multiple sites in a single run.

- Broad platform support: Compatible with leading global and regional platforms such as Amazon, Walmart, eBay, Alibaba, Target, Flipkart, MercadoLibre, Lidl, Alza, Decathlon, Rakuten, and many more.

- Flexible input methods: Scrape data from individual product URLs, category or listing pages, or perform keyword-based searches.

- Multiple export formats: Output the scraped data as JSON, CSV, Excel, or HTML for easy integration into your workflows.

- AI summary: Process extracted data using prompts to automatically generate insights and summaries.

- Highly customizable: Configure the scraper to match your needs by choosing the scrape mode (HTTP, Browser, or Auto), including additional properties, and extracting reviews or seller information, etc.
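Since scrapeMode only accepts the three values listed in the parameter table, it can help to validate options locally before starting a run. The following is a sketch only: the with_options helper is hypothetical, and it covers just the scrapeMode and additionalProperties keys from the table:

```python
ALLOWED_SCRAPE_MODES = {"AUTO", "BROWSER", "HTTP"}  # values listed in the parameter table


def with_options(run_input, scrape_mode="AUTO", additional_properties=False):
    """Return a copy of run_input with a validated scrape mode and extra-properties flag."""
    if scrape_mode not in ALLOWED_SCRAPE_MODES:
        raise ValueError(f"Unsupported scrapeMode: {scrape_mode!r}")
    # Build a new dict so the caller's original input is left untouched
    return {
        **run_input,
        "scrapeMode": scrape_mode,
        "additionalProperties": additional_properties,
    }


base_input = {
    "detailsUrls": [
        {"url": "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578"}
    ]
}
rich_input = with_options(base_input, scrape_mode="BROWSER", additional_properties=True)
print(rich_input["scrapeMode"], rich_input["additionalProperties"])  # BROWSER True
```

A typo such as "browser" then fails immediately with a ValueError instead of after a request to the platform.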

Conclusion

While this project focused on Walmart product scraping, E-commerce Scraping Tool supports many other scenarios, such as extracting product data from one platform and reviews from the equivalent product on another.

Explore what this Actor offers and use the Apify SDKs to further expand your e-commerce data automation toolkit.

FAQ

Why use an e-commerce scraping tool via API for Walmart scraping?

Using E-commerce Scraping Tool via API is ideal when you already have URLs generated from another system, or even from previous runs of the tool on category pages. You can feed the URLs directly into your cloud-based scraper and have it retrieve detailed product data.
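As a rough illustration of that workflow, the sketch below turns raw lines (for example, exported from another system) into a detailsUrls list. It assumes Walmart product pages live under www.walmart.com/ip/, as the two URLs in this guide do, and silently skips everything else. The function name to_details_urls is hypothetical:

```python
from urllib.parse import urlparse


def to_details_urls(lines):
    """Turn raw text lines into a de-duplicated detailsUrls list of Walmart product pages."""
    seen = set()
    details = []
    for line in lines:
        url = line.strip()
        parsed = urlparse(url)
        # Assumption: product pages use the www.walmart.com host and an /ip/ path prefix
        if parsed.netloc != "www.walmart.com" or not parsed.path.startswith("/ip/"):
            continue
        if url not in seen:
            seen.add(url)
            details.append({"url": url})
    return details


raw_lines = [
    "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578\n",
    "https://www.walmart.com/ip/Angel-Soft-2-Ply-Toilet-Paper-9-Mega-Rolls/708542578\n",
    "https://www.walmart.com/\n",
    "\n",
]
print(len(to_details_urls(raw_lines)))  # 1
```

The returned list can be assigned directly to the "detailsUrls" key of run_input.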

What is the difference between E-commerce Scraping Tool and other Walmart Actors?

The main difference between E-commerce Scraping Tool and other Walmart Actors available on Apify Store is that the former supports multiple e-commerce sites through a single interface, enabling data comparison and enrichment across platforms. In contrast, the Walmart-specific Actors focus exclusively on Walmart pages.

Is using E-commerce Scraping Tool to scrape Walmart data legal?

Yes, as the input URLs supported by E-commerce Scraping Tool, whether for Walmart or any other e-commerce site, are public pages. Check out "Is web scraping legal?" for more details.