If you want to do data analysis, research online shopping prices, track the prices of some items, or just study the market, you need to gather price data. However, downloading a public dataset is a bad solution, as most of these datasets are not updated frequently, and product prices change every day.

The best way to collect price information is by scraping data directly from websites, as this helps you build a complete up-to-date dataset quickly.

This step-by-step tutorial will help you scrape a website using Python. We'll extract pricing data from Ebay.com in this case, but the script is applicable to most e-commerce sites. We'll guide you from setup to deployment on Apify.

Guide for scraping prices from websites

To build this scraper, we’ll be using both selenium and beautifulsoup library along with ChromeDriver (no local download needed).

Note that while this guide is applicable to scraping prices from any website, we’ll be writing the code for scraping products from eBay.

- Set up your environment

- Understand the website structure

- Write the scraper code

- Deploy on Apify (optional but recommended)

1. Setting up your environment

Here's what you’ll need to continue with this tutorial:

- Python 3.5+: Make sure you have installed Python 3.5 or higher.

- Create a virtual environment (optional but recommended): It’s always a good practice to create a virtual environment (

venv) to isolate dependencies and avoid conflicts between packages.

python -m venv myenv

source myenv/bin/activate # On macOS/Linux

myenv\\Scripts\\activate # On Windows

- Required libraries: Install them by running the command below in your terminal —

selenium,beautifulsoup4, andwebdriver-manager.

pip install selenium beautifulsoup4 webdriver-manager

To import the libraries, use the following code:

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from webdriver_manager.chrome import ChromeDriverManager

from bs4 import BeautifulSoup

import time # built-in library

If you want to avoid local downloads and set-up, skip the process and deploy on Apify.

2. Understanding the web structure

The first rule of thumb for scraping any website is to analyze and understand the structure of the website so that you know where you can scrape data from.

If you take a close look at this page on eBay in this case, you can note these points:

- Each listing is inside a

<li>element. - Inside each listing is an

<h3>element with the class nametextual-display bsig__title__text. It’s the name of the product.

- This is followed by a nested

<div>with a<span>element of the classtextual-display bsig__price bsig__price--displayprice. This is the price of the product.

- All of these listings are in a

<ul>element with the classbrwrvr__item-results brwrvr__item-results--list.

Most other e-commerce sites follow the same structure, and the only things that change are the class names and tag types (i.e., <h3> and such). The key is to select and right-click on the elements you want to extract and select "Inspect".

Now that you have a clear understanding of the web structure, you can start writing the script to scrape the prices of these products.

3. Writing the script

First, write a function that initializes the web driver with a user header and fetches the intended URL, using beautifulsoup to parse the content.

def scrape_ebay_headphones():

# Initialize WebDriver with webdriver-manager

service = Service(ChromeDriverManager().install())

options = webdriver.ChromeOptions()

options.add_argument("--headless")

options.add_argument(

"user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36")

driver = webdriver.Chrome(service=service, options=options)

url = "https://www.ebay.com/b/Headphones/112529/bn_879608"

items = [] # empty list to store

try:

print("Fetching page...")

driver.get(url)

time.sleep(5) # waiting for page to load

# In real-world applications, consider using WebDriverWait for more efficient waiting

# parsing the page

soup = BeautifulSoup(driver.page_source, 'html.parser')

Thereafter, you can use the find_all() function to fetch the listing container and the listings as below:

listings_container = soup.find('ul', class_='brwrvr__item-results brwrvr__item-results--list')

if not listings_container:

print("Could not find listings container")

return items

# finding all product items

product_containers = listings_container.find_all('li', class_='brwrvr__item-card brwrvr__item-card--list')

items_found = 0

Once successfully extracted, you can write a loop to go through each of the product listings and extract the required fields: product title and price.

for container in product_containers:

try:

# Get product name

name_elem = container.find('h3', class_='textual-display bsig__title__text')

name = name_elem.text.strip() if name_elem else "Name not found"

# Get price

price_elem = container.find('span', class_='textual-display bsig__price bsig__price--displayprice')

price = price_elem.text.strip() if price_elem else "Price not found"

items.append({

'name': name,

'price': price

})

items_found += 1

print(f"Item {items_found}: {name} - {price}")

if items_found >= 10:

break

except AttributeError:

continue

return items

If there's an error, you can add a print statement to return the error and, finally, make the driver quit.

except Exception as e:

print(f"Error: {e}")

return []

finally:

driver.quit()

And that’s pretty much it. All that’s left to do is to run the function via a main() function as below:

def main():

print("Starting eBay headphone price scraper...")

scraped_items = scrape_ebay_headphones()

print("\nScraped Items Summary:")

for i, item in enumerate(scraped_items, 1):

print(f"{i}. {item['name']} - {item['price']}")

if __name__ == "__main__":

main()

Once run, this scraper would return an output with 10 scraped items like the following image.

Here's the complete script for scraping prices from a website using selenium and beautifulsoup.

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from webdriver_manager.chrome import ChromeDriverManager

from bs4 import BeautifulSoup

import time

def scrape_ebay_headphones():

# Initialize WebDriver with webdriver-manager

service = Service(ChromeDriverManager().install())

options = webdriver.ChromeOptions()

options.add_argument("--headless")

options.add_argument(

"user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36")

driver = webdriver.Chrome(service=service, options=options)

url = "https://www.ebay.com/b/Headphones/112529/bn_879608"

items = []

try:

print("Fetching page...")

driver.get(url)

time.sleep(5) # Wait for page to load

# Parse page source with BeautifulSoup

soup = BeautifulSoup(driver.page_source, 'html.parser')

# Find listings container

listings_container = soup.find('ul', class_='brwrvr__item-results brwrvr__item-results--list')

if not listings_container:

print("Could not find listings container")

return items

# Find all product items

product_containers = listings_container.find_all('li', class_='brwrvr__item-card brwrvr__item-card--list')

items_found = 0

for container in product_containers:

try:

# Get product name

name_elem = container.find('h3', class_='textual-display bsig__title__text')

name = name_elem.text.strip() if name_elem else "Name not found"

# Get price

price_elem = container.find('span', class_='textual-display bsig__price bsig__price--displayprice')

price = price_elem.text.strip() if price_elem else "Price not found"

items.append({

'name': name,

'price': price

})

items_found += 1

print(f"Item {items_found}: {name} - {price}")

if items_found >= 10:

break

except AttributeError:

continue

return items

except Exception as e:

print(f"Error: {e}")

return []

finally:

driver.quit()

def main():

print("Starting eBay headphone price scraper...")

scraped_items = scrape_ebay_headphones()

print("\nScraped Items Summary:")

for i, item in enumerate(scraped_items, 1):

print(f"{i}. {item['name']} - {item['price']}")

if __name__ == "__main__":

main()

4. Deploying to Apify

Although you have your own website price scraper now, it lacks 2 important features: automated scraping and convenient storage. To solve this, you can deploy the scraper on a platform like Apify.

Follow these steps to deploy this script on Apify:

#1. Create an account on Apify

- You can get a forever-free plan - no credit card is required.

#2. Create a new Actor

- First, install Apify CLI using NPM or Homebrew in your terminal.

- Create a new directory for your Actor:

mkdir website-prices-scraper

cd website-prices-scraper

- Then, initialize the Actor by typing

apify init.

#3. Create the main.py script

- Note that you would have to make some changes to the previous script to make it Apify-friendly. Such changes are importing Apify SDK and updating input/output handling.

- You can find the modified script on GitHub

#4. Create the Dockerfile and requirements.txt

- Dockerfile:

FROM apify/actor-python:3.8

# Installing dependencies

RUN apt-get update && apt-get install -y \

wget \

unzip \

gnupg \

libnss3 \

libgconf-2-4 \

libxss1 \

libappindicator1 \

fonts-liberation \

libasound2 \

libatk-bridge2.0-0 \

libatk1.0-0 \

libcups2 \

libgbm1 \

libgtk-3-0 \

libxkbcommon0 \

xdg-utils \

libu2f-udev \

libvulkan1 \

&& rm -rf /var/lib/apt/lists/*

# Installing ChromeDriver

RUN wget -O /tmp/chromedriver.zip https://chromedriver.storage.googleapis.com/114.0.5735.90/chromedriver_linux64.zip && \

unzip /tmp/chromedriver.zip -d /usr/local/bin/ && \

rm /tmp/chromedriver.zip

# Installing Chrome

RUN wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | apt-key add - && \

sh -c 'echo "deb [arch=amd64] http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google-chrome.list' && \

apt-get update && apt-get install -y google-chrome-stable && \

rm -rf /var/lib/apt/lists/*

# Copying all files to the working directory

COPY . ./

# Installing necessary packages

RUN pip install --no-cache-dir -r requirements.txt

# Setting the entry point to your script

CMD ["python", "main.py"]

- requirements.txt:

beautifulsoup4

selenium

webdriver-manager

asyncio

apify-client

#5. Deploy

- Type

apify loginto log in to your account. You can either use the API token or verify through your browser. - Once logged in, type

apify push, and you’re good to go.

Once deployed, head over to Apify Console > Your Actor > Click “Start” to run the scraper on Apify.

Once the run is successful, you can view the output of the scraper on the “Output” tab.

To view and download output, click “Export Output”.

You can select/omit sections and download the data in different formats, such as CSV, JSON, Excel, etc.

5. Building a price tracker

Since the scraper is deployed on Apify, you can schedule it to run hourly, daily, or monthly and then track any price changes by writing a script to retrieve saved data and the latest data.

Also note that you don’t need to write a script to save the data, as Apify saves the output for all the runs separately on the “Runs” tab.

To act on those pricing insights efficiently—whether it's updating listings, syncing inventory, or reconciling sales, you might want to explore the best ecommerce integration software solutions that help connect and automate ecommerce operations across channels.

Follow these steps to set up your tracker:

- #1. Schedule the Actor by clicking the three dots (•••) in the top-right corner of the Actor dashboard > Schedule Actor option. You can change the schedule however you’d like.

Once the scraper is run a few times (to capture any price changes), run this script:

from apify_client import ApifyClient

# Initializing the ApifyClient with your API token

client = ApifyClient('apify_api_jeZW0VGgVfWHQSfbxFrLB7dDenujz900JumS')

# Function to retrieve the latest dataset items

def get_latest_dataset_items(dataset_id):

dataset_client = client.dataset(dataset_id)

items = dataset_client.list_items().items

return {item['name']: item['price'] for item in items}

# Function to retrieve historical price data from the key-value store

def get_historical_data(kv_store_client, key='historical_prices'):

try:

record = kv_store_client.get_record(key)

if record is not None:

return record.get('value', {})

else:

return {}

except Exception as e:

print(f"Error retrieving historical data: {e}")

return {}

# Function to save historical price data to the key-value store

def save_historical_data(kv_store_client, data, key='historical_prices'):

try:

kv_store_client.set_record(key, data)

except Exception as e:

print(f"Error saving historical data: {e}")

# Function to detect price changes

def detect_price_changes(current_data, historical_data):

price_changes = {}

for name, current_price in current_data.items():

historical_price = historical_data.get(name)

if historical_price is not None and historical_price != current_price:

price_changes[name] = {

'old_price': historical_price,

'new_price': current_price,

}

return price_changes

# Function to retrieve the latest dataset ID from the most recent actor run

def get_latest_dataset_id(actor_id):

actor_runs = client.actor(actor_id).runs().list().items

if actor_runs:

return actor_runs[-1]['defaultDatasetId']

else:

raise ValueError("No recent runs found for the actor.")

# Function to get or create a persistent Key-Value Store

# Function to get or create a persistent Key-Value Store

def get_or_create_kv_store(kv_store_name):

try:

# Use the get_or_create method to retrieve or create the key-value store

store = client.key_value_stores().get_or_create(name=kv_store_name)

return client.key_value_store(store['id'])

except Exception as e:

raise RuntimeError(f"Error accessing or creating KVS: {e}")

def main():

# Specifying the actor ID

actor_id = 'buzzpy/website-prices-scraper' # Replace with your actor's ID

try:

# Retrieving the latest dataset ID from the most recent actor run

dataset_id = get_latest_dataset_id(actor_id)

# Get or create a persistent key-value store

kv_store_name = 'latest-ebay-run' # Ensure this is the name you want

kv_store_client = get_or_create_kv_store(kv_store_name)

# Retrieving current price data

current_data = get_latest_dataset_items(dataset_id)

# Retrieving historical price data

historical_data = get_historical_data(kv_store_client)

# Detecting price changes

price_changes = detect_price_changes(current_data, historical_data)

# Output detected price changes

if price_changes:

print('Price changes detected:')

for name, prices in price_changes.items():

print(f"Product {name}: {prices['old_price']} -> {prices['new_price']}")

else:

print('No price changes detected.')

# Updating historical data with current prices

historical_data.update(current_data)

# Saving updated historical data back to the key-value store

save_historical_data(kv_store_client, historical_data)

except Exception as e:

print(f"Error: {e}")

if __name__ == '__main__':

main()

This code retrieves historical price data from the Apify Key-Value Store (KVS), compares it with the current data, and detects price changes.

If any changes are detected, the output would be similar to:

Price changes detected:

Product : ->

If any errors persist, double-check your dataset ID and key-value store.

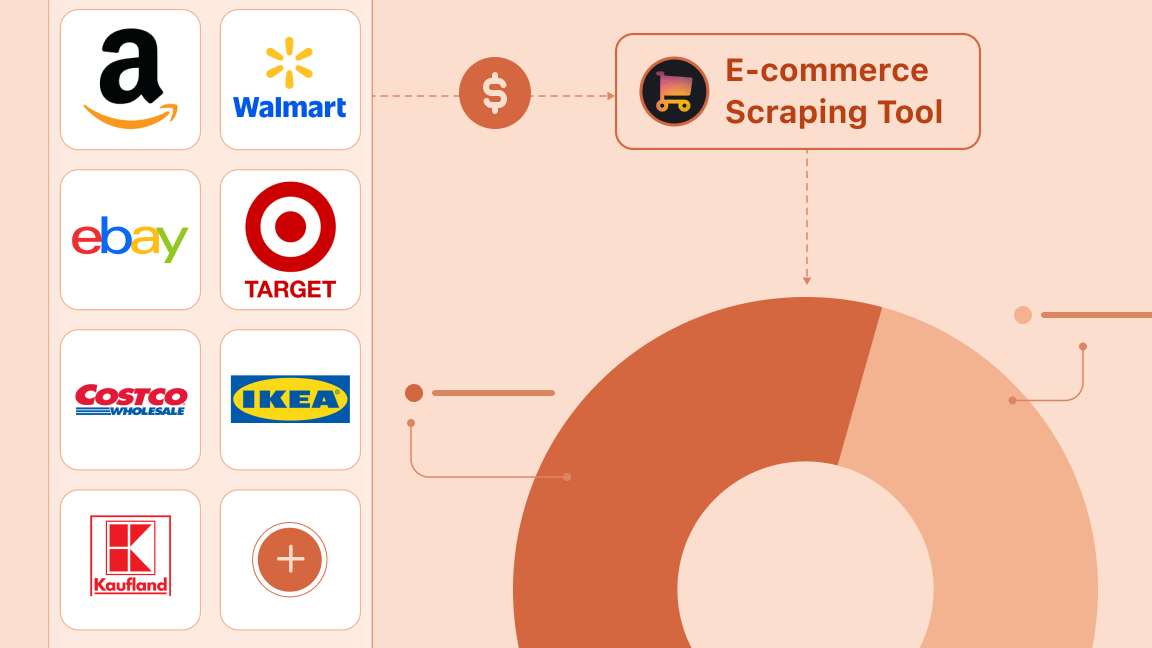

Scraping prices with Apify

If you don’t want to go through the hassle of setting up your environments and writing scripts, you can use ready-made scrapers on Apify. They're efficient no-code tools, and they're free to try.

Some of the popular scrapers are the Walmart product detail scraper, Amazon product scraper, and Google shopping insights scraper.

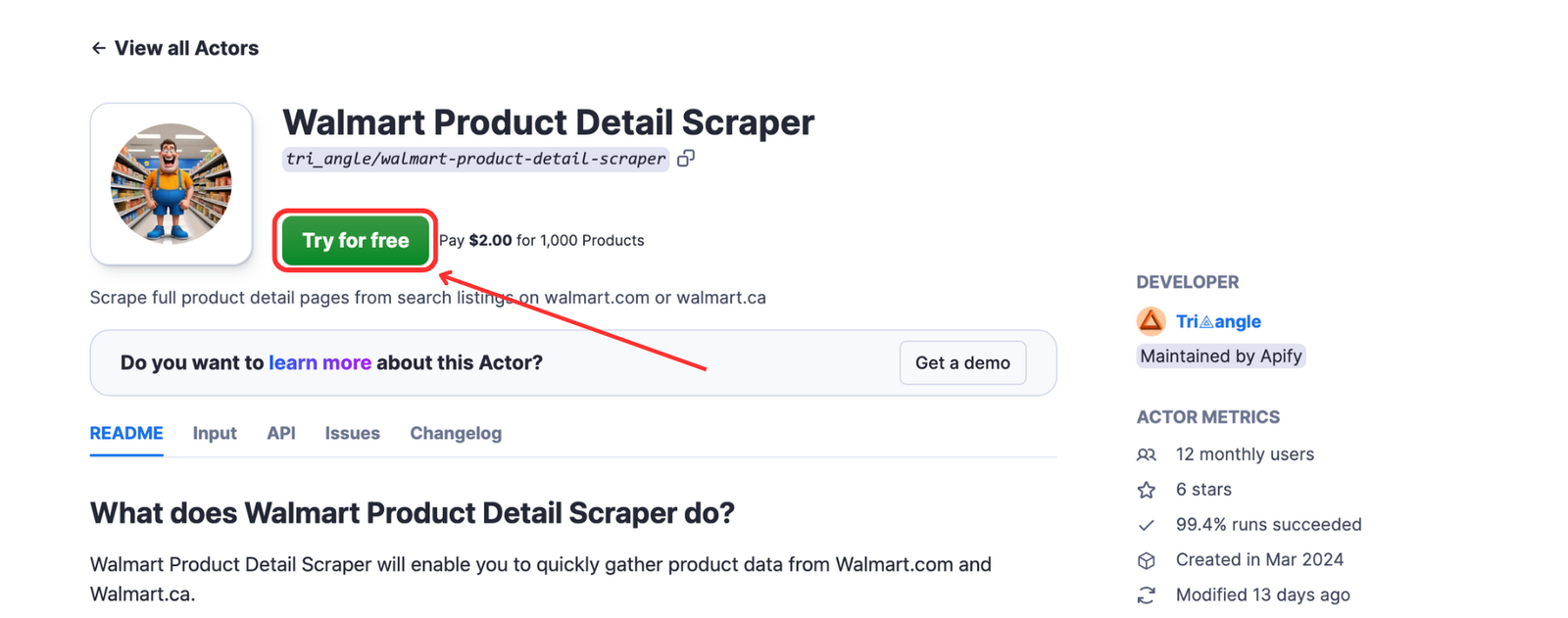

For example, the following is how you can use Apify’s Walmart product details scraper to scrape information without code:

- #1. Go to the Walmart Product Detail Scraper Actor page.

- #2. Click the “Try for Free” button.

- #3. Go to Walmart.com and search for a product you’d like to track. For example, “headphones”. Then, copy the URL of the search, which should follow this format:

https://www.walmart.com/search?q=searcheditem - #4. Head back to the scraper in Apify Console and paste the URL to the input field

- #5. Click the “Save and Start” button to run the scraper.

The output would be something similar to this:

Summary

In this tutorial, we built a scraper for extracting prices from websites. With a few tweaks on selector elements, it’s applicable to most e-commerce sites. We also looked at how we can deploy it to Apify for better features, like automated scraping and convenient storage.

Frequently asked questions

Why scrape prices from websites?

Scraping prices from websites can be useful for 1. Market research (e.g. extracting information on pricing trends for reports. 2. Price tracking (e.g. tracking price drops for great deals on products. 3. Competitor analysis (e.g. collecting competitor pricing data to study pricing strategies).

Is price scraping legal?

Yes, scraping prices from websites is legal in many cases when the data is public. But be sure to always review a site’s robots.txt and legal policies before attempting to scrape. Learn more in Is web scraping legal?

How to scrape pricing data

To scrape pricing data, you can use one of Apify’s e-commerce scrapers. They allow you to scrape metadata from websites, such as product titles, images, brand names, and URLs, while also supporting query-based search options.

Is Python good for price scraping?

Yes, Python is good for price scraping because it offers powerful libraries like BeautifulSoup, Scrapy, and Selenium for handling different types of websites. Its simplicity and strong community support make it ideal for extracting and parsing data from websites.

What Python libraries are best for price scraping?

The best library depends on the way you use them; in general, beautifulsoup is best for simple HTML parsing (mostly for static sites), selenium is great for handling JavaScript-heavy sites, and crawlee is good for scalable and efficient crawling.