At Apify, speed matters - especially when you’re running 2 million containers a day. We call those containers Actors - our serverless cloud programs for web scraping, automation, and AI agents - and they form the backbone of our platform. At that scale, even small inefficiencies in startup times can snowball into serious delays and infrastructure costs.

So we went all in on performance, and the result was huge: a 500% boost in Actor container startup speed, with overall startup time dropping from about 7 seconds to 1.2 seconds.

In this post, we’ll break down the practical engineering strategies that got us there. From switching operating systems to smarter Docker image management, EBS snapshot tricks, and fine-tuned scheduling on AWS EC2, we’ll share real-world insights that you can apply to your own containerized workloads.

Whether you're running thousands of containers or just want to optimize startup performance, you'll find something useful here.

Key wins at a glance

| Change | Metric before | Metric after | Gain |
|---|---|---|---|
| OS swap (Amazon Linux → Ubuntu) | 0.7 s median startup | 0.3 s | 2.3× faster |
| Base-image pre-pull + EBS snapshot | 0 cached layers; 16k IOPS | Images pre-extracted; 6k IOPS | 2 min faster cold start; −62% IOPS |
| Targeted allocator | 95th percentile 6.9 s | 1.4 s | 5× faster |
| Larger disks (300 → 500 GB) | Frequent image cleanup | More builds stay cached | 99% of runs start in under 10 s |
| Overall startup | 7 s | 1.2 s | ≈ 500% faster |
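As a quick sanity check, the gains in the table can be reproduced from the before and after values. This is a minimal sketch that reads "N× faster" as the ratio of the old duration to the new one; the helper names are ours, not part of any Apify tooling.

```python
def speedup(before: float, after: float) -> float:
    """How many times shorter the `after` duration is than `before`."""
    return before / after


def reduction_pct(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100


print(f"OS swap (median):  {speedup(0.7, 0.3):.1f}x")             # 2.3x
print(f"Allocator (p95):   {speedup(6.9, 1.4):.1f}x")             # 4.9x
print(f"Overall startup:   {speedup(7.0, 1.2):.1f}x")             # 5.8x
print(f"IOPS reduction:    {reduction_pct(16_000, 6_000):.1f}%")  # 62.5%
```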

OS migration: Amazon Linux → Ubuntu (2.3× faster)

Would you believe that containers start more than twice as fast on Ubuntu as on Amazon Linux? After we switched our EC2 instances to Ubuntu, the median time to start a container improved by a factor of 2.3.

Historically, we’ve always stuck with AWS-recommended solutions. This meant that even when choosing the operating system for EC2, we followed AWS's guidance and built almost exclusively on Amazon Linux.

At the same time, our platform handles millions of containers daily, which results in significant demands on the system, orchestration, and monitoring.

It’s important to acknowledge that containers, while widely used today, still represent a somewhat cutting-edge technology. Although they have been around for quite some time, their widespread adoption has driven a surge in development. With the increasing number of contributors and users, the container ecosystem is evolving rapidly.

On the other hand, Amazon Linux maintains a fairly conservative approach to package versions and, most notably, the kernel. This conservative stance poses challenges in containerized environments. In fact, just by changing the kernel - or, more accurately, the operating system - we achieved a significant performance boost, even at the cost of diverging from Amazon Linux.

Two-layer image caching

Pulling full images over the network is the single biggest drag on cold starts, so we cache aggressively at two levels:

1. Pre-pulling base Docker images

Pre-pulling base Docker images significantly improved Actor startup times by reducing the need to download and extract layers during execution.

Every Actor can have numerous builds. Each build is unique and has a Docker image created during the build process. After creation, it is stored in our private image repository (AWS ECR). When a build gets executed, it runs on our custom runtime built on top of AWS EC2 instances.

Since every image is unique, optimizing startup time is challenging - each image must be pulled.

However, Docker provides caching logic for individual image layers. If your image is based on an image that’s already present on the machine, the layers from the base image will be reused without the need to pull all the layers.
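To make the layer reuse concrete, here is a simplified model of which layers still need to be downloaded. It treats an image as an ordered list of layer digests and the machine's cache as a set. The digests are hypothetical, and real Docker also tracks the chain of parent layers, which this sketch ignores.

```python
def layers_to_pull(image_layers: list[str], cached_layers: set[str]) -> list[str]:
    """Return the layers of an image that are not yet present on the machine."""
    return [layer for layer in image_layers if layer not in cached_layers]


# Hypothetical digests: a base image and an Actor build that extends it.
base_image = ["sha256:os", "sha256:node-runtime", "sha256:common-packages"]
actor_build = base_image + ["sha256:actor-deps", "sha256:actor-code"]

# Cold machine: all five layers must be pulled.
print(layers_to_pull(actor_build, cached_layers=set()))

# Base image already present: only the two build-specific layers remain.
print(layers_to_pull(actor_build, cached_layers=set(base_image)))
```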

Apify provides base Docker images. These base images include the necessary environment setup, such as Node.js or Python, and preinstalled packages. By starting from these images, developers can avoid setting up everything from scratch.

We cache the base Docker images on all our EC2 instances by pulling them during instance startup - a technique we call “base image pre-pulling.”
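A pre-pull step along these lines could run from an instance's startup script. This is only a sketch: the image names are placeholders, and the command runner is injectable so the logic can be exercised without a Docker daemon.

```python
import subprocess
from typing import Callable, Sequence

# Hypothetical image names; substitute the base images your builds start from.
BASE_IMAGES = [
    "registry.example.com/base-node:20",
    "registry.example.com/base-python:3.12",
]


def pull_command(image: str) -> list[str]:
    """Build the `docker pull` invocation for one image."""
    return ["docker", "pull", "--quiet", image]


def prepull(images: Sequence[str], run: Callable = subprocess.run) -> list[str]:
    """Pull each image in turn and return the ones that failed."""
    failed = []
    for image in images:
        result = run(pull_command(image), check=False)
        if result.returncode != 0:
            failed.append(image)
    return failed
```

Calling `prepull(BASE_IMAGES)` before the instance accepts work would warm the layer cache; images returned as failed could be retried or simply pulled on demand later.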

While this approach brought a noticeable performance improvement, it wasn’t perfect. That’s why we went even further and introduced EBS snapshots.

2. EBS snapshot with base images and most used builds

We replaced the empty Docker volume with an EBS snapshot containing every base image plus the 50 most-used Actor builds from the last 24 h.

The results:

- Makes cold-instance launch 2 minutes faster overall

- Drops disk demand from 16k IOPS to 6k IOPS

- Eliminates pull timeouts entirely

As mentioned previously, we pre-pulled all the base images during the EC2 instance startup. This had several downsides:

- The startup time of the EC2 instance was slow due to the image pulling, which reduced our ability to scale quickly in response to high user demand

- The EC2 disks required high IOPS to sustain the parallel image pulls

- Sometimes, image pulls timed out, resulting in suboptimal startup times for some runs

So, we decided to completely rethink our approach. Previously, the images were pulled to an empty EBS volume that was attached to the EC2 instance and served as the underlying storage for Docker. Instead of an empty EBS, we now restore a snapshot that already contains all previously pre-pulled images.

It does have its pitfalls. Typically, data is restored from snapshots using lazy loading from S3 ( https://aws.amazon.com/blogs/storage/addressing-i-o-latency-when-restoring-amazon-ebs-volumes-from-ebs-snapshots/ ), so blocks that have not been loaded yet are slow on first access. An EBS volume restored this way reaches its maximum performance only once it is fully hydrated. Hydration can be avoided with AWS's Fast Snapshot Restore feature, although it comes at a significant cost.

However, in our testing, standard restore performance was sufficient - and even outperformed pulling images directly from ECR.

The main benefit is that the images are already extracted and become fully functional once hydrated. Hydration can also be triggered by starting the Docker daemon during instance startup.

So far, we’ve measured that an instance typically has an overhead of about 60 seconds, during which the user data script creates the volume from the snapshot and attaches it to the instance, followed by the startup of the Docker daemon.
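The create-and-attach step described above might be sketched as follows. It assumes `ec2` is a boto3 EC2 client (`boto3.client("ec2")`). The function name, device name, and volume type are illustrative choices, not a description of our actual startup script.

```python
def restore_docker_volume(
    ec2,
    snapshot_id: str,
    instance_id: str,
    availability_zone: str,
    device: str = "/dev/sdf",   # assumed device name
    volume_type: str = "gp3",   # assumed volume type
) -> str:
    """Create a volume from a snapshot, attach it, and return the volume ID."""
    volume = ec2.create_volume(
        SnapshotId=snapshot_id,
        AvailabilityZone=availability_zone,
        VolumeType=volume_type,
    )
    volume_id = volume["VolumeId"]

    # The volume must reach the "available" state before it can be attached.
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])

    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)
    ec2.get_waiter("volume_in_use").wait(VolumeIds=[volume_id])
    return volume_id
```

After the volume is attached, the startup script would still need to mount it as Docker's data directory and start the daemon, which is where the rest of the roughly 60 seconds goes.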

This strategy allowed us to bypass AWS’s Warm Pool feature, which doesn’t work for us because of our use of mixed instances in the Auto Scaling Group (ASG).

Performance may vary, especially during AWS operations like creating and attaching EBS volumes, but on average, our instances start about 2 minutes faster. This enables us to scale more flexibly and has improved the container initialization metric in the higher percentiles.

Every day, we create a new snapshot that includes pre-pulled base images along with the 50 most-used Actor builds from the past 24 hours.

During EC2 instance startup, we restore this snapshot to an EBS volume and attach it, which solves multiple issues:

- Cold instance startup time has improved by 2 minutes

- Disk IOPS and throughput requirements dropped significantly - from 16,000 IOPS to 6,000

- No more pre-pull timeouts, since we no longer pre-pull anything
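Choosing which builds go into the daily snapshot comes down to counting runs per build over the last 24 hours. A minimal sketch, assuming run records are available as `(build_id, started_at)` pairs; that record shape is our assumption for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def most_used_builds(runs, now, window=timedelta(hours=24), limit=50):
    """Return up to `limit` build IDs with the most runs inside the window."""
    cutoff = now - window
    counts = Counter(
        build_id for build_id, started_at in runs if started_at >= cutoff
    )
    return [build_id for build_id, _ in counts.most_common(limit)]


now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
runs = [
    ("build-a", now - timedelta(hours=1)),
    ("build-a", now - timedelta(hours=2)),
    ("build-b", now - timedelta(hours=3)),
    ("build-c", now - timedelta(hours=30)),  # outside the window, ignored
]
print(most_used_builds(runs, now, limit=2))  # ['build-a', 'build-b']
```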

Following the success of EBS snapshots, we continued optimizing performance.

Smarter custom allocator

We cut Actor startup times in the 95th percentile from nearly 7 seconds to under 1.5 seconds.

We started Apify even before Kubernetes was a thing, which is why we’re still running our own custom runtime for Actors. This includes a custom EC2 instance autoscaler and allocator.

While maintaining our own infrastructure comes with some overhead, it also allows us to implement low-level, Apify-specific optimizations with incredible results. Specifically, we enhanced our custom allocator to target instances that already had the required Actor build.

Targeted allocation turned out to be a game changer - cutting Actor startup times in the 95th percentile from nearly 7 seconds to under 1.5 seconds. For us, that’s a revolution.

To put it in Kubernetes terms: Imagine you have thousands of different pods on your platform - with new ones constantly being added - and you can start any of them with a 95% chance they’ll be running within 1.5 seconds.

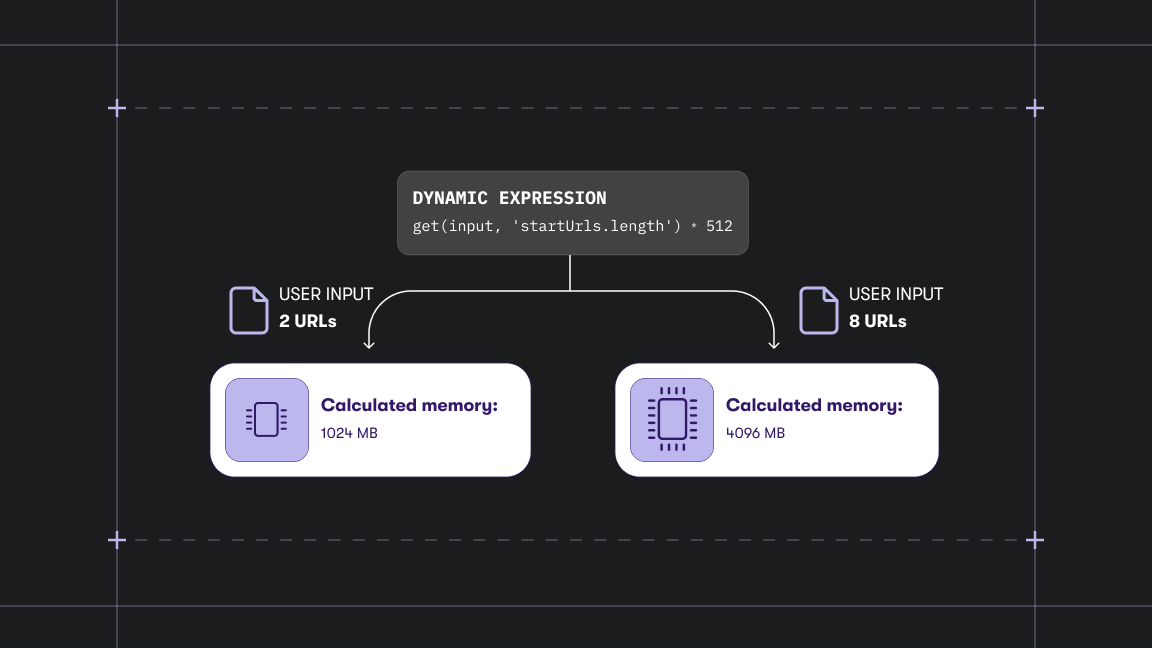

When an Actor run is executed, it must be allocated to an EC2 instance that will run the container. Our custom allocator can only choose from instances with enough available RAM to run the container. Among these, we select the one that will have the least RAM remaining after allocation.

This logic helps us keep some instances completely idle, which is useful when scaling down the EC2 fleet - we can safely terminate idle instances without interrupting any running Actors.
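The RAM-based rule is essentially best-fit bin packing. A minimal sketch, with a hypothetical `Instance` record standing in for whatever state the real allocator keeps:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Instance:
    instance_id: str
    free_ram_mb: int


def pick_instance(instances: list[Instance], required_ram_mb: int) -> Optional[Instance]:
    """Best fit: among instances with enough RAM, leave the least RAM unused."""
    candidates = [i for i in instances if i.free_ram_mb >= required_ram_mb]
    if not candidates:
        return None  # nothing fits; the autoscaler would need to add capacity
    return min(candidates, key=lambda i: i.free_ram_mb - required_ram_mb)


fleet = [
    Instance("idle", free_ram_mb=32768),
    Instance("busy", free_ram_mb=4096),
    Instance("nearly-full", free_ram_mb=1024),
]
print(pick_instance(fleet, 2048).instance_id)  # busy
```

Because the tightest fit wins, the idle instance stays idle until the busier ones fill up, which is what makes scale-down safe.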

Additionally, a few more subtle rules influence allocation:

- We limit the number of Actor builds per instance to prevent disk overload

- We prefer instances that haven’t started a new container in the past second. This prevents overloading an instance with multiple simultaneous container starts

While this strategy was already working well, we wanted to explore further improvements to Actor startup performance.

For each EC2 instance, we began tracking which Actor builds are present. The allocator now uses this data to prefer instances that already have the required build. If that’s the case, the Actor can start almost immediately, since the image is already present.
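Putting the rules together, a cache-aware allocator could rank candidates as sketched below. The priority order (cache hit first, then the one-second cooldown, then best fit on RAM) and the per-instance build limit are assumptions made for illustration. The post does not specify how the real allocator weighs these rules.

```python
from dataclasses import dataclass, field
from typing import Optional

START_COOLDOWN_S = 1.0  # "haven't started a new container in the past second"


@dataclass
class Instance:
    instance_id: str
    free_ram_mb: int
    cached_builds: set = field(default_factory=set)
    last_start_ts: float = 0.0  # time of the most recent container start


def pick_instance(
    instances: list,
    build_id: str,
    required_ram_mb: int,
    now: float,
    max_builds: int = 100,  # hypothetical per-instance build limit
) -> Optional[Instance]:
    def eligible(i: Instance) -> bool:
        if i.free_ram_mb < required_ram_mb:
            return False
        # A cache miss adds one more build to the instance's disk.
        return build_id in i.cached_builds or len(i.cached_builds) < max_builds

    def rank(i: Instance) -> tuple:
        cache_miss = build_id not in i.cached_builds
        recently_started = (now - i.last_start_ts) < START_COOLDOWN_S
        ram_left = i.free_ram_mb - required_ram_mb
        # Tuples compare left to right, and False sorts before True.
        return (cache_miss, recently_started, ram_left)

    candidates = [i for i in instances if eligible(i)]
    return min(candidates, key=rank) if candidates else None
```

With this ordering, an instance that already holds the build wins even if another instance would be a tighter RAM fit; when no instance has the build, the choice falls back to the earlier rules.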

But smart allocation is only as good as the cache it relies on. So the next logical step was to give our instances more disk space to retain more builds.

Bigger drives to cache more Actor builds

Increasing EC2 disk size allowed us to cache more builds, resulting in 99% of Actor runs starting in under 10 seconds.

EC2 instances cache pulled builds locally, but with limited disk space, we had to frequently clean up unused images. This meant that even semi-frequent builds might not stay cached, leading to more ECR pulls and slower startup.

We solved this simply: bigger drives. Specifically, we increased the size from 300 GB to 500 GB. We could go even higher, but that would increase costs, so we chose 500 GB as the sweet spot - balancing our need to cache many builds with the cost of larger drives.

With more storage, we can retain more builds between cleanups, increasing the likelihood that a requested build is already available. It’s a small change with a big impact, especially as usage patterns evolve over time.
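The effect of disk size on cache hits can be illustrated with a toy simulation. It assumes least-recently-used eviction and a uniform 10 GB build size, neither of which is stated above. The request pattern is deliberately adversarial to show how a cache slightly smaller than the working set can miss constantly.

```python
from collections import OrderedDict


class BuildCache:
    """Toy model of an instance's image cache with LRU eviction (assumed policy)."""

    def __init__(self, capacity_gb: float):
        self.capacity_gb = capacity_gb
        self._builds = OrderedDict()  # build_id -> size in GB, oldest first

    def __contains__(self, build_id: str) -> bool:
        return build_id in self._builds

    def use(self, build_id: str, size_gb: float) -> None:
        """Record a run of the build, evicting old builds if the disk is full."""
        if build_id in self._builds:
            self._builds.move_to_end(build_id)
            return
        self._builds[build_id] = size_gb
        while sum(self._builds.values()) > self.capacity_gb and len(self._builds) > 1:
            self._builds.popitem(last=False)  # drop the least recently used build


def hit_rate(capacity_gb: float, requests: list, size_gb: float = 10.0) -> float:
    cache = BuildCache(capacity_gb)
    hits = 0
    for build_id in requests:
        hits += build_id in cache
        cache.use(build_id, size_gb)
    return hits / len(requests)


# 40 builds of 10 GB each (a 400 GB working set), requested in a loop three times.
requests = [f"build-{n}" for n in range(40)] * 3
print(hit_rate(300, requests))  # 0.0: every build is evicted before it is reused
print(hit_rate(500, requests))  # about 0.67: only the first pass misses
```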

In summary

No single silver bullet delivered a 500% speedup; stacking five pragmatic tweaks did. If you run thousands or millions of containers, copy these steps:

- Pick the fastest kernel

- Cache layers twice

- Schedule for cache hits

- Buy enough disk to keep what you pull

- Continuously measure. It will point you to the next bottleneck - and the next easy win.
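For the measuring part, tracking the median alongside the 95th and 99th percentiles is what surfaces slow cold starts that an average would hide. A minimal nearest-rank sketch; the sample values are made up for illustration.

```python
import math


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of the samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical container startup times in seconds.
startup_times = [
    0.3, 0.3, 0.4, 0.4, 0.5, 0.5, 0.6, 0.6, 0.7, 0.7,
    0.8, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3, 1.4, 2.5, 9.0,
]

print(percentile(startup_times, 50))  # 0.7
print(percentile(startup_times, 95))  # 2.5
print(percentile(startup_times, 99))  # 9.0
```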