Beware – this blog post is a story about me and my precious bikes, neither documentation nor a technical deep dive.

Spoiler alert – it's a story with a happy ending: these two machines ⬇️

Scraping bikes to understand and compare

You can visit the nearest bike store, trust a random salesperson, and ride your new bike in a few minutes – but where's the fun in that?

Let's be geeks and over-engineer it a little (or a lot).

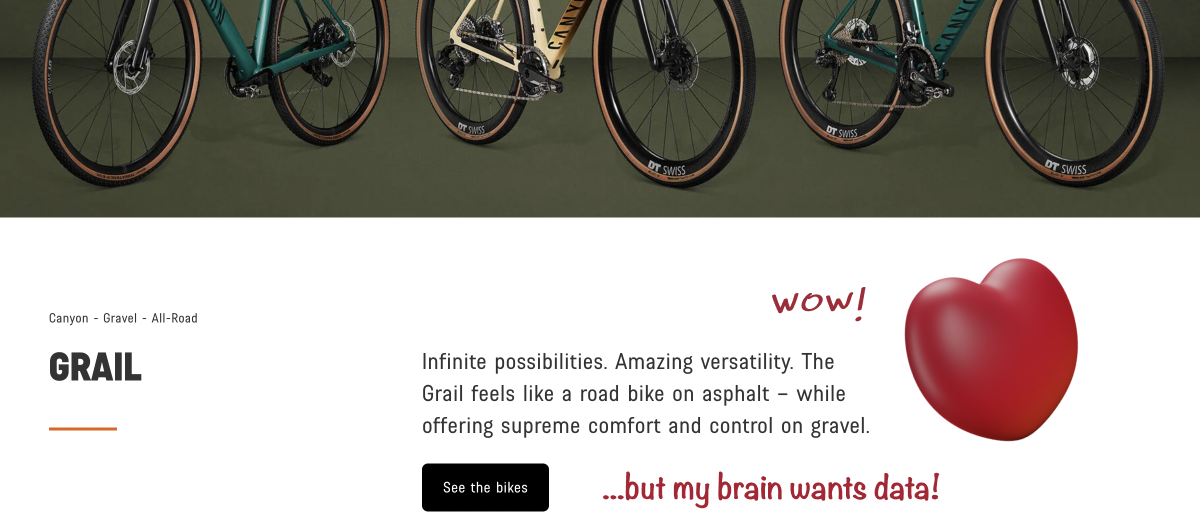

For the sake of this blog post, and because I'm a Canyon bikes fanboy, I'll be focusing on their bikes. But the same applies to any bike manufacturer with a website – so hopefully, all of them.

Even with this limited scope, Canyon currently offers 233 different models. I could behave like a normal person and just browse through their website. But the website is made for your heart – to lure you with beautiful images, monumental claims, and marketing buzzwords.

I like all that, but I also want data, I want context, I want comparisons. I want to understand. Basically, I want a spreadsheet.

No problem with Apify.

Using our open-source SDK, it's just a matter of writing the selectors that extract the data from the website, plus a little data processing. Apify will take care of the rest – running, queueing, concurrency, anti-bot protection, retries, storing the results, scheduling, etc. This 20-minute video by Ondra Urban is my favorite explanation of what Apify does for devs.

The code below is just a redacted example – you can find the full code or even just run the web scraping tool on Apify Store. Don't get discouraged by the word "Store" – it's totally free!

    const pid = request.url.match(/\/(\d+)\.html$/)[1] // e.g. 2448
    const allComponentsUrl = `${BASE_URL}/on/demandware.store/...?pid=${pid}`
    const { body } = await Apify.utils.requestAsBrowser({ url: allComponentsUrl })
    const $$ = cheerio.load(body) // `$` queries the product page, `$$` the all-components page

    item.img = $(`.productHeroCarousel__picture img`).attr(`src`)
    item.name = $(`h1`).text().trim() // e.g. `Speedmax CF 7 Disc`
    item.price = common.parsePrice($(`.productDescription__priceSale`).text()).amount

    // section with Frame, Fork, Rear derailleur, Chain, ...
    const allSections = $$(`.allComponents__sectionSpecListItem`).toArray()
    for (const section of allSections) {
        const title = $$(section).find(`.allComponents__sectionSpecListItemTitle`).text().trim()
        const definitionItems = $$(section).find(`ul li`).toArray()
        const [first, ...rest] = definitionItems
        let main = $$(first).text().trim() // e.g. Shimano Ultegra R8000
        // ... (the rest of the loop is omitted in this redacted example)
    }
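The `match(...)[1]` lookup above throws if a URL doesn't end in a numeric `.html` segment. Here is a minimal sketch of a more defensive version, assuming you'd rather skip such pages than crash. `extractPid` is a hypothetical helper name, not part of the published scraper, and the example URLs are placeholders:

```javascript
// Hypothetical helper: returns the numeric product id from a product URL,
// or null when the URL does not end in "/<digits>.html".
function extractPid(url) {
    const match = url.match(/\/(\d+)\.html$/)
    return match ? match[1] : null
}

console.log(extractPid(`https://example.com/bikes/some-bike/2448.html`)) // 2448 (as a string)
console.log(extractPid(`https://example.com/bikes/`)) // null
```

A `null` result can then be used to skip the request instead of failing the whole run.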

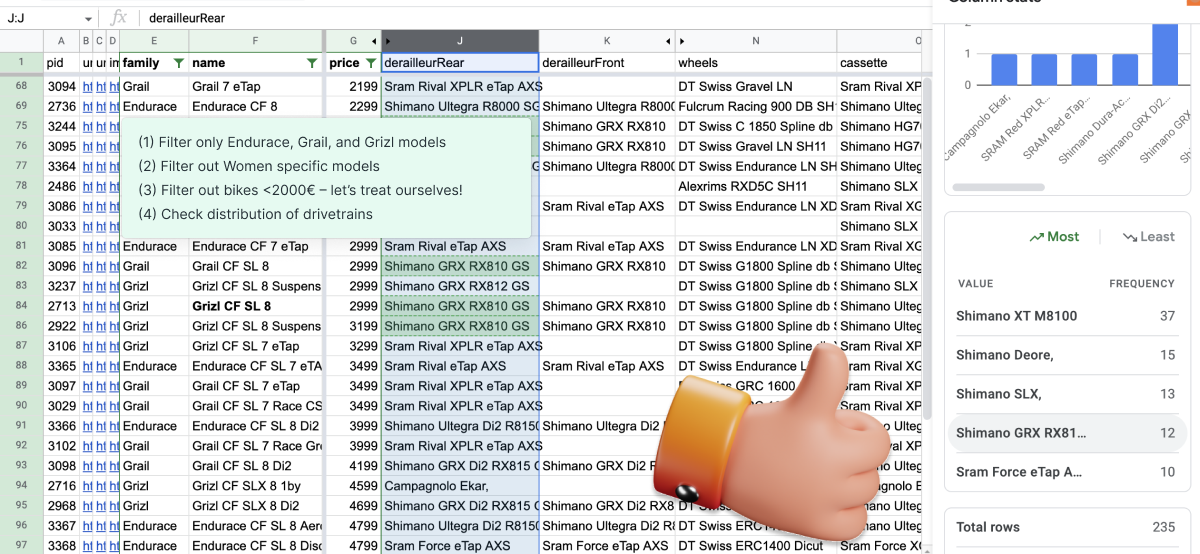

After running the scraper and exporting data to Google Sheets, we have access to all the good stuff:

- filtering just the relevant bike "families": for Canyon, that will be Endurace, Grizl, Grail, etc.

- filtering out women-specific geometries

- setting the price range to reasonable values

- applying conditional formatting – e.g., different colors for SRAM and Shimano

- using column stats to analyze trends
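The same filters can also be applied in code before the export, if you'd rather not click through the spreadsheet UI. This is a rough sketch, assuming each scraped item has the `name` and `price` fields from the scraper snippet. The sample rows, their prices, the price bounds, and the idea that women-specific models carry `WMN` in their name are all assumptions for illustration:

```javascript
// Sample rows are invented; only the `name` and `price` fields mirror the scraper's output.
const bikes = [
    { name: `Grizl CF SL 8`, price: 2699 },
    { name: `Grail WMN CF SL 7`, price: 2499 },
    { name: `Speedmax CF 7 Disc`, price: 3299 },
    { name: `Endurace CF SLX 9`, price: 6499 },
]

const FAMILIES = [`Endurace`, `Grizl`, `Grail`]

// Keeps bikes from the chosen families, drops names containing WMN,
// applies the price range, and sorts from cheapest to most expensive.
function shortlist(items, { minPrice, maxPrice }) {
    return items
        .filter((bike) => FAMILIES.some((family) => bike.name.startsWith(family)))
        .filter((bike) => !/\bWMN\b/.test(bike.name))
        .filter((bike) => bike.price >= minPrice && bike.price <= maxPrice)
        .sort((a, b) => a.price - b.price)
}

console.log(shortlist(bikes, { minPrice: 1500, maxPrice: 4000 }))
```

With these made-up rows, only the Grizl survives all three filters.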

Now I'm confident that "Canyon Grizl CF SL 8" is right for me! Let's get it in the next section!

Buying the bike – not so simple

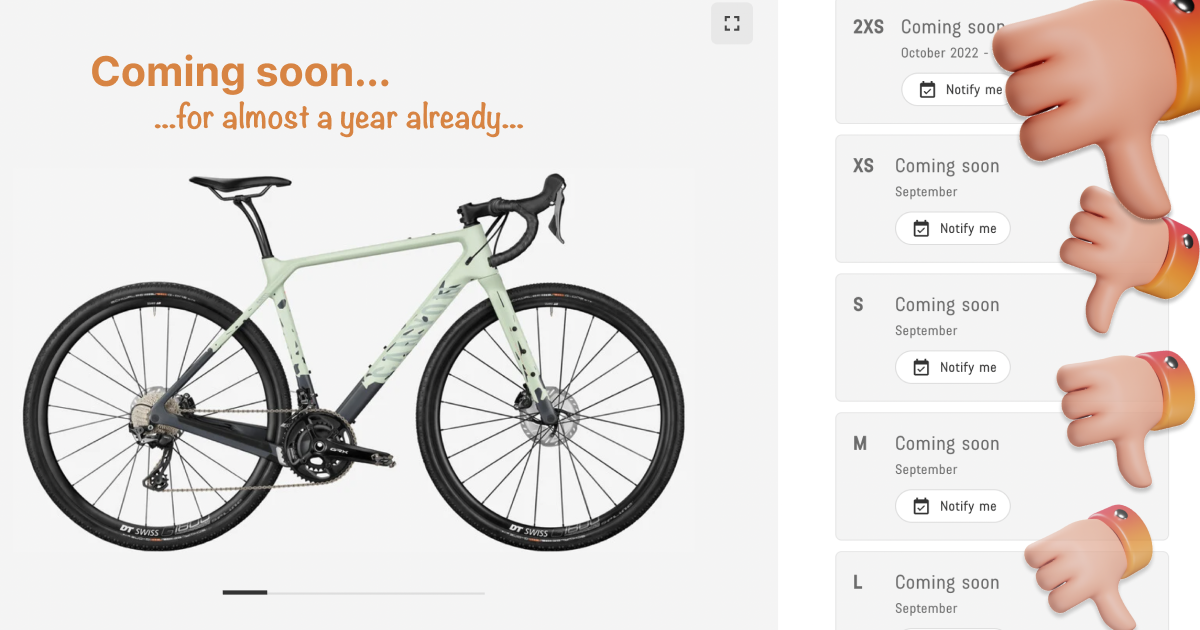

Now we have The Chosen One – we dream about it, tell all our friends about it, and have it as a wallpaper. And we are ready for business, so let's buy it! Well... not so fast. Sadly, buying bikes these days is not so simple. Most of the models are out of stock most of the time.

When somebody else cancels their existing order, the bike will become available again... until somebody else snatches it. Let's make sure we're the ones doing the snatching.

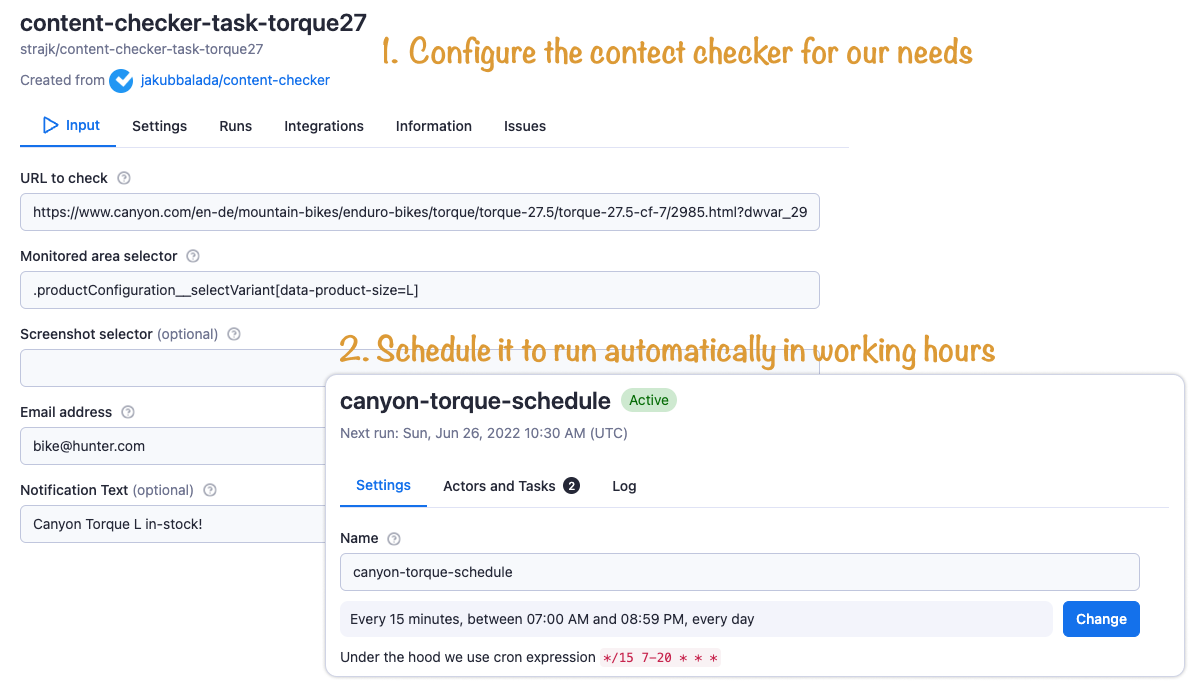

Let's use our Content Checker (fun fact: written by Apify co-founder Jakub Balada), and schedule it to run "Every 15 minutes, between 07:00 AM and 08:59 PM, every day".
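In cron terms, that schedule description most likely corresponds to the expression `*/15 7-20 * * *`. That mapping is an assumption based on the wording, not something stated in this post. A small sketch of the rule itself, with `matchesSchedule` as a hypothetical helper:

```javascript
// Assumed cron equivalent of the schedule: minute */15, hour 7-20, every day.
const CRON_EXPRESSION = `*/15 7-20 * * *`

// Returns true when the given Date (in local time) falls on a scheduled tick.
function matchesSchedule(date) {
    const onQuarterHour = date.getMinutes() % 15 === 0
    const inActiveHours = date.getHours() >= 7 && date.getHours() <= 20
    return onQuarterHour && inActiveHours
}

console.log(matchesSchedule(new Date(2022, 4, 31, 7, 0)))   // true
console.log(matchesSchedule(new Date(2022, 4, 31, 20, 45))) // true
console.log(matchesSchedule(new Date(2022, 4, 31, 21, 0)))  // false
console.log(matchesSchedule(new Date(2022, 4, 31, 12, 10))) // false
```

The last run of the day lands at 20:45, which is why the description ends at 08:59 PM rather than 09:00 PM.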

And voilà! After a few weeks, the email arrives, and after a few more days, the bike arrives 🚀

A few months and hundreds of kilometers later, the bike is still out of stock on the website.

Now that we have a gravel bike for trips, training, and group rides, let's focus on a second bike purely for fun. For that, let's try to find some good deals on a second-hand bike marketplace.

Monitoring a second-hand bike marketplace

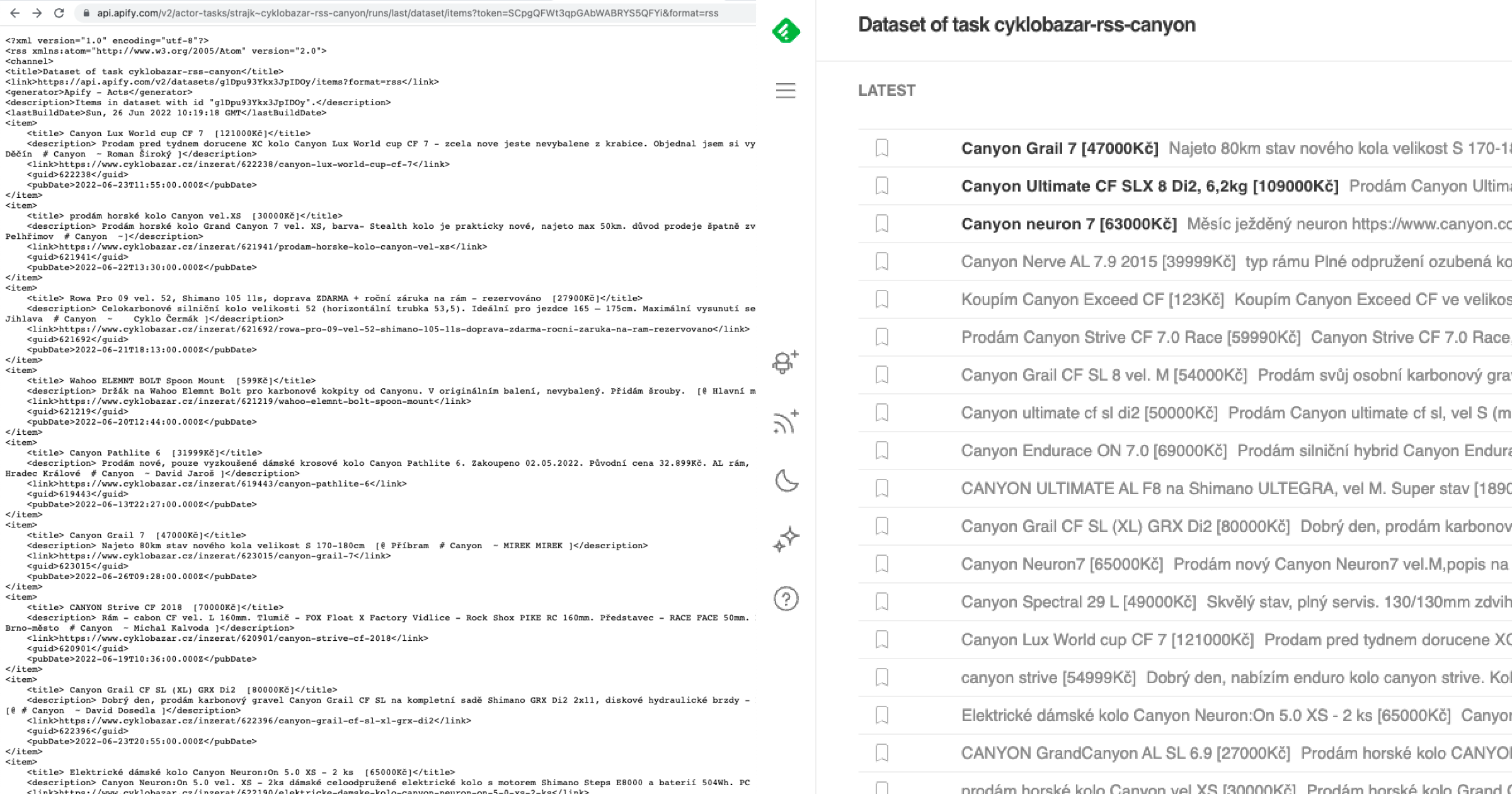

In the Czech Republic, we are lucky to have a well-known website for selling and buying second-hand bikes – Cyklobazar.

It has filtering options – by type, brand, size, components, and just about everything else. But it lacks one critical feature: notifications about new offers.

Let's build it ourselves. Again, it's so simple with Apify.

In this case, sending an email for every new offer seems like overkill to me, especially when there's a perfect standardized solution for that problem, battle-tested for decades 👉 RSS feeds.

Let's write an actor to feed new offers matching our criteria to our favorite RSS reader. (I'm using Feedly RSS reader in the screenshot below, but my favorite is NewsBlur!)

Apify will again take care of all the boring parts – we just need to specify the extraction selectors, save the data in RSS-compatible format, and schedule the scraper to run every half an hour.

The following code is a redacted example snippet – you can find the complete code and run the scraper on Apify Store.

    async handlePageFunction ({ request, $ }) {
        /* If on first page, handle pagination */
        if (!request.url.includes(`vp-page=`)) {
            const totalPages = parseInt($(`[class=paginator__item]`).last().find(`.cb-btn`).text(), 10)
            for (let i = 2; i <= totalPages; i++) {
                const url = new URL(request.url)
                url.searchParams.set(`vp-page`, i.toString())
                await requestQueue.addRequest({ url: url.toString() })
            }
        }

        $(`.col__main__content .cb-offer`).each((i, el) => {
            const link = $(el).attr(`href`)
            const id = link // e.g. /inzerat/621592/prodej-horskeho-kola-trek-procaliber-9-6
                .split(`/`)[2] // e.g. 621592
            const title = $(el).find(`h4`).text()
            const dateRaw = $(el).find(`.cb-time-ago`)
                .attr(`title`) // Vytvořeno 31. 5. 2022, 14:36 ("Vytvořeno" is Czech for "Created")
                .replace(`Vytvořeno `, ``) // 31. 5. 2022, 14:36
            const date = dateFromString(dateRaw)
            const desc = $(el).find(`.cb-offer__desc`).text()
            const price = $(el).find(`.cb-offer__price`)
                .text()
                .replace(/\s/g, ``) // remove all whitespace
            const location = $(el).find(`.cb-offer__tag-location`).text()
            const brand = $(el).find(`.cb-offer__tag-brand`).text()
            const user = $(el).find(`.cb-offer__tag-user`).text()

            Apify.pushData({
                title: `${title} [${price}]`,
                description: `${desc} [@${location} #${brand} ~${user}]`,
                link: `${BASE_URL}${link}`,
                guid: id,
                pubDate: date.toISOString(),
            })
        })
    }
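The `dateFromString` helper is not shown in the redacted snippet. Here is a minimal sketch of what such a helper could look like, assuming the `D. M. YYYY, HH:MM` shape from the comment above and interpreting the value in the local time zone of the machine running the scraper. The real implementation may differ:

```javascript
// Sketch only: parses strings like "31. 5. 2022, 14:36" into a Date (local time).
function dateFromString(raw) {
    const match = raw.trim().match(/^(\d{1,2})\.\s*(\d{1,2})\.\s*(\d{4}),\s*(\d{1,2}):(\d{2})$/)
    if (!match) throw new Error(`Unexpected date format: ${raw}`)
    const [day, month, year, hours, minutes] = match.slice(1).map(Number)
    return new Date(year, month - 1, day, hours, minutes) // months are zero-based
}

const parsed = dateFromString(`31. 5. 2022, 14:36`)
console.log(parsed.getFullYear(), parsed.getMonth() + 1, parsed.getDate()) // 2022 5 31
```

Throwing on an unexpected format makes a layout change on the website show up as a failed run rather than as silently wrong dates in the feed.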

Read more about turning websites into RSS.
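For a sense of what "RSS-compatible" means here, this sketch turns one record of the shape pushed above into an RSS 2.0 `<item>` element. It is an illustration only: `escapeXml` and `toRssItem` are hypothetical helpers, the sample title, description, price, and domain are made up, and the published scraper may build its feed differently.

```javascript
// Escapes the five characters that are special in XML text and attributes.
function escapeXml(text) {
    return String(text)
        .replace(/&/g, `&amp;`)
        .replace(/</g, `&lt;`)
        .replace(/>/g, `&gt;`)
        .replace(/"/g, `&quot;`)
        .replace(/'/g, `&apos;`)
}

// RSS 2.0 expects RFC 822-style dates, which Date#toUTCString produces.
function toRssItem({ title, description, link, guid, pubDate }) {
    return [
        `<item>`,
        `  <title>${escapeXml(title)}</title>`,
        `  <description>${escapeXml(description)}</description>`,
        `  <link>${escapeXml(link)}</link>`,
        `  <guid isPermaLink="false">${escapeXml(guid)}</guid>`,
        `  <pubDate>${new Date(pubDate).toUTCString()}</pubDate>`,
        `</item>`,
    ].join(`\n`)
}

console.log(toRssItem({
    title: `Trek Procaliber 9.6 [1000Kč]`, // invented sample values
    description: `Hardtail, size L & ready to ride [@Praha #Trek ~someone]`,
    link: `https://example.com/inzerat/621592/prodej-horskeho-kola-trek-procaliber-9-6`,
    guid: `621592`,
    pubDate: `2022-05-31T12:36:00.000Z`,
}))
```

The `guid` is what lets an RSS reader recognize an offer it has already shown, so a stable id such as the one taken from the offer URL is a good fit.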

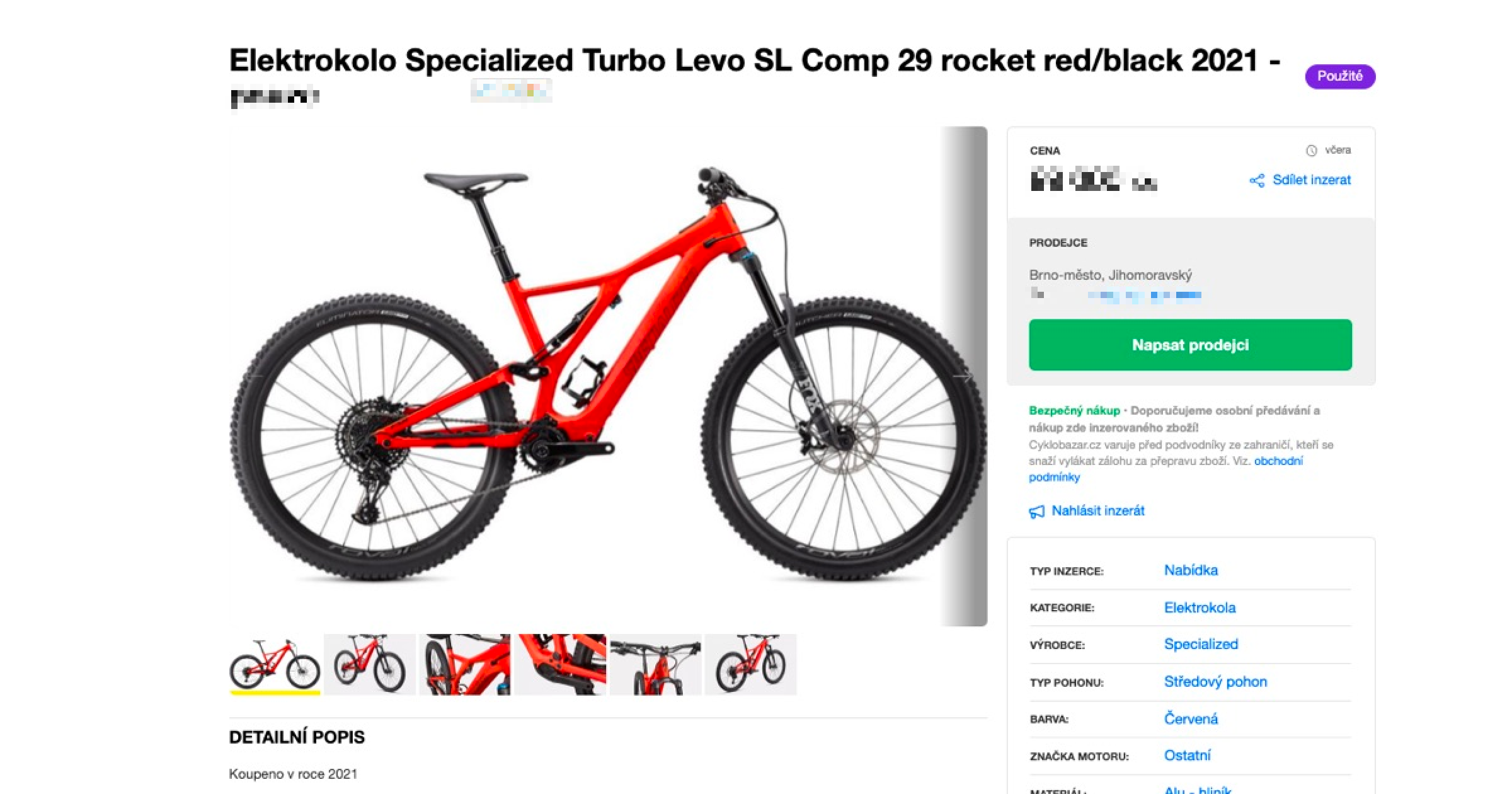

After a few weeks, the perfect deal appeared. I called the person an hour later. In three hours, I had the bike.

Scraping e-shops for deals

Now we have the bikes and no money left 💸 But we need some gear – pedals, shoes, helmets, bibs, jerseys, multitools, spare tires, and so much more.

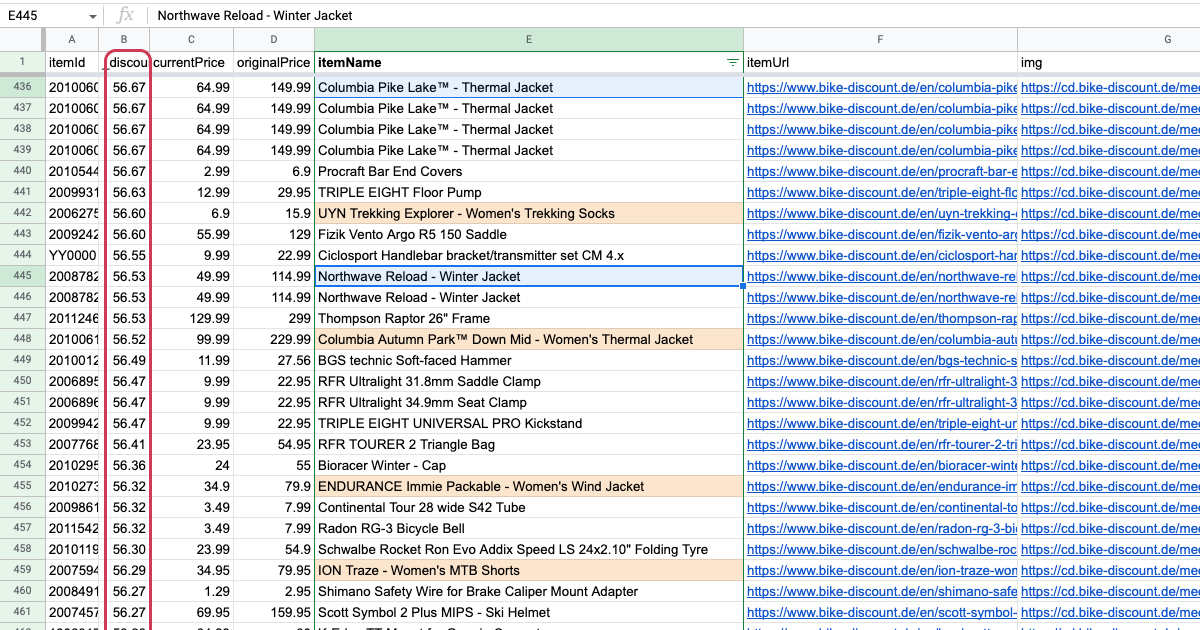

Luckily, cyclists like to show off in the latest gear. That often means that the stuff from previous years is discounted, a lot! Let's scrape a few bike-specific e-shops, sort the products by discount, and use Google Sheets filtering to narrow down the results ⬇️

I wrote a bunch of scrapers for my favorite cycling e-shops. Run them and check the source code on Apify Store 👇

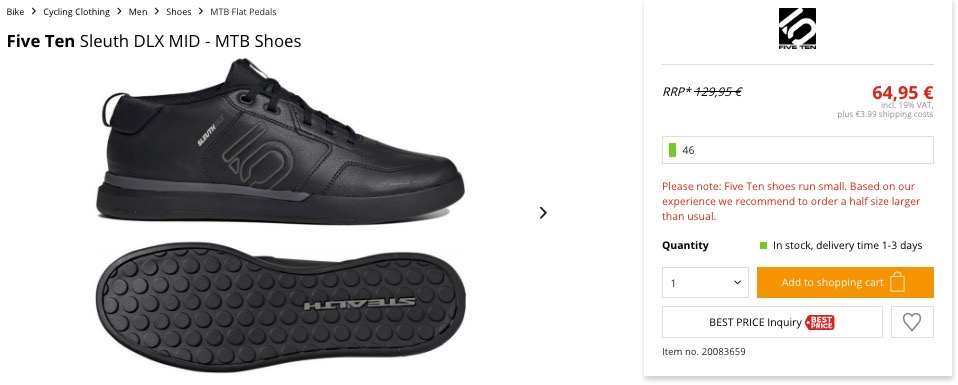

Five Ten shoes for half the price! Oh, yes!

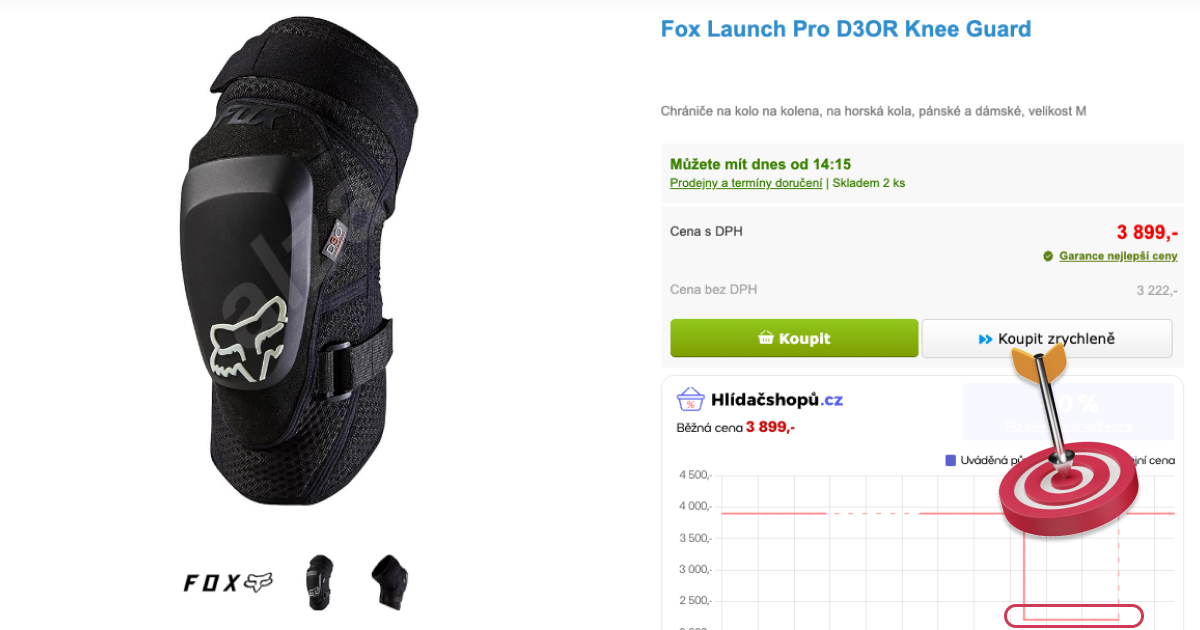

Our price-checking browser extension confirmed that the Fox knee pads really were going for almost half the price. Guess when I bought them 😎
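The sort-by-discount step from this section could also be done in code before the export. This is a short sketch, assuming each scraped product carries an original and a current price. The field names `originalPrice` and `currentPrice` and the sample rows are hypothetical:

```javascript
// Invented sample rows; real scrapers may name these fields differently.
const products = [
    { name: `Helmet`, originalPrice: 100, currentPrice: 80 },
    { name: `Shoes`, originalPrice: 120, currentPrice: 60 },
    { name: `Multitool`, originalPrice: 30, currentPrice: 30 },
]

// Discount as a whole-number percentage of the original price.
function discountPercent({ originalPrice, currentPrice }) {
    if (!(originalPrice > 0)) return 0 // guard against missing or zero prices
    return Math.round((1 - currentPrice / originalPrice) * 100)
}

// Adds a `discount` field to each product and sorts the biggest discount first.
function sortByDiscount(items) {
    return items
        .map((item) => ({ ...item, discount: discountPercent(item) }))
        .sort((a, b) => b.discount - a.discount)
}

console.log(sortByDiscount(products).map((p) => `${p.name}: ${p.discount}%`))
// [ 'Shoes: 50%', 'Helmet: 20%', 'Multitool: 0%' ]
```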

Epilogue

We had fun, satisfied our inner geeks, played with the internet, bought a few bikes, and saved some money. I hope you enjoyed it. If you did, think about joining Apify and working and riding with us! You can join our gravel gang or our shred gang. Or both!

Note – sadly, I was unable to convince Apify to book the bikes as a business expense for E2E platform testing. Better luck next time!