Today, Apify is happy to announce Crawlee, the successor to Apify SDK 🥳

The new library has full TypeScript support for a better developer experience, even more powerful anti-blocking features, and an interface similar to Apify SDK, making the transition for SDK developers a breeze.

If you're already familiar with Apify SDK, go ahead and read the upgrading guide to learn more about the changes. Now, without further ado, let's talk about Crawlee!

Motivation

Four years ago, Apify released its open source Node.js library for web scraping and automation, Apify SDK.

During this time, Apify SDK played a crucial role in powering hundreds of actors on Apify Store and helping developers and businesses build performant and scalable scrapers (read about how a retail data agency saved 90% costs on web scraping).

While Apify SDK successfully filled the gap for a modern web scraping library for the Node.js ecosystem, there was a problem. Despite being open source, the library's name caused users to think its features were restricted to the Apify platform, which was never the case.

From the beginning, our goal was to build the most powerful web scraping library while making this technology widely accessible to the developer community.

With this in mind, we decided to split Apify SDK into two libraries, Crawlee and Apify SDK. Crawlee will retain all the web crawling and scraping-related tools and will always strive to be the best web scraping library for its community. At the same time, Apify SDK will continue to exist but keep only the Apify-specific features.

What's new in Crawlee?

More than just a rebranding, Crawlee comes with significant upgrades and new features:

🛠 Features

- TypeScript support

- Configurable routing

👾 HTTP Crawling

- HTTP2 support that mimics browser TLS, even through proxy

- Generation of browser-like headers based on real-world data

- Replication of browser TLS fingerprints

💻 Real browser crawling

- Generation of human-like fingerprints based on real-world data

Most of those features were already available as configuration options in Apify SDK, but after months of testing, we’ve decided to turn them on by default in Crawlee.

For a full breakdown of all Crawlee features, head over to crawlee.dev/docs/introduction

Why Crawlee?

Open-source ❤️ Crawlee runs anywhere

Crawlee is an open-source library that you can integrate with your own applications and deploy to any infrastructure, including the Apify platform, which is optimized for web scraping and automation use cases.

Batteries included 🔋 Crawlee has everything you need for web scraping and automation

Crawlee builds on top of popular web scraping and browser automation libraries, such as Cheerio, Puppeteer, and Playwright, offering three advanced crawler classes: CheerioCrawler, PuppeteerCrawler, and PlaywrightCrawler.

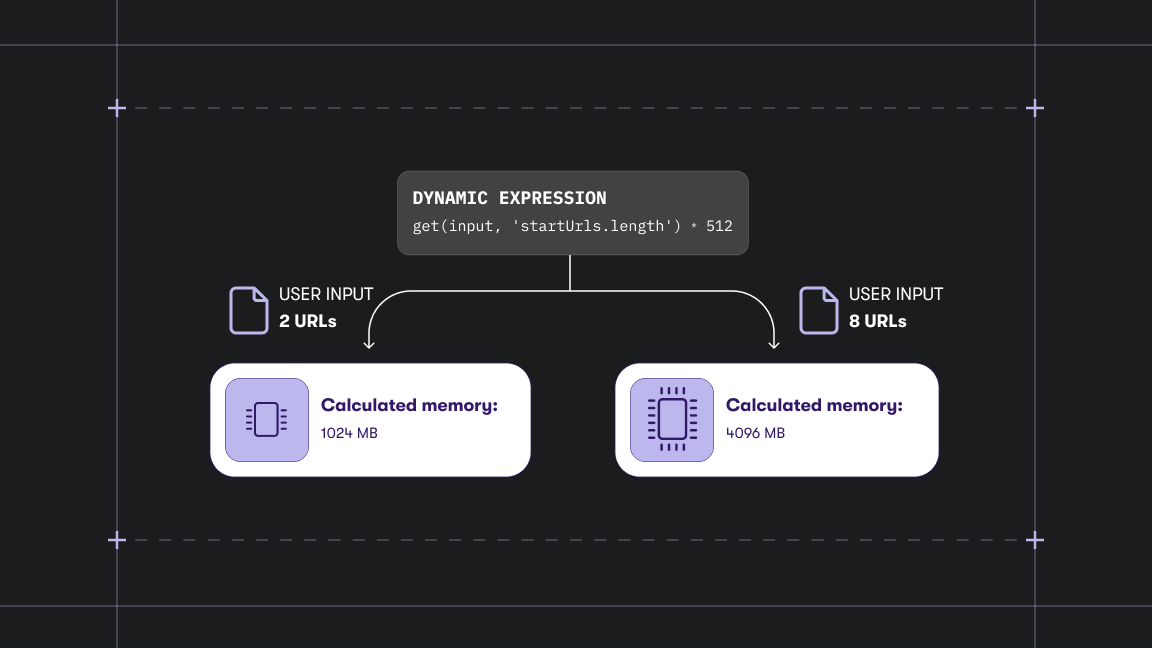

The crawlers automatically scale with available system resources, rotate proxies based on success rates, manage a queue of URLs, persistently store data and more. You can crawl web pages in parallel, run multiple headless browsers, and extract data from any website at scale.

Reliable ⚖️ Crawlee has built-in modern anti-blocking features

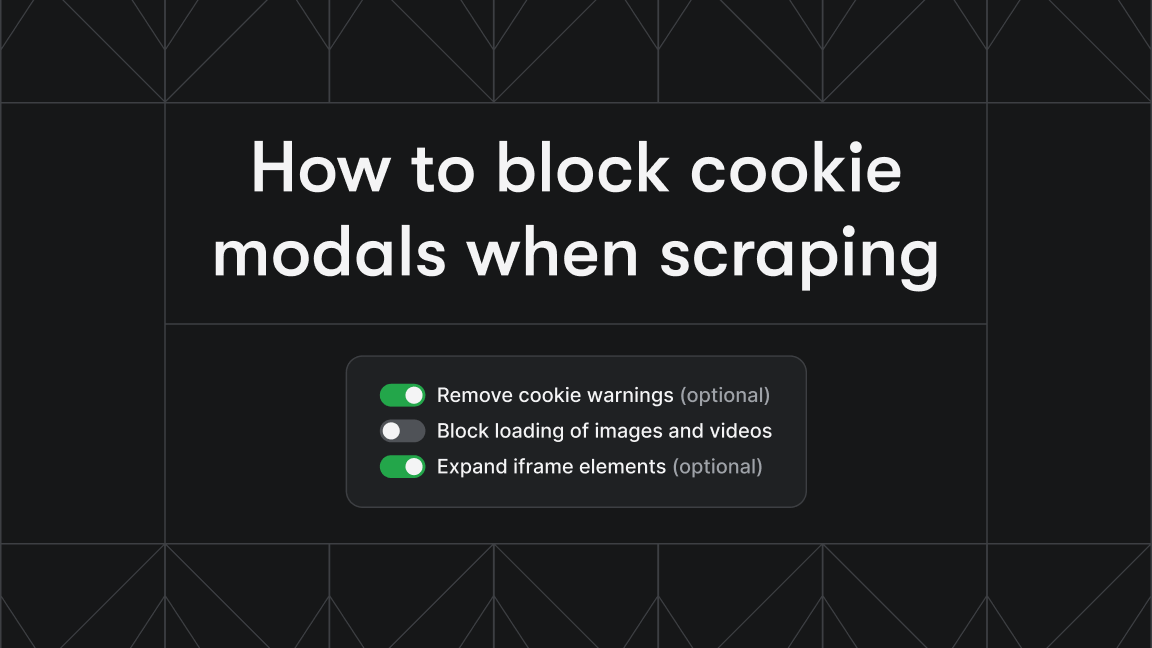

Crawlee’s auto-generated headers, browser TLS fingerprints, and proxy rotation tools enable you to fly under the radar of anti-scraping protections and avoid blocking.

Modern websites often employ advanced browser fingerprinting techniques to track “bot-like” behavior and accurately identify visitors even across different IP addresses.

Crawlee generates human-like fingerprints based on real browsers to protect your crawlers from being blocked, with zero configuration necessary. And if the default config does not cut it, you can always tweak its parameters.

Let’s start Crawleeing!

If you have Node.js installed, you can try Crawlee in a minute. All you need to do is run the command below and choose one of the available templates for your crawler.

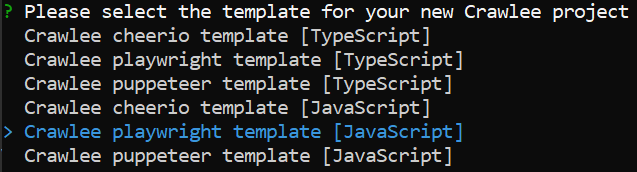

npx crawlee create my-crawlerIf you don't already have Crawlee installed, wait for a few seconds while the installation completes, and you will see the following options show up on your terminal:

For this example, we will choose the Playwright JavaScript template. Wait until all the required dependencies are installed. It might take a minute because Crawlee will install Playwright and its browsers. Then open your newly created crawler folder and run it.

cd my-crawlernpm startFor more information, check the Crawlee documentation and follow the First Crawler section for a detailed, step-by-step guide to creating your first crawler.